Modified from the original post: https://blog.csdn.net/z564359805/article/details/80798955

1. Create the Scrapy project

scrapy startproject Stats

2. Enter the project directory and create the Spider with the genspider command

scrapy genspider stats stats.gov.cn

3. Define the data to scrape (edit items.py)

# -*- coding: utf-8 -*-

import scrapy


class StatsItem(scrapy.Item):
    # Timestamp of the crawl (UTC)
    crawled = scrapy.Field()
    # Spider name, to tell who crawled which data when several people crawl
    spider = scrapy.Field()
    # Level 1 name: provinces and municipalities
    first_titles = scrapy.Field()
    # Level 1 URL
    first_urls = scrapy.Field()
    # Level 1 storage directory
    first_filename = scrapy.Field()
    # Level 2 name: cities and counties
    second_titles = scrapy.Field()
    # Level 2 URL
    second_urls = scrapy.Field()
    # Level 2 code ID
    second_id = scrapy.Field()
    # Level 2 storage directory
    second_filename = scrapy.Field()
    # Level 3 name: districts
    third_titles = scrapy.Field()
    # Level 3 URL
    third_urls = scrapy.Field()
    # Level 3 code ID
    third_id = scrapy.Field()
    # Level 3 storage directory
    third_filename = scrapy.Field()
    # Level 4 name: subdistrict offices
    fourth_titles = scrapy.Field()
    # Level 4 URL
    fourth_urls = scrapy.Field()
    # Level 4 code ID
    fourth_id = scrapy.Field()
    # Level 4 storage directory
    fourth_filename = scrapy.Field()
    # Level 5 name: villages and residents' committees
    fifth_titles = scrapy.Field()
    # Level 5 code ID
    fifth_id = scrapy.Field()
    # Level 5 storage directory
    fifth_filename = scrapy.Field()

4. Write the Spider that extracts the item data (stats.py in the spiders folder)

# -*- coding: utf-8 -*-
# Crawl the urban-rural division codes published by the statistics bureau

import os

import scrapy
from scrapy_redis.spiders import RedisSpider

from Stats.items import StatsItem


# class StatsSpider(scrapy.Spider):
class StatsSpider(RedisSpider):
    name = 'stats'
    # Restrict the crawl to this domain
    allowed_domains = ['stats.gov.cn']
    # start_urls = ['http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/index.html']
    # Redis key that starts the spiders on all slave (worker) nodes
    redis_key = 'StatsSpider:start_urls'
    # URL prefix shared by the province and municipality pages
    url = 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/'

    # Setting the allowed domains dynamically, as below, produced
    # "Filtered offsite request to" errors, so it is left commented out
    # def __init__(self, *args, **kwargs):
    #     domain = kwargs.pop('domain', '')
    #     self.allowed_domains = filter(None, domain.split(','))
    #     super(StatsSpider, self).__init__(*args, **kwargs)

    def parse(self, response):
        print("Processing level 1 data...")
        items = []
        # Level 1 names: provinces and municipalities
        # (adjust this rule to crawl only some provinces)
        first_titles = response.xpath('//tr//td/a/text()').extract()
        # Level 1 relative URLs, e.g. 13.html
        first_urls_list = response.xpath('//tbody//table//tr//td//a//@href').extract()
        # Build one item per province or municipality
        for i in range(0, len(first_titles)):
            item = StatsItem()
            # Path and name of the level 1 directory
            first_filename = "./Data/" + first_titles[i]
            # Create the directory if it does not exist yet
            os.makedirs(first_filename, exist_ok=True)
            item['first_filename'] = first_filename
            # Store the level 1 name and URL; the relative URL has to be completed
            item['first_titles'] = first_titles[i]
            # e.g. http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13.html
            item['first_urls'] = self.url + first_urls_list[i]
            items.append(item)
        # Request each level 1 URL
        for item in items:
            yield scrapy.Request(url=item['first_urls'], meta={'meta_1': item}, callback=self.second_parse)
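The concatenation `self.url + first_urls_list[i]` works because the index page sits directly under the prefix. As a hedged alternative, the standard library's `urllib.parse.urljoin` resolves a relative href against the page that contained it, so deeper levels need no manual prefix handling; inside a Scrapy callback, `response.urljoin(href)` does the same against `response.url`. The sketch below reuses only URLs quoted in the comments of this spider; the variable names are illustrative.

```python
from urllib.parse import urljoin

# Page that seeds the crawl (the same URL that is pushed to Redis later on)
index_url = 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/index.html'

# Each href is resolved relative to the page on which it was found
first_url = urljoin(index_url, '13.html')
second_url = urljoin(first_url, '13/1301.html')
third_url = urljoin(second_url, '01/130102.html')
fourth_url = urljoin(third_url, '02/130102001.html')

for url in (first_url, second_url, third_url, fourth_url):
    print(url)
```

This is an alternative to the string concatenation used in the spider, not what the original code does.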

    # Level 2: names and URLs of cities and counties
    def second_parse(self, response):
        print("Processing level 2 data...")
        # Item passed along in the request meta
        meta_1 = response.meta['meta_1']
        # Level 2 names, URLs and IDs
        second_titles = response.xpath('//tr//td[2]/a/text()').extract()
        # Level 2 relative URLs, e.g. 13/1301.html
        second_urls_list = response.xpath('//tbody//table//tr//td[2]//a//@href').extract()
        second_id = response.xpath('//tr//td[1]/a/text()').extract()
        items = []
        for i in range(0, len(second_urls_list)):
            # Full URL, e.g. http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13/1301.html
            second_urls = self.url + second_urls_list[i]
            # The level 1 URL looks like http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13.html
            # With ".html" stripped it becomes http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13,
            # and a level 2 URL such as http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13/1301.html
            # belongs to that province or municipality if it starts with this prefix;
            # its directory is then created inside the matching level 1 folder
            if_belong = second_urls.startswith(meta_1['first_urls'][:-5])
            if if_belong:
                # Directory looks like: \Data\河北省\河北省 石家庄市
                second_filename = meta_1['first_filename'] + "/" + meta_1['first_titles'] + " " + second_titles[i]
                # Create the directory if it does not exist yet
                os.makedirs(second_filename, exist_ok=True)
                item = StatsItem()
                item['first_titles'] = meta_1['first_titles']
                item['first_urls'] = meta_1['first_urls']
                item['second_titles'] = meta_1['first_titles'] + " " + second_titles[i]
                item['second_urls'] = second_urls
                item['second_id'] = second_id[i]
                item['second_filename'] = second_filename
                items.append(item)
        # Request each level 2 URL
        for item in items:
            yield scrapy.Request(url=item['second_urls'], meta={'meta_2': item}, callback=self.third_parse)
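The parent check in `second_parse` is a prefix comparison: dropping the five characters of `.html` from the level 1 URL leaves a prefix that every level 2 URL of the same province starts with. A minimal sketch using the example URLs from the comments; `belongs_to` is a hypothetical helper name that is not part of the spider, and appending `/` to the prefix is an extra safeguard the original code does not apply.

```python
def belongs_to(parent_url, child_url):
    """Return True if child_url lies under parent_url with '.html' removed."""
    suffix = '.html'
    if not parent_url.endswith(suffix):
        return False
    # '.../2016/13.html' -> '.../2016/13/'
    prefix = parent_url[:-len(suffix)] + '/'
    return child_url.startswith(prefix)


base = 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/'
hebei = base + '13.html'

print(belongs_to(hebei, base + '13/1301.html'))
print(belongs_to(hebei, base + '11/1101.html'))
```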

    # Level 3: district names
    def third_parse(self, response):
        # Item passed along in the request meta
        meta_2 = response.meta['meta_2']
        print("Processing level 3 data...")
        # Level 3 names, URLs and IDs
        third_titles = response.xpath('//tr//td[2]/a/text()').extract()
        # Level 3 relative URLs, e.g. 01/130102.html, which completes to
        # http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13/01/130102.html
        third_urls_list = response.xpath('//tbody//table//tr//td[2]//a//@href').extract()
        third_id = response.xpath('//tr//td[1]/a/text()').extract()
        items = []
        for i in range(0, len(third_urls_list)):
            # Full URL, e.g. http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13/01/130102.html
            third_urls = self.url + meta_2['second_id'][:2] + "/" + third_urls_list[i]
            # Level 2 URLs look like:
            #   http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13/1301.html
            #   http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/11/1101.html
            #   http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/34/3415.html
            # Level 3 URLs look like:
            #   http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13/01/130102.html
            #   http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/11/01/110101.html
            #   http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/34/15/341502.html
            # A level 3 URL belongs to the level 2 city or county when the last two digits
            # of the level 2 URL (01) equal the 14th and 13th characters from the end of the
            # level 3 URL (or its 9th and 8th characters from the end). Alternatively, compare
            # the last four digits of the level 2 URL (1301) with the 11th to 8th characters
            # from the end of the level 3 URL.
            # if_belong = third_urls.startswith(meta_2['second_urls'][:-9])
            if meta_2['second_urls'][-7:-5] == third_urls[-14:-12]:
                # if (if_belong):
                # Directory looks like: \Data\河北省\河北省 石家庄市\河北省 石家庄市 长安区 130102000000
                third_filename = meta_2['second_filename'] + '/' + meta_2['second_titles'] + " " + third_titles[i] + " " + third_id[i]
                # Create the directory if it does not exist yet
                os.makedirs(third_filename, exist_ok=True)
                item = StatsItem()
                item['first_titles'] = meta_2['first_titles']
                item['first_urls'] = meta_2['first_urls']
                item['second_titles'] = meta_2['second_titles']
                item['second_urls'] = meta_2['second_urls']
                item['second_id'] = meta_2['second_id']
                item['second_filename'] = meta_2['second_filename']
                item['third_titles'] = meta_2['second_titles'] + " " + third_titles[i] + " " + third_id[i]
                item['third_urls'] = third_urls
                item['third_id'] = third_id[i]
                item['third_filename'] = third_filename
                items.append(item)
        # Request each level 3 URL
        for item in items:
            yield scrapy.Request(url=item['third_urls'], meta={'meta_3': item}, callback=self.fourth_parse)
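The negative slice indices in `third_parse` are easy to get wrong, so it helps to check them against the example URLs from the comments. The sketch below does only that; the variable names are illustrative, and the slices assume the fixed-width file names seen in those examples.

```python
second_url = 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13/1301.html'
third_url = 'http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13/01/130102.html'

# Two digits directly before ".html" in the level 2 URL
city_code = second_url[-7:-5]
# Directory segment in front of the level 3 file name
dir_segment = third_url[-14:-12]
# The same two digits inside the six-digit level 3 file name
code_in_name = third_url[-9:-7]

print(city_code, dir_segment, code_in_name)  # prints: 01 01 01

# Four-digit variant mentioned in the comments: 1301 against 1301
print(second_url[-9:-5] == third_url[-11:-7])  # prints: True
```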

    # Level 4: names of subdistrict offices
    def fourth_parse(self, response):
        # Item passed along in the request meta
        meta_3 = response.meta['meta_3']
        print("Processing level 4 data...")
        # Level 4 names, URLs and IDs
        fourth_titles = response.xpath('//tr//td[2]/a/text()').extract()
        # Level 4 relative URLs, e.g. 02/130102001.html, which completes to
        # http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13/01/02/130102001.html
        fourth_urls_list = response.xpath('//tbody//table//tr//td[2]//a//@href').extract()
        fourth_id = response.xpath('//tr//td[1]/a/text()').extract()
        items = []
        for i in range(0, len(fourth_urls_list)):
            # Full URL, e.g. http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13/01/02/130102001.html
            fourth_urls = self.url + meta_3['third_id'][:2] + "/" + meta_3['third_id'][2:4] + "/" + fourth_urls_list[i]
            # Level 3 URLs look like:
            #   http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13/01/130102.html
            #   http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13/01/130104.html
            #   http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/11/01/110101.html
            #   http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/11/01/110102.html
            # Level 4 URLs look like:
            #   http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13/01/02/130102001.html
            #   http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/13/01/04/130104001.html
            #   http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/11/01/01/110101001.html
            #   http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/11/01/02/110102001.html
            # A level 4 URL belongs to the level 3 district when the last two digits of the
            # level 3 URL (02) equal the 17th and 16th characters from the end of the level 4
            # URL (or its 10th and 9th characters from the end). Alternatively, compare the
            # last six digits of the level 3 URL (130102) with the 14th to 9th characters
            # from the end of the level 4 URL.
            if meta_3['third_urls'][-7:-5] == fourth_urls[-17:-15]:
                # The parent title ends with a space and the parent code (e.g. " 130102000000");
                # cut both off before appending this level's name and code
                # Directory looks like: \Data\河北省\河北省 石家庄市\河北省 石家庄市 长安区 130102000000\河北省 石家庄市 长安区 建北街道办事处 130102001000
                m = meta_3['third_titles']
                fourth_filename = meta_3['third_filename'] + '/' + m[:m.rfind(" ")] + " " + fourth_titles[i] + " " + fourth_id[i]
                # Create the directory if it does not exist yet
                os.makedirs(fourth_filename, exist_ok=True)
                item = StatsItem()
                item['first_titles'] = meta_3['first_titles']
                item['first_urls'] = meta_3['first_urls']
                item['second_titles'] = meta_3['second_titles']
                item['second_urls'] = meta_3['second_urls']
                item['second_id'] = meta_3['second_id']
                item['second_filename'] = meta_3['second_filename']
                item['third_titles'] = meta_3['third_titles']
                item['third_urls'] = meta_3['third_urls']
                item['third_id'] = meta_3['third_id']
                item['third_filename'] = meta_3['third_filename']
                item['fourth_titles'] = m[:m.rfind(" ")] + " " + fourth_titles[i] + " " + fourth_id[i]
                item['fourth_urls'] = fourth_urls
                item['fourth_id'] = fourth_id[i]
                item['fourth_filename'] = fourth_filename
                items.append(item)
        # Request each level 4 URL
        for item in items:
            yield scrapy.Request(url=item['fourth_urls'], meta={"meta_4": item}, callback=self.fifth_parse)
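The expression `m[:m.rfind(" ")]` in `fourth_parse` strips the trailing code from the parent title before the new name and code are appended. A small sketch of that step, assuming titles are space-separated as in the directory examples from the comments; `replace_trailing_code` is a hypothetical helper name. It uses `rsplit`, which, unlike the `rfind` slice, leaves a title without any space unchanged instead of dropping its last character.

```python
def replace_trailing_code(parent_title, name, code):
    """Drop the parent's trailing code, then append this level's name and code."""
    base = parent_title.rsplit(" ", 1)[0]
    return base + " " + name + " " + code


parent = '河北省 石家庄市 长安区 130102000000'
title = replace_trailing_code(parent, '建北街道办事处', '130102001000')
print(title)

# For titles that contain a space, the rfind slice gives the same base
same_base = parent[:parent.rfind(" ")] == parent.rsplit(" ", 1)[0]
print(same_base)
```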

    # Level 5: names of villages and residents' committees
    def fifth_parse(self, response):
        # Item passed along in the request meta
        meta_4 = response.meta['meta_4']
        print("Processing level 5 data...")
        # Level 5 names and IDs
        fifth_titles = response.xpath('//tr[@class="villagetr"]//td[3]/text()').extract()
        fifth_id = response.xpath('//tr[@class="villagetr"]//td[1]/text()').extract()
        # items = []
        for i in range(0, len(fifth_titles)):
            # The last level has no links of its own, so check instead that this page's
            # URL is the level 4 URL that was requested
            if response.url == meta_4['fourth_urls']:
                # Directory looks like: \Data\河北省\河北省 石家庄市\河北省 石家庄市 长安区 130102000000\河北省 石家庄市 长安区 建北街道办事处 130102001000\
                # 河北省 石家庄市 长安区 建北街道办事处 棉一社区居民委员会 130102001001
                # The parent title ends with a space and the parent code (e.g. " 130102001000");
                # cut both off before appending this level's name and code
                m = meta_4['fourth_titles']
                fifth_filename = meta_4['fourth_filename'] + "/" + m[:m.rfind(" ")] + " " + fifth_titles[i] + " " + fifth_id[i]
                os.makedirs(fifth_filename, exist_ok=True)
                item = StatsItem()
                # item['first_titles'] = meta_4['first_titles']
                # item['first_urls'] = meta_4['first_urls']
                # item['second_titles'] = meta_4['second_titles']
                # item['second_urls'] = meta_4['second_urls']
                # item['second_id'] = meta_4['second_id']
                # item['second_filename'] = meta_4['second_filename']
                item['third_titles'] = meta_4['third_titles']
                # item['third_urls'] = meta_4['third_urls']
                item['third_id'] = meta_4['third_id']
                # item['third_filename'] = meta_4['third_filename']
                item['fourth_titles'] = meta_4['fourth_titles']
                # item['fourth_urls'] = meta_4['fourth_urls']
                item['fourth_id'] = meta_4['fourth_id']
                # item['fourth_filename'] = meta_4['fourth_filename']
                # item['fifth_filename'] = fifth_filename
                item['fifth_id'] = fifth_id[i]
                # e.g. 河北省 石家庄市 长安区 建北街道办事处 棉一社区居民委员会 130102001001
                item['fifth_titles'] = m[:m.rfind(" ")] + " " + fifth_titles[i] + " " + fifth_id[i]
                # items.append(item)
                yield item

5. Process the pipelines file to save the data; the results can be written to a file (pipelines.py)

# -*- coding: utf-8 -*-

import json
from datetime import datetime

from openpyxl import Workbook


# JSON encoder subclass that decodes bytes values as UTF-8
class MyEncoder(json.JSONEncoder):
    def default(self, o):
        if isinstance(o, bytes):
            return str(o, encoding='utf-8')
        return json.JSONEncoder.default(self, o)


# Save the scraped data to a local Excel workbook
class StatsPipeline(object):
    def __init__(self):
        # self.filename = open("stats.csv", "w", encoding="utf-8")
        self.wb = Workbook()
        self.ws = self.wb.active
        # Header row
        self.ws.append(['third_titles', 'third_id', 'fourth_titles', 'fourth_id', 'fifth_titles', 'fifth_id'])

    def process_item(self, item, spider):
        # text = json.dumps((dict(item)), ensure_ascii=False, cls=MyEncoder) + '\n'
        # self.filename.write(text)
        # Timestamp of the crawl (UTC)
        item["crawled"] = datetime.utcnow()
        # Spider name, to tell who crawled which data when several people crawl
        item["spider"] = spider.name
        # Only six fields are written to the Excel sheet; adjust as needed
        text = [item['third_titles'], item['third_id'], item['fourth_titles'], item['fourth_id'], item['fifth_titles'], item['fifth_id']]
        self.ws.append(text)
        return item

    def close_spider(self, spider):
        self.wb.save('stats.xlsx')
        print("Data processing finished, thank you for using!")
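The commented-out `json.dumps(..., cls=MyEncoder)` line in `process_item` points to a JSON-lines output as an alternative to Excel. The sketch below shows how that encoder behaves on a record containing a bytes value, using only the standard library. The sample record is made up for illustration, with the name and code taken from the directory example in the spider comments.

```python
import json


class MyEncoder(json.JSONEncoder):
    """Same encoder as in pipelines.py: bytes values are decoded as UTF-8."""

    def default(self, o):
        if isinstance(o, bytes):
            return str(o, encoding='utf-8')
        return json.JSONEncoder.default(self, o)


# Illustrative record; a real item would come from the spider
record = {'fifth_id': b'130102001001', 'fifth_titles': '棉一社区居民委员会'}

# ensure_ascii=False keeps the Chinese text readable in the output line
line = json.dumps(record, ensure_ascii=False, cls=MyEncoder)
print(line)
```

Without `cls=MyEncoder`, `json.dumps` raises `TypeError` on the bytes value.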

6. Configure the settings file (settings.py)

# Use the scrapy-redis duplicate filter instead of Scrapy's default one
DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"

# Use the scrapy-redis scheduler instead of Scrapy's default one
SCHEDULER = "scrapy_redis.scheduler.Scheduler"

# Allow pausing; the request records in Redis are not lost
SCHEDULER_PERSIST = True

# If omitted, the local Redis instance is used by default
# REDIS_HOST = "192.168.0.109"
# REDIS_PORT = 6379

# Default scrapy-redis request queue
SCHEDULER_QUEUE_CLASS = "scrapy_redis.queue.SpiderPriorityQueue"
# Queue, first in first out; choosing this raised "Unhandled error in Deferred"
# SCHEDULER_QUEUE_CLASS = "scrapy_redis.queue.SpiderQueue"
# Stack, first in last out
# SCHEDULER_QUEUE_CLASS = "scrapy_redis.queue.SpiderStack"

# Configure item pipelines: uncomment this block to enable the pipeline file, and add RedisPipeline
ITEM_PIPELINES = {
    'Stats.pipelines.StatsPipeline': 300,
    'scrapy_redis.pipelines.RedisPipeline': 400,
}

# Obey robots.txt rules; for details see https://blog.csdn.net/z564359805/article/details/80691677
ROBOTSTXT_OBEY = False

# Override the default request headers: add the User-Agent
DEFAULT_REQUEST_HEADERS = {
    'User-Agent': 'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Trident/5.0);',
    # 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    # 'Accept-Language': 'en',
}

# Optionally write the log to a local file
LOG_FILE = "stats.log"
LOG_LEVEL = "DEBUG"
# Send everything written to stdout, including print output, to the log
LOG_STDOUT = True

7. Open the Redis database as described at the following link:

https://blog.csdn.net/z564359805/article/details/80808155

8. With the setup above complete, start crawling: change into the spiders folder and run the project command to start the Spider:

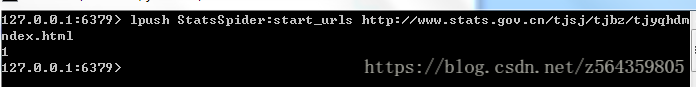

scrapy runspider stats.py

9. On the Master (the central server), enter the push command in redis-cli, using the following format:

Enter: lpush StatsSpider:start_urls http://www.stats.gov.cn/tjsj/tjbz/tjyqhdmhcxhfdm/2016/index.html