Dubbo Service Invocation

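After writing a dozen or so articles, I have a reasonable understanding of how Dubbo runs. Service invocation is the most important part of it, and I estimate that describing the whole process will take at least five or six articles.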

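Handler wrapping in the server-side Netty

Service export does two things: first, it converts the service provider's information into a URL and puts it into the registry; second, it starts the server. Netty processes request data through a chain of handlers, so we need to understand the handlers that process a request.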

private ExchangeServer createServer(URL url) {

// part of the code omitted

ExchangeServer server;

try {

// requestHandler is the request processor; its type is ExchangeHandler

// requests received on the port in the url are handled by requestHandler

server = Exchangers.bind(url, requestHandler);

} catch (RemotingException e) {

throw new RpcException("Fail to start server(url: " + url + ") " + e.getMessage(), e);

}

return server;

}

The requestHandler passed in here is an instance of an anonymous subclass of ExchangeHandlerAdapter.

ExchangeHandlerAdapter

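When a request arrives, received(Channel channel, Object message) is called first, which then calls reply(ExchangeChannel channel, Object message) to process the request. message is the request data, and channel represents the long-lived connection to the client.

Workflow (the service is ultimately executed through the Invoker, for example via reflection with Method.invoke):

- Type conversion: cast the Object to an Invocation;

- Call getInvoker to obtain the service provider's Invoker (this Invoker is wrapped in several layers);

- Set the remote address remoteAddress on the RpcContext;

- Call the Invoker's invoke method and obtain the result;

- Return a CompletableFuture instance;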
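The last hop of the steps above, invoking the service method reflectively, can be sketched in plain Java. This is only an illustration under stated assumptions: GreetingServiceImpl and SimpleInvocation are hypothetical stand-ins (they are not Dubbo classes), and a real Invoker adds several wrapping layers around this call.

```java
import java.lang.reflect.Method;

public class ReflectiveInvokeSketch {

    /** Hypothetical service implementation standing in for a real provider bean. */
    public static class GreetingServiceImpl {
        public String sayHello(String name) {
            return "Hello, " + name;
        }
    }

    /** Hypothetical, stripped-down stand-in for an Invocation: method name, parameter types, arguments. */
    public static final class SimpleInvocation {
        final String methodName;
        final Class<?>[] parameterTypes;
        final Object[] arguments;

        public SimpleInvocation(String methodName, Class<?>[] parameterTypes, Object[] arguments) {
            this.methodName = methodName;
            this.parameterTypes = parameterTypes;
            this.arguments = arguments;
        }
    }

    /** Looks up the target method by name and parameter types, then calls it reflectively. */
    public static Object invoke(Object target, SimpleInvocation inv) throws Exception {
        Method method = target.getClass().getMethod(inv.methodName, inv.parameterTypes);
        return method.invoke(target, inv.arguments);
    }

    public static void main(String[] args) throws Exception {
        SimpleInvocation inv = new SimpleInvocation(
                "sayHello", new Class<?>[] {String.class}, new Object[] {"dubbo"});
        System.out.println(invoke(new GreetingServiceImpl(), inv));
    }
}
```

The method name and parameter types carried by the invocation are what make the lookup possible without the server knowing the method at compile time.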

private ExchangeHandler requestHandler = new ExchangeHandlerAdapter() {

@Override

public CompletableFuture<Object> reply(ExchangeChannel channel, Object message) throws RemotingException {

Invocation inv = (Invocation) message;

Invoker<?> invoker = getInvoker(channel, inv);

// part of the code omitted

RpcContext.getContext().setRemoteAddress(channel.getRemoteAddress());

Result result = invoker.invoke(inv);

return result.completionFuture().thenApply(Function.identity());

}

@Override

public void received(Channel channel, Object message) throws RemotingException {

if (message instanceof Invocation) {

// handling logic when the server receives an Invocation

reply((ExchangeChannel) channel, message);

} else {

super.received(channel, message);

}

}

}

Exchangers.bind(url, requestHandler)

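Process:

- Call getExchanger(url) to obtain the Exchanger extension implementation through the SPI mechanism; the default implementation is HeaderExchanger;

- Call its bind method to start Netty;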

public static ExchangeServer bind(URL url, ExchangeHandler handler) throws RemotingException {

// codec is the protocol encoding scheme

url = url.addParameterIfAbsent(Constants.CODEC_KEY, "exchange");

// obtain the HeaderExchanger from the url and bind with it, which yields a HeaderExchangeServer

return getExchanger(url).bind(url, handler);

}

HeaderExchanger#bind(URL, handler)

Work done:

- First, wrap the incoming ExchangeHandlerAdapter instance handler in a HeaderExchangeHandler;

- Then wrap the HeaderExchangeHandler in a DecodeHandler instance;

- Call Transporters#bind to create a NettyServer;

- Wrap the NettyServer instance in a HeaderExchangeServer and return it;

@Override

public ExchangeServer bind(URL url, ExchangeHandler handler) throws RemotingException {

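// Netty is started below

// the handler is wrapped in two layers; when a request is processed, each handler layer is responsible for different logic

// DecodeHandler is used for both connect and bind: its decoding step parses the InputStream into an RpcInvocation object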

return new HeaderExchangeServer(Transporters.bind(url, new DecodeHandler(new HeaderExchangeHandler(handler))));

}

Transporters.bind(url, handlers)

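Workflow:

- If multiple handlers are bound, they are wrapped in a ChannelHandlerDispatcher, which loops over every handler when a connection event arrives;

- Call getTransporter() to obtain a Transporter instance through the SPI mechanism; by default this is a NettyTransporter instance;

- Call NettyTransporter#bind to create a NettyServer;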

public static Server bind(URL url, ChannelHandler... handlers) throws RemotingException {

//....

//

ChannelHandler handler;

if (handlers.length == 1) {

handler = handlers[0];

} else {

handler = new ChannelHandlerDispatcher(handlers);

}

// bind through NettyTransporter; Transporter represents the network transport layer

return getTransporter().bind(url, handler);

}

NettyTransporter#bind(url, listener)

Creates a NettyServer instance:

public class NettyTransporter implements Transporter {

public static final String NAME = "netty";

@Override

public Server bind(URL url, ChannelHandler listener) throws RemotingException {

return new NettyServer(url, listener);

}

@Override

public Client connect(URL url, ChannelHandler listener) throws RemotingException {

return new NettyClient(url, listener);

}

}

NettyServer(URL url, ChannelHandler handler)

- Call ChannelHandlers.wrap to wrap the DecodeHandler instance;

- Call the parent class constructor;

public class NettyServer extends AbstractServer implements Server {

private Map<String, Channel> channels;

private ServerBootstrap bootstrap;

private io.netty.channel.Channel channel;

private EventLoopGroup bossGroup;

private EventLoopGroup workerGroup;

public NettyServer(URL url, ChannelHandler handler) throws RemotingException {

super(url, ChannelHandlers.wrap(handler, ExecutorUtil.setThreadName(url, SERVER_THREAD_POOL_NAME)));

}

ChannelHandlers.wrap(ChannelHandler handler, URL url)

- Call ChannelHandlers#getInstance() to obtain the ChannelHandlers singleton, which is eagerly initialized;

- Call wrapInternal(handler, url) to wrap the DecodeHandler instance handler:

public static ChannelHandler wrap(ChannelHandler handler, URL url) {

return ChannelHandlers.getInstance().wrapInternal(handler, url);

}

ChannelHandlers#wrapInternal(handler, url)

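Workflow:

- Through the SPI mechanism (Dispatcher), wrap the DecodeHandler instance in an AllChannelHandler instance;

- Wrap the AllChannelHandler in a HeartbeatHandler, and then wrap that in a MultiMessageHandler instance;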

public class ChannelHandlers {

// singleton

private static ChannelHandlers INSTANCE = new ChannelHandlers();

protected ChannelHandlers() {

}

public static ChannelHandler wrap(ChannelHandler handler, URL url) {

return ChannelHandlers.getInstance().wrapInternal(handler, url);

}

protected static ChannelHandlers getInstance() {

return INSTANCE;

}

static void setTestingChannelHandlers(ChannelHandlers instance) {

INSTANCE = instance;

}

protected ChannelHandler wrapInternal(ChannelHandler handler, URL url) {

// first, ExtensionLoader.getExtensionLoader(Dispatcher.class).getAdaptiveExtension().dispatch(handler, url)

// yields an AllChannelHandler(handler, url)

// AllChannelHandler is then wrapped in a HeartbeatHandler, and HeartbeatHandler in a MultiMessageHandler

// so when Netty receives data it passes through MultiMessageHandler ---> HeartbeatHandler ---> AllChannelHandler

// and AllChannelHandler calls handler

return new MultiMessageHandler(new HeartbeatHandler(ExtensionLoader.getExtensionLoader(Dispatcher.class)

.getAdaptiveExtension().dispatch(handler, url)));

}

}

The Dispatcher here relates to the server-side thread model; it is noted here and discussed in the thread model section.

AbstractServer(URL url, ChannelHandler handler)

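Creating a NettyServer instance calls the parent class constructor; the parent class is the abstract class AbstractServer.

Workflow:

- Call the parent constructor, which assigns handler to the handler field of the ancestor class AbstractPeer;

- Obtain the local address localAddress;

- Obtain the IP the service binds to;

- Obtain the port the service binds to;

- Create the InetSocketAddress instance bindAddress, the address used when opening the server socket;

- Call doOpen() to start Netty;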

public AbstractServer(URL url, ChannelHandler handler) throws RemotingException {

super(url, handler);

localAddress = getUrl().toInetSocketAddress();

String bindIp = getUrl().getParameter(Constants.BIND_IP_KEY, getUrl().getHost());

int bindPort = getUrl().getParameter(Constants.BIND_PORT_KEY, getUrl().getPort());

if (url.getParameter(ANYHOST_KEY, false) || NetUtils.isInvalidLocalHost(bindIp)) {

bindIp = ANYHOST_VALUE;

}

bindAddress = new InetSocketAddress(bindIp, bindPort);

this.accepts = url.getParameter(ACCEPTS_KEY, DEFAULT_ACCEPTS);

this.idleTimeout = url.getParameter(IDLE_TIMEOUT_KEY, DEFAULT_IDLE_TIMEOUT);

try {

doOpen();

if (logger.isInfoEnabled()) {

logger.info("Start " + getClass().getSimpleName() + " bind " + getBindAddress() + ", export " + getLocalAddress());

}

} catch (Throwable t) {

//....

}

//...

}

AbstractEndpoint(url, handler)

Calls the parent constructor, then sets the codec, the service provider's timeout, and the connection timeout.

public AbstractEndpoint(URL url, ChannelHandler handler) {

super(url, handler);

this.codec = getChannelCodec(url);

this.timeout = url.getPositiveParameter(TIMEOUT_KEY, DEFAULT_TIMEOUT);

this.connectTimeout = url.getPositiveParameter(Constants.CONNECT_TIMEOUT_KEY, Constants.DEFAULT_CONNECT_TIMEOUT);

}

AbstractPeer(URL url, ChannelHandler handler)

Stores the handler:

public abstract class AbstractPeer implements Endpoint, ChannelHandler {

private final ChannelHandler handler;

private volatile URL url;

private volatile boolean closing;

private volatile boolean closed;

public AbstractPeer(URL url, ChannelHandler handler) {

this.url = url;

this.handler = handler;

}

NettyServer#doOpen()

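In the process above, new NettyServer assigns the MultiMessageHandler to the handler field that NettyServer inherits.

NettyServer therefore also acts as a ChannelHandler that delegates to the MultiMessageHandler.

Workflow:

- Create the ServerBootstrap;

- Create the boss thread group and the worker (IO event) thread group;

- Create a NettyServerHandler, passing in this (which delegates to the MultiMessageHandler), so the chain is wrapped in a NettyServerHandler instance;

- Set the server options;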

@Override

protected void doOpen() throws Throwable {

bootstrap = new ServerBootstrap();

bossGroup = new NioEventLoopGroup(1, new DefaultThreadFactory("NettyServerBoss", true));

workerGroup = new NioEventLoopGroup(getUrl().getPositiveParameter(IO_THREADS_KEY, Constants.DEFAULT_IO_THREADS),

new DefaultThreadFactory("NettyServerWorker", true));

final NettyServerHandler nettyServerHandler = new NettyServerHandler(getUrl(), this);

channels = nettyServerHandler.getChannels();

bootstrap.group(bossGroup, workerGroup)

.channel(NioServerSocketChannel.class)

.childOption(ChannelOption.TCP_NODELAY, Boolean.TRUE)

.childOption(ChannelOption.SO_REUSEADDR, Boolean.TRUE)

.childOption(ChannelOption.ALLOCATOR, PooledByteBufAllocator.DEFAULT)

.childHandler(new ChannelInitializer<NioSocketChannel>() {

@Override

protected void initChannel(NioSocketChannel ch) throws Exception {

// FIXME: should we use getTimeout()?

int idleTimeout = UrlUtils.getIdleTimeout(getUrl());

NettyCodecAdapter adapter = new NettyCodecAdapter(getCodec(), getUrl(), NettyServer.this);

ch.pipeline()//.addLast("logging",new LoggingHandler(LogLevel.INFO))//for debug

.addLast("decoder", adapter.getDecoder())

.addLast("encoder", adapter.getEncoder())

.addLast("server-idle-handler", new IdleStateHandler(0, 0, idleTimeout, MILLISECONDS))

.addLast("handler", nettyServerHandler);

}

});

// bind

ChannelFuture channelFuture = bootstrap.bind(getBindAddress());

channelFuture.syncUninterruptibly();

channel = channelFuture.channel();

}

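Summary of handler wrapping in the server-side Netty

- The requestHandler instance (an ExchangeHandlerAdapter) is wrapped in a HeaderExchangeHandler;

- The HeaderExchangeHandler instance is wrapped in a DecodeHandler;

- The DecodeHandler instance is wrapped in an AllChannelHandler;

- The AllChannelHandler instance is wrapped in a HeartbeatHandler, which is wrapped in a MultiMessageHandler instance;

- The MultiMessageHandler instance is held by the NettyServer, which is wrapped in a NettyServerHandler;

- Finally, the NettyServerHandler is added to the pipeline as the "handler" entry;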

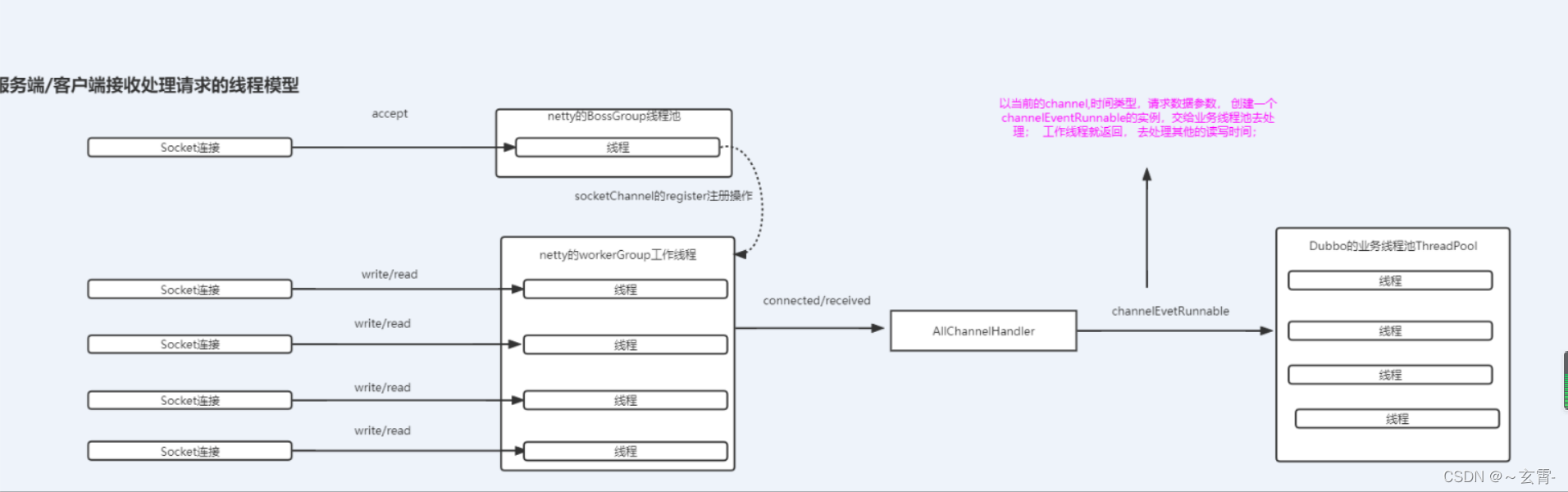
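The wrapping order summarized above is a classic decorator chain. The following is a minimal sketch, not Dubbo code: Handler and Named are hypothetical types invented for illustration, and each layer only records its name before delegating inward, so the trace shows the order in which a received message would travel.

```java
import java.util.ArrayList;
import java.util.List;

public class HandlerChainSketch {

    /** Hypothetical, simplified handler interface (not Dubbo's ChannelHandler). */
    interface Handler {
        void received(String message, List<String> trace);
    }

    /** Decorator that records its own name and then delegates to the wrapped handler, if any. */
    static final class Named implements Handler {
        private final String name;
        private final Handler next; // null for the innermost handler

        Named(String name, Handler next) {
            this.name = name;
            this.next = next;
        }

        @Override
        public void received(String message, List<String> trace) {
            trace.add(name);
            if (next != null) {
                next.received(message, trace);
            }
        }
    }

    /** Builds the chain from the inside out and returns the order in which layers see a message. */
    public static List<String> trace() {
        Handler chain = new Named("requestHandler", null);
        String[] wrappers = {
            "HeaderExchangeHandler", "DecodeHandler", "AllChannelHandler",
            "HeartbeatHandler", "MultiMessageHandler", "NettyServer", "NettyServerHandler"
        };
        for (String wrapper : wrappers) {
            chain = new Named(wrapper, chain); // each new layer wraps the previous one
        }
        List<String> trace = new ArrayList<>();
        chain.received("request", trace);
        return trace;
    }

    public static void main(String[] args) {
        System.out.println(String.join(" -> ", trace()));
    }
}
```

The outermost layer is the last one created, which is why NettyServerHandler sees the message first and requestHandler sees it last.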

Server-side thread model

The step involved is the wrapping of DecodeHandler in AllChannelHandler:

protected ChannelHandler wrapInternal(ChannelHandler handler, URL url) {

return new MultiMessageHandler(new HeartbeatHandler(ExtensionLoader.getExtensionLoader(Dispatcher.class)

.getAdaptiveExtension().dispatch(handler, url)));

}

The Dispatcher extension implementation is obtained through the SPI mechanism:

@SPI(AllDispatcher.NAME)

public interface Dispatcher {

/**

* dispatch the message to threadpool.

*

* @param handler

* @param url

* @return channel handler

*/

@Adaptive({

Constants.DISPATCHER_KEY, "dispather", "channel.handler"})

// The last two parameters are reserved for compatibility with the old configuration

ChannelHandler dispatch(ChannelHandler handler, URL url);

}

By default, AllDispatcher#dispatch is used.
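How the adaptive extension picks a Dispatcher name can be sketched as a lookup over URL parameters. This is a simplified illustration under assumptions: the URL is modelled as a plain Map, Constants.DISPATCHER_KEY is assumed to be the string "dispatcher", and the keys from the @Adaptive annotation are tried in order before falling back to the @SPI default "all".

```java
import java.util.HashMap;
import java.util.Map;

public class AdaptiveNameSketch {

    // Default extension name, mirroring @SPI(AllDispatcher.NAME).
    static final String DEFAULT_NAME = "all";

    // Keys from the @Adaptive annotation, in priority order.
    // "dispatcher" is an assumed value for Constants.DISPATCHER_KEY.
    static final String[] KEYS = {"dispatcher", "dispather", "channel.handler"};

    /** Returns the first non-empty value among the adaptive keys, or the default name. */
    public static String resolve(Map<String, String> urlParameters) {
        for (String key : KEYS) {
            String value = urlParameters.get(key);
            if (value != null && !value.isEmpty()) {
                return value;
            }
        }
        return DEFAULT_NAME;
    }

    public static void main(String[] args) {
        Map<String, String> params = new HashMap<>();
        System.out.println(resolve(params));   // no key set, so the default is used
        params.put("channel.handler", "direct");
        System.out.println(resolve(params));   // legacy key is honoured
        params.put("dispatcher", "message");
        System.out.println(resolve(params));   // primary key takes priority
    }
}
```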

AllDispatcher#dispatch(handler, url)

Creates an AllChannelHandler instance that wraps the given handler:

public class AllDispatcher implements Dispatcher {

public static final String NAME = "all";

@Override

public ChannelHandler dispatch(ChannelHandler handler, URL url) {

return new AllChannelHandler(handler, url);

}

}

AllChannelHandler

After Netty receives data, the received method is called.

public class AllChannelHandler extends WrappedChannelHandler {

public AllChannelHandler(ChannelHandler handler, URL url) {

// the parent constructor creates a thread pool

super(handler, url);

}

// handles the connection-established event

@Override

public void connected(Channel channel) throws RemotingException {

ExecutorService executor = getExecutorService();

try {

executor.execute(new ChannelEventRunnable(channel, handler, ChannelState.CONNECTED));

} catch (Throwable t) {

throw new ExecutionException("connect event", channel, getClass() + " error when process connected event .", t);

}

}

// handles the disconnect event

@Override

public void disconnected(Channel channel) throws RemotingException {

ExecutorService executor = getExecutorService();

try {

executor.execute(new ChannelEventRunnable(channel, handler, ChannelState.DISCONNECTED));

} catch (Throwable t) {

throw new ExecutionException("disconnect event", channel, getClass() + " error when process disconnected event .", t);

}

}

@Override

public void received(Channel channel, Object message) throws RemotingException {

ExecutorService executor = getExecutorService();

try {

// hand the message over to the thread pool

executor.execute(new ChannelEventRunnable(channel, handler, ChannelState.RECEIVED, message));

} catch (Throwable t) {

//TODO A temporary solution to the problem that the exception information can not be sent to the opposite end after the thread pool is full. Need a refactoring

//fix The thread pool is full, refuses to call, does not return, and causes the consumer to wait for time out

if(message instanceof Request && t instanceof RejectedExecutionException){

Request request = (Request)message;

if(request.isTwoWay()){

String msg = "Server side(" + url.getIp() + "," + url.getPort() + ") threadpool is exhausted ,detail msg:" + t.getMessage();

Response response = new Response(request.getId(), request.getVersion());

response.setStatus(Response.SERVER_THREADPOOL_EXHAUSTED_ERROR);

response.setErrorMessage(msg);

channel.send(response);

return;

}

}

throw new ExecutionException(message, channel, getClass() + " error when process received event .", t);

}

}

// handles exceptions

@Override

public void caught(Channel channel, Throwable exception) throws RemotingException {

ExecutorService executor = getExecutorService();

try {

executor.execute(new ChannelEventRunnable(channel, handler, ChannelState.CAUGHT, exception));

} catch (Throwable t) {

throw new ExecutionException("caught event", channel, getClass() + " error when process caught event .", t);

}

}

}

WrappedChannelHandler

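This class is the parent class of AllChannelHandler.

Constructor workflow:

- Assign the DecodeHandler instance to the handler field;

- Obtain a thread pool through the SPI mechanism; the default ThreadPool extension is FixedThreadPool, which creates a fixed-size pool;

- Determine the value of componentKey: for a consumer it is consumer; for a service provider it is java.util.concurrent.ExecutorService;

- Obtain a DataStore through the SPI mechanism (SimpleDataStore) and call its put method. Internally it is a Map<String, Map>, that is, a two-level map: the key of the first level is componentKey, the key of the second level is the service port, and the value is the thread pool created in step 2;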

public class WrappedChannelHandler implements ChannelHandlerDelegate {

protected static final Logger logger = LoggerFactory.getLogger(WrappedChannelHandler.class);

protected static final ExecutorService SHARED_EXECUTOR = Executors.newCachedThreadPool(new NamedThreadFactory("DubboSharedHandler", true));

protected final ExecutorService executor;

protected final ChannelHandler handler;

protected final URL url;

public WrappedChannelHandler(ChannelHandler handler, URL url) {

this.handler = handler;

this.url = url;

executor = (ExecutorService) ExtensionLoader.getExtensionLoader(ThreadPool.class).getAdaptiveExtension().getExecutor(url);

String componentKey = Constants.EXECUTOR_SERVICE_COMPONENT_KEY;

if (CONSUMER_SIDE.equalsIgnoreCase(url.getParameter(SIDE_KEY))) {

componentKey = CONSUMER_SIDE;

}

// DataStore is backed by a map whose content looks like this: {"java.util.concurrent.ExecutorService":{"20880":executor}}

// Why is this recorded? Presumably it is used later, during request processing

DataStore dataStore = ExtensionLoader.getExtensionLoader(DataStore.class).getDefaultExtension();

dataStore.put(componentKey, Integer.toString(url.getPort()), executor);

}

}

FixedThreadPool

Creates a thread pool:

public class FixedThreadPool implements ThreadPool {

@Override

public Executor getExecutor(URL url) {

String name = url.getParameter(THREAD_NAME_KEY, DEFAULT_THREAD_NAME);

int threads = url.getParameter(THREADS_KEY, DEFAULT_THREADS);

int queues = url.getParameter(QUEUES_KEY, DEFAULT_QUEUES);

return new ThreadPoolExecutor(threads, threads, 0, TimeUnit.MILLISECONDS,

queues == 0 ? new SynchronousQueue<Runnable>() :

(queues < 0 ? new LinkedBlockingQueue<Runnable>()

: new LinkedBlockingQueue<Runnable>(queues)),

new NamedInternalThreadFactory(name, true), new AbortPolicyWithReport(name, url));

}

}
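The queue choice in getExecutor matters when the pool is full. The sketch below uses only java.util.concurrent and assumes threads = 1 and queues = 0 (so a SynchronousQueue is used), with the JDK's AbortPolicy standing in for Dubbo's AbortPolicyWithReport. With the single thread busy and no queue capacity, a second task is rejected immediately, which is the situation AllChannelHandler#received handles by replying with a thread-pool-exhausted response.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class FixedPoolRejectionSketch {

    /** Returns true if a second task is rejected while the only worker thread is busy. */
    public static boolean secondTaskRejected() {
        // Same shape as the pool above with threads = 1 and queues = 0.
        ThreadPoolExecutor pool = new ThreadPoolExecutor(1, 1, 0, TimeUnit.MILLISECONDS,
                new SynchronousQueue<Runnable>(), new ThreadPoolExecutor.AbortPolicy());
        CountDownLatch release = new CountDownLatch(1);
        try {
            // Occupy the single worker thread until the latch is released.
            pool.execute(() -> {
                try {
                    release.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            try {
                pool.execute(() -> { });
                return false; // the task was accepted
            } catch (RejectedExecutionException e) {
                return true;  // no idle thread and no queue capacity
            }
        } finally {
            release.countDown();
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("second task rejected: " + secondTaskRejected());
    }
}
```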

SimpleDataStore

This DataStore has an internal data field with a Map<String, Map> structure:

public class SimpleDataStore implements DataStore {

// <component name or id, <data-name, data-value>>

private ConcurrentMap<String, ConcurrentMap<String, Object>> data =

new ConcurrentHashMap<String, ConcurrentMap<String, Object>>();

@Override

public Map<String, Object> get(String componentName) {

ConcurrentMap<String, Object> value = data.get(componentName);

if (value == null) {

return new HashMap<String, Object>();

}

return new HashMap<String, Object>(value);

}

@Override

public Object get(String componentName, String key) {

if (!data.containsKey(componentName)) {

return null;

}

return data.get(componentName).get(key);

}

@Override

public void put(String componentName, String key, Object value) {

Map<String, Object> componentData = data.get(componentName);

if (null == componentData) {

data.putIfAbsent(componentName, new ConcurrentHashMap<String, Object>());

componentData = data.get(componentName);

}

componentData.put(key, value);

}

@Override

public void remove(String componentName, String key) {

if (!data.containsKey(componentName)) {

return;

}

data.get(componentName).remove(key);

}

}
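To make the two-level layout concrete, here is a minimal sketch of the same idea written with computeIfAbsent. TwoLevelStoreSketch is a hypothetical class for illustration, not the Dubbo implementation; the component key and the port "20880" simply follow the example in the comment shown earlier.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class TwoLevelStoreSketch {

    // <component name, <data name, data value>>
    private final ConcurrentMap<String, ConcurrentMap<String, Object>> data = new ConcurrentHashMap<>();

    /** Stores value under componentName -> key, creating the inner map on first use. */
    public void put(String componentName, String key, Object value) {
        data.computeIfAbsent(componentName, k -> new ConcurrentHashMap<>()).put(key, value);
    }

    /** Returns the stored value, or null if the component or the key is unknown. */
    public Object get(String componentName, String key) {
        ConcurrentMap<String, Object> component = data.get(componentName);
        return component == null ? null : component.get(key);
    }

    public static void main(String[] args) {
        TwoLevelStoreSketch store = new TwoLevelStoreSketch();
        ExecutorService executor = Executors.newFixedThreadPool(1);
        try {
            // First-level key: component key; second-level key: the service port.
            store.put(ExecutorService.class.getName(), "20880", executor);
            System.out.println(store.get(ExecutorService.class.getName(), "20880") == executor);
        } finally {
            executor.shutdown();
        }
    }
}
```

Keying by port lets other components look up the business thread pool that belongs to a given server.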

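Thread pools involved in Dubbo

On the server side there are several thread pools:

- bossGroup, the Netty thread pool that handles connection (accept) events;

- workerGroup, the Netty worker thread pool that handles read/write events;

- The business thread pool that handles business logic, i.e. the pool belonging to AllChannelHandler;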

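Dubbo's thread model

- An accept event is handled by Netty's bossGroup; once it is handled, the channel is registered with the worker thread pool workerGroup.

- A read/write event is handled by Netty's workerGroup. In Dubbo this is called the IO thread pool, because it handles network IO.

- A worker thread calls AllChannelHandler's received method to handle the data-received event. It creates a ChannelEventRunnable from the current channel, the event type, and the request data, and hands it to the business thread pool; the worker thread then returns to handle other read/write events.

- Why create a separate business thread pool to process requests?

- The worker pool typically has CPU cores + 1 threads. If IO threads ran business logic and every IO thread were busy with a slow operation, the whole server or consumer would block and the service would quickly become unavailable. So the business processing is handed off to Dubbo's business thread pool and the IO thread returns immediately to handle new read/write events, which improves system performance. Consequently, if no thread model is specified, Dubbo uses the all thread model, which creates an AllChannelHandler together with its business thread pool.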
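The hand-off described above can be sketched with two plain executors. This is an illustration only: a single-thread executor named "io-worker" stands in for a Netty IO thread and a fixed pool named "biz-worker" stands in for the Dubbo business pool; both names are made up for the example.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

public class IoHandoffSketch {

    /** Returns {name of the thread that received the message, name of the thread that ran the business logic}. */
    public static String[] handle() throws Exception {
        ExecutorService ioThread = Executors.newSingleThreadExecutor(r -> new Thread(r, "io-worker"));
        ExecutorService businessPool = Executors.newFixedThreadPool(2, r -> new Thread(r, "biz-worker"));
        try {
            CompletableFuture<String> businessThreadName = new CompletableFuture<>();
            // The "IO thread" only submits the work and returns right away.
            Future<String> ioThreadName = ioThread.submit(() -> {
                businessPool.execute(
                        () -> businessThreadName.complete(Thread.currentThread().getName()));
                return Thread.currentThread().getName();
            });
            return new String[] {
                ioThreadName.get(5, TimeUnit.SECONDS),
                businessThreadName.get(5, TimeUnit.SECONDS)
            };
        } finally {
            ioThread.shutdown();
            businessPool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        String[] names = handle();
        System.out.println("received on " + names[0] + ", processed on " + names[1]);
    }
}
```

Because the receiving thread never waits for the business task, a slow service method delays only its own request instead of stalling every connection served by that IO thread.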

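Dubbo's other thread models

- all

All messages are dispatched to the thread pool, including requests, responses, connect events, disconnect events, and heartbeats.

- direct

All messages are handled directly on the IO thread, i.e. the IO thread runs the business logic.

- message

Only request and response messages are dispatched to the thread pool; connect and disconnect events, heartbeats, and other messages are executed directly on the IO thread.

- execution

Only request messages are dispatched to the thread pool; responses, connect and disconnect events, heartbeats, and other messages are executed directly on the IO thread.

- connection

On the IO thread, connect and disconnect events are put into a queue and executed one by one in order; other messages are dispatched to the thread pool.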
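The five dispatch rules listed above can be restated as a small lookup function. This is a sketch that only encodes the descriptions given in the list; Event, Target, and route are hypothetical names and do not correspond to Dubbo classes.

```java
public class DispatchPolicySketch {

    /** Kinds of messages mentioned in the list of thread models. */
    public enum Event { REQUEST, RESPONSE, CONNECTED, DISCONNECTED, HEARTBEAT }

    /** Where a message ends up being processed. */
    public enum Target { BUSINESS_POOL, IO_THREAD, ORDERED_QUEUE }

    /** Maps a thread model name and an event kind to the place where the event is handled. */
    public static Target route(String model, Event event) {
        switch (model) {
            case "all":
                return Target.BUSINESS_POOL;
            case "direct":
                return Target.IO_THREAD;
            case "message":
                return (event == Event.REQUEST || event == Event.RESPONSE)
                        ? Target.BUSINESS_POOL : Target.IO_THREAD;
            case "execution":
                return event == Event.REQUEST ? Target.BUSINESS_POOL : Target.IO_THREAD;
            case "connection":
                return (event == Event.CONNECTED || event == Event.DISCONNECTED)
                        ? Target.ORDERED_QUEUE : Target.BUSINESS_POOL;
            default:
                throw new IllegalArgumentException("unknown thread model: " + model);
        }
    }

    public static void main(String[] args) {
        for (String model : new String[] {"all", "direct", "message", "execution", "connection"}) {
            System.out.println(model + ": RESPONSE -> " + route(model, Event.RESPONSE)
                    + ", DISCONNECTED -> " + route(model, Event.DISCONNECTED));
        }
    }
}
```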

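The corresponding thread pools, all of which are implementations of the ThreadPool interface:

- fixed

A fixed-size thread pool; threads are created at startup, never shut down, and held permanently.

Created by FixedThreadPool.

- cached

A cached thread pool; threads idle for one minute are removed automatically and recreated when needed.

Created by CachedThreadPool.

- limited

An elastic thread pool whose thread count only grows and never shrinks. Growing without shrinking avoids the performance problems caused by a sudden burst of traffic arriving just as the pool shrinks.

Created by LimitedThreadPool.

- eager

A thread pool that prefers creating worker threads. When the number of tasks is greater than corePoolSize but less than maximumPoolSize, it creates a new worker to handle the task first. When the number of tasks exceeds maximumPoolSize, tasks are put into the blocking queue. When the blocking queue is full, a RejectedExecutionException is thrown. (Compared with cached: cached throws an exception as soon as the number of tasks exceeds maximumPoolSize instead of putting the task into a blocking queue.)

Created by EagerThreadPool.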