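1. Problem

The yolov5-v4.0 version of the tensorrtx project ships a Python script, yolov5-trt.py. When run on a Jetson Nano, this script uses so much memory that the process is eventually terminated with a "Killed" error. The C++ program does not have this problem.

Reading yolov5-trt.py shows that it does not call the C++ code. Instead, it re-implements the whole pipeline in Python with torch, pycuda, and tensorrt, so its memory footprint ends up even larger than running plain PyTorch.

I therefore tried calling the already-written C++ code from Python.

The main reference is the blog post 《YOLOv5 Tensorrt Python/C++部署》 ("YOLOv5 TensorRT Python/C++ Deployment"), which introduces the Yolov5_Tensorrt_Win10 project, a TensorRT-accelerated deployment of yolov5-v6.0 on Windows. The original author also describes a deployment method for Jetson, but it needs a few modifications before it works.

My base environment: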

- jetpack 4.5 (L4T 32.5.0)

- cuda 10.2

- cudnn 8.0

- python 3.6

- tensorrt 7.1.3

- opencv 4.1.1

2. Preparation

2.1. Download the yolov5-v6.0 and Yolov5_Tensorrt_Win10 source code

git clone -b v6.0 https://github.com/ultralytics/yolov5.git

git clone https://gitcode.net/mirrors/Monday-Leo/yolov5_tensorrt_win10.git

Download link for the yolov5-v6.0 weights:

https://github.com/ultralytics/yolov5/releases/download/v6.0/yolov5s.pt

2.2. Build Yolov5_Tensorrt_Win10

First, delete the include directory:

cd yolov5_tensorrt_win10

rm -r include

Next, replace CMakeLists.txt as follows:

mv CMakeLists.txt CMakeLists_win.txt # the default CMakeLists.txt targets Windows

vim CMakeLists.txt # create a new CMakeLists.txt

Fill the new CMakeLists.txt with the following content, then save it.

cmake_minimum_required(VERSION 2.6)

project(yolov5)

add_definitions(-std=c++11)

add_definitions(-DAPI_EXPORTS)

option(CUDA_USE_STATIC_CUDA_RUNTIME OFF)

set(CMAKE_CXX_STANDARD 11)

set(CMAKE_BUILD_TYPE Debug)

find_package(CUDA REQUIRED)

if(WIN32)

enable_language(CUDA)

endif(WIN32)

include_directories(${PROJECT_SOURCE_DIR}/include)

# include and link dirs of cuda and tensorrt, you need adapt them if yours are different

# cuda

include_directories(/usr/local/cuda/include)

link_directories(/usr/local/cuda/lib64)

# tensorrt (Jetson is aarch64, so the headers and libraries live under aarch64-linux-gnu)

include_directories(/usr/include/aarch64-linux-gnu/)

link_directories(/usr/lib/aarch64-linux-gnu/)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11 -Wall -Ofast -g -Wfatal-errors -D_MWAITXINTRIN_H_INCLUDED")

#cuda_add_library(myplugins SHARED yololayer.cu)

cuda_add_library(myplugins SHARED ${PROJECT_SOURCE_DIR}/yolov5.cpp ${PROJECT_SOURCE_DIR}/yololayer.cu ${PROJECT_SOURCE_DIR}/yololayer.h ${PROJECT_SOURCE_DIR}/preprocess.cu )

find_package(OpenCV)

include_directories(${OpenCV_INCLUDE_DIRS})

target_link_libraries(myplugins nvinfer cudart ${OpenCV_LIBS})

cuda_add_executable(yolov5 calibrator.cpp yolov5.cpp preprocess.cu)

#cuda_add_library(myplugins SHARED ${PROJECT_SOURCE_DIR}/yolov5.cpp ${PROJECT_SOURCE_DIR}/yololayer.cu ${PROJECT_SOURCE_DIR}/yololayer.h ${PROJECT_SOURCE_DIR}/preprocess.cu)

target_link_libraries(yolov5 nvinfer)

target_link_libraries(yolov5 cudart)

target_link_libraries(yolov5 myplugins)

target_link_libraries(yolov5 ${OpenCV_LIBS})

if(UNIX)

add_definitions(-O2 -pthread)

endif(UNIX)

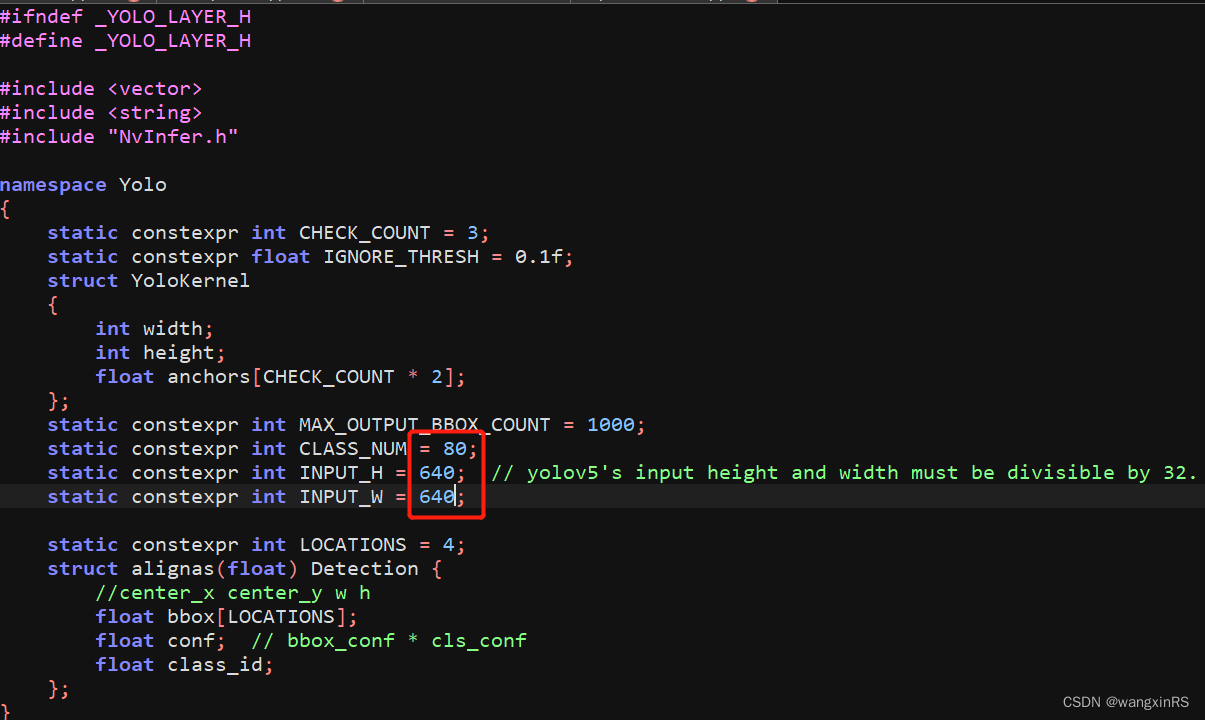

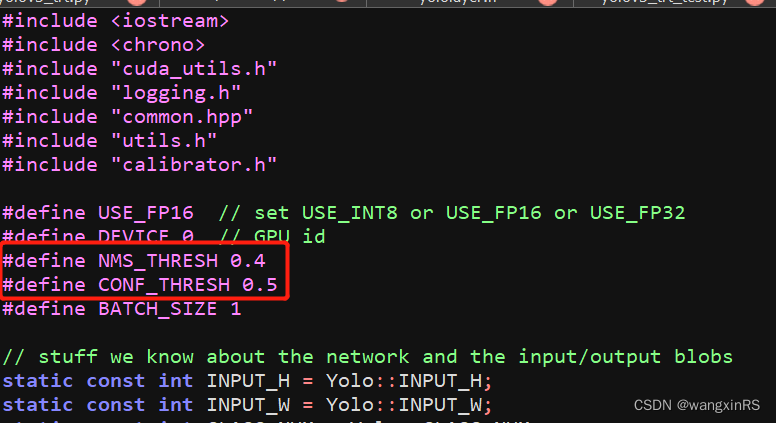

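If you trained the model yourself, adapt yololayer.h and yolov5.cpp to your setup. Usually this means changing the input image width and height and the number of classes, as shown in the screenshots above.

Then run: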

mkdir build

cd build

cmake ..

make # use sudo make if you lack permission

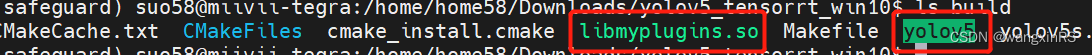

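When the build finishes, the build directory contains the libmyplugins.so library and an executable named yolov5, as shown in the screenshot above.

2.3. Generate the engine file

- Generate the .wts file

Copy gen_wts.py from Yolov5_Tensorrt_Win10 into the yolov5 project directory, then run in a terminal: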

python3 gen_wts.py -w yolov5s.pt -o yolov5s.wts

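-w: path to the trained yolov5 model

-o: path of the output .wts file

Note: this step may try to download a .ttf file. If it does, download the file manually from the printed URL and place it where the message tells you to.

- Generate the engine file

Put the .wts file from the previous step into Yolov5_Tensorrt_Win10/build/, then run in a terminal: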

sudo ./yolov5 -s [.wts] [.engine] [n/s/m/l/x/n6/s6/m6/l6/x6 or c/c6 gd gw]

Taking the yolov5s model as an example:

sudo ./yolov5 -s yolov5s.wts yolov5s.engine s

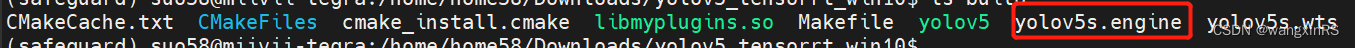

The file yolov5s.engine now appears in the build directory, as shown in the screenshot above.
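The two conversion steps can also be scripted. The following is a minimal sketch, not part of either project: the helper names `wts_command`, `engine_command`, and `convert`, as well as the directory arguments, are hypothetical, while the command-line flags are the ones shown above. It omits `sudo`, so it assumes the build directory is writable by the current user.

```python
import subprocess
from pathlib import Path


def wts_command(weights, wts):
    """Step 1 command: .pt -> .wts (to be run inside the yolov5 checkout)."""
    return ["python3", "gen_wts.py", "-w", str(weights), "-o", str(wts)]


def engine_command(wts, engine, model_type="s"):
    """Step 2 command: .wts -> .engine (to be run inside build/)."""
    return ["./yolov5", "-s", str(wts), str(engine), model_type]


def convert(yolov5_dir, build_dir, weights="yolov5s.pt", model_type="s"):
    """Run both steps; the two directories are assumptions about your layout."""
    yolov5_dir, build_dir = Path(yolov5_dir), Path(build_dir)
    stem = Path(weights).stem
    # Write the .wts file straight into build/ so step 2 can find it.
    wts = build_dir.resolve() / (stem + ".wts")
    # check=True raises CalledProcessError as soon as a step fails.
    subprocess.run(wts_command(weights, wts), cwd=str(yolov5_dir), check=True)
    subprocess.run(engine_command(wts.name, stem + ".engine", model_type),
                   cwd=str(build_dir), check=True)
    return build_dir / (stem + ".engine")
```

Calling `convert("yolov5", "yolov5_tensorrt_win10/build")` would then run both commands in order and return the expected engine path.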

3. Calling the C++ code from Python

Note: yolov5s.engine and libmyplugins.so are both in the build directory.

Create python_trt_test.py in the Yolov5_Tensorrt_Win10 directory with the following content:

from ctypes import CDLL, POINTER, c_int, c_ubyte, c_void_p
import os
import time

import cv2
import numpy as np
import numpy.ctypeslib as npct


class Detector():
    def __init__(self, model_path, dll_path):
        self.yolov5 = CDLL(dll_path)
        # Detect(handle, rows, cols, image data, output buffer of up to 50 boxes x 6 values)
        self.yolov5.Detect.argtypes = [c_void_p, c_int, c_int, POINTER(c_ubyte), npct.ndpointer(dtype=np.float32, ndim=2, shape=(50, 6), flags="C_CONTIGUOUS")]
        # Init returns an opaque pointer; without restype ctypes would treat it as a C int
        self.yolov5.Init.restype = c_void_p
        self.yolov5.Init.argtypes = [c_void_p]
        self.yolov5.cuda_free.argtypes = [c_void_p]
        self.c_point = self.yolov5.Init(model_path)

    def predict(self, img):
        rows, cols = img.shape[0], img.shape[1]
        res_arr = np.zeros((50, 6), dtype=np.float32)
        self.yolov5.Detect(self.c_point, c_int(rows), c_int(cols), img.ctypes.data_as(POINTER(c_ubyte)), res_arr)
        # Drop the all-zero rows, i.e. the unused slots of the result buffer
        self.bbox_array = res_arr[~(res_arr == 0).all(1)]
        return self.bbox_array

    def free(self):
        self.yolov5.cuda_free(self.c_point)


def visualize(img, bbox_array):
    # Each row is x, y, w, h, class id, score
    for temp in bbox_array:
        bbox = [temp[0], temp[1], temp[2], temp[3]]  # xywh
        clas = int(temp[4])
        score = temp[5]
        cv2.rectangle(img, (int(temp[0]), int(temp[1])), (int(temp[0] + temp[2]), int(temp[1] + temp[3])), (105, 237, 249), 2)
        img = cv2.putText(img, "class:" + str(clas) + " " + str(round(score, 2)), (int(temp[0]), int(temp[1]) - 5), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (105, 237, 249), 1)
    return img


det = Detector(model_path=b"./build/yolov5s.engine", dll_path="./build/libmyplugins.so")  # the b"" prefix is required

print("Model loaded!")

# Directory containing this script
curpath = os.path.dirname(os.path.abspath(__file__))

for pic in os.listdir(os.path.join(curpath, 'pictures')):
    img = cv2.imread(os.path.join(curpath, 'pictures', pic))
    curtime = time.time()  # start timing after the image is loaded
    for i in range(20):
        print("Current image: {}, round {}".format(pic, i + 1))
        result = det.predict(img)
    print("\nDetection speed for {}: {} FPS\n".format(pic, int(1 / ((time.time() - curtime) / 20))))
    img = visualize(img, result)

det.free()
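A note on the `Init.restype = c_void_p` line: ctypes assumes a foreign function returns a C `int` unless told otherwise, so a 64-bit handle returned by `Init` would be cut down to 32 bits before it is handed back to `Detect`. The sketch below only illustrates that truncation with ctypes types. The address is made up and no library is loaded.

```python
import ctypes

# A made-up 64-bit address, standing in for the handle returned by Init.
fake_handle = 0x7F1234567890

# Default ctypes behaviour: the return value is read as a C int,
# which keeps only the low 32 bits (ctypes does no overflow checking).
as_int = ctypes.c_int(fake_handle).value

# With restype = c_void_p the full pointer width is preserved.
as_ptr = ctypes.c_void_p(fake_handle).value

print(hex(as_int))  # low 32 bits only
print(hex(as_ptr))  # full address on a 64-bit platform
```

Passing the truncated value back into native code would dereference a wrong address, which typically shows up as a segmentation fault rather than a Python exception.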

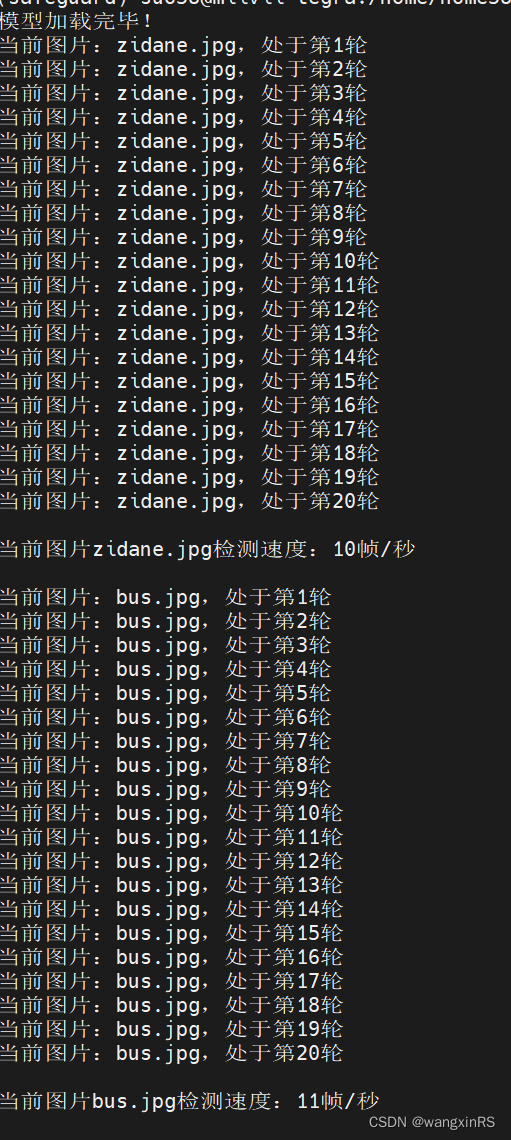

Run python3 python_trt_test.py to get the output shown in the screenshot above.

Full code:

Link: https://pan.baidu.com/s/1sYbkbtCnF4ivwWsCU0TWXQ

Extraction code: 36cb
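The frame rate printed by the script is an average over 20 repeated calls. A minimal sketch of that measurement as a reusable helper follows. `average_fps` is a hypothetical name, and `time.perf_counter` is substituted for `time.time` because it is a monotonic, higher-resolution clock. Anything that is not inference, such as `cv2.imread`, should stay outside the timed callable.

```python
import time


def average_fps(fn, runs=20):
    """Call fn() `runs` times and return the average number of calls per second."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    elapsed = time.perf_counter() - start
    # Guard against a zero interval for extremely fast callables.
    return runs / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    # Stand-in workload; with the detector from the script this would be
    # lambda: det.predict(img)
    fps = average_fps(lambda: time.sleep(0.01), runs=5)
    print("average FPS: {:.1f}".format(fps))
```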

4. Troubleshooting

- opencv2: No such file or directory

fatal error: opencv2/opencv.hpp: No such file or directory #include <opencv2/opencv.hpp>

Fix:

The OpenCV headers sit one level deeper than the compiler expects, under an extra opencv4 folder (/usr/include/opencv4/opencv2). Symlink the opencv2 folder into /usr/include:

sudo ln -s /usr/include/opencv4/opencv2 /usr/include/

- nvcc

Run vim ~/.bashrc, append the following lines at the end of the file, save it, and then run source ~/.bashrc

export PATH=/usr/local/cuda-10.2/bin${PATH:+:${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda-10.2/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

export CUDA_ROOT=/usr/local/cuda
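The `${PATH:+:${PATH}}` expansion appends `:` plus the old value only when `PATH` is already set and non-empty, which avoids a trailing colon (an empty entry that the shell treats as the current directory). A rough Python equivalent of that rule, using the hypothetical helper name `prepend_path`, for illustration only:

```python
def prepend_path(new_dir, current):
    """Mimic NEW${VAR:+:${VAR}}: add ':' + current only if current is non-empty."""
    if current:
        return new_dir + ":" + current
    return new_dir


# Existing value: the new directory goes in front, separated by a colon.
print(prepend_path("/usr/local/cuda-10.2/bin", "/usr/bin:/bin"))
# Empty or unset value: no stray colon is appended.
print(prepend_path("/usr/local/cuda-10.2/bin", ""))
```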