PCA介绍

主成分分析(Principal Component Analysis, 简称PCA)是将多个变量通过线性变换以选出较少个数重要变量(主成分)的一种多元统计分析方法。

PCA通常用于数据降维,其主要思想是将n维特征映射到k维主成分特征上。主成分分析的目标是寻找在最小均方误差意义下最能够代表原始数据的投影方向,即对角化协方差矩阵。

PCA大致思路

1. 样本中心化

算出数据在每一个维度上的平均值,让该维数值减去这个平均值,中心化不会改变求得的新空间,但会减少计算量。

2. 计算协方差矩阵

协方差矩阵的含义:第 i 行k 列的值,表示 i k 对应的两个方向(坐标轴)的协方差。

3. 对协方差矩阵进行对角化即算出协方差矩阵的特征值与特征向量

含义:对角化意味着非对角线元素为0,也就意味着不同坐标轴(不同方向)之间两两互不相关(协方差为0)。而协方差矩阵对角线上元素,就是数据在各个方向上的方差(也是特征值),方差越大意味着数据在这个方向上的散度越大,也就意味着这个方向包含的信息更多。

4.计算投影矩阵

取特征值大的一些特征向量构成一个矩阵 A,这个矩阵是一个投影矩阵:能够把原始空间的数据投影到新的空间。

PCA人脸识别(特征脸法)

1.数据预处理

我们使用YaleB 数据集进行实验,首先将每一张人脸拉成一个列向量,所有人脸构成一个矩阵,每列是一张人脸数据,如下图所示。

2.求平均脸

对每一行都求平均值,得到一个列向量,我们称之为“平均脸”,是所有人脸的平均。下图是 YaleB 数据集求得的一张平均脸。

3.样本中心化

每一个脸都减去平均脸。下图是原始数据在减去平均脸后得到的中心化的数据。

4.求特征向量

对中心化后的样本,求协方差矩阵的特征向量。每一个特征向量都是一张脸,我们称之为“特征脸”(eigenface),原始的人脸可以表示为特征脸的线性组合。下图把特征脸按照特征值大小排列,可以看到特征值大的脸,其包含的有效信息更多。

5.计算投影矩阵

取出特征值较大的特征脸,构成投影矩阵。这个投影矩阵可以把人脸投影到一个子空间上,我们称之为脸空间(face space)。

6.将人脸投影

把某个人的所有脸都投影到脸空间中,求均值,得到脸空间中的一个点,称之为这个人的“特征”(pattern vector)。以此类推,求出每一个人的“特征”,每一个特征代表一个人的脸。

7.人脸分类

现在有了一个待识别的人脸,只要把它也投影成子空间的一个点,看这个点和空间中哪个点(这个点代表某个人的脸)离得近,我们就认为这个脸是某个人的。

matlab代码实现

function[all, face_train, face_test] =DataDivision(file, categoryNum, everySize)

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% 读取数据 %

all_face = load(file + ".mat").ORL4646; % 所有人脸

face_train = []; % 训练人脸

face_test = []; % 测试人脸

all = []; % 所有人脸

% 一共40人,每个人取4张图片作为数据集

for i = 0 : (categoryNum-1)

for j = 1 : 6

tmp = all_face(:, :, i*everySize+j);

new_face = reshape(tmp, size(all_face, 1)*size(all_face, 2), 1); % 将像素矩阵按列拼接

face_train = [face_train new_face]; % 加入到训练集中

all = [all new_face];

end

for k = 7 : everySize

tmp = all_face(:, :, i*everySize+k);

new_face = reshape(tmp, size(all_face, 1)*size(all_face, 2), 1); % 将像素矩阵按列拼接

face_test = [face_test new_face]; % 加入到测试集中

all = [all new_face];

end

end

end

function[A, EigenFaces, eigenVector] = PCAFit(face_train, dimension)

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% 进行训练 %

train_mean = mean(face_train, 2); % 平均人脸

%subplot(1, 1, 1);

%imshow(reshape(train_mean, 50, 40), []); % 显示平均人脸

%title("平均人脸");

X_mean(:, 1:240) = face_train(:, 1:240) - train_mean; % 中心化

L_xx = X_mean*X_mean'; % M*M矩阵

[V, D] = eig(L_xx);

% 将特征向量按照特征值降序

eigenValue = wrev(diag(D));

eigenVector = fliplr(V);

A = eigenVector(:, 1 :dimension); % 获取投影矩阵的转置

EigenFaces = A' * face_train;

end

function[rate] = PCATest(face_test,EigenFaces,A)

TestFaces = A' * face_test;

correct_sum = 0;

for test_index = 1 : size(TestFaces, 2)

test_face = TestFaces(:, test_index);

Euc_dist = [];

for i = 1 : size(EigenFaces, 2)

tmp = ( norm(test_face - EigenFaces(:, i)))^2; % 计算L2范数

Euc_dist = [Euc_dist tmp]; % 用于存放距离

end

[Euc_dist_min , Recognized_index] = min(Euc_dist); % 获得最小距离

% disp([Recognized_index test_index]);

if ceil(Recognized_index / 6) == ceil(test_index / 4) % 进行分类

correct_sum = correct_sum + 1;

end

end

rate = correct_sum / size(TestFaces, 2); % 计算准确率

end

Python代码实现

import scipy.io as scio

import numpy

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

def getdata():#取得需要降维的矩阵

dataFile = 'D:\大学作业\大二下机器学习\算法常用数据\ORL4646.mat'

data = scio.loadmat(dataFile)

datamatrix = data['ORL4646'] # 删除作者版本等信息,只采用人脸数据

datamatrix = numpy.reshape(datamatrix, (46 * 46, 400)) # 将人脸数据转化为二维数组

datamatrix = numpy.array(datamatrix)

datamatrix = numpy.transpose(datamatrix)

matrix = []

for i in range(300):

matrix.append(datamatrix[i])

matrix = numpy.array(matrix)

#print(matrix.shape) #将矩阵打印出来,方便检查

facematrix = []

for i in range(20,25):

facematrix.append(datamatrix[i*10])

facematrix = numpy.array(facematrix)

return matrix,facematrix

def getdataByRecog(eachNum):

dataFile = 'D:\大学作业\大二下机器学习\算法常用数据\ORL4646.mat'

data = scio.loadmat(dataFile)

datamatrix = data['ORL4646'] # 删除作者版本等信息,只采用人脸数据

datamatrix = numpy.reshape(datamatrix, (46 * 46, 400)) #将人脸数据转化为二维数组

datamatrix = numpy.array(datamatrix)

datamatrix = numpy.transpose(datamatrix)

trainMatrix = []

testMatrix = []

for i in range(40): #ORL共40个人

for j in range(10): #每个人10张照片

if j < eachNum: #每个人用n张照片进行训练

trainMatrix.append(datamatrix[i*10 + j])

else: #剩下的进行测试

testMatrix.append(datamatrix[i*10 + j])

trainMatrix = numpy.array(trainMatrix)

testMatrix = numpy.array(testMatrix)

#print(trainMatrix.shape)

#print(testMatrix.shape)

return trainMatrix,testMatrix

def getdataByorl():

dataFile = 'D:\大学作业\大二下机器学习\算法常用数据\ORL4646.mat'

data = scio.loadmat(dataFile)

datamatrix = data['ORL4646'] # 删除作者版本等信息,只采用人脸数据

datamatrix = numpy.reshape(datamatrix, (46 * 46, 400)) # 将人脸数据转化为二维数组

datamatrix = numpy.array(datamatrix)

datamatrix = numpy.transpose(datamatrix)

train_orl = []

view1_orl = []

view2_orl = []

view3_orl = []

for i in range(30):

train_orl.append(datamatrix[i])

for i in range(30,40):

view1_orl.append(datamatrix[i])

for i in range(40,50):

view2_orl.append(datamatrix[i])

for i in range(50,60):

view3_orl.append(datamatrix[i])

return train_orl,view1_orl,view2_orl,view3_orl

def getdatabyYaleB():

dataFile = 'D:\大学作业\大二下机器学习\算法常用数据\YaleB_32x32.mat'

data = scio.loadmat(dataFile)

datamatrix = data['fea']

train_yb = []

view1_yb = []

view2_yb = []

view3_yb = []

for i in range(30):

train_yb.append(datamatrix[i])

for i in range(64, 124):

view1_yb.append(datamatrix[i])

for i in range(128, 188):

view2_yb.append(datamatrix[i])

for i in range(192, 252):

view3_yb.append(datamatrix[i])

return train_yb, view1_yb, view2_yb, view3_yb

def getdataByYale():

dataFile = 'D:\大学作业\大二下机器学习\算法常用数据\Yale5040165.mat'

data = scio.loadmat(dataFile)

datamatrix = data['Yale5040165']

datamatrix = numpy.reshape(datamatrix, (50 * 40, 165)) # 将人脸数据转化为二维数组

datamatrix = numpy.array(datamatrix)

datamatrix = numpy.transpose(datamatrix)

train_y = []

view1_y = []

view2_y = []

view3_y = []

for i in range(30):

train_y.append(datamatrix[i])

for i in range(33, 44):

view1_y.append(datamatrix[i])

for i in range(44, 55):

view2_y.append(datamatrix[i])

for i in range(77, 88):

view3_y.append(datamatrix[i])

return train_y, view1_y, view2_y, view3_y

def pca(matrix,k):

#print(matrix)

matrix = numpy.float32(numpy.mat(matrix))

#print('matrix:{}'.format(matrix.shape))

meanMatrix = numpy.mean(matrix,axis=1) #按行计算平均

#print(meanMatrix)

matrix = matrix - meanMatrix #去平均值

#print("matrix");print(matrix.shape)

covMatrix = numpy.cov(matrix.T,rowvar=1) #协方差矩阵

#print(covMatrix.shape)

eigenvalue,eigenvector = numpy.linalg.eigh(numpy.mat(covMatrix)) #特征值和特征向量

#print("vector");print(eigenvector.shape)

index = numpy.argsort(eigenvalue) #排序

index = index[:-(k+1):-1]

select = eigenvector[:,index] #最大的特征值对应的特征向量

#print("select");print(select.shape)

return meanMatrix,select

def pca_recog(matrix,k):

matrix = numpy.float32(numpy.mat(matrix))

eachMean = []

#eachMean = numpy.array(eachMean)

for i in range(matrix.shape[0]):

eachMean.append(matrix[i].mean(axis=0))

eachMean = numpy.array(eachMean)

eachMean = eachMean.reshape(eachMean.shape[0],eachMean.shape[2])

matrix = matrix.T

meanMatrix = matrix.mean(axis=1) # 按行计算平均

matrix = matrix - meanMatrix # 去平均值

u,sigma,v = numpy.linalg.svd(matrix)

w = u[:,:k]

w = numpy.array(w)

eachMean = numpy.array(eachMean)

return w,eachMean

def rebuild():

matrix, facematrix = getdata()

print(facematrix.shape)

meanMatrix,eigenvector = pca(matrix, 160) # 降维后矩阵用来给出重构图

for j in range(0,5):

plt.figure()

plt.subplot(1,9,1)

plt.imshow(facematrix[j].reshape(46,46),cmap=plt.cm.gray)

plt.axis('off')

#plt.show()

for i in range(1,9):

dimen = eigenvector[:,:i*20]

#print(dimen.shape)

lowMatrix = facematrix[j]*dimen #1,2116 * 2116,d

#print(lowMatrix.shape)

face = lowMatrix*dimen.T #1,d * d*2116

#print(face)

face = numpy.reshape(face,(46,46))

plt.subplot(1,9,i+1)

plt.imshow(face, cmap=plt.cm.gray)

plt.title("d={}".format(i * 20))

plt.axis('off')

#plt.imshow(face, cmap=plt.cm.gray)

plt.show()

def recognize():

eachNum = 5 #每个人用来训练的照片数

trainMatrix,testMatrix = getdataByRecog(eachNum)

print(testMatrix.shape)

trainNum = trainMatrix.shape[0]

testNum = testMatrix.shape[0]

acc = []

dimen = []

for d in range(1,2):

w,eachMean = pca_recog(trainMatrix,60)

#print("each:{}".format(eachMean.shape))

#print("w:{}".format(w.shape))

project = []

for each in eachMean:

tmp = numpy.dot(w.T,each.T)

project.append(tmp.T)

project = numpy.array(project)

#print("project:{}".format(project.shape))

correct = 0

#print("fish");print(fisherface.shape);print(projection.shape)

for i in range(testNum):

tmp = numpy.reshape(testMatrix[i],(2116,1))

test = numpy.dot(w.T , tmp)

#print("test:{}".format(test.shape))

disMatrix = []

for j in range(project.shape[0]):

distance = numpy.linalg.norm(test.T - project[j])

disMatrix.append(distance)

classes = int(disMatrix.index(min(disMatrix))/eachNum) #eachNum张为一类

if classes == int(i/(10-eachNum)): #10-eachNum张为一类

correct += 1

accuracy = float(correct) / testNum

acc.append(accuracy)

print(accuracy)

dimen.append(d*10)

print(accuracy)

print(acc)

print(dimen)

#plt.plot(dimen,acc)

#plt.xlabel("dimen")

#plt.ylabel("accuracy")

#plt.show()

def view(train, view1, view2, view3,num):

meanMatrix,eigenvector = pca(train, 2)

data1x = [];data1y = []

for i in range(num):

lowMatrix = view1[i]*eigenvector

print("lowmatrix: ",lowMatrix.shape)

data1x.append(lowMatrix[0,0])

data1y.append(lowMatrix[0,1])

fig = plt.figure()

ax1 = fig.add_subplot(111)

ax1.scatter(data1x,data1y)

plt.show()

data2x = [];data2y = []

for i in range(num):

lowMatrix = view2[i]*eigenvector

#print(lowMatrix)

data2x.append(lowMatrix[0,0])

data2y.append(lowMatrix[0,1])

fig = plt.figure()

ax1 = fig.add_subplot(111)

ax1.scatter(data2x,data2y)

plt.show()

data3x = [];data3y = []

for i in range(num):

lowMatrix = view3[i]*eigenvector

#print(lowMatrix.shape)

data3x.append(lowMatrix[0,0])

data3y.append(lowMatrix[0,1])

fig = plt.figure()

ax1 = fig.add_subplot(111)

ax1.scatter(data3x,data3y)

plt.show()

meanMatrix, eigenvector = pca(train, 3)

lowMatrix = []

for i in range(num):

lowMatrix.append(view1[i] * eigenvector)

lowMatrix = numpy.array(lowMatrix)

lowMatrix = numpy.reshape(lowMatrix,(num,3))

print(lowMatrix.shape)

fig = plt.figure()

ax = fig.add_subplot(111, projection='3d')

ax.scatter(lowMatrix[:, 0], lowMatrix[:, 1],lowMatrix[:, 2])

ax.set_xlabel('X Label')

ax.set_ylabel('Y Label')

ax.set_zlabel('Z Label')

plt.show()

lowMatrix1 = []

for i in range(num):

lowMatrix1.append(view2[i] * eigenvector)

lowMatrix1 = numpy.array(lowMatrix1)

lowMatrix1 = numpy.reshape(lowMatrix1, (num, 3))

print(lowMatrix1.shape)

fig = plt.figure()

ax = fig.add_subplot(111, projection='3d')

ax.scatter(lowMatrix1[:, 0], lowMatrix1[:, 1], lowMatrix1[:, 2])

ax.set_xlabel('X Label')

ax.set_ylabel('Y Label')

ax.set_zlabel('Z Label')

plt.show()

lowMatrix2 = []

for i in range(num):

lowMatrix2.append(view1[i] * eigenvector)

lowMatrix2 = numpy.array(lowMatrix2)

lowMatrix2 = numpy.reshape(lowMatrix2, (num, 3))

print(lowMatrix2.shape)

fig = plt.figure()

ax = fig.add_subplot(111, projection='3d')

ax.scatter(lowMatrix2[:, 0], lowMatrix2[:, 1], lowMatrix2[:, 2])

ax.set_xlabel('X Label')

ax.set_ylabel('Y Label')

ax.set_zlabel('Z Label')

plt.show()

if __name__ == "__main__":

#rebuild()

recognize()

#train_orl, view1_orl, view2_orl, view3_orl = getdataByorl()

#view(train_orl, view1_orl, view2_orl, view3_orl,10) #orl

#train_yb, view1_yb, view2_yb, view3_yb = getdatabyYaleB()

#view(train_yb, view1_yb, view2_yb, view3_yb,60)

#train_y, view1_y, view2_y, view3_y = getdataByYale()

#view(train_y, view1_y, view2_y, view3_y, 11)

PCA几何解释

找到一组新的坐标轴,或者说是一组新的基(basis),用于表示原来的数据,使得在表示数据时不同轴是不相关的(即协方差为0。

取出其中含有信息较多(即方差较大)的坐标轴(基),构成(span)一个新的空间,舍弃其他维度的信息。

由于新空间的维度小于原来的空间,所以把数据投影到新的空间后,可以大大降低数据的复杂度(虽然会损失少量信息)。

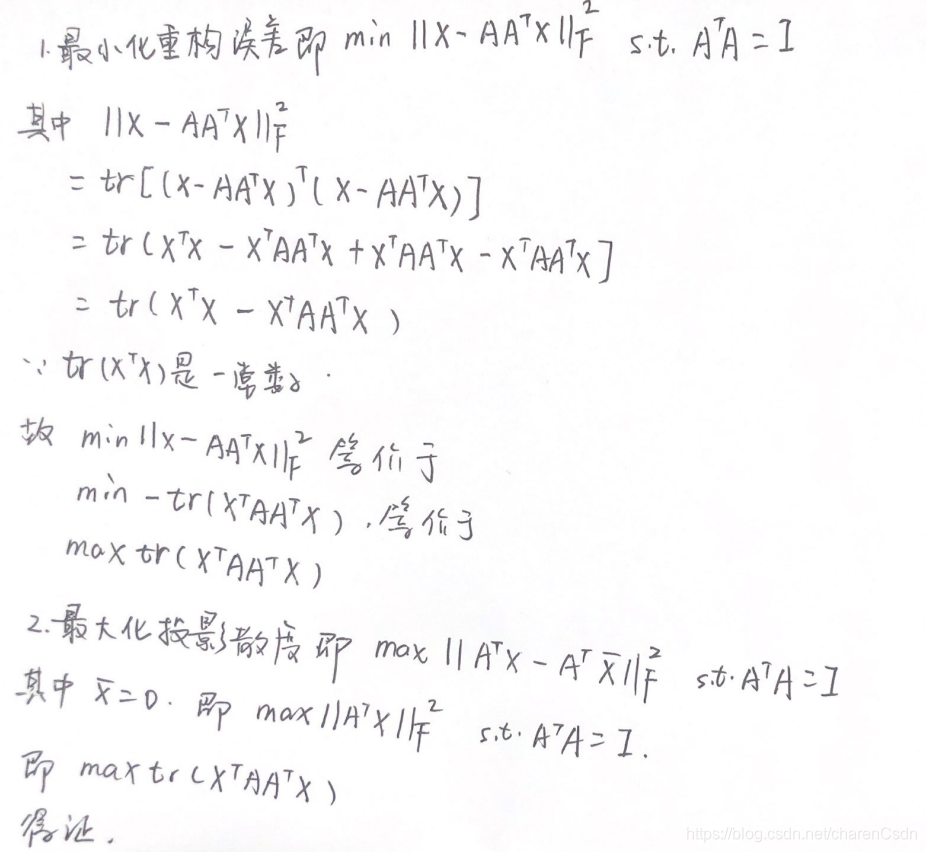

PCA证明最小重构误差和最大散度等价

实验结果

根据不同维度,生成不同的投影矩阵,然后根据投影矩阵将测试集投影到低维空间,然后计算与训练集中的样本的欧式距离,用KNN分类器对其进行分类。