目录

一,准备

1.1代码

SlowFast官网地址

代码下载:

git clone https://github.com/facebookresearch/slowfast

这里建议使用码云来下载,使用上面的那个命令实在太慢,码云下载方法:

git clone 显著提速,解决Github代码拉取速度缓慢问题

下载链接

1.2 环境准备

这里推荐几个网站,租用这些网站的GPU然后跑代码,因为普通的笔记本搭建图像处理的环境会遇到各种各样的问题。在网站上租用GPU可以使用官方搭建好的镜像,如果跑代码或者自己把环境弄乱了,就可以释放实例,重新再来(约等于自己电脑的重装系统,但是这个租用的释放和搭建新镜像速度非常快)

以下是我推荐的几个网站:

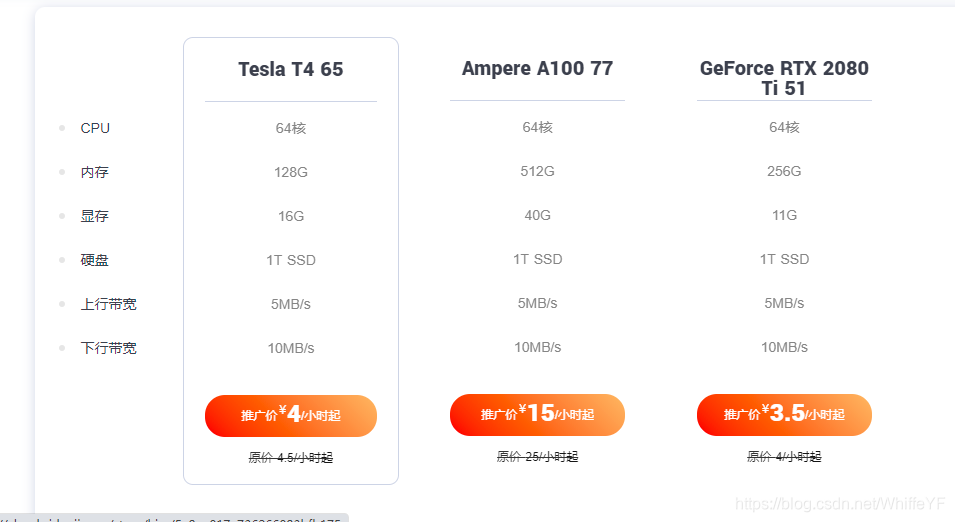

1,极链AI云平台(我用的就是这个,有50元的白嫖额度,我就是靠这50元的额度跑出来slowfast)

2,MistGPU

1.3 搭建镜像

第一步,在极链AI云平台选择一个机子,我选的一般都是4元/小时的。

第二步,选择GPU数量,我们跑自己的视频,一般1个GPU就够了

第三步,实例镜像,官方给的框架是PyTorch,这里也就PyTorch,PyTorch的版本官方给的1.3(当时官方发布的时候,PyTorch最高版本就1.3,但是这里的镜像没有1.3,所以选择一个比它高的1.4版本)。注意,python一定要选3.7版本,python一定要选3.7版本,python一定要选3.7版本,3.6版本我是跑出了问题。CUDA只有10.1可选(这也是官方镜像的好处,在自己电脑上安装CUDA很烦的)

备注:有读者尝试的时候,这里出现问题,这是我2021年2月份做出来的,读者是4月份尝试的,然后报错,这里读者采用了pytorch1.4的版本,cuda10.2的版本。所以如果版本问题的话,尝试把pytorch和cuda版本提高。

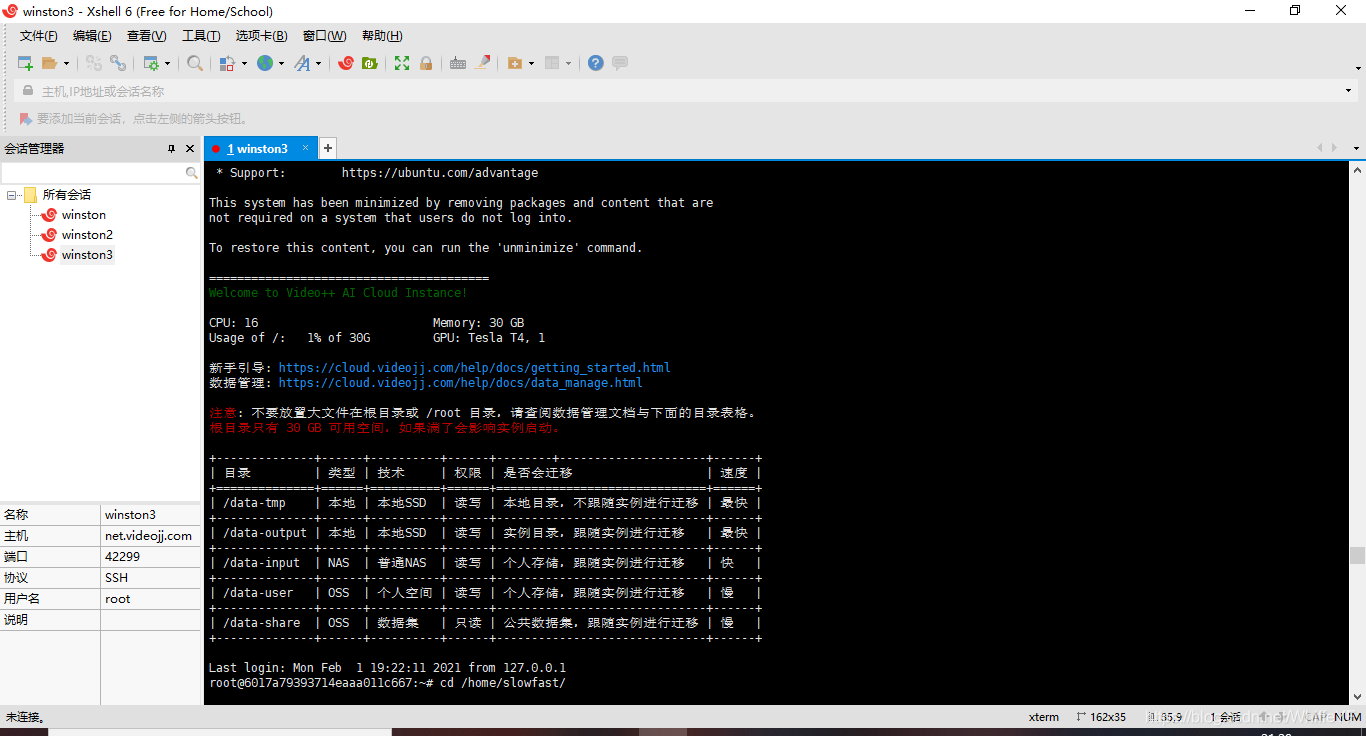

第四步,按照极链AI云平台的手册指引,在Xshell上打开实例。

1.4 配置slowfast环境

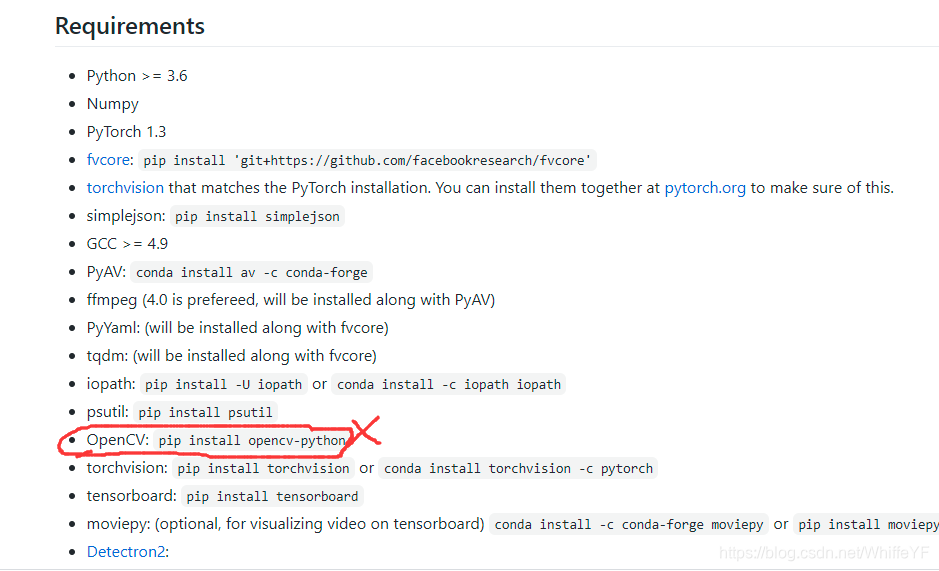

按照官网上的步骤来

Installation

注意,这里的opencv-python就不要执行,因为镜像里自带了opencv-python!

1.5 ava.json

{

"bend/bow (at the waist)": 0, "crawl": 1, "crouch/kneel": 2, "dance": 3, "fall down": 4, "get up": 5, "jump/leap": 6, "lie/sleep": 7, "martial art": 8, "run/jog": 9, "sit": 10, "stand": 11, "swim": 12, "walk": 13, "answer phone": 14, "brush teeth": 15, "carry/hold (an object)": 16, "catch (an object)": 17, "chop": 18, "climb (e.g., a mountain)": 19, "clink glass": 20, "close (e.g., a door, a box)": 21, "cook": 22, "cut": 23, "dig": 24, "dress/put on clothing": 25, "drink": 26, "drive (e.g., a car, a truck)": 27, "eat": 28, "enter": 29, "exit": 30, "extract": 31, "fishing": 32, "hit (an object)": 33, "kick (an object)": 34, "lift/pick up": 35, "listen (e.g., to music)": 36, "open (e.g., a window, a car door)": 37, "paint": 38, "play board game": 39, "play musical instrument": 40, "play with pets": 41, "point to (an object)": 42, "press": 43, "pull (an object)": 44, "push (an object)": 45, "put down": 46, "read": 47, "ride (e.g., a bike, a car, a horse)": 48, "row boat": 49, "sail boat": 50, "shoot": 51, "shovel": 52, "smoke": 53, "stir": 54, "take a photo": 55, "text on/look at a cellphone": 56, "throw": 57, "touch (an object)": 58, "turn (e.g., a screwdriver)": 59, "watch (e.g., TV)": 60, "work on a computer": 61, "write": 62, "fight/hit (a person)": 63, "give/serve (an object) to (a person)": 64, "grab (a person)": 65, "hand clap": 66, "hand shake": 67, "hand wave": 68, "hug (a person)": 69, "kick (a person)": 70, "kiss (a person)": 71, "lift (a person)": 72, "listen to (a person)": 73, "play with kids": 74, "push (another person)": 75, "sing to (e.g., self, a person, a group)": 76, "take (an object) from (a person)": 77, "talk to (e.g., self, a person, a group)": 78, "watch (a person)": 79}

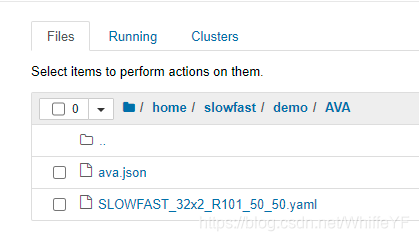

将上面的内容,保存在ava.json里面,然后保存在/home/slowfast/demo/AVA目录下。

1.6 SLOWFAST_32x2_R101_50_50.yaml

在目录:/home/slowfast/demo/AVA中打开SLOWFAST_32x2_R101_50_50.yaml

内容改为如下:

TRAIN:

ENABLE: False

DATASET: ava

BATCH_SIZE: 16

EVAL_PERIOD: 1

CHECKPOINT_PERIOD: 1

AUTO_RESUME: True

CHECKPOINT_FILE_PATH: '/home/slowfast/configs/AVA/c2/SLOWFAST_32x2_R101_50_50.pkl' #path to pretrain model

CHECKPOINT_TYPE: pytorch

DATA:

NUM_FRAMES: 32

SAMPLING_RATE: 2

TRAIN_JITTER_SCALES: [256, 320]

TRAIN_CROP_SIZE: 224

TEST_CROP_SIZE: 256

INPUT_CHANNEL_NUM: [3, 3]

DETECTION:

ENABLE: True

ALIGNED: False

AVA:

BGR: False

DETECTION_SCORE_THRESH: 0.8

TEST_PREDICT_BOX_LISTS: ["person_box_67091280_iou90/ava_detection_val_boxes_and_labels.csv"]

SLOWFAST:

ALPHA: 4

BETA_INV: 8

FUSION_CONV_CHANNEL_RATIO: 2

FUSION_KERNEL_SZ: 5

RESNET:

ZERO_INIT_FINAL_BN: True

WIDTH_PER_GROUP: 64

NUM_GROUPS: 1

DEPTH: 101

TRANS_FUNC: bottleneck_transform

STRIDE_1X1: False

NUM_BLOCK_TEMP_KERNEL: [[3, 3], [4, 4], [6, 6], [3, 3]]

SPATIAL_DILATIONS: [[1, 1], [1, 1], [1, 1], [2, 2]]

SPATIAL_STRIDES: [[1, 1], [2, 2], [2, 2], [1, 1]]

NONLOCAL:

LOCATION: [[[], []], [[], []], [[6, 13, 20], []], [[], []]]

GROUP: [[1, 1], [1, 1], [1, 1], [1, 1]]

INSTANTIATION: dot_product

POOL: [[[2, 2, 2], [2, 2, 2]], [[2, 2, 2], [2, 2, 2]], [[2, 2, 2], [2, 2, 2]], [[2, 2, 2], [2, 2, 2]]]

BN:

USE_PRECISE_STATS: False

NUM_BATCHES_PRECISE: 200

SOLVER:

MOMENTUM: 0.9

WEIGHT_DECAY: 1e-7

OPTIMIZING_METHOD: sgd

MODEL:

NUM_CLASSES: 80

ARCH: slowfast

MODEL_NAME: SlowFast

LOSS_FUNC: bce

DROPOUT_RATE: 0.5

HEAD_ACT: sigmoid

TEST:

ENABLE: False

DATASET: ava

BATCH_SIZE: 8

DATA_LOADER:

NUM_WORKERS: 2

PIN_MEMORY: True

NUM_GPUS: 1

NUM_SHARDS: 1

RNG_SEED: 0

OUTPUT_DIR: .

#TENSORBOARD:

# MODEL_VIS:

# TOPK: 2

DEMO:

ENABLE: True

LABEL_FILE_PATH: "/home/slowfast/demo/AVA/ava.json"

INPUT_VIDEO: "/home/slowfast/Vinput/2.mp4"

OUTPUT_FILE: "/home/slowfast/Voutput/1.mp4"

DETECTRON2_CFG: "COCO-Detection/faster_rcnn_R_50_FPN_3x.yaml"

DETECTRON2_WEIGHTS: detectron2://COCO-Detection/faster_rcnn_R_50_FPN_3x/137849458/model_final_280758.pkl

下面这一行代码存放的是输入视频的位置,当然Vinput这个文件夹是我自己建的

INPUT_VIDEO: "/home/slowfast/Vinput/2.mp4"

下面这一行代码存放的是检测后视频的位置,当然Voutput这个文件夹是我自己建的

OUTPUT_FILE: "/home/slowfast/Voutput/1.mp4"

1.7 SLOWFAST_32x2_R101_50_50 .pkl

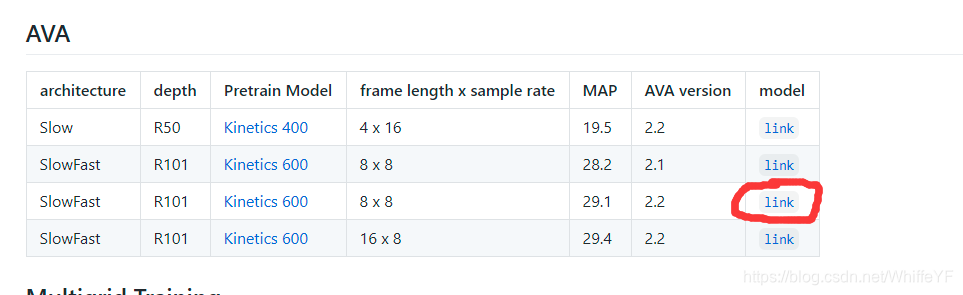

下载页面

下载模型SLOWFAST_32x2_R101_50_50 .pkl 到/home/slowfast/configs/AVA/c2目录下

然后在目录:/home/slowfast/demo/AVA下文件SLOWFAST_32x2_R101_50_50.yaml里修改如下:

TRAIN:

ENABLE: False

DATASET: ava

BATCH_SIZE: 16

EVAL_PERIOD: 1

CHECKPOINT_PERIOD: 1

AUTO_RESUME: True

CHECKPOINT_FILE_PATH: '/home/slowfast/configs/AVA/c2/SLOWFAST_32x2_R101_50_50.pkl' #path to pretrain model

CHECKPOINT_TYPE: pytorch

二,代码运行

在xShell终端中,跳转到以下目录

cd /home/slowfast/

输入运行命令

python tools/run_net.py --cfg demo/AVA/SLOWFAST_32x2_R101_50_50.yaml

最后结果:

最后结果上传到了B站上:视频链接

三 错误解决

from av._core import time_base, pyav_version as version, library_versions

ImportError: libopenh264.so.5: cannot open shared object file: No such file or directory

解决方案:

参考之前写的解决方案链接

直接再终端运行:

conda install x264=='1!152.20180717' ffmpeg=4.0.2 -c conda-forge