1、pandas astype

报错 Cannot convert non-finite values (NA or inf) to intege

解决方法:去掉含有0和na的行

df111_2 = df111_2.drop(df111_2[df111_2["dnum"]==0].index)

df111_2=df111_2[df111_2['dnum'].notna()]

df111_2[“dnum”] = df111_2[“dnum”].astype(int)

去重保留最大值

df.sort_values("Value", ascending = False).drop_duplicates(subset=["Date", "id"], keep="first")

2、hive regexp_extract

正则匹配

参考:https://blog.csdn.net/qq_30331643/article/details/93051421

regexp_extract

语法: regexp_extract(string subject, string pattern, int index)

返回值: string

说明: 将字符串subject按照pattern正则表达式的规则拆分,返回index指定的字符。

第一参数: 要处理的字段

第二参数: 需要匹配的正则表达式

第三个参数:

0是显示与之匹配的整个字符串

1 是显示第一个括号里面的

2 是显示第二个括号里面的字段

select

regexp_extract('x=a3&x=18abc&x=2&y=3&x=4','x=([0-9]+)([a-z]+)',0), -- x=18abc

select

regexp_extract(log_json,'"dnum":"([^"]+)',1) as dnum,

count(*)

from **表*

where imp_date = '20211026'

group by dnum

order by count(*) desc

;

3、pyspark agg、log、to_timestamp

参考:https://www.baidu.com/link?url=EJtscaGCmxoYwZICFtdGFhYxAakHTXXJWPX9ijnYyMRPQ4rBlhZUwjTC2Eng9uQA&wd=&eqid=a6ddd83300034b8900000006617a8bef

import pyspark.sql.functions as F

df1=df.groupby("aid").agg(F.count('finish_rate').alias("count_finish_rate"),F.sum('finish_rate').alias("sum_finish_rate"))

df1=df1.withColumn("avg_finish_rate", F.col("sum_finish_rate")/F.col("count_finish_rate"))

df1=df1.withColumn("aid_score", F.col("avg_finish_rate")*F.log(F.col("count_finish_rate")+1))

to_timestamp可以把字符串时间转化为hive时间格式

import pyspark.sql.functions as F

df1 = df1.withColumn("datetime",F.lit("2021-10-18"))

df1 = df1.withColumn('n_datetime2', F.to_timestamp(F.col('datetime'),"yyyy-MM-dd"))

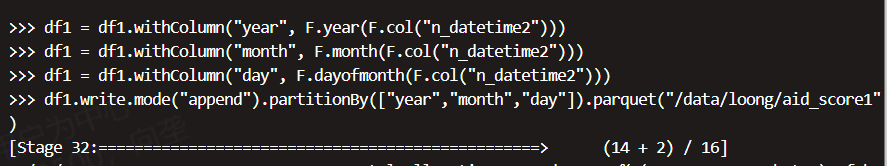

分时间分区保存

import pyspark.sql.functions as F

df1 = df1.withColumn("year", F.year(F.col("n_datetime2")))

df1 = df1.withColumn("month", F.month(F.col("n_datetime2")))

df1 = df1.withColumn("day", F.dayofmonth(F.col("n_datetime2")))

df1.write.mode("append").partitionBy(["year","month","day"]).parquet("/data/loong/aid_score1")

去重保留最大值

df.groupBy("year", "month", "day", "is_night", "dnum", "aid").agg(F.max("finish_rate").alias("finish_rate"),

F.max("played_duration").alias(

"played_duration"))

&&pyspark dataframe多个合并

unionByName

df1 = spark.createDataFrame([[1, 2, 3]], ["col0", "col1", "col2"])

df2 = spark.createDataFrame([[4, 5, 6,7]], ["col1", "col2", "col0","col3"])

df1.unionByName(df2).show()

from functools import reduce

dff=[]

for ii in range(10):

******

*****

dff.append(df1)

merge_df = reduce(lambda x,y:x.union(y),dff)