A deep learning beginner's roadmap, summarized from personal experience (simple and fast)

https://blog.csdn.net/weixin_44414948/article/details/109704871

Deep learning beginner stage two, demo practice:

https://blog.csdn.net/weixin_44414948/article/details/110673660

Demo task:

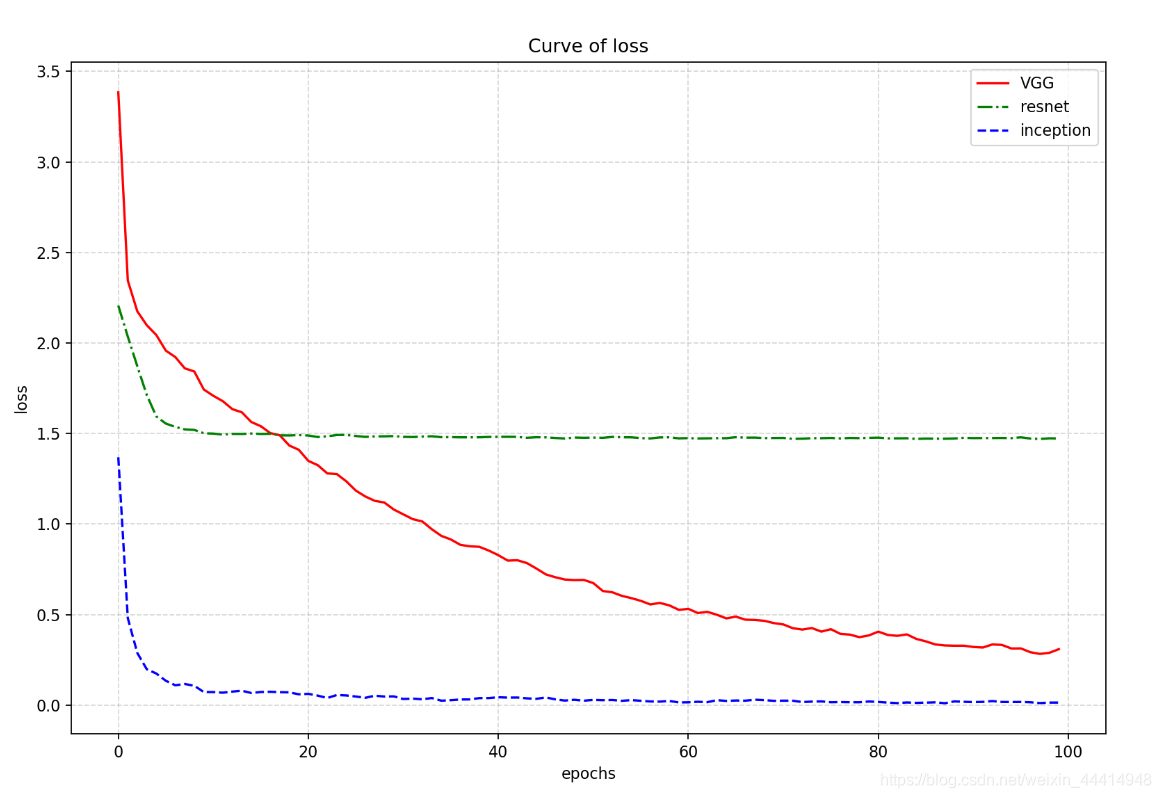

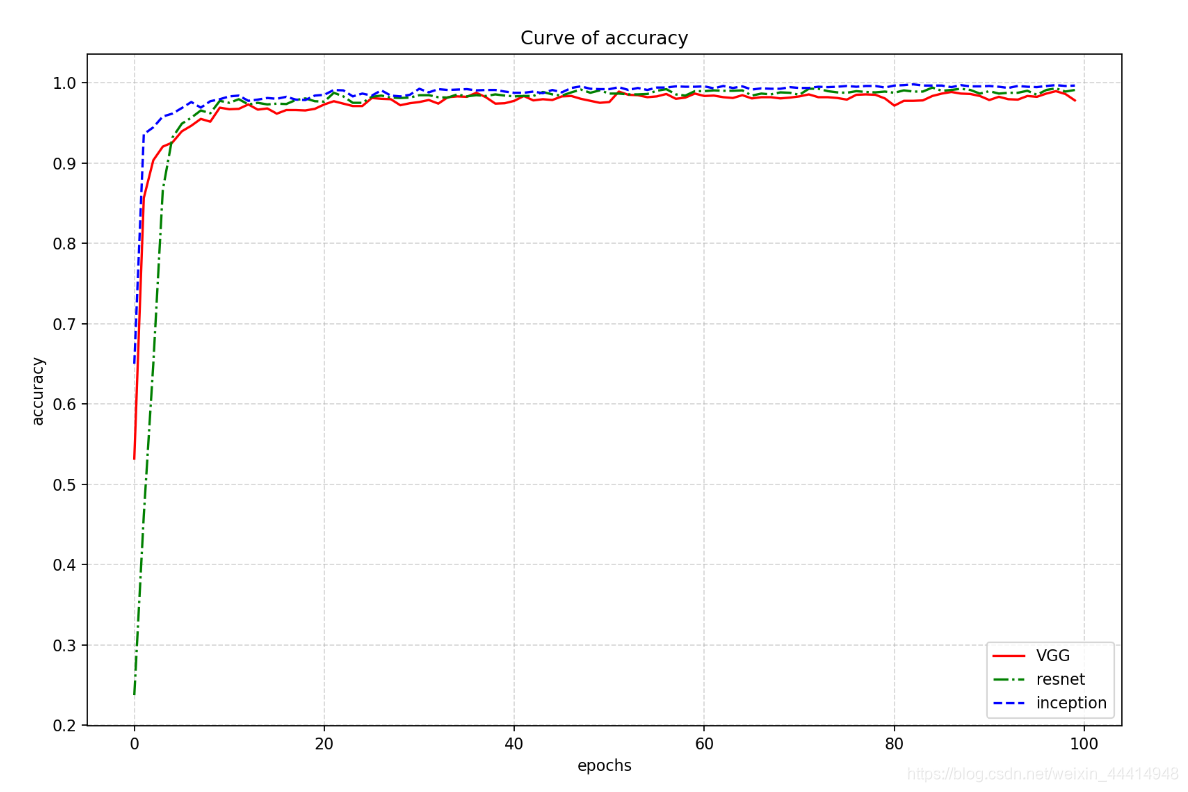

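Use a deep learning framework (TensorFlow 2.0 or later, or PyTorch 1.0 or later) to build VGG, Inception, and ResNet networks.

Dataset: MNIST, with accuracy above 90%.

Additional requirements: a training loss plot (the loss curves of all 3 networks on one canvas) and a training accuracy plot (the acc curves of all 3 networks on one canvas).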
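
Both the 90% target and the acc curves refer to classification accuracy: the fraction of samples whose highest-scoring class matches the label. The following is a minimal pure-Python sketch of that metric, assuming one-hot labels as in the sample code. The helper name `accuracy` and the toy numbers are made up for illustration and are not MNIST results.

```python
def accuracy(one_hot_labels, predicted_scores):
    """Fraction of samples whose highest-scoring class matches the one-hot label."""
    def argmax(values):
        return max(range(len(values)), key=lambda i: values[i])

    correct = sum(
        argmax(label) == argmax(scores)
        for label, scores in zip(one_hot_labels, predicted_scores)
    )
    return correct / len(one_hot_labels)


# Toy 3-class example (made-up numbers, not MNIST results).
labels = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0]]
scores = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.5, 0.3, 0.2], [0.2, 0.6, 0.2]]
print(accuracy(labels, scores))  # 3 of the 4 predictions match their labels
```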

Sample code:

VGG implementation (the history_VGG variable is used later for the combined curve plot)

    import numpy as np
    import tensorflow as tf
    from tensorflow.keras import layers, regularizers

    # Load the MNIST dataset
    (x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()

    # Preprocess: scale pixels to [0, 1] and add a channel axis -> (N, 28, 28, 1)
    x_train, x_test = x_train.astype(np.float32) / 255., x_test.astype(np.float32) / 255.
    x_train, x_test = np.expand_dims(x_train, axis=3), np.expand_dims(x_test, axis=3)

    # Create a 50,000-sample training set, a 10,000-sample validation set
    # and a 10,000-sample test set
    x_val = x_train[-10000:]
    y_val = y_train[-10000:]
    x_train = x_train[:-10000]
    y_train = y_train[:-10000]

    # Convert labels to one-hot format
    y_train = tf.one_hot(y_train, depth=10).numpy()
    y_val = tf.one_hot(y_val, depth=10).numpy()
    y_test = tf.one_hot(y_test, depth=10).numpy()

    # Batch with tf.data.Dataset; repeat() makes each dataset infinite,
    # so explicit step counts are passed to fit() and evaluate() below
    train_dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train)).batch(100).repeat()
    val_dataset = tf.data.Dataset.from_tensor_slices((x_val, y_val)).batch(100).repeat()
    test_dataset = tf.data.Dataset.from_tensor_slices((x_test, y_test)).batch(100).repeat()

    input_shape = (28, 28, 1)
    weight_decay = 0.001
    num_classes = 10

    # VGG-style network: stacked 3x3 convolutions with ReLU, BatchNorm,
    # Dropout and 2x2 max pooling, followed by a dense classifier
    model = tf.keras.Sequential()

    # Stage 1: two 64-filter convolutions
    model.add(layers.Conv2D(64, (3, 3), padding='same',
                            input_shape=input_shape,
                            kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.Dropout(0.3))
    model.add(layers.Conv2D(64, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.MaxPooling2D(pool_size=(2, 2)))

    # Stage 2: two 128-filter convolutions
    model.add(layers.Conv2D(128, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.Dropout(0.4))
    model.add(layers.Conv2D(128, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.MaxPooling2D(pool_size=(2, 2)))

    # Stage 3: three 256-filter convolutions
    model.add(layers.Conv2D(256, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.Dropout(0.4))
    model.add(layers.Conv2D(256, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.Dropout(0.4))
    model.add(layers.Conv2D(256, (3, 3), padding='same', kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.MaxPooling2D(pool_size=(2, 2)))

    # Classifier head
    model.add(layers.Flatten())
    model.add(layers.Dense(512, kernel_regularizer=regularizers.l2(weight_decay)))
    model.add(layers.Activation('relu'))
    model.add(layers.BatchNormalization())
    model.add(layers.Dropout(0.5))
    model.add(layers.Dense(num_classes, activation='softmax'))

    # Set the optimizer, loss function and evaluation metric
    model.compile(optimizer=tf.keras.optimizers.Adam(0.001),
                  loss=tf.keras.losses.CategoricalCrossentropy(),
                  metrics=['acc'])

    # Train
    history_VGG = model.fit(train_dataset, epochs=100, steps_per_epoch=20,
                            validation_data=val_dataset, validation_steps=3)

    # Evaluate on the test set and save the weights
    model.evaluate(test_dataset, steps=100)
    model.save_weights('save_model/VGG_minst/VGG_mnist_weights.ckpt')
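
Because the datasets are built with `.repeat()`, they never run out, so the step arguments decide how much data each phase actually sees. Below is a small arithmetic sketch of what the settings above imply. The helper `training_budget` is a hypothetical name used only for illustration; the numbers are taken from the script (batch size 100, `steps_per_epoch=20`, `epochs=100`, `validation_steps=3`, `steps=100`).

```python
def training_budget(num_samples, batch_size, steps_per_epoch, epochs):
    """Summarize how many samples training consumes for the given settings."""
    samples_per_epoch = batch_size * steps_per_epoch
    total_samples = samples_per_epoch * epochs
    return {
        "samples_per_epoch": samples_per_epoch,
        "total_samples": total_samples,
        "passes_over_data": total_samples / num_samples,
    }


budget = training_budget(num_samples=50_000, batch_size=100,
                         steps_per_epoch=20, epochs=100)
print(budget)

# Validation and test coverage follow from batch size * number of steps.
val_samples_per_epoch = 100 * 3   # validation_steps=3
test_samples_evaluated = 100 * 100  # evaluate(..., steps=100)
print(val_samples_per_epoch, test_samples_evaluated)
```

Under these settings one "epoch" is 2,000 images rather than a full pass, the 100 epochs together amount to 4 passes over the 50,000 training images, and `steps=100` covers the 10,000 test images exactly once.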

———————————————————————————————————————————

ResNet implementation (the **history_resnet** variable is used later for the combined curve plot)

    import numpy as np
    import tensorflow as tf
    from tensorflow import keras

    # Load the MNIST dataset
    (x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()

    # Preprocess: scale pixels to [0, 1] and add a channel axis -> (N, 28, 28, 1)
    x_train, x_test = x_train.astype(np.float32) / 255., x_test.astype(np.float32) / 255.
    x_train, x_test = np.expand_dims(x_train, axis=3), np.expand_dims(x_test, axis=3)

    # Create a 50,000-sample training set, a 10,000-sample validation set
    # and a 10,000-sample test set
    x_val = x_train[-10000:]
    y_val = y_train[-10000:]
    x_train = x_train[:-10000]
    y_train = y_train[:-10000]

    # Convert labels to one-hot format
    y_train = tf.one_hot(y_train, depth=10).numpy()
    y_val = tf.one_hot(y_val, depth=10).numpy()
    y_test = tf.one_hot(y_test, depth=10).numpy()

    # Batch with tf.data.Dataset
    train_dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train)).batch(100).repeat()
    val_dataset = tf.data.Dataset.from_tensor_slices((x_val, y_val)).batch(100).repeat()
    test_dataset = tf.data.Dataset.from_tensor_slices((x_test, y_test)).batch(100).repeat()


    # 3x3 convolution (the kernel size can be overridden, e.g. 1x1 for shortcuts)
    def conv3x3(channels, stride=1, kernel=(3, 3)):
        return keras.layers.Conv2D(channels, kernel, strides=stride, padding='same',
                                   use_bias=False,
                                   kernel_initializer=tf.random_normal_initializer())


    class ResnetBlock(keras.Model):
        """Pre-activation residual block: BN -> ReLU -> conv, twice, plus a shortcut."""

        def __init__(self, channels, strides=1, residual_path=False):
            super(ResnetBlock, self).__init__()
            self.channels = channels
            self.strides = strides
            self.residual_path = residual_path
            self.conv1 = conv3x3(channels, strides)
            self.bn1 = keras.layers.BatchNormalization()
            self.conv2 = conv3x3(channels)
            self.bn2 = keras.layers.BatchNormalization()
            if residual_path:
                self.down_conv = conv3x3(channels, strides, kernel=(1, 1))
                self.down_bn = tf.keras.layers.BatchNormalization()

        def call(self, inputs, training=None):
            residual = inputs
            x = self.bn1(inputs, training=training)
            x = tf.nn.relu(x)
            x = self.conv1(x)
            x = self.bn2(x, training=training)
            x = tf.nn.relu(x)
            x = self.conv2(x)
            # Projection shortcut: a 1x1 convolution matches the shape of x
            # when the stride or the channel count changes
            if self.residual_path:
                residual = self.down_bn(inputs, training=training)
                residual = tf.nn.relu(residual)
                residual = self.down_conv(residual)
            x = x + residual
            return x


    class ResNet(keras.Model):
        def __init__(self, block_list, num_classes, initial_filters=16, **kwargs):
            super(ResNet, self).__init__(**kwargs)
            self.num_blocks = len(block_list)
            self.block_list = block_list
            self.in_channels = initial_filters
            self.out_channels = initial_filters
            self.conv_initial = conv3x3(self.out_channels)
            self.blocks = keras.models.Sequential(name='dynamic-blocks')
            # Build all the blocks
            for block_id in range(len(block_list)):
                for layer_id in range(block_list[block_id]):
                    if block_id != 0 and layer_id == 0:
                        # First layer of every later stage downsamples by 2
                        block = ResnetBlock(self.out_channels, strides=2, residual_path=True)
                    else:
                        residual_path = self.in_channels != self.out_channels
                        block = ResnetBlock(self.out_channels, residual_path=residual_path)
                    self.in_channels = self.out_channels
                    self.blocks.add(block)
                # Double the channel count for the next stage
                self.out_channels *= 2
            self.final_bn = keras.layers.BatchNormalization()
            self.avg_pool = keras.layers.GlobalAveragePooling2D()
            # Output raw logits: the loss below is configured with from_logits=True,
            # so no softmax activation is applied here
            self.fc = keras.layers.Dense(num_classes)

        def call(self, inputs, training=None):
            out = self.conv_initial(inputs)
            out = self.blocks(out, training=training)
            out = self.final_bn(out, training=training)
            out = tf.nn.relu(out)
            out = self.avg_pool(out)
            out = self.fc(out)
            return out


    # Network configuration
    resnet_model = ResNet([2, 2, 2], 10)
    resnet_model.compile(optimizer=keras.optimizers.Adam(0.001),
                         loss=keras.losses.CategoricalCrossentropy(from_logits=True),
                         metrics=['acc'])
    resnet_model.build(input_shape=(None, 28, 28, 1))

    # Print the network parameters
    print("Number of variables in the model :", len(resnet_model.variables))
    resnet_model.summary()

    # Train
    history_resnet = resnet_model.fit(train_dataset, epochs=100, steps_per_epoch=20,
                                      validation_data=val_dataset, validation_steps=3)

    # Evaluate on the test set and save the weights
    resnet_model.evaluate(test_dataset, steps=100)
    resnet_model.save_weights('save_model/resnet_mnist/resnet_mnist_weights.ckpt')
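
The loss above is created with `from_logits=True`, which means it applies softmax internally and expects the last layer to emit raw scores. The pure-Python sketch below (not Keras code; `softmax` and `cross_entropy_from_logits` are illustrative helpers, and the logits are made up) shows what happens if probabilities are fed in instead, so that softmax is effectively applied twice: even a confident, correct prediction keeps a large loss.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities."""
    shifted = [s - max(scores) for s in scores]  # subtract the max for numerical stability
    exps = [math.exp(s) for s in shifted]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy_from_logits(logits, true_class):
    """Cross-entropy that applies softmax itself, like a from-logits loss."""
    return -math.log(softmax(logits)[true_class])


logits = [5.0, 0.0, 0.0]  # made-up scores: confident and correct for class 0
true_class = 0

loss_from_logits = cross_entropy_from_logits(logits, true_class)
# Passing probabilities instead of logits applies softmax a second time.
loss_double_softmax = cross_entropy_from_logits(softmax(logits), true_class)

print(loss_from_logits, loss_double_softmax)
```

Since probabilities lie in [0, 1], a second softmax squeezes them toward a uniform distribution, which puts a floor under the loss and weakens the training signal. Pairing a softmax output layer with `from_logits=False`, or a linear output layer with `from_logits=True`, avoids this.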

———————————————————————————————————————————

Inception implementation (the history_inception variable is used later for the combined curve plot)

    import numpy as np
    import tensorflow as tf
    from tensorflow import keras

    # Load the MNIST dataset
    (x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()

    # Preprocess: scale pixels to [0, 1] and add a channel axis -> (N, 28, 28, 1)
    x_train, x_test = x_train.astype(np.float32) / 255., x_test.astype(np.float32) / 255.
    x_train, x_test = np.expand_dims(x_train, axis=3), np.expand_dims(x_test, axis=3)

    # Create a 50,000-sample training set, a 10,000-sample validation set
    # and a 10,000-sample test set
    x_val = x_train[-10000:]
    y_val = y_train[-10000:]
    x_train = x_train[:-10000]
    y_train = y_train[:-10000]

    # Convert labels to one-hot format
    y_train = tf.one_hot(y_train, depth=10).numpy()
    y_val = tf.one_hot(y_val, depth=10).numpy()
    y_test = tf.one_hot(y_test, depth=10).numpy()

    # Batch with tf.data.Dataset
    train_dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train)).batch(100).repeat()
    val_dataset = tf.data.Dataset.from_tensor_slices((x_val, y_val)).batch(100).repeat()
    test_dataset = tf.data.Dataset.from_tensor_slices((x_test, y_test)).batch(100).repeat()


    class ConvBNRelu(keras.Model):
        """Conv2D -> BatchNormalization -> ReLU."""

        def __init__(self, ch, kernelsz=3, strides=1, padding='same'):
            super(ConvBNRelu, self).__init__()
            self.model = keras.models.Sequential([
                keras.layers.Conv2D(ch, kernelsz, strides=strides, padding=padding),
                keras.layers.BatchNormalization(),
                keras.layers.ReLU()
            ])

        def call(self, x, training=None):
            x = self.model(x, training=training)
            return x


    class InceptionBlk(keras.Model):
        """Four parallel branches, each producing ch channels, concatenated on the channel axis."""

        def __init__(self, ch, strides=1):
            super(InceptionBlk, self).__init__()
            self.ch = ch
            self.strides = strides
            self.conv1 = ConvBNRelu(ch, strides=strides)
            self.conv2 = ConvBNRelu(ch, kernelsz=3, strides=strides)
            self.conv3_1 = ConvBNRelu(ch, kernelsz=3, strides=strides)
            self.conv3_2 = ConvBNRelu(ch, kernelsz=3, strides=1)
            self.pool = keras.layers.MaxPooling2D(3, strides=1, padding='same')
            self.pool_conv = ConvBNRelu(ch, strides=strides)

        def call(self, x, training=None):
            x1 = self.conv1(x, training=training)
            x2 = self.conv2(x, training=training)
            x3_1 = self.conv3_1(x, training=training)
            x3_2 = self.conv3_2(x3_1, training=training)
            x4 = self.pool(x)
            x4 = self.pool_conv(x4, training=training)
            # Concatenate along the channel axis
            x = tf.concat([x1, x2, x3_2, x4], axis=3)
            return x


    class Inception(keras.Model):
        def __init__(self, num_layers, num_classes, init_ch=16, **kwargs):
            super(Inception, self).__init__(**kwargs)
            self.in_channels = init_ch
            self.out_channels = init_ch
            self.num_layers = num_layers
            self.init_ch = init_ch
            self.conv1 = ConvBNRelu(init_ch)
            self.blocks = keras.models.Sequential(name='dynamic-blocks')
            for block_id in range(num_layers):
                for layer_id in range(2):
                    if layer_id == 0:
                        # First block of each stage downsamples by 2
                        block = InceptionBlk(self.out_channels, strides=2)
                    else:
                        block = InceptionBlk(self.out_channels, strides=1)
                    self.blocks.add(block)
                # Enlarge out_channels per stage
                self.out_channels *= 2
            self.avg_pool = keras.layers.GlobalAveragePooling2D()
            # Outputs raw logits; the loss below uses from_logits=True
            self.fc = keras.layers.Dense(num_classes)

        def call(self, x, training=None):
            out = self.conv1(x, training=training)
            out = self.blocks(out, training=training)
            out = self.avg_pool(out)
            out = self.fc(out)
            return out


    # Network configuration
    model_inception = Inception(2, 10)
    model_inception.compile(optimizer=keras.optimizers.Adam(0.001),
                            loss=keras.losses.CategoricalCrossentropy(from_logits=True),
                            metrics=['acc'])
    model_inception.build(input_shape=(None, 28, 28, 1))

    # Print the network parameters
    model_inception.summary()

    # Train
    history_inception = model_inception.fit(train_dataset, epochs=100, steps_per_epoch=20,
                                            validation_data=val_dataset, validation_steps=3)

    # Evaluate on the test set and save the weights
    model_inception.evaluate(test_dataset, steps=100)
    model_inception.save_weights('save_model/inception_mnist/inception_mnist_weights.ckpt')
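
To see how the feature maps evolve in the Inception model above, the shape bookkeeping can be traced by hand. The sketch below is plain Python, not part of the model; `inception_shapes` is a hypothetical helper. It assumes what the code above implies: `'same'` padding (so a stride of `s` gives `ceil(size / s)`), four branches of `ch` channels per block, two blocks per stage with the first one using stride 2, and `ch` doubling after each stage.

```python
import math


def inception_shapes(num_layers=2, init_ch=16, input_size=28,
                     blocks_per_stage=2, branches=4):
    """Return the (height, width, channels) output shape of every InceptionBlk."""
    size = input_size  # the stem ConvBNRelu uses stride 1, so the size is unchanged
    ch = init_ch
    shapes = []
    for stage in range(num_layers):
        for layer in range(blocks_per_stage):
            stride = 2 if layer == 0 else 1
            size = math.ceil(size / stride)   # 'same' padding
            shapes.append((size, size, branches * ch))  # branches are concatenated
        ch *= 2  # channels per branch double after each stage
    return shapes


for shape in inception_shapes():
    print(shape)
```

For `Inception(2, 10)` this gives 14x14 maps with 64 channels after the first stage and 7x7 maps with 128 channels after the second, so global average pooling hands a 128-dimensional vector to the final Dense layer.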

———————————————————————————————————————————


Curve plotting

    import os

    import matplotlib.pyplot as plt

    # Plot the loss curves of the three networks on one canvas
    plt.figure(figsize=(12, 8), dpi=160)
    plt.plot(history_VGG.epoch, history_VGG.history.get('loss'), color='r', label='VGG')
    plt.plot(history_resnet.epoch, history_resnet.history.get('loss'), color='g', linestyle='-.', label='resnet')
    plt.plot(history_inception.epoch, history_inception.history.get('loss'), color='b', linestyle='--', label='inception')

    # Show the legend (default loc='best')
    plt.legend()

    # Add a grid: dashed lines, alpha sets the transparency
    plt.grid(True, linestyle='--', alpha=0.5)

    # Add axis labels and a title
    plt.xlabel('epochs')
    plt.ylabel('loss')
    plt.title('Curve of loss')

    # Make sure the output directory exists before saving
    os.makedirs('./save_png', exist_ok=True)
    plt.savefig('./save_png/loss_curve.png')
    plt.show()
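
The task also asks for an accuracy plot, while the code above only draws the loss. Here is one way this could be sketched, assuming the history key is `'acc'` (matching `metrics=['acc']` in the compile calls). The helpers `collect_curves` and `plot_curves` are hypothetical names, and the numbers in `histories` are placeholders rather than real training results; in practice they would be `history_VGG.history`, `history_resnet.history` and `history_inception.history`.

```python
def collect_curves(histories, key):
    """Map each network name to (epochs, values) for one metric key."""
    curves = {}
    for name, history in histories.items():
        values = history[key]
        curves[name] = (list(range(len(values))), list(values))
    return curves


def plot_curves(curves, ylabel, title, path=None):
    """Draw all curves on one canvas, mirroring the loss plot's styling."""
    # Imported here so collect_curves stays usable without matplotlib installed.
    import matplotlib.pyplot as plt

    plt.figure(figsize=(12, 8))
    for name, (epochs, values) in curves.items():
        plt.plot(epochs, values, label=name)
    plt.legend()
    plt.grid(True, linestyle='--', alpha=0.5)
    plt.xlabel('epochs')
    plt.ylabel(ylabel)
    plt.title(title)
    if path is not None:
        plt.savefig(path)
    plt.show()


# Placeholder values for illustration only (not real results).
histories = {
    'VGG': {'acc': [0.50, 0.80, 0.90]},
    'resnet': {'acc': [0.40, 0.70, 0.85]},
    'inception': {'acc': [0.45, 0.75, 0.88]},
}
acc_curves = collect_curves(histories, 'acc')
# Uncomment to draw the figure:
# plot_curves(acc_curves, ylabel='acc', title='Curve of acc')
```

Passing `'val_acc'` or `'val_loss'` as the key would plot the validation curves the same way, since Keras records those alongside the training metrics when validation data is supplied.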

The resulting combined curves are shown in the figures below:

Figure: acc curves

Figure: loss curves