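Partitioner (Partitioning)

Partitioning writes results to different partitions according to some condition, for example writing results to separate files by the province a phone number belongs to.

Default Partitioner

The default Partitioner derives the partition number from the hash code of the key.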

public class HashPartitioner<K, V> extends Partitioner<K, V> {

public int getPartition(K key, V value, int numReduceTasks) {

return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;

}

}
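The bitwise AND with Integer.MAX_VALUE in the formula above matters because hashCode() can be negative, and Java's % operator keeps the sign of the dividend. The plain-Java sketch below mirrors the arithmetic without any Hadoop dependency; the class name HashPartitionDemo and the sample hash value are made up for illustration.

```java
// Illustrative sketch only: mirrors the HashPartitioner formula without Hadoop.
class HashPartitionDemo {
    // Same arithmetic as HashPartitioner.getPartition, applied to a raw hash code.
    static int partitionFor(int hashCode, int numReduceTasks) {
        // Clearing the sign bit guarantees a non-negative dividend.
        return (hashCode & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        int negativeHash = Integer.valueOf(-7).hashCode(); // an Integer's hash code is its value
        System.out.println("plain modulo:  " + (negativeHash % 3));
        System.out.println("masked modulo: " + partitionFor(negativeHash, 3));
    }
}
```

The plain modulo yields a negative number, which would not be a valid partition, while the masked version always falls in the range 0 to numReduceTasks - 1.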

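Custom Partitioner

- Define a class that extends Partitioner and override getPartition(). The generic type parameters of Partitioner are the key and value types of the Map output. Partition numbers must start at 0.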

public class BeanPartitioner extends Partitioner<Text, Student> {

@Override

public int getPartition(Text key, Student value, int numPartitions) {

int partition = 0; // placeholder: compute the partition number from key and value here

return partition;

}

}

- In the driver class, set the custom Partitioner and the number of ReduceTasks (NumReduceTasks).

job.setPartitionerClass(BeanPartitioner.class);

job.setNumReduceTasks(2);

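If the number of ReduceTasks is greater than the number of partitions, the extra ReduceTasks receive no data and produce empty output files part-r-000xx.

If 1 < the number of ReduceTasks < the number of partitions, records assigned to a partition number that has no matching ReduceTask have nowhere to go, and the job fails with an exception.

If the number of ReduceTasks is 1, then no matter how many partitions the MapTasks would produce, all data goes to that single ReduceTask and the job yields one result file, part-r-00000.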
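The three cases above can be modeled with a small plain-Java sketch. This is a simplified illustration, not Hadoop's actual source code; the class PartitionCheckDemo, the method route, and the exception type are assumptions chosen for the example.

```java
// Simplified model of how a partition number relates to the number of ReduceTasks.
// Illustration only: names and the exception type are hypothetical.
class PartitionCheckDemo {
    // Returns the index of the ReduceTask that receives a record.
    static int route(int partition, int numReduceTasks) {
        if (numReduceTasks == 1) {
            return 0; // a single ReduceTask receives everything
        }
        if (partition < 0 || partition >= numReduceTasks) {
            // No ReduceTask exists for this partition number.
            throw new IllegalStateException("Illegal partition: " + partition);
        }
        return partition;
    }

    public static void main(String[] args) {
        // More ReduceTasks than partitions in use: works, unused tasks write empty files.
        System.out.println(route(1, 3));
        // One ReduceTask: every record lands in task 0.
        System.out.println(route(4, 1));
        // Fewer ReduceTasks than partitions: the record has nowhere to go.
        try {
            route(4, 3);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```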

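WritableComparable Sorting

Both MapTask and ReduceTask sort data by key. This is Hadoop's default behavior: the data of every application is sorted, whether or not the logic needs it. The default order is lexicographic, and the sort is implemented with quicksort.

A MapTask places its output temporarily in a circular buffer. When buffer usage reaches a threshold, the data in the buffer is sorted with quicksort and the sorted data is spilled to disk. Once all input has been processed, the MapTask merge-sorts all the spill files on disk.

A ReduceTask copies the relevant data files from each MapTask. A file larger than a certain threshold is written to disk; otherwise it is kept in memory. If the size or number of files in memory exceeds a threshold, they are merged and spilled to disk. If the number of files on disk reaches a threshold, they are merge-sorted into a larger file. After all data has been copied, the ReduceTask performs one final merge sort over all the data in memory and on disk.

The following examples all use the file below (name, Chinese score, math score, English score):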
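The "sort each spill, then merge the sorted spills" idea can be sketched in plain Java. This is only a model of the technique: the class SpillMergeDemo is hypothetical, in-memory lists stand in for spill files, and Collections.sort stands in for the in-memory quicksort.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.PriorityQueue;

// Illustration only: lists play the role of sorted spill files.
class SpillMergeDemo {
    // Merge several already-sorted runs into one sorted list using a min-heap.
    static List<String> mergeSortedRuns(List<List<String>> runs) {
        // Each heap entry is {runIndex, positionInRun}, ordered by the element it points to.
        PriorityQueue<int[]> heap = new PriorityQueue<>(
            (a, b) -> runs.get(a[0]).get(a[1]).compareTo(runs.get(b[0]).get(b[1])));
        for (int i = 0; i < runs.size(); i++) {
            if (!runs.get(i).isEmpty()) {
                heap.add(new int[] {i, 0});
            }
        }
        List<String> merged = new ArrayList<>();
        while (!heap.isEmpty()) {
            int[] head = heap.poll();
            List<String> run = runs.get(head[0]);
            merged.add(run.get(head[1]));
            // Advance within the run that just supplied the smallest element.
            if (head[1] + 1 < run.size()) {
                heap.add(new int[] {head[0], head[1] + 1});
            }
        }
        return merged;
    }

    public static void main(String[] args) {
        // Two "spills": each buffer is sorted on its own before being written out.
        List<String> spill1 = new ArrayList<>(Arrays.asList("Luke", "Jayden", "Madge"));
        List<String> spill2 = new ArrayList<>(Arrays.asList("Chloe", "Oliver", "Anthony"));
        Collections.sort(spill1);
        Collections.sort(spill2);
        System.out.println(mergeSortedRuns(Arrays.asList(spill1, spill2)));
    }
}
```

Because every run is already sorted, the merge only ever compares the current head of each run, which is why merging many spill files is cheap compared with re-sorting all the data.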

Luke 70 64 75

Jayden 79 66 92

Madge 92 63 99

Chloe 98 54 59

Oliver 52 70 76

Anthony 98 89 88

Ben 96 91 94

Jack 65 79 67

Rose 68 85 92

Tim 67 68 79

Donald 91 85 94

Smith 94 95 50

Brown 96 73 63

Taylor 91 80 78

Abby 80 75 65

Tom 76 84 92

Black 75 79 90

Michelle 56 71 70

Pony 96 98 88

Catherine 82 69 54

Lucy 72 59 52

Emma 79 79 79

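Total Sort

Implement the WritableComparable interface and its compareTo() method. The example below sorts students by total score in descending order: the Map phase emits the Student class as the key, and MapReduce then sorts the records by it.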

public class Student implements WritableComparable<Student>{

private int chinese;

private int math;

private int english;

private int sum;

public Student() {

}

public int getSum() {

return sum;

}

public void setGrade(int chinese, int math, int english) {

this.chinese = chinese;

this.math = math;

this.english = english;

this.sum = chinese + math + english;

}

@Override

public String toString() {

return "chinese=" + chinese + ",math=" + math + ",english=" + english + ",sum=" + sum;

}

// Serialization method

public void write(DataOutput out) throws IOException {

out.writeInt(chinese);

out.writeInt(math);

out.writeInt(english);

out.writeInt(sum);

}

// Deserialization method: must read fields in the same order the serialization method wrote them

public void readFields(DataInput in) throws IOException {

chinese = in.readInt();

math = in.readInt();

english = in.readInt();

sum = in.readInt();

}

public int compareTo(Student o) {

return Integer.compare(o.sum, this.sum);

}

}
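The rule that readFields() must read fields in the same order write() wrote them can be checked without Hadoop, since both methods only rely on java.io.DataOutput and java.io.DataInput. The sketch below uses a hypothetical stand-in class, ScoreRecord, with the same fields as Student; the roundTrip helper is an assumption added for the demonstration.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical stand-in for Student, without the Hadoop interfaces.
class ScoreRecord {
    int chinese;
    int math;
    int english;
    int sum;

    void setGrade(int chinese, int math, int english) {
        this.chinese = chinese;
        this.math = math;
        this.english = english;
        this.sum = chinese + math + english;
    }

    // Write the fields in a fixed order.
    void write(DataOutput out) throws IOException {
        out.writeInt(chinese);
        out.writeInt(math);
        out.writeInt(english);
        out.writeInt(sum);
    }

    // Read the fields back in exactly the same order.
    void readFields(DataInput in) throws IOException {
        chinese = in.readInt();
        math = in.readInt();
        english = in.readInt();
        sum = in.readInt();
    }

    // Serialize to a byte array and deserialize into a fresh object.
    static ScoreRecord roundTrip(ScoreRecord original) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            original.write(new DataOutputStream(bytes));
            ScoreRecord copy = new ScoreRecord();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        ScoreRecord luke = new ScoreRecord();
        luke.setGrade(70, 64, 75);
        ScoreRecord copy = roundTrip(luke);
        System.out.println(copy.chinese + " " + copy.math + " " + copy.english + " " + copy.sum);
    }
}
```

If readFields() read the integers in a different order, the round trip would still succeed without an error but the fields would be silently swapped, which is why the ordering rule matters.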

public class BeanWritableMapper extends Mapper<LongWritable, Text, Student, Text> {

Student k = new Student();

Text v = new Text();

protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, Student, Text>.Context context)

throws IOException, InterruptedException {

String[] info = value.toString().split(" ");

v.set(info[0]);

int chinese = Integer.parseInt(info[1]);

int math = Integer.parseInt(info[2]);

int english = Integer.parseInt(info[3]);

k.setGrade(chinese, math, english);

context.write(k, v);

}

}

public class BeanWritableReducer extends Reducer<Student, Text, Text, Student> {

Text key = new Text();

@Override

protected void reduce(Student student, Iterable<Text> values, Reducer<Student, Text, Text, Student>.Context context)

throws IOException, InterruptedException {

for (Text name : values) {

// Several students may share the same total score, so write each one out in a loop

context.write(name, student);

}

}

}

public class BeanWritableDriver {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

args = new String[] {

"e:/grade.txt", "e:/output"};

Configuration conf = new Configuration();

Job job = Job.getInstance(conf);

job.setJarByClass(BeanWritableDriver.class);

job.setMapperClass(BeanWritableMapper.class);

job.setReducerClass(BeanWritableReducer.class);

job.setMapOutputKeyClass(Student.class);

job.setMapOutputValueClass(Text.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Student.class);

FileInputFormat.setInputPaths(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

job.waitForCompletion(true);

}

}

Partitioned Sort

Store the totals in two output files and sort within each partition. Create a partitioner class that extends Partitioner:

public class BeanPartitioner extends Partitioner<Student, Text> {

@Override

public int getPartition(Student key, Text value, int numPartitions) {

int sum = key.getSum();

if (sum >= 200) {

return 0;

}else {

return 1;

}

}

}

Add the following code to the driver class:

job.setPartitionerClass(BeanPartitioner.class);

job.setNumReduceTasks(2);
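What the two output files should contain can be previewed with a plain-Java sketch that applies the same rule (total of 200 or more goes to partition 0, everything else to partition 1) and then sorts each partition by total in descending order. The class PartitionedSortDemo and its methods are hypothetical, and only five students from the sample file are used.

```java
import java.util.ArrayList;
import java.util.List;

// Illustration only: simulates partitioning plus per-partition sorting in memory.
class PartitionedSortDemo {
    // Same rule as BeanPartitioner above.
    static int partitionFor(int sum) {
        return sum >= 200 ? 0 : 1;
    }

    // Returns one list of "name sum" lines per partition, each sorted by sum descending.
    static List<List<String>> partitionAndSort(String[] names, int[] sums) {
        List<List<Integer>> buckets = new ArrayList<>();
        buckets.add(new ArrayList<>());
        buckets.add(new ArrayList<>());
        for (int i = 0; i < names.length; i++) {
            buckets.get(partitionFor(sums[i])).add(i);
        }
        List<List<String>> result = new ArrayList<>();
        for (List<Integer> bucket : buckets) {
            // Same ordering as Student.compareTo: larger totals first.
            bucket.sort((a, b) -> Integer.compare(sums[b], sums[a]));
            List<String> lines = new ArrayList<>();
            for (int i : bucket) {
                lines.add(names[i] + " " + sums[i]);
            }
            result.add(lines);
        }
        return result;
    }

    public static void main(String[] args) {
        String[] names = {"Luke", "Chloe", "Oliver", "Lucy", "Pony"};
        int[] sums = {209, 211, 198, 183, 282}; // totals computed from the sample file
        List<List<String>> partitions = partitionAndSort(names, sums);
        System.out.println("partition 0: " + partitions.get(0));
        System.out.println("partition 1: " + partitions.get(1));
    }
}
```

Each partition is sorted independently, so the result is ordered within a file but not across files.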

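Secondary Grouping (GroupingComparator)

A GroupingComparator groups the data in the Reduce phase by one or more fields. It runs before reduce() is called.

The goal below is to list the most expensive item in each order. The input columns are order ID, item name, and item price: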

3 shampoo 25

1 novel 70

2 phone 3999

1 desk 94

3 noodles 20

2 ipad 4988

1 notebook 20

2 computer 5999

3 clothes 111

1 pen 4

3 pizza 60

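Using an Order bean (order ID plus item price) as the key, all order records read in the Map phase are sorted by order ID in ascending order and, for equal IDs, by price in descending order, and are then sent to Reduce.

On the Reduce side, a GroupingComparator treats all key-value pairs with the same order ID as one group, so the first record of each group is the most expensive item.

In other words, because the key is an Order bean sorted by price in descending order within each order ID, the maximum comes first. When reduce() is called, keys that the GroupingComparator considers equal are passed in as a single group, so writing the key once yields the maximum for each order.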
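The sort-then-group reasoning above can be traced with a plain-Java simulation on the sample data. This is a sketch of the idea rather than of Hadoop's internals; the class TopPricePerOrderDemo and its nested Item class are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustration only: simulates "sort by (id asc, price desc), then take the first of each group".
class TopPricePerOrderDemo {
    static final class Item {
        final int id;
        final String name;
        final int price;

        Item(int id, String name, int price) {
            this.id = id;
            this.name = name;
            this.price = price;
        }
    }

    static List<String> topPerOrder(List<Item> items) {
        List<Item> sorted = new ArrayList<>(items);
        // Sort phase: order ID ascending, then price descending.
        sorted.sort((a, b) -> a.id != b.id
            ? Integer.compare(a.id, b.id)
            : Integer.compare(b.price, a.price));
        List<String> result = new ArrayList<>();
        Item previous = null;
        for (Item item : sorted) {
            // Grouping phase: consecutive records with the same ID form one group,
            // and only the first record of each group is kept.
            if (previous == null || previous.id != item.id) {
                result.add(item.id + " " + item.name + " " + item.price);
            }
            previous = item;
        }
        return result;
    }

    public static void main(String[] args) {
        List<Item> items = Arrays.asList(
            new Item(3, "shampoo", 25), new Item(1, "novel", 70), new Item(2, "phone", 3999),
            new Item(1, "desk", 94), new Item(3, "noodles", 20), new Item(2, "ipad", 4988),
            new Item(1, "notebook", 20), new Item(2, "computer", 5999), new Item(3, "clothes", 111),
            new Item(1, "pen", 4), new Item(3, "pizza", 60));
        for (String line : topPerOrder(items)) {
            System.out.println(line);
        }
    }
}
```

For the sample data this keeps desk for order 1, computer for order 2, and clothes for order 3.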

The Order class

public class Order implements WritableComparable<Order>{

private int id;

private String name;

private int price;

public Order() {

}

public int getId() {

return id; }

public void setId(int id) {

this.id = id; }

public String getName() {

return name; }

public void setName(String name) {

this.name = name; }

public int getPrice() {

return price;}

public void setPrice(int price) {

this.price = price;}

@Override

public void write(DataOutput out) throws IOException {

out.writeInt(id);

out.writeUTF(name);

out.writeInt(price);

}

@Override

public void readFields(DataInput in) throws IOException {

id = in.readInt();

name = in.readUTF();

price = in.readInt();

}

@Override

public int compareTo(Order o) {

if (this.id > o.id) {

return 1;

} else if (this.id < o.id) {

return -1;

} else {

return -Integer.compare(this.price, o.price);

}

}

@Override

public String toString() {

return "Order " + id + ": name=" + name + ", price=" + price;

}

}

GroupingComparator

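- Extend WritableComparator and override the compare() method.

- Add a constructor that passes the class being compared to the parent class: super(Order.class, true); The second argument, true, tells WritableComparator to create key instances for comparison.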

public class GroupingComparator extends WritableComparator{

protected GroupingComparator() {

super(Order.class, true);

}

@Override

public int compare(WritableComparable a, WritableComparable b) {

Order aOrder = (Order) a;

Order bOrder = (Order) b;

if (aOrder.getId() > bOrder.getId()) {

return 1;

} else if (aOrder.getId() < bOrder.getId()) {

return -1;

} else {

return 0;

}

}

}

public class GroupingComparatorMapper extends Mapper<LongWritable, Text, Order, NullWritable>{

Order order = new Order();

@Override

protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, Order, NullWritable>.Context context)

throws IOException, InterruptedException {

String[] splits = value.toString().split(" ");

order.setId(Integer.parseInt(splits[0]));

order.setName(splits[1]);

order.setPrice(Integer.parseInt(splits[2]));

context.write(order, NullWritable.get());

}

}

public class GroupingComparatorReducer extends Reducer<Order, NullWritable, Order, NullWritable>{

@Override

protected void reduce(Order key, Iterable<NullWritable> values,

Reducer<Order, NullWritable, Order, NullWritable>.Context context) throws IOException, InterruptedException {

context.write(key, NullWritable.get());

}

}

In the driver class, set job.setGroupingComparatorClass(GroupingComparator.class);

public class GroupingComparatorDriver {

public static void main(String[] args) throws IllegalArgumentException, IOException, ClassNotFoundException, InterruptedException {

args = new String[] {

"e:/order.txt", "e:/output"};

Configuration conf = new Configuration();

Job job = Job.getInstance(conf);

job.setJarByClass(GroupingComparatorDriver.class);

job.setMapperClass(GroupingComparatorMapper.class);

job.setReducerClass(GroupingComparatorReducer.class);

job.setMapOutputKeyClass(Order.class);

job.setMapOutputValueClass(NullWritable.class);

job.setOutputKeyClass(Order.class);

job.setOutputValueClass(NullWritable.class);

FileInputFormat.setInputPaths(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

// Set the grouping comparator

job.setGroupingComparatorClass(GroupingComparator.class);

boolean result = job.waitForCompletion(true);

System.exit(result ? 0 : 1);

}

}

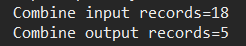

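Combiner

The Combiner is a MapReduce component in addition to the Mapper and the Reducer.

A Combiner's parent class is Reducer. The difference is where it runs: a Combiner runs on the node of each MapTask and locally aggregates that MapTask's output to reduce network traffic, whereas the Reducer receives and aggregates the output of all Mappers globally.

A Combiner may only be used when it does not change the final business result (it suits operations such as summing and counting, but not averaging). Its input key-value types must match the Mapper's output types, and its output key-value types must match the Reducer's input types.

Approach 1:

Create a class that extends Reducer, implement the aggregation in it, and call setCombinerClass in the driver class.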
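Why summing is safe for a Combiner while averaging is not can be shown with a small plain-Java calculation. The class CombinerSafetyDemo and the sample numbers are made up for illustration; each inner array stands for the values one MapTask emits for the same key.

```java
// Illustration only: compares combining partial sums with combining partial averages.
class CombinerSafetyDemo {
    static int sum(int[] values) {
        int total = 0;
        for (int v : values) {
            total += v;
        }
        return total;
    }

    // Sum of per-MapTask sums: equals the global sum.
    static int sumOfPartialSums(int[][] parts) {
        int total = 0;
        for (int[] part : parts) {
            total += sum(part);
        }
        return total;
    }

    // Average of per-MapTask averages: generally NOT the global average.
    static double averageOfPartialAverages(int[][] parts) {
        double total = 0;
        for (int[] part : parts) {
            total += (double) sum(part) / part.length;
        }
        return total / parts.length;
    }

    // The correct global average over all values.
    static double trueAverage(int[][] parts) {
        int count = 0;
        for (int[] part : parts) {
            count += part.length;
        }
        return (double) sumOfPartialSums(parts) / count;
    }

    public static void main(String[] args) {
        int[][] parts = {{3, 5, 7}, {2, 6}}; // values from two hypothetical MapTasks
        System.out.println("sum of partial sums:         " + sumOfPartialSums(parts));
        System.out.println("average of partial averages: " + averageOfPartialAverages(parts));
        System.out.println("true average:                " + trueAverage(parts));
    }
}
```

The partial sums add up to the same total as summing everything at once, but the average of the two partial averages differs from the true average because the MapTasks contributed different numbers of values.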

public class WordCountCombiner extends Reducer<Text, IntWritable, Text, IntWritable>{

IntWritable result = new IntWritable(1);

@Override

protected void reduce(Text key, Iterable<IntWritable> values,

Reducer<Text, IntWritable, Text, IntWritable>.Context context) throws IOException, InterruptedException {

int sum = 0;

for (IntWritable value : values) {

sum += value.get();

}

result.set(sum);

context.write(key, result);

}

}

job.setCombinerClass(WordCountCombiner.class);

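Approach 2:

Add job.setCombinerClass(WordCountReducer.class); directly in the driver class. This works here because the Combiner and the Reducer implement the same logic, so their code is identical.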