单变量时间序列预测

数据类型:单列

import numpy

import matplotlib.pyplot as plt

from keras.models import Sequential

from keras.layers import Dense

from keras.layers import LSTM

from keras.models import Sequential, load_model

dataset = df.values

# 将整型变为float

dataset = dataset.astype('float32')

from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler(feature_range=(0, 1))

dataset = scaler.fit_transform(dataset)

train_size = int(len(dataset) * 0.65)

trainlist = dataset[:train_size]

testlist = dataset[train_size:]

def create_dataset(dataset, look_back):

#这里的look_back与timestep相同

dataX, dataY = [], []

for i in range(len(dataset)-look_back-1):

a = dataset[i:(i+look_back)]

dataX.append(a)

dataY.append(dataset[i + look_back])

return numpy.array(dataX),numpy.array(dataY)

#训练数据太少 look_back并不能过大

look_back = 1

trainX,trainY = create_dataset(trainlist,look_back)

testX,testY = create_dataset(testlist,look_back)

trainX = numpy.reshape(trainX, (trainX.shape[0], trainX.shape[1], 1))

testX = numpy.reshape(testX, (testX.shape[0], testX.shape[1] ,1 ))

# create and fit the LSTM network

model = Sequential()

model.add(LSTM(4, input_shape=(None,1)))

model.add(Dense(1))

model.compile(loss='mean_squared_error', optimizer='adam')

model.fit(trainX, trainY, epochs=100, batch_size=1, verbose=2)

# model.save(os.path.join("DATA","Test" + ".h5"))

# make predictions

#模型验证

#model = load_model(os.path.join("DATA","Test" + ".h5"))

trainPredict = model.predict(trainX)

testPredict = model.predict(testX)

#反归一化

trainPredict = scaler.inverse_transform(trainPredict)

trainY = scaler.inverse_transform(trainY)

testPredict = scaler.inverse_transform(testPredict)

testY = scaler.inverse_transform(testY)

plt.plot(trainY)

plt.plot(trainPredict[1:])

plt.show()s

plt.plot(testY)

plt.plot(testPredict[1:])

plt.show()

预测未来数据方法

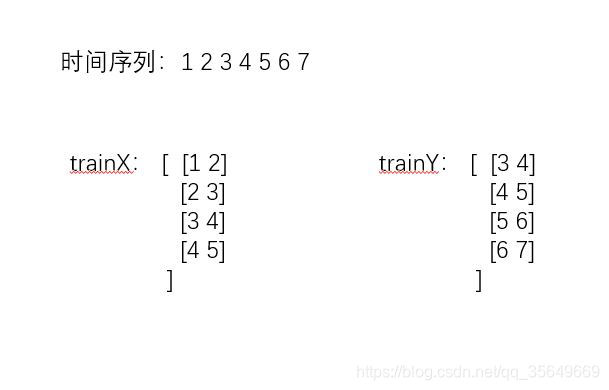

.单维单步(使用前n(2,代码演示为3)步预测后一步)

#对全部数据进行训练

import numpy

import matplotlib.pyplot as plt

from keras.models import Sequential

from keras.layers import Dense

from keras.layers import LSTM

from keras.models import Sequential, load_model

dataset = df.values

# 将整型变为float

dataset = dataset.astype('float32')

from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler(feature_range=(0, 1))

dataset = scaler.fit_transform(dataset)

def create_dataset(dataset, look_back):

#这里的look_back与timestep相同

dataX, dataY = [], []

for i in range(len(dataset)-look_back-1):

a = dataset[i:(i+look_back)]

dataX.append(a)

dataY.append(dataset[i + look_back])

return numpy.array(dataX),numpy.array(dataY)

#训练数据太少 look_back并不能过大

look_back = 1

trainX,trainY = create_dataset(dataset,look_back)

trainX = numpy.reshape(trainX, (trainX.shape[0], trainX.shape[1], 1))

# create and fit the LSTM network

model = Sequential()

model.add(LSTM(2, input_shape=(None,1)))

model.add(Dense(1))

model.compile(loss='mse', optimizer='adam')

model.fit(trainX, trainY, epochs=100, batch_size=1, verbose=2)

######################滚动预测########

predict_xlist = []#添加预测x列表

predict_y = []#添加预测y列表

timesteps=1

length=12

predict_xlist.extend(dataset[dataset.shape[0]-timesteps:dataset.shape[0],0].tolist())#已经存在的最后timesteps个数据添加进列表,预测新值(比如已经有的数据从1,2,3到288。现在要预测后面的数据,所以将216到288的72个数据添加到列表中,预测新的值即288以后的数据)

while len(predict_y) < length:

predictx = np.array(predict_xlist[-timesteps:])#从最新的predict_xlist取出timesteps个数据,预测新的predict_steps个数据(因为每次预测的y会添加到predict_xlist列表中,为了预测将来的值,所以每次构造的x要取这个列表中最后的timesteps个数据词啊性)

predictx = np.reshape(predictx,(1,timesteps,1))#变换格式,适应LSTM模型

#print("predictx"),print(predictx),print(predictx.shape)

#预测新值

lstm_predict = model.predict(predictx)

#predict_list.append(train_predict)#新值y添加进列表,做x

#滚动预测

#print("lstm_predict"),print(lstm_predict[0])

predict_xlist.extend(lstm_predict[0])#将新预测出来的predict_steps个数据,加入predict_xlist列表,用于下次预测

# invert

lstm_predict = scaler.inverse_transform(lstm_predict)

predict_y.extend(lstm_predict[0])#预测的结果y,每次预测的12个数据,添加进去,直到预测288个为止

单维多步(使用前两步预测后两步)

from numpy import array

# split a univariate sequence into samples

def split_sequence(sequence, n_steps_in, n_steps_out):

X, y = list(), list()

for i in range(len(sequence)):

# find the end of this pattern

end_ix = i + n_steps_in

out_end_ix = end_ix + n_steps_out

# check if we are beyond the sequence

if out_end_ix > len(sequence):

break

# gather input and output parts of the pattern

seq_x, seq_y = sequence[i:end_ix], sequence[end_ix:out_end_ix]

X.append(seq_x)

y.append(seq_y)

return array(X), array(y)

raw_seq = [10, 20, 30, 40, 50, 60, 70, 80, 90]

# 也就是用之前的三步,预测之后的2步

n_steps_in, n_steps_out = 3, 2

X, y = split_sequence(raw_seq, n_steps_in, n_steps_out)

for i in range(len(X)):

print(X[i], y[i])

'''

[10 20 30] [40 50]

[20 30 40] [50 60]

[30 40 50] [60 70]

[40 50 60] [70 80]

[50 60 70] [80 90]

'''

# reshape from [samples, timesteps] into [samples, timesteps, features]

n_features = 1

X = X.reshape((X.shape[0], X.shape[1], n_features))

# define model

model = Sequential()

model.add(LSTM(100, activation='relu', return_sequences=True, input_shape=(n_steps_in, n_features)))

model.add(LSTM(100, activation='relu'))

# 和之前单个步不同点在于 这一层的神经元=n_steps_out

model.add(Dense(n_steps_out))

model.compile(optimizer='adam', loss='mse')

model.fit(X, y, epochs=50, verbose=0)

# demonstrate prediction

x_input = array([70, 80, 90])

x_input = x_input.reshape((1, n_steps_in, n_features))

yhat = model.predict(x_input, verbose=0)

print(yhat) # [[107.168686 118.79841 ]]

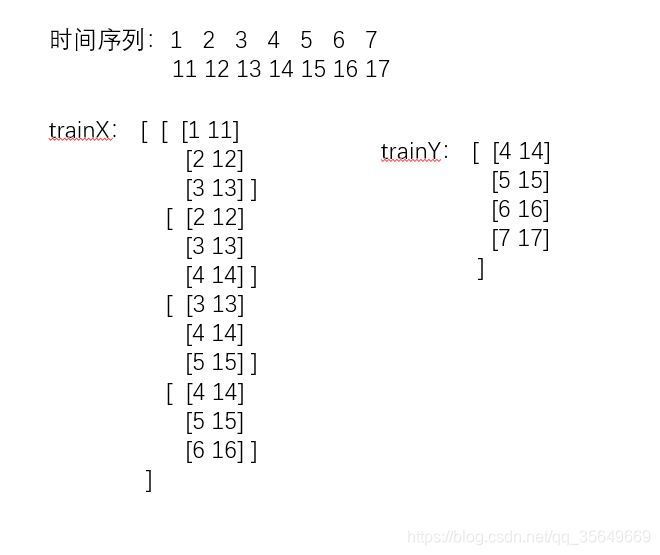

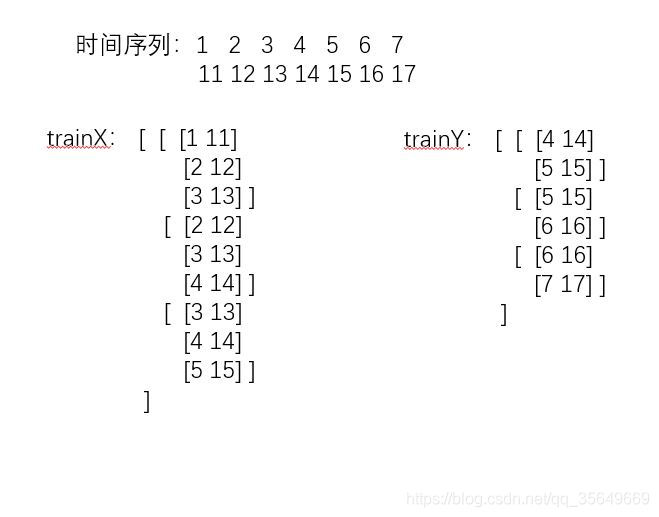

多变量时间序列预测

#######################################多变量,多步骤预测

from numpy import array

from numpy import hstack

from keras.models import Sequential

from keras.layers import LSTM

from keras.layers import Dense

# split a multivariate sequence into samples

def split_sequences(sequences, n_steps_in, n_steps_out):

X, y = list(), list()

for i in range(len(sequences)):

# find the end of this pattern

end_ix = i + n_steps_in

out_end_ix = end_ix + n_steps_out-1

# check if we are beyond the dataset

if out_end_ix > len(sequences):

break

# gather input and output parts of the pattern

seq_x, seq_y = sequences[i:end_ix, :-1], sequences[end_ix-1:out_end_ix, -1]

X.append(seq_x)

y.append(seq_y)

return array(X), array(y)

# define input sequence

in_seq1 = array([10, 20, 30, 40, 50, 60, 70, 80, 90])

in_seq2 = array([15, 25, 35, 45, 55, 65, 75, 85, 95])

out_seq = array([in_seq1[i]+in_seq2[i] for i in range(len(in_seq1))])

# convert to [rows, columns] structure

in_seq1 = in_seq1.reshape((len(in_seq1), 1))

in_seq2 = in_seq2.reshape((len(in_seq2), 1))

out_seq = out_seq.reshape((len(out_seq), 1))

# horizontally stack columns

dataset = hstack((in_seq1, in_seq2, out_seq))

# choose a number of time steps

n_steps_in, n_steps_out = 3, 2

# covert into input/output

X, y = split_sequences(dataset, n_steps_in, n_steps_out)

# the dataset knows the number of features, e.g. 2

n_features = X.shape[2]

# define model

model = Sequential()

model.add(LSTM(100, activation='relu', return_sequences=True, input_shape=(n_steps_in, n_features)))

model.add(LSTM(100, activation='relu'))

model.add(Dense(n_steps_out))

model.compile(optimizer='adam', loss='mse')

# fit model

model.fit(X, y, epochs=200, verbose=0)

# demonstrate prediction

x_input = array([[70, 75], [80, 85], [90, 95]])

x_input = x_input.reshape((1, n_steps_in, n_features))

yhat = model.predict(x_input, verbose=0)

print(yhat)

############################################################多变量,多对多

'''

[[10 15 25]

[20 25 45]

[30 35 65]] [[ 40 45 85]

[ 50 55 105]]-----y(输出为2*3维)

[[20 25 45]

[30 35 65]

[40 45 85]] [[ 50 55 105]

[ 60 65 125]]-----y

[[ 30 35 65]

[ 40 45 85]

[ 50 55 105]] [[ 60 65 125]

[ 70 75 145]]

[[ 40 45 85]

[ 50 55 105]

[ 60 65 125]] [[ 70 75 145]

[ 80 85 165]]

[[ 50 55 105]

[ 60 65 125]

[ 70 75 145]] [[ 80 85 165]

[ 90 95 185]]

'''

from numpy import array

from numpy import hstack

from keras.models import Sequential

from keras.layers import LSTM

from keras.layers import Dense

from keras.layers import RepeatVector

from keras.layers import TimeDistributed

# split a multivariate sequence into samples

def split_sequences(sequences, n_steps_in, n_steps_out):

X, y = list(), list()

for i in range(len(sequences)):

# find the end of this pattern

end_ix = i + n_steps_in

out_end_ix = end_ix + n_steps_out

# check if we are beyond the dataset

if out_end_ix > len(sequences):

break

# gather input and output parts of the pattern

seq_x, seq_y = sequences[i:end_ix, :], sequences[end_ix:out_end_ix, :]

X.append(seq_x)

y.append(seq_y)

return array(X), array(y)

# define input sequence

in_seq1 = array([10, 20, 30, 40, 50, 60, 70, 80, 90])

in_seq2 = array([15, 25, 35, 45, 55, 65, 75, 85, 95])

out_seq = array([in_seq1[i]+in_seq2[i] for i in range(len(in_seq1))])

# convert to [rows, columns] structure

in_seq1 = in_seq1.reshape((len(in_seq1), 1))

in_seq2 = in_seq2.reshape((len(in_seq2), 1))

out_seq = out_seq.reshape((len(out_seq), 1))

# horizontally stack columns

dataset = hstack((in_seq1, in_seq2, out_seq))

# choose a number of time steps

n_steps_in, n_steps_out = 3, 2

# covert into input/output

X, y = split_sequences(dataset, n_steps_in, n_steps_out)

# the dataset knows the number of features, e.g. 2

n_features = X.shape[2]

# define model

model = Sequential()

model.add(LSTM(200, activation='relu', input_shape=(n_steps_in, n_features)))

model.add(RepeatVector(n_steps_out))

model.add(LSTM(200, activation='relu', return_sequences=True))

model.add(TimeDistributed(Dense(n_features)))

'''

这里输入3D为(样本量,return_sequences数量(=n_steps_out),200)

输出为(样本量,return_sequences数量(=n_steps_out),n_features)

就是每个输出是(3,2)维度的

'''

model.compile(optimizer='adam', loss='mse')

# fit model

model.fit(X, y, epochs=300, verbose=0)

# demonstrate prediction

x_input = array([[60, 65, 125], [70, 75, 145], [80, 85, 165]])

x_input = x_input.reshape((1, n_steps_in, n_features))

yhat = model.predict(x_input, verbose=0)

print(yhat)

'''

总结:注意的点:

最大不同在DENSE的输出层,其实在多步骤的时候什么时候用n_featues,什么时候用n_steps还是看y的格式鸭

'''.多维单步(使用前三步去预测后一步)

from numpy import array

from numpy import hstack

from keras.models import Sequential

from keras.layers import LSTM

from keras.layers import Dense

# define input sequence

in_seq1 = array([10, 20, 30, 40, 50, 60, 70, 80, 90])

in_seq2 = array([15, 25, 35, 45, 55, 65, 75, 85, 95])

out_seq = array([in_seq1[i]+in_seq2[i] for i in range(len(in_seq1))])

# convert to [rows, columns] structure

in_seq1 = in_seq1.reshape((len(in_seq1), 1))

in_seq2 = in_seq2.reshape((len(in_seq2), 1))

out_seq = out_seq.reshape((len(out_seq), 1))

# horizontally stack columns

dataset = hstack((in_seq1, in_seq2, out_seq))

print(dataset)

'''

[[ 10 15 25]

[ 20 25 45]

[ 30 35 65]

[ 40 45 85]

[ 50 55 105]

[ 60 65 125]

[ 70 75 145]

[ 80 85 165]

[ 90 95 185]]

现在定为时间步长为3

举个例子,就是用

10 15

20 25

30 35

预测65

'''

# split a multivariate sequence into samples

def split_sequences(sequences, n_steps):

X, y = list(), list()

for i in range(len(sequences)):

end_ix = i + n_steps

if end_ix > len(sequences):

break

seq_x, seq_y = sequences[i:end_ix, :-1], sequences[end_ix-1, -1] # 和单变量最大的不同在这里

X.append(seq_x)

y.append(seq_y)

return array(X), array(y)

n_steps = 3

X,y = split_sequences(dataset,n_steps)

print(X.shape,y.shape)

for i in range(len(X)):

print(X[i],y[i])

n_features = X.shape[2]

'''

[[10 15]

[20 25]

[30 35]] 65

[[20 25]

[30 35]

[40 45]] 85

[[30 35]

[40 45]

[50 55]] 105

[[40 45]

[50 55]

[60 65]] 125

[[50 55]

[60 65]

[70 75]] 145

[[60 65]

[70 75]

[80 85]] 165

[[70 75]

[80 85]

[90 95]] 185

'''

model = Sequential()

model.add(LSTM(50,activation='relu',input_shape=(n_steps,n_features)))

model.add(Dense(1))

model.compile(optimizer='adam',loss='mse')

model.fit(X,y,epochs=200,verbose=0)

#预测模型

x_input = array([[80, 85], [90, 95], [100, 105]])

x_input = x_input.reshape((1, n_steps, n_features)) # 转换成样本量+步长+特征的格式

yhat = model.predict(x_input, verbose=0)

print(yhat) # 209.00851

多维多步的预测(使用前三步去预测后两步)

from numpy import array

from numpy import hstack

from keras.models import Sequential

from keras.layers import LSTM

from keras.layers import Dense

# split a multivariate sequence into samples

def split_sequences(sequences, n_steps_in, n_steps_out):

X, y = list(), list()

for i in range(len(sequences)):

# find the end of this pattern

end_ix = i + n_steps_in

out_end_ix = end_ix + n_steps_out-1

# check if we are beyond the dataset

if out_end_ix > len(sequences):

break

# gather input and output parts of the pattern

seq_x, seq_y = sequences[i:end_ix, :-1], sequences[end_ix-1:out_end_ix, -1]

X.append(seq_x)

y.append(seq_y)

return array(X), array(y)

# define input sequence

in_seq1 = array([10, 20, 30, 40, 50, 60, 70, 80, 90])

in_seq2 = array([15, 25, 35, 45, 55, 65, 75, 85, 95])

out_seq = array([in_seq1[i]+in_seq2[i] for i in range(len(in_seq1))])

# convert to [rows, columns] structure

in_seq1 = in_seq1.reshape((len(in_seq1), 1))

in_seq2 = in_seq2.reshape((len(in_seq2), 1))

out_seq = out_seq.reshape((len(out_seq), 1))

# horizontally stack columns

dataset = hstack((in_seq1, in_seq2, out_seq))

# choose a number of time steps

n_steps_in, n_steps_out = 3, 2

# covert into input/output

X, y = split_sequences(dataset, n_steps_in, n_steps_out)

# the dataset knows the number of features, e.g. 2

n_features = X.shape[2]

# define model

model = Sequential()

model.add(LSTM(100, activation='relu', return_sequences=True, input_shape=(n_steps_in, n_features)))

model.add(LSTM(100, activation='relu'))

model.add(Dense(n_steps_out))

model.compile(optimizer='adam', loss='mse')

# fit model

model.fit(X, y, epochs=200, verbose=0)

# demonstrate prediction

x_input = array([[70, 75], [80, 85], [90, 95]])

x_input = x_input.reshape((1, n_steps_in, n_features))

yhat = model.predict(x_input, verbose=0)

print(yhat)

############################################################多变量,多对多

'''

[[10 15 25]

[20 25 45]

[30 35 65]] [[ 40 45 85]

[ 50 55 105]]-----y(输出为2*3维)

[[20 25 45]

[30 35 65]

[40 45 85]] [[ 50 55 105]

[ 60 65 125]]-----y

[[ 30 35 65]

[ 40 45 85]

[ 50 55 105]] [[ 60 65 125]

[ 70 75 145]]

[[ 40 45 85]

[ 50 55 105]

[ 60 65 125]] [[ 70 75 145]

[ 80 85 165]]

[[ 50 55 105]

[ 60 65 125]

[ 70 75 145]] [[ 80 85 165]

[ 90 95 185]]

'''

from numpy import array

from numpy import hstack

from keras.models import Sequential

from keras.layers import LSTM

from keras.layers import Dense

from keras.layers import RepeatVector

from keras.layers import TimeDistributed

# split a multivariate sequence into samples

def split_sequences(sequences, n_steps_in, n_steps_out):

X, y = list(), list()

for i in range(len(sequences)):

# find the end of this pattern

end_ix = i + n_steps_in

out_end_ix = end_ix + n_steps_out

# check if we are beyond the dataset

if out_end_ix > len(sequences):

break

# gather input and output parts of the pattern

seq_x, seq_y = sequences[i:end_ix, :], sequences[end_ix:out_end_ix, :]

X.append(seq_x)

y.append(seq_y)

return array(X), array(y)

# define input sequence

in_seq1 = array([10, 20, 30, 40, 50, 60, 70, 80, 90])

in_seq2 = array([15, 25, 35, 45, 55, 65, 75, 85, 95])

out_seq = array([in_seq1[i]+in_seq2[i] for i in range(len(in_seq1))])

# convert to [rows, columns] structure

in_seq1 = in_seq1.reshape((len(in_seq1), 1))

in_seq2 = in_seq2.reshape((len(in_seq2), 1))

out_seq = out_seq.reshape((len(out_seq), 1))

# horizontally stack columns

dataset = hstack((in_seq1, in_seq2, out_seq))

# choose a number of time steps

n_steps_in, n_steps_out = 3, 2

# covert into input/output

X, y = split_sequences(dataset, n_steps_in, n_steps_out)

# the dataset knows the number of features, e.g. 2

n_features = X.shape[2]

# define model

model = Sequential()

model.add(LSTM(200, activation='relu', input_shape=(n_steps_in, n_features)))

model.add(RepeatVector(n_steps_out))

model.add(LSTM(200, activation='relu', return_sequences=True))

model.add(TimeDistributed(Dense(n_features)))

'''

这里输入3D为(样本量,return_sequences数量(=n_steps_out),200)

输出为(样本量,return_sequences数量(=n_steps_out),n_features)

就是每个输出是(3,2)维度的

'''

model.compile(optimizer='adam', loss='mse')

# fit model

model.fit(X, y, epochs=300, verbose=0)

# demonstrate prediction

x_input = array([[60, 65, 125], [70, 75, 145], [80, 85, 165]])

x_input = x_input.reshape((1, n_steps_in, n_features))

yhat = model.predict(x_input, verbose=0)

print(yhat)

'''

总结:注意的点:

最大不同在DENSE的输出层,其实在多步骤的时候什么时候用n_featues,什么时候用n_steps还是看y的格式鸭