Hello everyone, I'm 上白书妖!

Knowledge comes from accumulation; mastery comes from self-discipline.

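Today I've put together a few small tips from papers, notes and other material I studied earlier. If anything is inaccurate, corrections are welcome.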

Prerequisites:

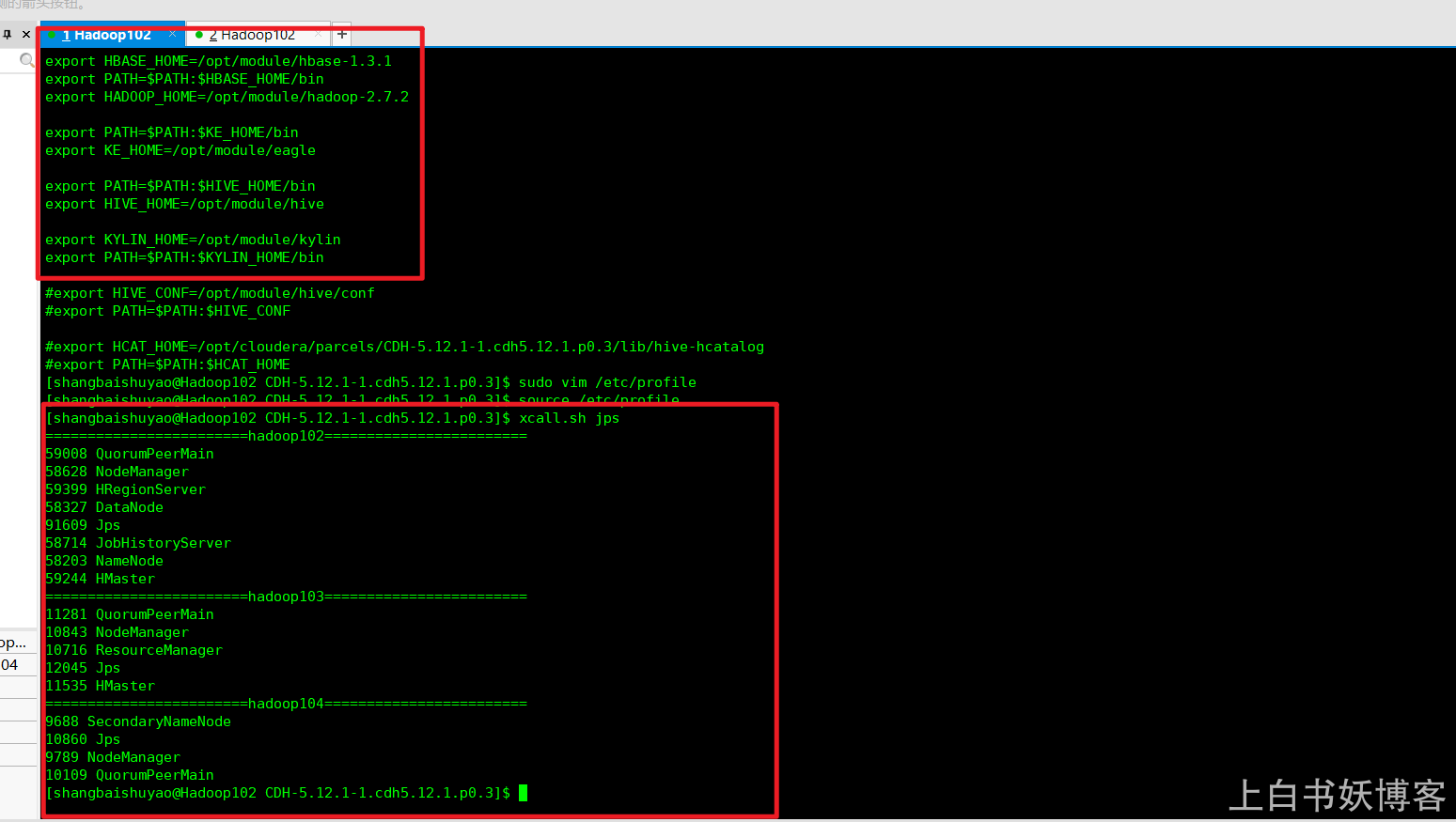

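Note: HADOOP_HOME, HIVE_HOME and HBASE_HOME must be set in /etc/profile, and each one's bin directory (plus sbin, where that directory exists) must be added to PATH. Run `source /etc/profile` afterwards so the changes take effect. Before starting Kylin, make sure the HDFS, YARN, ZooKeeper and HBase processes are all running normally.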
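For reference, here is a minimal sketch of what that note asks for. It assumes the install locations this post uses later (/opt/module/hadoop-2.7.2, /opt/module/hive, /opt/module/hbase-1.3.1), so adjust them to your own layout:

```shell
# Sketch: append to /etc/profile (or a file under /etc/profile.d/).
# The paths below assume the layout used in this post.
export HADOOP_HOME=/opt/module/hadoop-2.7.2
export HIVE_HOME=/opt/module/hive
export HBASE_HOME=/opt/module/hbase-1.3.1
# Each *_HOME is defined above before it is used here; add bin,
# plus sbin where the component ships one (Hadoop does)
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin:$HBASE_HOME/bin
```

Load it into the current shell with `source /etc/profile`.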

Of course, I had already met these prerequisites, as the screenshots show:

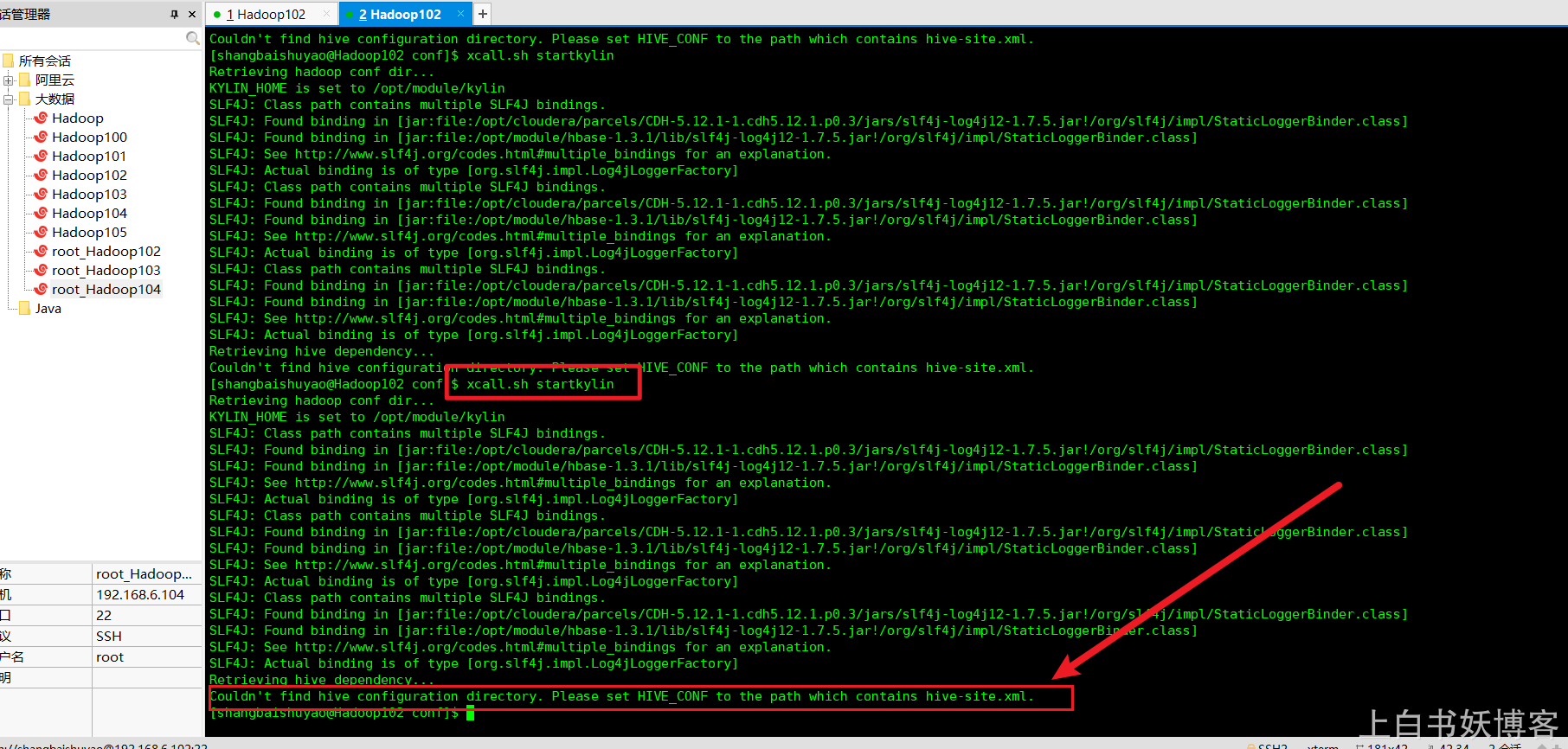

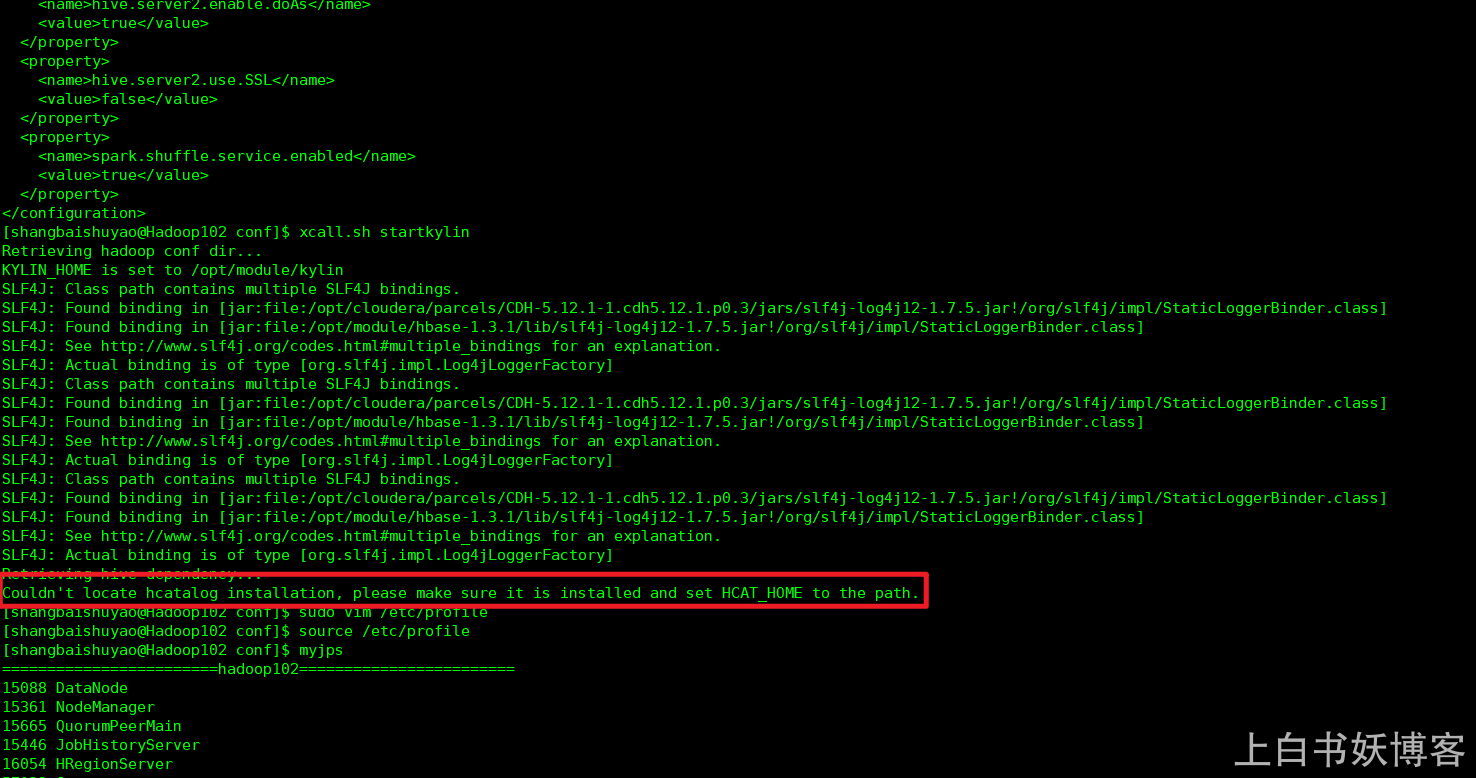
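One quick way to confirm the "processes are running" part is `jps`, which ships with the JDK and prints one `<pid> <MainClass>` line per Java process. Below is a sketch of a hypothetical helper, `check_daemons`, that scans that output. The daemon names it looks for are assumptions based on a typical master node (HDFS `NameNode`, YARN `ResourceManager`, ZooKeeper `QuorumPeerMain`, HBase `HMaster`). Worker nodes show `DataNode`, `NodeManager`, `HRegionServer` and so on instead.

```shell
#!/bin/sh
# Hypothetical helper (not part of Kylin or Hadoop): read `jps` output on
# stdin and print a "missing: <name>" line for each expected daemon that
# is absent. Returns 0 if all are present, 1 otherwise.
# The daemon list assumes a typical master node; adjust it per host.
check_daemons() {
    procs=$(cat)
    missing=0
    for d in NameNode ResourceManager QuorumPeerMain HMaster; do
        # -w matches whole words only, so "SecondaryNameNode" does not
        # count as "NameNode"
        if ! printf '%s\n' "$procs" | grep -qw "$d"; then
            echo "missing: $d"
            missing=1
        fi
    done
    return $missing
}
```

Usage: `jps | check_daemons`. The function prints nothing when every daemon on its list is up.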

Today I installed Kylin, and when I started it after installation it threw an error:

Kylin 2.5.1 with Hive beeline configured fails with: Please set HIVE_CONF to the path which contains hive-site.xml

```
[shangbaishuyao@Hadoop102 conf]$ xcall.sh startkylin
Retrieving hadoop conf dir...
KYLIN_HOME is set to /opt/module/kylin
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-5.12.1-1.cdh5.12.1.p0.3/jars/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/module/hbase-1.3.1/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-5.12.1-1.cdh5.12.1.p0.3/jars/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/module/hbase-1.3.1/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/cloudera/parcels/CDH-5.12.1-1.cdh5.12.1.p0.3/jars/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/module/hbase-1.3.1/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
Retrieving hive dependency...
Couldn't find hive configuration directory. Please set HIVE_CONF to the path which contains hive-site.xml.
```

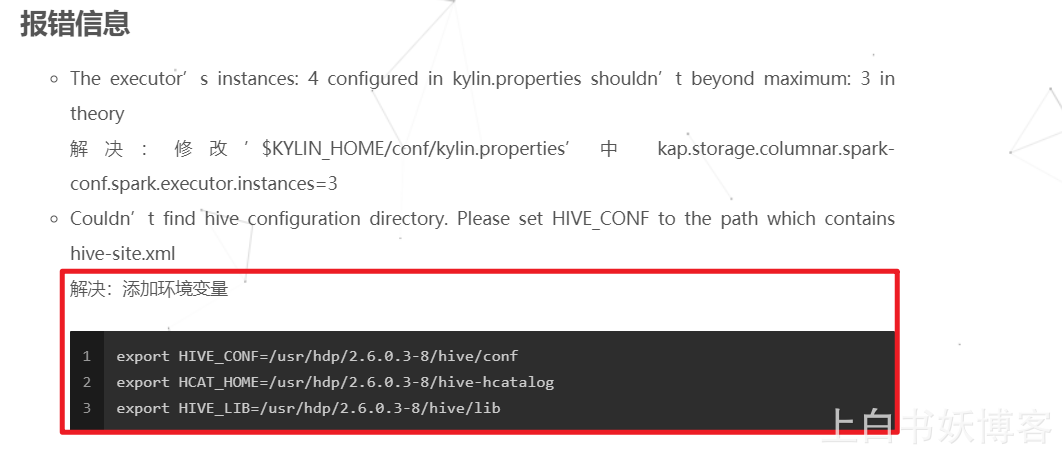
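The last line of that log is where startup stops: the script could not find a directory containing hive-site.xml. Below is a sketch of a pre-flight check you could run before starting Kylin. It assumes only what the message itself says, namely that HIVE_CONF should name a directory holding hive-site.xml. The function name `check_hive_conf` is hypothetical and is not something Kylin ships.

```shell
#!/bin/sh
# Hypothetical helper (not part of Kylin): verify that HIVE_CONF is set
# and points at a directory that actually contains hive-site.xml.
check_hive_conf() {
    if [ -z "$HIVE_CONF" ]; then
        echo "HIVE_CONF is not set" >&2
        return 1
    fi
    if [ ! -f "$HIVE_CONF/hive-site.xml" ]; then
        echo "no hive-site.xml in $HIVE_CONF" >&2
        return 1
    fi
    echo "OK: $HIVE_CONF/hive-site.xml"
    return 0
}
```

Usage: `source /etc/profile && check_hive_conf`. A non-zero exit status means the variable is missing or points at the wrong directory.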

A quick search turned up two widely recommended fixes, but

neither of them solved my problem, as described below.

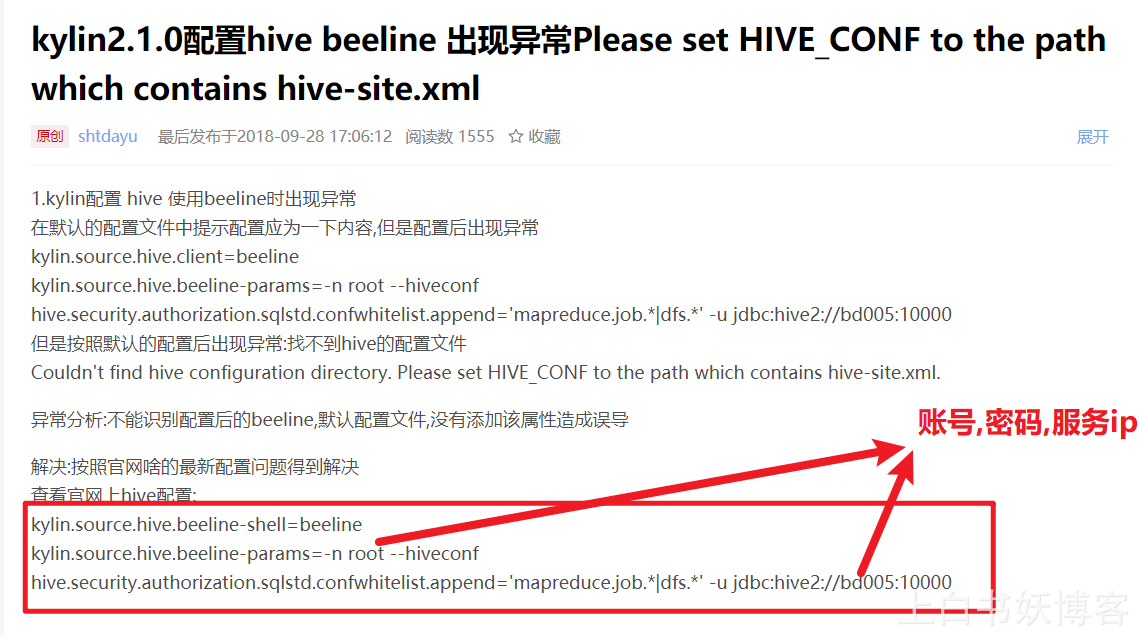

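Method 1: this fellow's approach

I changed my configuration following his method:

But I still got the same error:

Some people did get it to work. Sigh!!! Someone with karma as good as mine, and it still fell flat... cue the tears...

Method 2: I have to say, this fellow's answer came closest to the truth.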

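But...

After configuring it his way, I found that my paths were wrong: the conf, hive-hcatalog and lib directories it needs are not the ones inside the directory where I installed Hive. If you fill in your own install or extraction paths there, you will get all kinds of strange errors...

For example: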

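Just as I was about to say to hell with it and go to bed, articles by these experts gave me the idea I needed O(∩_∩)O haha~

This one: 0day__

This one: pengjianhua

And this one: 啦啦啦啦

In the end I dug through /opt/ and /usr/, searching and searching... and finally found the hidden configuration files. That was not easy!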

For example:

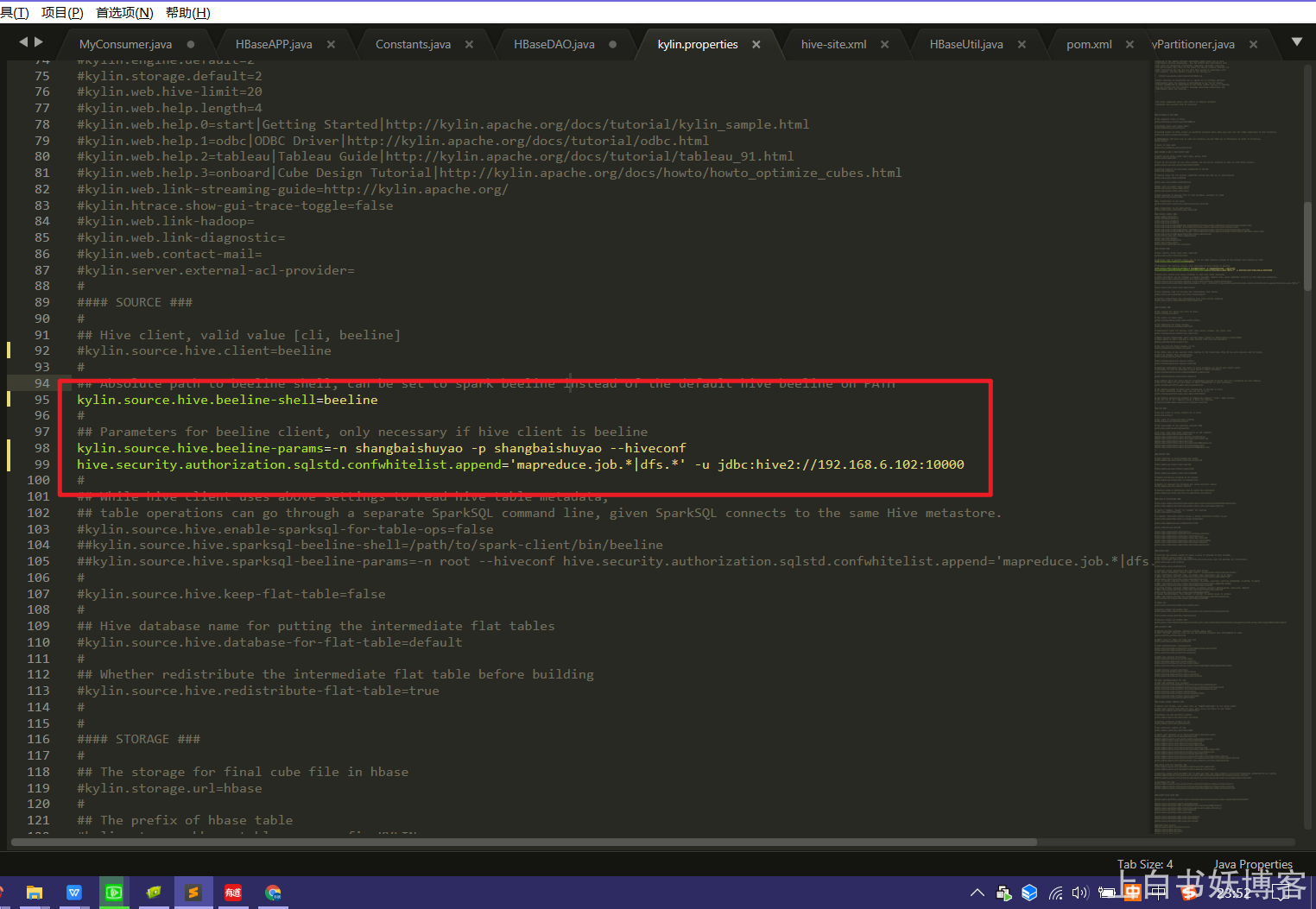

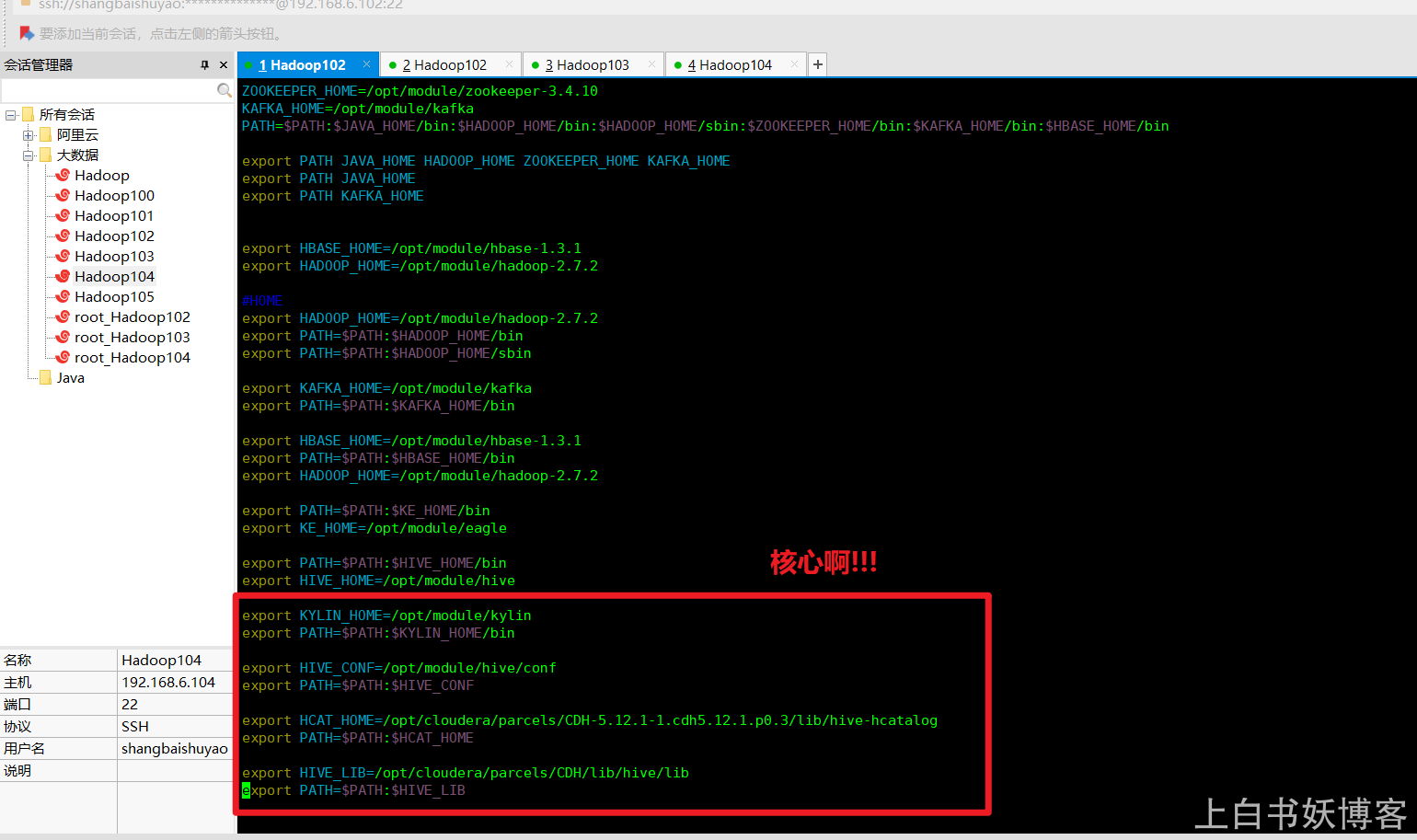
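The manual search above can be scripted. Here is a sketch using only standard `find`. The helper name `find_hive_site` is hypothetical, and the default search roots /opt and /usr are simply the two directories searched in this post:

```shell
#!/bin/sh
# Hypothetical helper: list every regular file named hive-site.xml under
# the given directories (defaults to /opt and /usr, where this post found
# them). Errors from unreadable directories are discarded.
find_hive_site() {
    if [ "$#" -eq 0 ]; then
        set -- /opt /usr
    fi
    find "$@" -type f -name hive-site.xml 2>/dev/null
}
```

Usage: `find_hive_site` (or `find_hive_site /etc` to search elsewhere). Each directory that contains a hit is a candidate value for HIVE_CONF.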

In the end, the configuration I put in /etc/profile (edited with `sudo vim /etc/profile`) was:

```shell
[shangbaishuyao@Hadoop102 CDH-5.12.1-1.cdh5.12.1.p0.3]$ cat /etc/profile
# /etc/profile

# System wide environment and startup programs, for login setup
# Functions and aliases go in /etc/bashrc

# It's NOT a good idea to change this file unless you know what you
# are doing. It's much better to create a custom.sh shell script in
# /etc/profile.d/ to make custom changes to your environment, as this
# will prevent the need for merging in future updates.

pathmunge () {
    case ":${PATH}:" in
        *:"$1":*)
            ;;
        *)
            if [ "$2" = "after" ] ; then
                PATH=$PATH:$1
            else
                PATH=$1:$PATH
            fi
    esac
}

if [ -x /usr/bin/id ]; then
    if [ -z "$EUID" ]; then
        # ksh workaround
        EUID=`id -u`
        UID=`id -ru`
    fi
    USER="`id -un`"
    LOGNAME=$USER
    MAIL="/var/spool/mail/$USER"
fi

# Path manipulation
if [ "$EUID" = "0" ]; then
    pathmunge /sbin
    pathmunge /usr/sbin
    pathmunge /usr/local/sbin
else
    pathmunge /usr/local/sbin after
    pathmunge /usr/sbin after
    pathmunge /sbin after
fi

HOSTNAME=`/bin/hostname 2>/dev/null`
HISTSIZE=1000
if [ "$HISTCONTROL" = "ignorespace" ] ; then
    export HISTCONTROL=ignoreboth
else
    export HISTCONTROL=ignoredups
fi

export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE HISTCONTROL

# By default, we want umask to get set. This sets it for login shell
# Current threshold for system reserved uid/gids is 200
# You could check uidgid reservation validity in
# /usr/share/doc/setup-*/uidgid file
if [ $UID -gt 199 ] && [ "`id -gn`" = "`id -un`" ]; then
    umask 002
else
    umask 022
fi

for i in /etc/profile.d/*.sh ; do
    if [ -r "$i" ]; then
        if [ "${-#*i}" != "$-" ]; then
            . "$i"
        else
            . "$i" >/dev/null 2>&1
        fi
    fi
done

unset i
unset -f pathmunge

JAVA_HOME=/opt/module/jdk1.8.0_144
HADOOP_HOME=/opt/module/hadoop-2.7.2
ZOOKEEPER_HOME=/opt/module/zookeeper-3.4.10
KAFKA_HOME=/opt/module/kafka
PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$KAFKA_HOME/bin:$HBASE_HOME/bin
export PATH JAVA_HOME HADOOP_HOME ZOOKEEPER_HOME KAFKA_HOME
export PATH JAVA_HOME
export PATH KAFKA_HOME
export HBASE_HOME=/opt/module/hbase-1.3.1
export HADOOP_HOME=/opt/module/hadoop-2.7.2

#HOME
export HADOOP_HOME=/opt/module/hadoop-2.7.2
export PATH=$PATH:$HADOOP_HOME/bin
export PATH=$PATH:$HADOOP_HOME/sbin
export KAFKA_HOME=/opt/module/kafka
export PATH=$PATH:$KAFKA_HOME/bin
export HBASE_HOME=/opt/module/hbase-1.3.1
export PATH=$PATH:$HBASE_HOME/bin
export HADOOP_HOME=/opt/module/hadoop-2.7.2
export PATH=$PATH:$KE_HOME/bin
export KE_HOME=/opt/module/eagle
export PATH=$PATH:$HIVE_HOME/bin
export HIVE_HOME=/opt/module/hive
export KYLIN_HOME=/opt/module/kylin
export PATH=$PATH:$KYLIN_HOME/bin
export HIVE_CONF=/opt/module/hive/conf
export PATH=$PATH:$HIVE_CONF
export HCAT_HOME=/opt/cloudera/parcels/CDH-5.12.1-1.cdh5.12.1.p0.3/lib/hive-hcatalog
export PATH=$PATH:$HCAT_HOME
export HIVE_LIB=/opt/cloudera/parcels/CDH/lib/hive/lib
export PATH=$PATH:$HIVE_LIB
```
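One detail in the custom section of that listing: `$KE_HOME/bin` and `$HIVE_HOME/bin` are appended to PATH on the line *before* the variable is exported. In a fresh login shell where those variables are not already inherited, they expand to an empty string, so the entry that actually gets added is just `/bin`, and the Hive and Eagle bin directories are left off PATH. Appending HIVE_CONF, HCAT_HOME and HIVE_LIB to PATH is also unnecessary, because the shell only searches PATH for executables. Below is a tidier equivalent, offered as a sketch. It keeps exactly the same paths and the same three Hive-related variables that cleared the error in this setup, and simply reorders and deduplicates:

```shell
# Sketch of the custom section of /etc/profile, reordered so that every
# *_HOME is exported before it is referenced. Paths are the ones used above.
export JAVA_HOME=/opt/module/jdk1.8.0_144
export HADOOP_HOME=/opt/module/hadoop-2.7.2
export ZOOKEEPER_HOME=/opt/module/zookeeper-3.4.10
export KAFKA_HOME=/opt/module/kafka
export HBASE_HOME=/opt/module/hbase-1.3.1
export HIVE_HOME=/opt/module/hive
export KE_HOME=/opt/module/eagle
export KYLIN_HOME=/opt/module/kylin

# Only directories that contain executables belong on PATH
export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$KAFKA_HOME/bin:$HBASE_HOME/bin:$HIVE_HOME/bin:$KE_HOME/bin:$KYLIN_HOME/bin

# Hive-related variables from this post's fix; they are read as plain
# environment variables and do not need to be on PATH
export HIVE_CONF=/opt/module/hive/conf
export HCAT_HOME=/opt/cloudera/parcels/CDH-5.12.1-1.cdh5.12.1.p0.3/lib/hive-hcatalog
export HIVE_LIB=/opt/cloudera/parcels/CDH/lib/hive/lib
```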

As shown in the figure:

Once it's configured, don't forget to run `source /etc/profile`!!! Otherwise all that work is wasted...

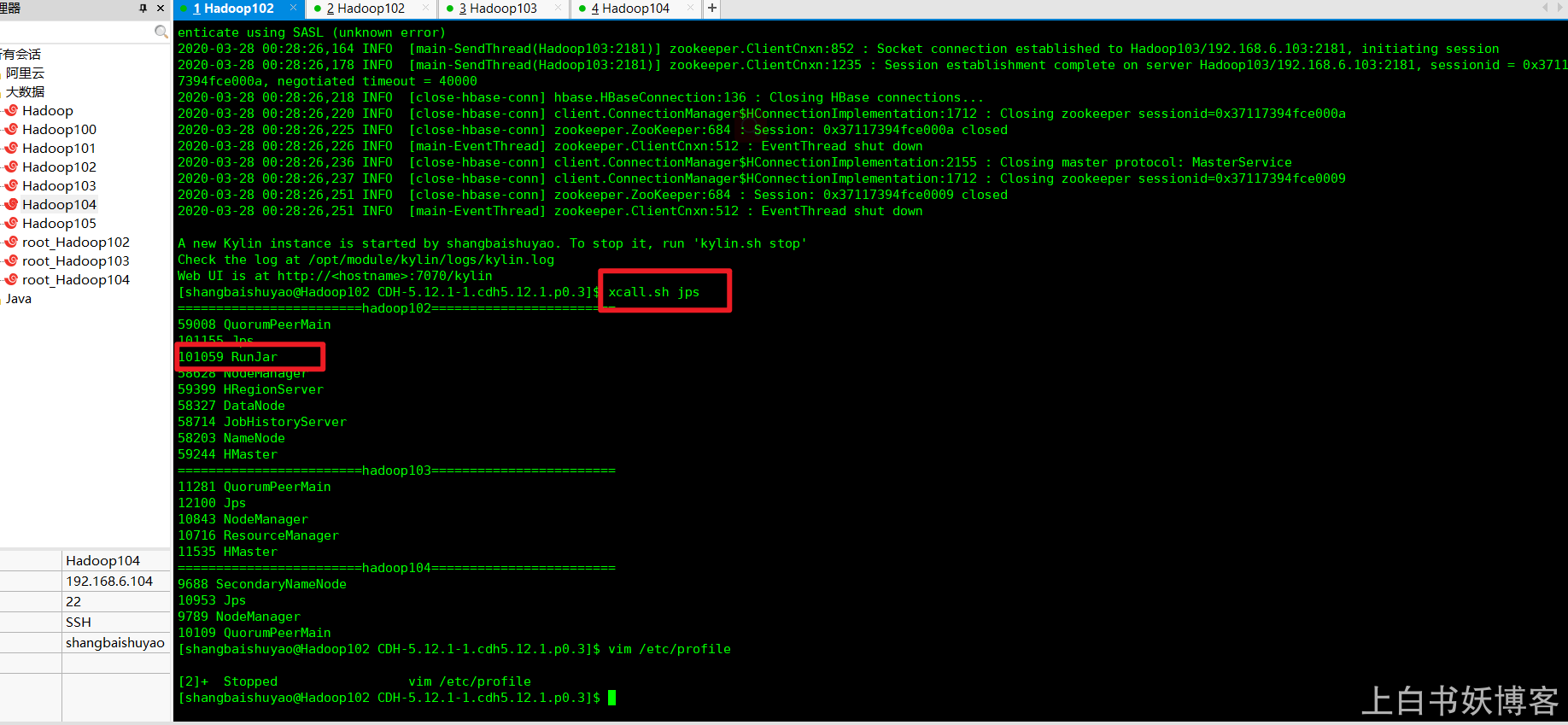

And then came the exciting moment: the startup result!!!

As shown in the figure:

Checking the running processes:

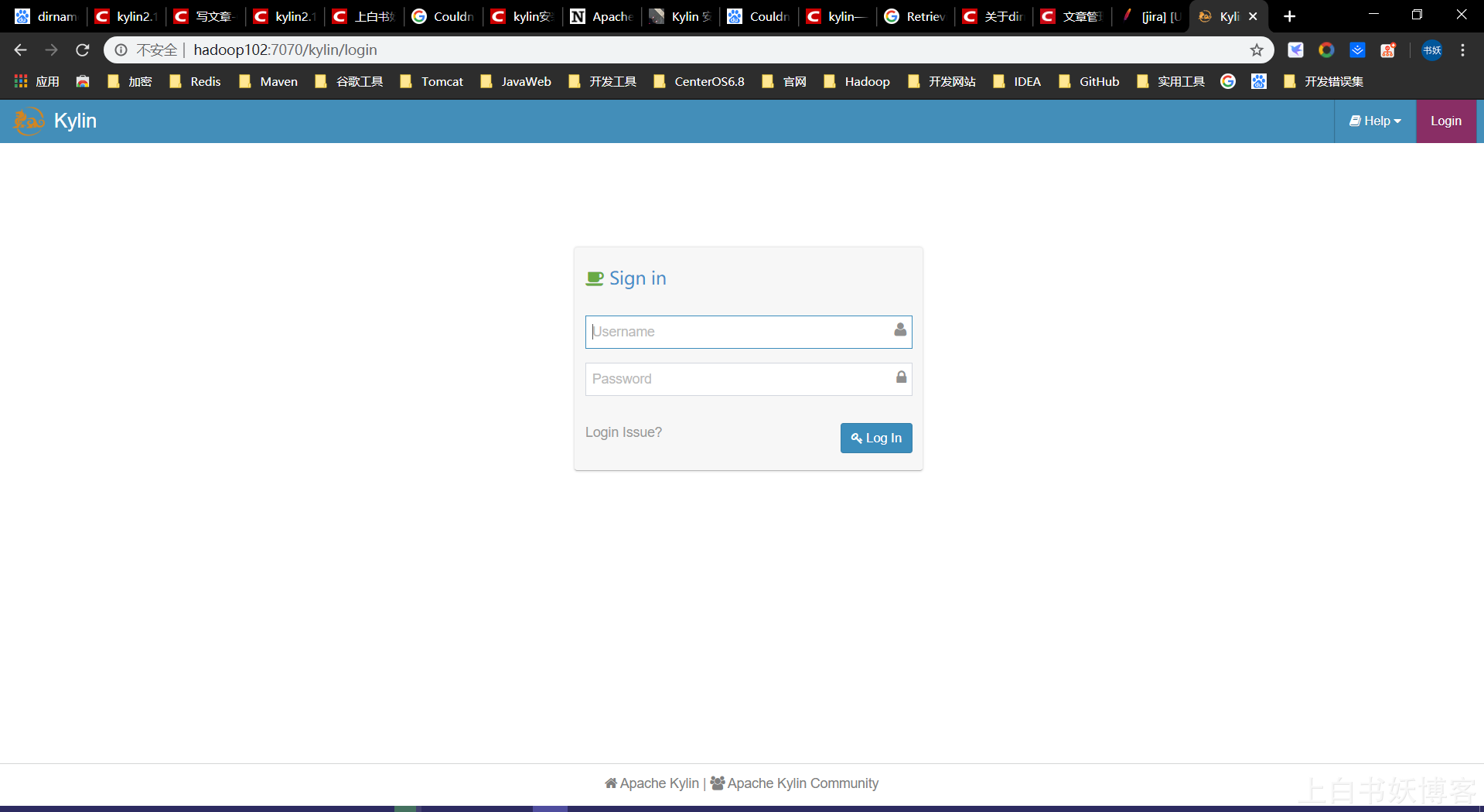

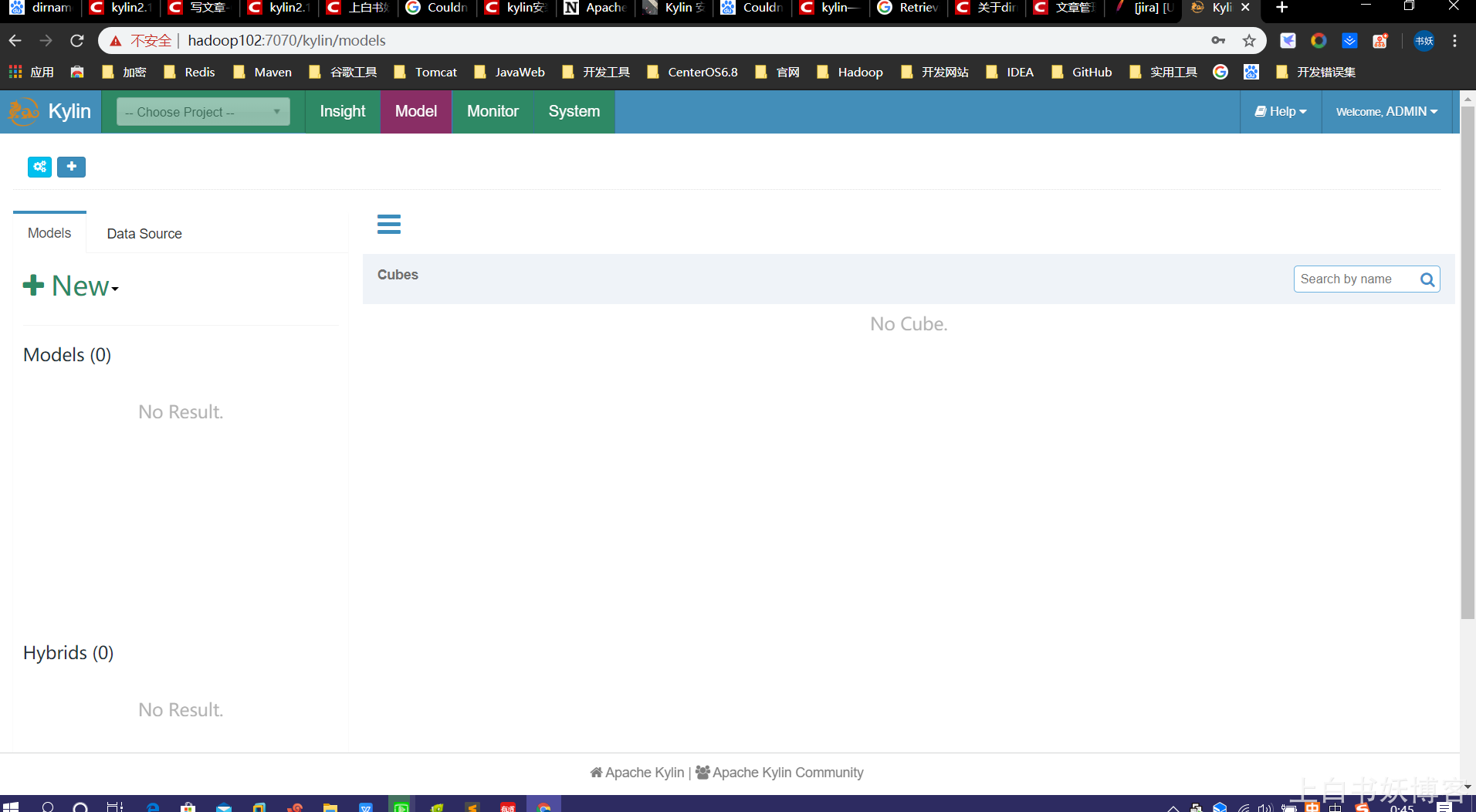

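Open the web UI at http://hadoop102:7070/kylin, as shown in the figure.

Username: ADMIN, password: KYLIN (pre-filled by the system)

Closing words from 上白书妖:

Knowledge comes from accumulation; mastery comes from self-discipline.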