
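KITTI autonomous-driving dataset: training and detection (pedestrian and vehicle detection case study), storing the KITTI dataset in TFRecord format, and transfer learning with YOLO V3 / YOLO V3 Tiny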

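5.10 Case study: KITTI pedestrian and vehicle detection

Learning objectives

- Objectives

- Understand the wrapper interfaces and structure of the YOLO models

- Understand how to read and write TFRecord files

- Understand how to store the KITTI dataset in TFRecord format

- Application

- Convert the KITTI autonomous-driving dataset to TFRecord format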

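5.10.1 Introduction to the KITTI dataset

The KITTI dataset was created jointly by the Karlsruhe Institute of Technology (Germany) and the Toyota Technological Institute at Chicago. It is described as the largest international benchmark dataset for computer vision algorithms in autonomous-driving scenarios. It is used to evaluate stereo, optical flow, visual odometry, 3D object detection and 3D tracking in an in-vehicle setting. KITTI contains real images captured in urban, rural and highway scenes, with up to 15 cars and 30 pedestrians per image and varying degrees of occlusion and truncation.

Website: http://www.cvlibs.net/datasets/kitti/

1. The KITTI object detection 2D dataset

The 2D dataset uses the form we have worked with so far: each object is enclosed in a flat (2D) bounding box. The images, the label files and the description files can all be downloaded from the website.

Dataset contents

Each TXT file holds the annotations for one image. KITTI provides a 3D bounding-box annotation (in the LiDAR coordinate frame) for the moving objects in the camera's field of view. The annotations cover 8 object classes: 'Car', 'Van', 'Truck', 'Pedestrian', 'Person_sitting', 'Cyclist', 'Tram' and 'Misc', plus the special label 'DontCare'. Note that 'DontCare' marks a region that was not annotated.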

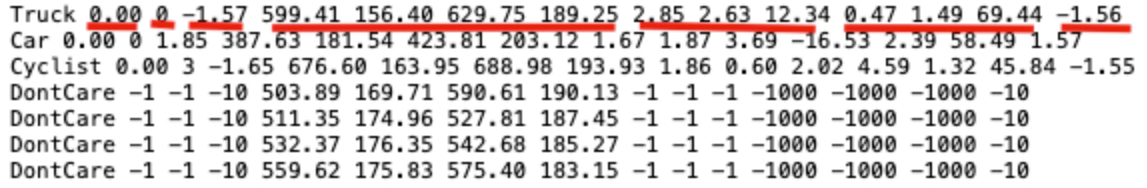

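The annotation fields are explained below, in the order they appear on each line of a label file (the KITTI readme lists how many values each field occupies). The example label comes from an image that contains a truck, cars and a cyclist:

- type: one of 'Car', 'Van', 'Truck', 'Pedestrian', 'Person_sitting', 'Cyclist', 'Tram', 'Misc' or 'DontCare'; 'Misc' and 'DontCare' can be ignored

- truncated: a value between 0 and 1; it is 0.00 for this object, meaning it is not truncated. (Truncated means the object is cut off by the image border and is therefore incomplete.)

- occluded: 0 = fully visible, 1 = partly occluded, 2 = largely occluded, 3 = unknown.

- alpha (observation angle of the object): not analyzed here

- bbox (position of the object in the image): four numbers, here 599.41, 156.40 (top-left corner) and 629.75, 189.25 (bottom-right corner):

- xmin, ymin, xmax, ymax

- Note that the bounding-box format YOLO expects is (center_x, center_y, width, height); the later processing steps handle the conversion

- dimensions, location and rotation_y (the object's 3D size, 3D position and orientation): not analyzed here.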
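The field layout above can be illustrated with a short sketch. It parses the first eight fields of one label line (the Truck line quoted later in this section) and converts the corner-style bbox to the (center_x, center_y, width, height) form. The names `KittiBox`, `parse_label_line` and `to_center_format` are hypothetical helpers for illustration only; they are not part of the KITTI devkit or of the YOLO source.

```python
from typing import NamedTuple


class KittiBox(NamedTuple):
    label: str
    truncated: float
    occluded: int
    xmin: float
    ymin: float
    xmax: float
    ymax: float


def parse_label_line(line: str) -> KittiBox:
    """Parse type, truncated, occluded and bbox from one KITTI label line."""
    f = line.split()
    # f[3] is alpha, which is skipped here
    return KittiBox(f[0], float(f[1]), int(f[2]),
                    float(f[4]), float(f[5]), float(f[6]), float(f[7]))


def to_center_format(box: KittiBox):
    """Convert (xmin, ymin, xmax, ymax) to (center_x, center_y, width, height)."""
    w = box.xmax - box.xmin
    h = box.ymax - box.ymin
    return (box.xmin + w / 2, box.ymin + h / 2, w, h)


line = ("Truck 0.00 0 -1.57 599.41 156.40 629.75 189.25 "
        "2.85 2.63 12.34 0.47 1.49 69.44 -1.56")
box = parse_label_line(line)
print(box.label, to_center_format(box))
```

The values are still in pixels; dividing by the image width and height would give the normalized form that the TFRecord conversion stores.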

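2. Downloading the dataset

Downloading from the official site gives two files: data_object_label_2.zip (5.6 MB) and data_object_image_2.zip (12.57 GB).

After extraction, the image archive contains the training and testing images, while the other training directory holds the ground-truth labels of the training set as *.txt files.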

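5.10.2 Analysis of the YOLOv3 source code

5.10.2.1 Downloading the model source

1. The official open-source implementation

The paper's author, Joseph Chet Redmon, released DarkNet, an open-source neural network framework written in C: https://github.com/pjreddie/darknet. The official implementation follows the paper exactly, so its accuracy and speed differ little from the reported results. **Its drawbacks are that it is written in a language we are less fluent in and that the implementation is fairly hard to follow.**

2. Highly starred GitHub implementations

Besides the official implementation, other organizations and individuals have released versions in familiar frameworks such as TensorFlow and PyTorch. The case study later in this section uses one of them.

- The earliest highly starred version: keras-yolo3.

- The TensorFlow version: compared with the official one, its advantage is that the source is simple, readable and already reproduces the model; a possible drawback is that its speed differs somewhat from the C implementation.

We do not implement everything from scratch; we copy the key code of an existing implementation into our own project.

Reproduction steps: 1. understand the ideas behind the algorithm; 2. survey the related applications; 3. practice module by module.

3. The YOLO website provides many pre-trained YOLO v3 models

Address: https://pjreddie.com/darknet/yolo/. The usual approach is to train the model you need on top of a pre-trained model. For example, if the pre-trained model already covers the broad category you need and you want to subdivide it further, you build your own training set and train on it. However, when the training data is poor or the training time is too short, the retrained model can be worse than the pre-trained one at recognizing the broad category; in that case, try the pre-trained model directly.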

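5.10.2.2 Analysis of the YOLOv3 TensorFlow 2.0 source

1. Overall structure of V3

YOLOv3 introduces residual modules and deepens the network further; the improved backbone has 53 convolutional layers and is named Darknet-53. YOLOv3 borrows the idea of FPN and extracts features at several scales.

The detailed structure of YOLOv3 is as follows: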

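The YOLOv3 network consists of a backbone feature extractor, a multi-scale feature fusion stage and an output stage.

- Feature extractor: YOLOv3 uses DarkNet53 as its backbone. DarkNet53 is essentially fully convolutional: stride-2 convolutions replace the pooling layers, and Residual units are added to avoid vanishing gradients when the network becomes very deep.

- Feature fusion: to address the weak small-object detection of earlier YOLO versions, YOLOv3 detects on feature maps at three scales, 13x13, 26x26 and 52x52 (for a 416x416 input), which handle large, medium and small objects respectively. The fusion stage takes the three feature maps produced by DarkNet as input and, following the idea of FPN (feature pyramid networks), fuses them through a series of convolutions and upsampling steps.

- Output: also fully convolutional. The last convolution has 3x(C+4+1) filters, where 3 is the number of bounding boxes per grid cell, 4 is the number of box coordinates, 1 is the confidence score and C is the number of class probabilities. For the 80 COCO classes this gives 3x(80+4+1)=255; for the 20 VOC classes it gives 3x(20+4+1)=75. For another dataset, set C to the actual number of classes.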
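The arithmetic behind the output layer can be checked with a few lines. This is a minimal sketch: the function names are illustrative, and the strides 32, 16 and 8 are inferred from the 13x13, 26x26 and 52x52 feature maps of a 416x416 input.

```python
def output_channels(num_classes: int, boxes_per_cell: int = 3) -> int:
    """Filters in the last convolution of one detection scale."""
    # 4 box coordinates + 1 confidence score + one probability per class
    return boxes_per_cell * (num_classes + 4 + 1)


def grid_sizes(input_size: int, strides=(32, 16, 8)):
    """Feature-map side length at each detection scale."""
    return tuple(input_size // s for s in strides)


print(output_channels(80))  # 80 COCO classes
print(output_channels(20))  # 20 VOC classes
print(output_channels(6))   # the six KITTI classes used later in this case study
print(grid_sizes(416))
```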

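2. The main model in the source

- Main structure of YOLOv3:

- 1. Darknet

- 2. Three YoloConv stages concatenate and convolve the features, giving three outputs output_0, output_1, output_2 (from deep to shallow)

- 3. For prediction:

- each of the three outputs goes through yolo_boxes to obtain bbox, objectness, class_probs, pred_box

- the results are then merged and filtered by yolo_nms to give the final predictions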

    def YoloV3(size=None, channels=3, anchors=yolo_anchors,
               masks=yolo_anchor_masks, classes=80, training=False):
        x = inputs = Input([size, size, channels])

        # Backbone: returns three feature maps, from shallow (x_36) to deep (x)
        x_36, x_61, x = Darknet(name='yolo_darknet')(x)

        # YOLO head: one YoloConv + YoloOutput pair per scale
        x = YoloConv(512, name='yolo_conv_0')(x)
        output_0 = YoloOutput(512, len(masks[0]), classes, name='yolo_output_0')(x)

        x = YoloConv(256, name='yolo_conv_1')((x, x_61))
        output_1 = YoloOutput(256, len(masks[1]), classes, name='yolo_output_1')(x)

        x = YoloConv(128, name='yolo_conv_2')((x, x_36))
        output_2 = YoloOutput(128, len(masks[2]), classes, name='yolo_output_2')(x)

        # Training: return the raw outputs of the three scales
        if training:
            return Model(inputs, (output_0, output_1, output_2), name='yolov3')

        # Prediction: decode the boxes of each scale, then merge and filter with NMS
        boxes_0 = Lambda(lambda x: yolo_boxes(x, anchors[masks[0]], classes),
                         name='yolo_boxes_0')(output_0)
        boxes_1 = Lambda(lambda x: yolo_boxes(x, anchors[masks[1]], classes),
                         name='yolo_boxes_1')(output_1)
        boxes_2 = Lambda(lambda x: yolo_boxes(x, anchors[masks[2]], classes),
                         name='yolo_boxes_2')(output_2)

        outputs = Lambda(lambda x: yolo_nms(x, anchors, masks, classes),
                         name='yolo_nms')((boxes_0[:3], boxes_1[:3], boxes_2[:3]))

        return Model(inputs, outputs, name='yolov3')

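- Structure of YOLOv3-Tiny

Tiny-YOLO is a faster but somewhat less accurate series for embedded use, proposed by the same author as YOLOv3. For projects with strict speed requirements, YOLOv3-tiny is often the first choice. It removes some feature layers and outputs only two feature scales for detection.

Note: there are also YOLO variants built on other lightweight backbones, such as MobileNet-YOLOv3.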

    def YoloV3Tiny(size=None, channels=3, anchors=yolo_tiny_anchors,
                   masks=yolo_tiny_anchor_masks, classes=80, training=False):
        x = inputs = Input([size, size, channels])

        x_8, x = DarknetTiny(name='yolo_darknet')(x)

        x = YoloConvTiny(256, name='yolo_conv_0')(x)
        output_0 = YoloOutput(256, len(masks[0]), classes, name='yolo_output_0')(x)

        x = YoloConvTiny(128, name='yolo_conv_1')((x, x_8))
        output_1 = YoloOutput(128, len(masks[1]), classes, name='yolo_output_1')(x)

        if training:
            return Model(inputs, (output_0, output_1), name='yolov3')

        boxes_0 = Lambda(lambda x: yolo_boxes(x, anchors[masks[0]], classes),
                         name='yolo_boxes_0')(output_0)
        boxes_1 = Lambda(lambda x: yolo_boxes(x, anchors[masks[1]], classes),
                         name='yolo_boxes_1')(output_1)

        outputs = Lambda(lambda x: yolo_nms(x, anchors, masks, classes),
                         name='yolo_nms')((boxes_0[:3], boxes_1[:3]))

        return Model(inputs, outputs, name='yolov3_tiny')

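- Comparing the two backbones, Darknet and DarknetTiny

- 1. YOLOv3 repeats several DarknetBlock stages, each containing residual modules, and outputs three feature maps

- 2. YOLOv3-Tiny drops the residual modules, downsamples through several pooling layers, and outputs only two feature maps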

    # 1. Repeat several DarknetBlock stages with residual modules; output three feature maps
    def Darknet(name=None):
        x = inputs = Input([None, None, 3])
        x = DarknetConv(x, 32, 3)
        x = DarknetBlock(x, 64, 1)
        x = DarknetBlock(x, 128, 2)  # skip connection
        x = x_36 = DarknetBlock(x, 256, 8)  # skip connection
        x = x_61 = DarknetBlock(x, 512, 8)
        x = DarknetBlock(x, 1024, 4)
        return tf.keras.Model(inputs, (x_36, x_61, x), name=name)


    def DarknetBlock(x, filters, blocks):
        # A stride-2 convolution downsamples, then `blocks` residual units follow
        x = DarknetConv(x, filters, 3, strides=2)
        for _ in range(blocks):
            x = DarknetResidual(x, filters)
        return x


    # 2. No residual modules; downsample with max pooling; output two feature maps
    def DarknetTiny(name=None):
        x = inputs = Input([None, None, 3])
        x = DarknetConv(x, 16, 3)
        x = MaxPool2D(2, 2, 'same')(x)
        x = DarknetConv(x, 32, 3)
        x = MaxPool2D(2, 2, 'same')(x)
        x = DarknetConv(x, 64, 3)
        x = MaxPool2D(2, 2, 'same')(x)
        x = DarknetConv(x, 128, 3)
        x = MaxPool2D(2, 2, 'same')(x)
        x = x_8 = DarknetConv(x, 256, 3)  # skip connection
        x = MaxPool2D(2, 2, 'same')(x)
        x = DarknetConv(x, 512, 3)
        x = MaxPool2D(2, 1, 'same')(x)
        x = DarknetConv(x, 1024, 3)
        return tf.keras.Model(inputs, (x_8, x), name=name)

3. Using the models

    # Initialize a model (pick one of the two)
    model = YoloV3Tiny(args.size, training=True, classes=args.num_classes)

    model = YoloV3(args.size, training=True, classes=args.num_classes)

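5.10.3 KITTI pedestrian and vehicle detection project

5.10.3.1 Project directories and modules

- data: holds all the data

- utils: tools such as the dataset conversion scripts

- yolov3-tf2: the TensorFlow 2.0 implementation of several YOLO models

5.10.3.2 Project steps

We use the provided dataset and an existing YOLO implementation to train object detection for KITTI scenes, covering people, cars and several other object types.

- 1. Dataset conversion: convert KITTI to TFRecords files

- 2. Implement the training code for the KITTI case

- 3. Implement detection on images and video

5.10.4 Dataset conversion: KITTI to TFRecords files

5.10.4.1 TFRecord: the TensorFlow dataset storage format

TFRecord is the dataset storage format of TensorFlow. Once a dataset is organized as TFRecord, TensorFlow can read and process it efficiently, which helps with large-scale model training.

- Format: a TFRecord file can be thought of as a list of serialized tf.train.Example elements, and each tf.train.Example is built from a dictionary of tf.train.Feature values, as follows: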

    [
        {   # example 1 (tf.train.Example)
            'feature_1': tf.train.Feature,
            ...
            'feature_k': tf.train.Feature
        },
        ...
        {   # example N (tf.train.Example)
            'feature_1': tf.train.Feature,
            ...
            'feature_k': tf.train.Feature
        }
    ]

    # An example feature dictionary
    feature = {
        'image': tf.train.Feature(bytes_list=tf.train.BytesList(value=[image])),  # the image is a bytes object
        'label': tf.train.Feature(int64_list=tf.train.Int64List(value=[label]))   # the label is an int
    }

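1. Saving a TFRecord

To convert a dataset of any form to TFRecord, carry out the following steps for each element of the dataset:

- 1. Read the element into memory

- 2. Convert the element into a tf.train.Example object (each tf.train.Example is built from a dictionary of tf.train.Feature values, so build the Feature dictionary first)

- 3. Serialize the tf.train.Example object to a string and write it to the TFRecord file through a previously created tf.io.TFRecordWriter.

2. Reading TFRecord data

To read a TFRecord file, follow these steps:

- 1. Read the raw TFRecord file with tf.data.TFRecordDataset (at this point the tf.train.Example objects in the file are not yet deserialized) to obtain a tf.data.Dataset object

- 2. Use Dataset.map to apply tf.io.parse_single_example to every serialized tf.train.Example string in the dataset, which deserializes it.

3. Example

This example converts the training part of the cats_vs_dogs binary classification dataset to a TFRecord file and then reads the file back. Because the full dataset contains many images, only a few images from the train set under the sample directory are used here so that the result is visible quickly.

1. Collect the local data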

    import os

    # Silence TensorFlow's C++ INFO and WARNING logs; set this before importing TensorFlow
    os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"

    import tensorflow as tf

    train_cats_dir = './cats_vs_dogs/train/cats/'
    train_dogs_dir = './cats_vs_dogs/train/dogs/'
    tfrecord_file = './cats_vs_dogs/train.tfrecords'

    train_cat_filenames = [train_cats_dir + filename for filename in os.listdir(train_cats_dir)]
    train_dog_filenames = [train_dogs_dir + filename for filename in os.listdir(train_dogs_dir)]
    train_filenames = train_cat_filenames + train_dog_filenames
    # Label cats as 0 and dogs as 1
    train_labels = [0] * len(train_cat_filenames) + [1] * len(train_dog_filenames)

2. Iterate over the images, build the tf.train.Feature dictionary and the tf.train.Example object for each one, then serialize it and write it to the TFRecord file.

    with tf.io.TFRecordWriter(tfrecord_file) as writer:
        for filename, label in zip(train_filenames, train_labels):
            # 1. Read the image into memory; image is a bytes string
            with open(filename, 'rb') as f:
                image = f.read()
            # 2. Build the tf.train.Feature dictionary
            feature = {
                'image': tf.train.Feature(bytes_list=tf.train.BytesList(value=[image])),  # the image is a bytes object
                'label': tf.train.Feature(int64_list=tf.train.Int64List(value=[label]))   # the label is an int
            }
            # 3. Build the Example from the dictionary
            example = tf.train.Example(features=tf.train.Features(feature=feature))
            # 4. Serialize the Example and write it to the TFRecord file
            writer.write(example.SerializeToString())

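The resulting TFRecord file can be smaller than the source data. The dataset here is too small to show a meaningful comparison, but the files generated from KITTI later are much smaller than the downloaded archive.

Note: tf.train.Feature supports only three data formats:

- tf.train.BytesList: strings or raw bytes (such as images); pass a tf.train.BytesList built from an array of byte strings through the bytes_list argument

- tf.train.FloatList: floating-point numbers; pass a tf.train.FloatList built from an array of floats through the float_list argument

- tf.train.Int64List: integers; pass a tf.train.Int64List built from an array of integers through the int64_list argument.

3. Reading the TFRecord file

The following code reads the train.tfrecords file created earlier and uses Dataset.map with tf.io.parse_single_example to decode every serialized tf.train.Example object in the dataset.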

    # 1. Read the TFRecord file
    raw_dataset = tf.data.TFRecordDataset(tfrecord_file)

    # 2. Describe the features so the parser knows the type of each one
    feature_description = {
        'image': tf.io.FixedLenFeature([], tf.string),
        'label': tf.io.FixedLenFeature([], tf.int64),
    }

    # 3. Decode each serialized tf.train.Example in the TFRecord file
    def _parse_example(example_string):
        feature_dict = tf.io.parse_single_example(example_string, feature_description)
        feature_dict['image'] = tf.io.decode_jpeg(feature_dict['image'])  # decode the JPEG image
        return feature_dict['image'], feature_dict['label']

    dataset = raw_dataset.map(_parse_example)

    for image, label in dataset:
        print(image, label)

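- The feature_description here works like a "description file" for the dataset: a dictionary of key-value pairs that tells tf.io.parse_single_example which Features each tf.train.Example contains, together with their types and shapes. The three arguments of tf.io.FixedLenFeature, shape, dtype and default_value (optional), give the shape, type and default value of each Feature. The items here are single numbers or strings, so shape is an empty list.

The complete script, combining the steps above: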

    import os

    # Silence TensorFlow's C++ INFO and WARNING logs; set this before importing TensorFlow
    os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"

    import tensorflow as tf

    train_cats_dir = './cats_vs_dogs/train/cats/'
    train_dogs_dir = './cats_vs_dogs/train/dogs/'
    tfrecord_file = './cats_vs_dogs/train.tfrecords'


    def main():
        train_cat_filenames = [train_cats_dir + filename for filename in os.listdir(train_cats_dir)]
        train_dog_filenames = [train_dogs_dir + filename for filename in os.listdir(train_dogs_dir)]
        train_filenames = train_cat_filenames + train_dog_filenames
        # Label cats as 0 and dogs as 1
        train_labels = [0] * len(train_cat_filenames) + [1] * len(train_dog_filenames)

        with tf.io.TFRecordWriter(tfrecord_file) as writer:
            for filename, label in zip(train_filenames, train_labels):
                # 1. Read the image into memory; image is a bytes string
                with open(filename, 'rb') as f:
                    image = f.read()
                # 2. Build the tf.train.Feature dictionary
                feature = {
                    'image': tf.train.Feature(bytes_list=tf.train.BytesList(value=[image])),
                    'label': tf.train.Feature(int64_list=tf.train.Int64List(value=[label]))
                }
                # 3. Build the Example from the dictionary
                example = tf.train.Example(features=tf.train.Features(feature=feature))
                # 4. Serialize the Example and write it to the TFRecord file
                writer.write(example.SerializeToString())


    def read():
        # 1. Read the TFRecord file
        raw_dataset = tf.data.TFRecordDataset(tfrecord_file)

        # 2. Describe the features so the parser knows the type of each one
        feature_description = {
            'image': tf.io.FixedLenFeature([], tf.string),
            'label': tf.io.FixedLenFeature([], tf.int64),
        }

        # 3. Decode each serialized tf.train.Example in the TFRecord file
        def _parse_example(example_string):
            feature_dict = tf.io.parse_single_example(example_string, feature_description)
            feature_dict['image'] = tf.io.decode_jpeg(feature_dict['image'])  # decode the JPEG image
            return feature_dict['image'], feature_dict['label']

        dataset = raw_dataset.map(_parse_example)

        for image, label in dataset:
            print(image, label)


    if __name__ == '__main__':
        # main()  # uncomment to write the TFRecord file first
        read()

5.10.4.2 Converting the KITTI dataset to TFRecords files

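- Directory structure:

- create_kitti_tf_record.py: the main storage logic to implement

- Steps:

- 1. Write the main conversion function and define the command-line arguments it needs

- 2. Read the annotation files, filter the annotations, and build the feature dictionary of each example

1. Write the main conversion function and define the command-line arguments

- Define convert_kitti_to_tfrecords and complete the command-line arguments

- Create the TFRecord output locations for the KITTI training and validation sets

- List all images, read the content and annotations of each image, and write them to the tfrecords files

The imports and command-line arguments are set up as follows: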

    import argparse
    import hashlib
    import io
    import os
    import sys

    import numpy as np
    import PIL.Image as pil
    from PIL import Image
    import tensorflow as tf

    # Project-local helper modules
    import feature_parse
    from IoU import iou

    parser = argparse.ArgumentParser()
    parser.add_argument('--data_dir', type=str, default='../data/kitti/',
                        help='location of the KITTI dataset')
    parser.add_argument('--output_path', type=str, default='../data/kitti_tfrecords/',
                        help='output location of the TFRecord files')
    parser.add_argument('--classes_to_use', default='car,van,truck,pedestrian,cyclist,tram',
                        help='KITTI classes to detect')
    parser.add_argument('--validation_set_size', type=int, default=500,
                        help='number of images used for the validation set')

The main function is written as follows:

    def convert_kitti_to_tfrecords(data_dir, output_path, classes_to_use,
                                   validation_set_size):
        """
        Convert the KITTI detection dataset to TFRecords.
        :param data_dir: source data directory
        :param output_path: output directory
        :param classes_to_use: classes to keep
        :param validation_set_size: size of the validation set
        :return:
        """
        train_count = 0
        val_count = 0

        # 1. Set up the TFRecord outputs for the KITTI training and validation sets
        # Location of the annotation files
        annotation_dir = os.path.join(data_dir, 'training', 'label_2')

        # Location of the images
        image_dir = os.path.join(data_dir, 'data_object_image_2', 'training', 'image_2')

        train_writer = tf.io.TFRecordWriter(os.path.join(output_path, 'train.tfrecord'))
        val_writer = tf.io.TFRecordWriter(os.path.join(output_path, 'val.tfrecord'))

        # 2. List all images, read each image and its annotations, and write them out
        images = sorted(os.listdir(image_dir))
        for img_name in images:
            # (1) Get the image number, used to locate the matching label file
            img_num = int(img_name.split('.')[0])

            # (2) Read the label file
            # The number is zero-padded to the 6-digit name used by the label files
            img_anno = read_annotation_file(os.path.join(annotation_dir,
                                                         str(img_num).zfill(6) + '.txt'))

            # (3) Filter the labels: drop unused classes and dontcare regions
            annotation_for_image = filter_annotations(img_anno, classes_to_use)

            # (4) Build one example from the image and its filtered annotations
            image_path = os.path.join(image_dir, img_name)
            example = prepare_example(image_path, annotation_for_image)

            # Images numbered below validation_set_size go to the validation set,
            # the rest go to the training set
            is_validation_img = img_num < validation_set_size
            if is_validation_img:
                val_writer.write(example.SerializeToString())
                val_count += 1
            else:
                train_writer.write(example.SerializeToString())
                train_count += 1

        train_writer.close()
        val_writer.close()


    def main(args):
        convert_kitti_to_tfrecords(
            data_dir=args.data_dir,
            output_path=args.output_path,
            classes_to_use=args.classes_to_use.split(','),
            validation_set_size=args.validation_set_size)


    if __name__ == '__main__':
        args = parser.parse_args(sys.argv[1:])
        main(args)

(2) Function that reads a label file

    def read_annotation_file(filename):
        with open(filename) as f:
            content = f.readlines()
        # Split each line into its space-separated fields
        content = [x.strip().split(' ') for x in content]
        # Store the fields column by column in a dictionary
        anno = dict()
        anno['type'] = np.array([x[0].lower() for x in content])
        anno['truncated'] = np.array([float(x[1]) for x in content])
        anno['occluded'] = np.array([int(x[2]) for x in content])
        anno['alpha'] = np.array([float(x[3]) for x in content])

        anno['2d_bbox_left'] = np.array([float(x[4]) for x in content])
        anno['2d_bbox_top'] = np.array([float(x[5]) for x in content])
        anno['2d_bbox_right'] = np.array([float(x[6]) for x in content])
        anno['2d_bbox_bottom'] = np.array([float(x[7]) for x in content])
        return anno

(3) Function that filters the labels

    def filter_annotations(img_all_annotations, used_classes):
        """
        Drop annotations of unused classes and of dontcare regions.
        :param img_all_annotations: all annotations of one image
        :param used_classes: classes whose records should be kept
        :return:
        """
        img_filtered_annotations = {}

        # 1. Record the indices of the annotations whose class we want to train on,
        # so that the matching coordinates can be selected afterwards
        relevant_annotation_indices = [
            i for i, x in enumerate(img_all_annotations['type']) if x in used_classes
        ]

        # 2. Keep every field only at the selected indices
        for key in img_all_annotations.keys():
            img_filtered_annotations[key] = (
                img_all_annotations[key][relevant_annotation_indices])

        # 3. If dontcare is among the requested classes, also remove the boxes
        # that overlap a dontcare region
        if 'dontcare' in used_classes:
            dont_care_indices = [i for i,
                                 x in enumerate(img_filtered_annotations['type'])
                                 if x == 'dontcare']

            # Bounding box layout: [y_min, x_min, y_max, x_max]
            all_boxes = np.stack([img_filtered_annotations['2d_bbox_top'],
                                  img_filtered_annotations['2d_bbox_left'],
                                  img_filtered_annotations['2d_bbox_bottom'],
                                  img_filtered_annotations['2d_bbox_right']],
                                 axis=1)

            # Compute the IoU between all boxes and the dontcare boxes, for labels such as:
            # Truck 0.00 0 -1.57 599.41 156.40 629.75 189.25 2.85 2.63 12.34 0.47 1.49 69.44 -1.56
            # DontCare -1 -1 -10 503.89 169.71 590.61 190.13 -1 -1 -1 -1000 -1000 -1000 -10
            # DontCare -1 -1 -10 511.35 174.96 527.81 187.45 -1 -1 -1 -1000 -1000 -1000 -10
            # DontCare -1 -1 -10 532.37 176.35 542.68 185.27 -1 -1 -1 -1000 -1000 -1000 -10
            # DontCare -1 -1 -10 559.62 175.83 575.40 183.15 -1 -1 -1 -1000 -1000 -1000 -10
            ious = iou(boxes1=all_boxes,
                       boxes2=all_boxes[dont_care_indices])

            # Remove every bounding box that overlaps a dontcare region
            if ious.size > 0:
                # Mark the boxes to remove
                boxes_to_remove = np.amax(ious, axis=1) > 0.0
                for key in img_all_annotations.keys():
                    img_filtered_annotations[key] = (
                        img_filtered_annotations[key][np.logical_not(boxes_to_remove)])

        return img_filtered_annotations
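The `iou` helper imported from the project's `IoU` module is not shown in this section. As a rough, dependency-free illustration of what a pairwise IoU computes, the sketch below uses plain Python lists; `pairwise_iou` is a hypothetical stand-in, and the real helper presumably works on NumPy arrays and returns an N x M array.

```python
def pairwise_iou(boxes1, boxes2):
    """Return an N x M nested list of IoU values.

    Boxes use the [y_min, x_min, y_max, x_max] layout from the code above.
    """
    def area(b):
        return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])

    result = []
    for a in boxes1:
        row = []
        for b in boxes2:
            # Height and width of the intersection rectangle (0 if disjoint)
            ih = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
            iw = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
            inter = ih * iw
            union = area(a) + area(b) - inter
            row.append(inter / union if union > 0 else 0.0)
        result.append(row)
    return result


# The Truck box and the first DontCare box from the label lines quoted above
truck = [156.40, 599.41, 189.25, 629.75]
dontcare = [169.71, 503.89, 190.13, 590.61]
ious = pairwise_iou([truck, dontcare], [dontcare])
print(ious)
```

The Truck box does not overlap this DontCare region, so it would be kept. A DontCare box always has IoU 1 with itself, which is why the filter also removes the DontCare entries.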

2. Read the annotation files, filter the annotations, and build the feature dictionary of each example

    def prepare_example(image_path, annotations):
        """
        Convert the annotations of one image to a tf.Example proto.
        :param image_path:
        :param annotations:
        :return:
        """
        # 1. Read the encoded image and decode it into an array
        with open(image_path, 'rb') as fid:
            encoded_png = fid.read()
        encoded_png_io = io.BytesIO(encoded_png)
        image = pil.open(encoded_png_io)
        image = np.asarray(image)

        # 2. Build the values needed by the example
        # SHA-256 hash of the encoded image
        key = hashlib.sha256(encoded_png).hexdigest()

        # Image size, used to normalize the coordinates
        width = int(image.shape[1])
        height = int(image.shape[0])

        # Store the corner coordinates normalized to [0, 1]
        xmin_norm = annotations['2d_bbox_left'] / float(width)
        ymin_norm = annotations['2d_bbox_top'] / float(height)
        xmax_norm = annotations['2d_bbox_right'] / float(width)
        ymax_norm = annotations['2d_bbox_bottom'] / float(height)

        # Other fields: difficulty flags and class names as byte strings
        difficult_obj = [0] * len(xmin_norm)
        classes_text = [x.encode('utf8') for x in annotations['type']]

        # 3. Build the example
        example = tf.train.Example(features=tf.train.Features(feature={
            'image/height': feature_parse.int64_feature(height),
            'image/width': feature_parse.int64_feature(width),
            'image/filename': feature_parse.bytes_feature(image_path.encode('utf8')),
            'image/source_id': feature_parse.bytes_feature(image_path.encode('utf8')),
            'image/key/sha256': feature_parse.bytes_feature(key.encode('utf8')),
            'image/encoded': feature_parse.bytes_feature(encoded_png),
            'image/format': feature_parse.bytes_feature('png'.encode('utf8')),
            'image/object/bbox/xmin': feature_parse.float_list_feature(xmin_norm),
            'image/object/bbox/xmax': feature_parse.float_list_feature(xmax_norm),
            'image/object/bbox/ymin': feature_parse.float_list_feature(ymin_norm),
            'image/object/bbox/ymax': feature_parse.float_list_feature(ymax_norm),
            'image/object/class/text': feature_parse.bytes_list_feature(classes_text),
            'image/object/difficult': feature_parse.int64_list_feature(difficult_obj),
            'image/object/truncated': feature_parse.float_list_feature(
                annotations['truncated'])
        }))

        return example

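After the script finishes, the output directory contains the TFRecord files:

- train.tfrecord

- val.tfrecord

On inspection, the total size is about 5.5 GB for the training set plus 396 MB for the validation set, roughly half of the 12.57 GB data_object_image_2.zip. Note that only the training images are written to the TFRecord files, while the archive also contains the testing images.

5.10.5 Summary

- Understand the wrapper interfaces and structure of the YOLO models

- Understand how to read and write TFRecord files

- Understand how to store the KITTI dataset in TFRecord format

5.11 Case study: KITTI pedestrian and vehicle detection, training and detection

Learning objectives

- Objectives

- None

- Application

- Train and run detection on the KITTI autonomous-driving dataset

5.11.1 Implementing training

- Steps

- Set the training parameters

- 1. Decide whether to train YoloV3Tiny or the regular YOLOv3

- Several versions are implemented, so the user can choose which model to train

- 2. Load the training data given by the arguments

- 3. Load the validation data given by the arguments

- Both are read with dataset.load_tfrecord_dataset

- 4. Decide whether to use transfer learning

- During training, the set of trainable layers can be customized so that the model is trained on your own data

- 5. Define the optimizer and the loss

- 6. Run the training

Set the training parameters with argparse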

    import argparse
    import logging
    import os
    import sys

    # Silence TensorFlow's C++ INFO and WARNING logs; set this before importing TensorFlow
    os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"

    import cv2
    import numpy as np
    import tensorflow as tf
    from tensorflow.keras.callbacks import (
        ReduceLROnPlateau,
        EarlyStopping,
        ModelCheckpoint,
        TensorBoard
    )

    from yolov3_tf2.models import (
        YoloV3, YoloV3Tiny, YoloLoss,
        yolo_anchors, yolo_anchor_masks,
        yolo_tiny_anchors, yolo_tiny_anchor_masks
    )
    from yolov3_tf2.utils import freeze_all
    import yolov3_tf2.dataset as dataset

    parser = argparse.ArgumentParser()
    parser.add_argument('--dataset', type=str, default='./data/kitti_tfrecords/train.tfrecord',
                        help='path of the training dataset')
    parser.add_argument('--val_dataset', type=str, default='./data/kitti_tfrecords/val.tfrecord',
                        help='path of the validation dataset')
    # A switch: pass --tiny to select yolov3-tiny (type=bool would treat any non-empty string as True)
    parser.add_argument('--tiny', action='store_true',
                        help='model type to load: yolov3 (default) or yolov3-tiny')
    parser.add_argument('--weights', type=str, default='./checkpoints/yolov3_train_1.tf',
                        help='path of the pre-trained weights')
    parser.add_argument('--classes', type=str, default='./data/kitti.names',
                        help='path of the class names file')
    parser.add_argument('--mode', type=str, default='fit', choices=['fit', 'eager_tf'],
                        help='fit: model.fit mode, eager_tf: custom GradientTape mode')
    parser.add_argument('--transfer', type=str, default='none',
                        choices=['none', 'darknet', 'no_output', 'frozen', 'fine_tune'],
                        help='none: train everything, '
                             'frozen: transfer and freeze all layers, '
                             'fine_tune: transfer and freeze only darknet')
    parser.add_argument('--size', type=int, default=416,
                        help='image size')
    parser.add_argument('--epochs', type=int, default=2,
                        help='number of epochs')
    parser.add_argument('--batch_size', type=int, default=8,
                        help='batch size')
    parser.add_argument('--learning_rate', type=float, default=1e-3,
                        help='learning rate')
    parser.add_argument('--num_classes', type=int, default=6,
                        help='number of classes')
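A general argparse caveat applies to boolean options such as --tiny: argparse passes the raw command-line string to the `type` callable, and `bool()` of any non-empty string is True, so a declaration with `type=bool` turns `--tiny False` into True. The standalone sketch below shows the pitfall next to a switch-style flag using `action='store_true'`. The parser objects here are illustrative and separate from the training script.

```python
import argparse

# Pitfall: type=bool calls bool() on the raw string, and any non-empty string is truthy
naive = argparse.ArgumentParser()
naive.add_argument('--tiny', type=bool, default=False)
naive_value = naive.parse_args(['--tiny', 'False']).tiny

# A switch-style flag: absent means False, present means True
flag = argparse.ArgumentParser()
flag.add_argument('--tiny', action='store_true')
flag_absent = flag.parse_args([]).tiny
flag_present = flag.parse_args(['--tiny']).tiny

print(naive_value, flag_absent, flag_present)
```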

1. Decide whether to train YoloV3Tiny or the regular YOLOv3

- Also initialize the anchor sizes of each YOLO model (they are defined in the source and are needed when computing the loss)

- anchors: the anchor sizes in use, e.g. [10, 13, 16, 30, 33, 23, 30, 61, 62, 45, 59, 119, 116, 90, 156, 198, 373, 326]

- anchor_mask: the mask of anchors used at each level, [[6, 7, 8], [3, 4, 5], [0, 1, 2]]

- This is an array of anchor box indices, in groups of three, listed in reverse order:

- For example, 6, 7, 8 belong to the 13x13 feature map and select (116, 90), (156, 198), (373, 326)

    yolo_anchors = np.array([(10, 13), (16, 30), (33, 23), (30, 61), (62, 45),
                             (59, 119), (116, 90), (156, 198), (373, 326)],
                            np.float32) / 416
    yolo_anchor_masks = np.array([[6, 7, 8], [3, 4, 5], [0, 1, 2]])

    yolo_tiny_anchors = np.array([(10, 14), (23, 27), (37, 58),
                                  (81, 82), (135, 169), (344, 319)],
                                 np.float32) / 416
    yolo_tiny_anchor_masks = np.array([[3, 4, 5], [0, 1, 2]])
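To make the mask lookup concrete, the following plain-Python sketch lists which anchors each detection scale receives and how dividing by 416 normalizes them. The constants and the helpers `anchors_for_scale` and `normalized` are illustrative names, not part of yolov3_tf2, and the grid sizes assume a 416x416 input.

```python
ANCHORS = [(10, 13), (16, 30), (33, 23), (30, 61), (62, 45), (59, 119),
           (116, 90), (156, 198), (373, 326)]
MASKS = [[6, 7, 8], [3, 4, 5], [0, 1, 2]]
INPUT_SIZE = 416
GRIDS = [13, 26, 52]  # feature-map sizes of output_0, output_1, output_2


def anchors_for_scale(scale_index):
    """Return the pixel-size anchors selected by one mask row."""
    return [ANCHORS[i] for i in MASKS[scale_index]]


def normalized(anchor):
    """Scale an anchor by the input size, like the `/ 416` in the source."""
    w, h = anchor
    return (w / INPUT_SIZE, h / INPUT_SIZE)


for idx, grid in enumerate(GRIDS):
    print(f"{grid}x{grid}:", anchors_for_scale(idx))
```

The coarsest grid (13x13) gets the largest anchors because each of its cells covers the largest area of the input image.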

    # 1. Decide whether to train YoloV3Tiny or the regular YOLOv3
    if args.tiny:
        model = YoloV3Tiny(args.size, training=True,
                           classes=args.num_classes)
        anchors = yolo_tiny_anchors
        anchor_masks = yolo_tiny_anchor_masks
    else:
        model = YoloV3(args.size, training=True, classes=args.num_classes)
        anchors = yolo_anchors
        anchor_masks = yolo_anchor_masks

2 and 3. Load the training and validation data given by the arguments

- Read them with dataset.load_tfrecord_dataset

    # 2. Load the training data given by the arguments
    if args.dataset:
        train_dataset = dataset.load_tfrecord_dataset(
            args.dataset, args.classes)
    train_dataset = train_dataset.shuffle(buffer_size=1024)
    train_dataset = train_dataset.batch(args.batch_size)
    train_dataset = train_dataset.map(lambda x, y: (
        dataset.transform_images(x, args.size),
        dataset.transform_targets(y, anchors, anchor_masks, 6)))
    train_dataset = train_dataset.prefetch(
        buffer_size=tf.data.experimental.AUTOTUNE)

    # 3. Load the validation data given by the arguments
    if args.val_dataset:
        val_dataset = dataset.load_tfrecord_dataset(
            args.val_dataset, args.classes)
    val_dataset = val_dataset.batch(args.batch_size)
    val_dataset = val_dataset.map(lambda x, y: (
        dataset.transform_images(x, args.size),
        dataset.transform_targets(y, anchors, anchor_masks, 6)))

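4. Decide whether to use transfer learning and which parts to freeze

- 1. If transfer learning is used:

- Load the pre-trained weights, which can be downloaded from the official site

- fine_tune: get the yolo_darknet sub-model (x_36, x_61, x = Darknet(name='yolo_darknet')(x)) and freeze its layers

- frozen: freeze all layers

- Otherwise: initialize a fresh model of the chosen YOLO type, then

- darknet: transfer only darknet; every other yolo_ layer is re-initialized from the fresh model and the remaining layers are frozen

- no_output: re-initialize the yolo_output layers randomly and freeze the other layers, whose weights were loaded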

    # 4. Decide whether to use transfer learning
    if args.transfer != 'none':
        # Load the pre-trained weights, e.g. './data/yolov3.weights'
        model.load_weights(args.weights)
        if args.transfer == 'fine_tune':
            # Freeze darknet
            darknet = model.get_layer('yolo_darknet')
            freeze_all(darknet)
        elif args.transfer == 'frozen':
            # Freeze all layers
            freeze_all(model)
        else:
            # Build a freshly initialized model to reset the head of the network
            if args.tiny:
                init_model = YoloV3Tiny(
                    args.size, training=True, classes=args.num_classes)
            else:
                init_model = YoloV3(
                    args.size, training=True, classes=args.num_classes)

            # Transfer only darknet
            if args.transfer == 'darknet':
                for l in model.layers:
                    if l.name != 'yolo_darknet' and l.name.startswith('yolo_'):
                        # Reset this layer to the freshly initialized weights
                        l.set_weights(init_model.get_layer(
                            l.name).get_weights())
                    else:
                        freeze_all(l)
            elif args.transfer == 'no_output':
                for l in model.layers:
                    if l.name.startswith('yolo_output'):
                        l.set_weights(init_model.get_layer(
                            l.name).get_weights())
                    else:
                        freeze_all(l)

    # freeze_all is imported from yolov3_tf2.utils; it is defined as follows
    def freeze_all(model, frozen=True):
        model.trainable = not frozen
        if isinstance(model, tf.keras.Model):
            for l in model.layers:
                freeze_all(l, frozen)

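5. Define the optimizer and the loss

    optimizer = tf.keras.optimizers.Adam(learning_rate=args.learning_rate)
    # YoloLoss returns a yolo_loss(y_true, y_pred) function; build one per output scale
    loss = [YoloLoss(anchors[mask], classes=args.num_classes)
            for mask in anchor_masks]

Note: the computation inside YoloLoss is implemented in the source code.

6. Run the training in the chosen mode

- The eager mode with explicit gradients (GradientTape) is easy to debug

- The Keras model.fit mode is simple to use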

    if args.mode == 'eager_tf':
        # 1. Define the metrics
        avg_loss = tf.keras.metrics.Mean('loss', dtype=tf.float32)
        avg_val_loss = tf.keras.metrics.Mean('val_loss', dtype=tf.float32)

        # 2. Training loop
        for epoch in range(1, args.epochs + 1):
            for batch, (images, labels) in enumerate(train_dataset):
                with tf.GradientTape() as tape:
                    # Compute the model outputs and the loss
                    outputs = model(images, training=True)
                    regularization_loss = tf.reduce_sum(model.losses)
                    pred_loss = []
                    for output, label, loss_fn in zip(outputs, labels, loss):
                        # Loss of one scale from its output and label
                        pred_loss.append(loss_fn(label, output))

                    # Total loss = sum of the per-scale losses + regularization_loss
                    total_loss = tf.reduce_sum(pred_loss) + regularization_loss

                # Compute the gradients and apply them
                grads = tape.gradient(total_loss, model.trainable_variables)
                optimizer.apply_gradients(
                    zip(grads, model.trainable_variables))

                # Log the batch result
                logging.info("{}_train_{}, {}, {}".format(
                    epoch, batch, total_loss.numpy(),
                    list(map(lambda x: np.sum(x.numpy()), pred_loss))))
                avg_loss.update_state(total_loss)

            # Evaluate on the validation set
            for batch, (images, labels) in enumerate(val_dataset):
                outputs = model(images)
                # Compute the loss
                regularization_loss = tf.reduce_sum(model.losses)
                pred_loss = []
                for output, label, loss_fn in zip(outputs, labels, loss):
                    pred_loss.append(loss_fn(label, output))
                total_loss = tf.reduce_sum(pred_loss) + regularization_loss

                # Log the total loss
                logging.info("{}_val_{}, {}, {}".format(
                    epoch, batch, total_loss.numpy(),
                    list(map(lambda x: np.sum(x.numpy()), pred_loss))))
                avg_val_loss.update_state(total_loss)

            logging.info("{}, train: {}, val: {}".format(
                epoch,
                avg_loss.result().numpy(),
                avg_val_loss.result().numpy()))

            avg_loss.reset_states()
            avg_val_loss.reset_states()
            # Save the weights of this epoch
            model.save_weights(
                'checkpoints/yolov3_train_{}.tf'.format(epoch))
    else:
        # Compile and set the callbacks as needed; detection does not need many extras here
        model.compile(optimizer=optimizer, loss=loss)

        callbacks = [
            EarlyStopping(patience=3, verbose=1),
            ModelCheckpoint('checkpoints/yolov3_train_{epoch}.tf',
                            verbose=1, save_weights_only=True),
            TensorBoard(log_dir='logs')
        ]

        history = model.fit(train_dataset,
                            epochs=args.epochs,
                            callbacks=callbacks,
                            validation_data=val_dataset)

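1. EarlyStopping

- keras.callbacks.EarlyStopping(monitor='val_loss', patience=0, verbose=0, mode='auto')

This callback stops training when the monitored quantity stops improving.

- Parameters

- monitor: the quantity to monitor

- patience: the number of epochs without improvement (for example, the loss not decreasing) after which training is stopped.

- verbose: verbosity mode

- mode: one of 'auto', 'min', 'max'. In min mode, training stops when the monitored value stops decreasing; in max mode, it stops when the value stops increasing.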
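The role of patience can be sketched in plain Python. This is a simplified model of the 'min' mode only, under the assumption that an epoch counts as an improvement when the loss is strictly lower than the best value so far; it ignores other EarlyStopping options, and `early_stop_epoch` is a hypothetical helper rather than Keras code.

```python
def early_stop_epoch(val_losses, patience):
    """Return the 0-based epoch at which training would stop, or None."""
    best = float('inf')
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


losses = [1.00, 0.90, 0.95, 0.97, 0.96, 0.80]
stop_with_3 = early_stop_epoch(losses, patience=3)
stop_with_4 = early_stop_epoch(losses, patience=4)
print(stop_with_3, stop_with_4)
```

With patience=3 the run stops after three epochs without a new best value and never reaches the later improvement, whereas patience=4 lets training continue to it.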

5.11.2 Implementing detection

- Steps

- 1. Initialize the model and load the weights

- 2. Load and preprocess the image, then predict with the model

- 3. Draw the boxes on the image and save it

Import the packages and define the arguments

    import argparse
    import logging
    import os
    import sys
    import time

    # Silence TensorFlow's C++ INFO and WARNING logs; set this before importing TensorFlow
    os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"

    import cv2
    import numpy as np
    import tensorflow as tf

    from yolov3_tf2.models import (
        YoloV3, YoloV3Tiny
    )
    from yolov3_tf2.dataset import transform_images
    from yolov3_tf2.utils import draw_outputs

    parser = argparse.ArgumentParser()
    parser.add_argument('--classes', type=str, default='./data/kitti.names',
                        help='path of the class names file')
    parser.add_argument('--weights', type=str, default='./checkpoints/yolov3_train_1.tf',
                        help='location of the trained model')
    # A switch: pass --tiny to select yolov3-tiny (type=bool would treat any non-empty string as True)
    parser.add_argument('--tiny', action='store_true',
                        help='model type to load: yolov3 (default) or yolov3-tiny')
    parser.add_argument('--size', type=int, default=416,
                        help='image size')
    parser.add_argument('--image', type=str,
                        default='./data/kitti/data_object_image_2/testing/image_2/000008.png',
                        help='location of the input image')
    parser.add_argument('--output', type=str, default='./output.jpg',
                        help='location of the output image')
    parser.add_argument('--num_classes', type=int, default=6,
                        help='total number of classes')

The overall logic:

    def main(args):
        # 1. Initialize the model and load the weights
        if args.tiny:
            yolo = YoloV3Tiny(classes=args.num_classes)
        else:
            yolo = YoloV3(classes=args.num_classes)

        yolo.load_weights(args.weights)
        logging.info('weights loaded')

        # Load the class names
        with open(args.classes) as f:
            class_names = [c.strip() for c in f.readlines()]

        # 2. Load and preprocess the image, then predict with the model
        with open(args.image, 'rb') as f:
            img = tf.image.decode_image(f.read(), channels=3)
        img = tf.expand_dims(img, 0)
        img = transform_images(img, args.size)

        # Time the forward pass
        t1 = time.time()
        boxes, scores, classes, nums = yolo(img)
        t2 = time.time()
        logging.info('time: {}'.format(t2 - t1))

        logging.info('detections:')
        print(boxes, scores, classes, nums)
        for i in range(nums[0]):
            logging.info('\t{}, {}, {}'.format(class_names[int(classes[0][i])],
                                               np.array(scores[0][i]),
                                               np.array(boxes[0][i])))

        # 3. Draw the boxes on the image and save it
        img = cv2.imread(args.image)
        img = draw_outputs(img, (boxes, scores, classes, nums), class_names)
        cv2.imwrite(args.output, img)
        logging.info('output saved to: {}'.format(args.output))


    if __name__ == '__main__':
        # Show INFO messages; the root logger only shows warnings by default
        logging.basicConfig(level=logging.INFO)
        args = parser.parse_args(sys.argv[1:])
        main(args)

The code uses a few helper functions from the YOLO source (there is no need to implement them; see the source code for details)

    from yolov3_tf2.dataset import transform_images
    from yolov3_tf2.utils import draw_outputs

- Resizing and normalization

    def transform_images(x_train, size):
        x_train = tf.image.resize(x_train, (size, size))
        x_train = x_train / 255
        return x_train

- draw_outputs

    def draw_outputs(img, outputs, class_names):
        boxes, objectness, classes, nums = outputs
        # Take the results of the first (only) image in the batch
        boxes, objectness, classes, nums = boxes[0], objectness[0], classes[0], nums[0]
        # (width, height) of the image, used to scale normalized boxes to pixels
        wh = np.flip(img.shape[0:2])
        for i in range(nums):
            x1y1 = tuple((np.array(boxes[i][0:2]) * wh).astype(np.int32))
            x2y2 = tuple((np.array(boxes[i][2:4]) * wh).astype(np.int32))
            img = cv2.rectangle(img, x1y1, x2y2, (255, 0, 0), 2)
            img = cv2.putText(img, '{} {:.4f}'.format(
                class_names[int(classes[i])], objectness[i]),
                x1y1, cv2.FONT_HERSHEY_COMPLEX_SMALL, 1, (0, 0, 255), 2)
        return img

如果我们看不到结果,说明预测的结果都不好,所以可以这样在模型的代码中修改iou和分数的阈值来进行调整(根据实际需求)

def yolo_nms(outputs, anchors, masks, classes):
    # boxes, conf, type
    b, c, t = [], [], []

    for o in outputs:
        b.append(tf.reshape(o[0], (tf.shape(o[0])[0], -1, tf.shape(o[0])[-1])))
        c.append(tf.reshape(o[1], (tf.shape(o[1])[0], -1, tf.shape(o[1])[-1])))
        t.append(tf.reshape(o[2], (tf.shape(o[2])[0], -1, tf.shape(o[2])[-1])))

    bbox = tf.concat(b, axis=1)
    confidence = tf.concat(c, axis=1)
    class_probs = tf.concat(t, axis=1)

    scores = confidence * class_probs
    boxes, scores, classes, valid_detections = tf.image.combined_non_max_suppression(
        boxes=tf.reshape(bbox, (tf.shape(bbox)[0], -1, 1, 4)),
        scores=tf.reshape(
            scores, (tf.shape(scores)[0], -1, tf.shape(scores)[-1])),
        max_output_size_per_class=100,
        max_total_size=100,
        iou_threshold=0.5,    # boxes overlapping a kept box by more than this are suppressed
        score_threshold=0.5   # boxes scoring below this are discarded
    )

    return boxes, scores, classes, valid_detections
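To see how the two thresholds interact, the following is a simplified, single-class sketch of greedy non-max suppression in plain Python. It is not TensorFlow's implementation; the function names and sample boxes are assumptions made for illustration only.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def simple_nms(boxes, scores, iou_threshold=0.5, score_threshold=0.5):
    """Return the indices of the kept boxes, highest score first."""
    # 1. Drop boxes whose score is below score_threshold.
    order = sorted((i for i, s in enumerate(scores) if s >= score_threshold),
                   key=lambda i: scores[i], reverse=True)
    kept = []
    # 2. Keep a box only if it does not overlap an already kept box too much.
    for i in order:
        if all(box_iou(boxes[i], boxes[j]) <= iou_threshold for j in kept):
            kept.append(i)
    return kept


boxes = [(0.10, 0.10, 0.50, 0.50),   # strong detection
         (0.12, 0.12, 0.52, 0.52),   # near-duplicate of the first box
         (0.60, 0.60, 0.90, 0.90)]   # separate object with a low score
scores = [0.9, 0.8, 0.3]

print(simple_nms(boxes, scores))                       # default thresholds
print(simple_nms(boxes, scores, score_threshold=0.2))  # lower score threshold
```

With the default thresholds only the first box survives. Lowering `score_threshold` brings back the low-scoring third box, while the near-duplicate stays suppressed until `iou_threshold` is raised.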

5.11.3 Summary

- Completed the training and detection workflow on the KITTI autonomous-driving dataset

create_kitti_tf_record.py

import hashlib

import io

import os

import numpy as np

import PIL.Image as pil

from PIL import Image

import tensorflow as tf

import feature_parse

from IoU import iou

import argparse

import sys

parser = argparse.ArgumentParser()
parser.add_argument('--data_dir', type=str, default='../data/kitti/',
                    help='location of the KITTI dataset')
parser.add_argument('--output_path', type=str, default='../data/kitti_tfrecords/',
                    help='output directory for the TFRecord files')
parser.add_argument('--classes_to_use', default='car,van,truck,pedestrian,cyclist,tram',
                    help='KITTI classes to detect')
parser.add_argument('--validation_set_size', type=int, default=500,
                    help='number of images to put in the validation set')

def prepare_example(image_path, annotations):
    """
    Convert the annotations of one image into a tf.Example proto.
    :param image_path: path of the image file
    :param annotations: filtered annotations of this image
    :return: tf.train.Example
    """
    # 1. Read the encoded image and decode it into an array
    with open(image_path, 'rb') as fid:
        encoded_png = fid.read()
    encoded_png_io = io.BytesIO(encoded_png)
    image = pil.open(encoded_png_io)
    image = np.asarray(image)

    # 2. Build the values needed by the feature dictionary
    # SHA-256 digest of the encoded image
    key = hashlib.sha256(encoded_png).hexdigest()

    # Image size, used to normalize the coordinates
    width = int(image.shape[1])
    height = int(image.shape[0])

    # Box coordinates normalized to [0, 1]
    xmin_norm = annotations['2d_bbox_left'] / float(width)
    ymin_norm = annotations['2d_bbox_top'] / float(height)
    xmax_norm = annotations['2d_bbox_right'] / float(width)
    ymax_norm = annotations['2d_bbox_bottom'] / float(height)

    # Other fields: difficulty flags and class names as bytes
    difficult_obj = [0] * len(xmin_norm)
    classes_text = [x.encode('utf8') for x in annotations['type']]

    # 3. Build the tf.train.Example
    example = tf.train.Example(features=tf.train.Features(feature={
        'image/height': feature_parse.int64_feature(height),
        'image/width': feature_parse.int64_feature(width),
        'image/filename': feature_parse.bytes_feature(image_path.encode('utf8')),
        'image/source_id': feature_parse.bytes_feature(image_path.encode('utf8')),
        'image/key/sha256': feature_parse.bytes_feature(key.encode('utf8')),
        'image/encoded': feature_parse.bytes_feature(encoded_png),
        'image/format': feature_parse.bytes_feature('png'.encode('utf8')),
        'image/object/bbox/xmin': feature_parse.float_list_feature(xmin_norm),
        'image/object/bbox/xmax': feature_parse.float_list_feature(xmax_norm),
        'image/object/bbox/ymin': feature_parse.float_list_feature(ymin_norm),
        'image/object/bbox/ymax': feature_parse.float_list_feature(ymax_norm),
        'image/object/class/text': feature_parse.bytes_list_feature(classes_text),
        'image/object/difficult': feature_parse.int64_list_feature(difficult_obj),
        'image/object/truncated': feature_parse.float_list_feature(
            annotations['truncated'])
    }))

    return example

def filter_annotations(img_all_annotations, used_classes):
    """
    Drop annotations of unused classes and annotations that overlap DontCare regions.
    :param img_all_annotations: all annotations of one image
    :param used_classes: classes to keep
    :return: dict of filtered annotations
    """
    img_filtered_annotations = {}

    # 1. Record the indices of the annotations whose class we want to train on,
    #    so that the matching coordinates can be selected afterwards
    relevant_annotation_indices = [
        i for i, x in enumerate(img_all_annotations['type']) if x in used_classes
    ]

    # 2. Select every field of the kept annotations by index
    for key in img_all_annotations.keys():
        img_filtered_annotations[key] = (
            img_all_annotations[key][relevant_annotation_indices])

    # 3. If 'dontcare' is among the requested classes, collect the DontCare boxes
    #    and remove every box that overlaps one of them
    if 'dontcare' in used_classes:
        dont_care_indices = [i for i,
                             x in enumerate(img_filtered_annotations['type'])
                             if x == 'dontcare']

        # Bounding box format: [y_min, x_min, y_max, x_max]
        all_boxes = np.stack([img_filtered_annotations['2d_bbox_top'],
                              img_filtered_annotations['2d_bbox_left'],
                              img_filtered_annotations['2d_bbox_bottom'],
                              img_filtered_annotations['2d_bbox_right']],
                             axis=1)

        # Compute the IoU between boxes, for example for a label file such as:
        # Truck 0.00 0 -1.57 599.41 156.40 629.75 189.25 2.85 2.63 12.34 0.47 1.49 69.44 -1.56
        # DontCare -1 -1 -10 503.89 169.71 590.61 190.13 -1 -1 -1 -1000 -1000 -1000 -10
        # DontCare -1 -1 -10 511.35 174.96 527.81 187.45 -1 -1 -1 -1000 -1000 -1000 -10
        # DontCare -1 -1 -10 532.37 176.35 542.68 185.27 -1 -1 -1 -1000 -1000 -1000 -10
        # DontCare -1 -1 -10 559.62 175.83 575.40 183.15 -1 -1 -1 -1000 -1000 -1000 -10

        # IoU of every box against every DontCare box
        ious = iou(boxes1=all_boxes,
                   boxes2=all_boxes[dont_care_indices])

        # Remove all bounding boxes that overlap a DontCare region
        if ious.size > 0:
            # np.amax returns the maximum value per row, not its index.
            # max IoU > 0.0 means the box overlaps at least one DontCare box;
            # max IoU == 0.0 means it overlaps none of them.
            boxes_to_remove = np.amax(ious, axis=1) > 0.0
            for key in img_all_annotations.keys():
                # np.logical_not inverts the mask, so only the boxes that do
                # not overlap any DontCare region are kept.
                img_filtered_annotations[key] = (
                    img_filtered_annotations[key][np.logical_not(boxes_to_remove)])

    return img_filtered_annotations
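The DontCare filtering above can be illustrated with a small plain-Python sketch. Note that this branch only runs when `dontcare` is listed in `--classes_to_use`, which is not the case for the default value. The helper names and the "car" box below are invented for the example; the Truck and DontCare boxes come from the sample label lines quoted in the comments.

```python
def pair_iou(a, b):
    """IoU of two boxes given as [y_min, x_min, y_max, x_max]."""
    ih = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iw = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ih * iw
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def drop_dontcare_overlaps(types, boxes):
    """Keep only the types whose box overlaps no DontCare region."""
    dontcare = [b for t, b in zip(types, boxes) if t == 'dontcare']
    kept = []
    for t, b in zip(types, boxes):
        # A DontCare box overlaps itself (IoU = 1), so it is removed as well.
        if all(pair_iou(b, d) == 0.0 for d in dontcare):
            kept.append(t)
    return kept


types = ['truck', 'car', 'dontcare']
boxes = [
    [156.40, 599.41, 189.25, 629.75],  # Truck from the sample label
    [170.00, 580.00, 188.00, 600.00],  # made-up car overlapping the DontCare region
    [169.71, 503.89, 190.13, 590.61],  # first DontCare region from the sample label
]
print(drop_dontcare_overlaps(types, boxes))
```

Only the truck survives: the made-up car touches the DontCare region, and the DontCare box is dropped because it overlaps itself.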

def read_annotation_file(filename):
    with open(filename) as f:
        content = f.readlines()

    # Split every line into its space-separated fields
    content = [x.strip().split(' ') for x in content]

    # Store the fields in a dictionary of arrays
    anno = dict()
    anno['type'] = np.array([x[0].lower() for x in content])
    anno['truncated'] = np.array([float(x[1]) for x in content])
    anno['occluded'] = np.array([int(x[2]) for x in content])
    anno['alpha'] = np.array([float(x[3]) for x in content])

    anno['2d_bbox_left'] = np.array([float(x[4]) for x in content])
    anno['2d_bbox_top'] = np.array([float(x[5]) for x in content])
    anno['2d_bbox_right'] = np.array([float(x[6]) for x in content])
    anno['2d_bbox_bottom'] = np.array([float(x[7]) for x in content])

    return anno
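As a quick check of the field layout that `read_annotation_file` relies on, the sketch below parses a single label line (the Truck line quoted earlier in this file). The function name `parse_kitti_line` is hypothetical and only covers the first eight fields used for 2D detection.

```python
def parse_kitti_line(line):
    """Split one KITTI label line into the fields used for 2D detection."""
    fields = line.strip().split(' ')
    return {
        'type': fields[0].lower(),
        'truncated': float(fields[1]),
        'occluded': int(fields[2]),
        'alpha': float(fields[3]),
        # 2D box in pixels: left, top, right, bottom
        'bbox': tuple(float(v) for v in fields[4:8]),
    }


sample = ('Truck 0.00 0 -1.57 599.41 156.40 629.75 189.25 '
          '2.85 2.63 12.34 0.47 1.49 69.44 -1.56')
record = parse_kitti_line(sample)
print(record['type'], record['bbox'])
```

The remaining fields (3D dimensions, location and rotation) are ignored here, just as they are in the conversion script.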

def convert_kitti_to_tfrecords(data_dir, output_path, classes_to_use,
                               validation_set_size):
    """
    Convert the KITTI detection dataset to TFRecords.
    :param data_dir: source data directory
    :param output_path: output directory
    :param classes_to_use: classes to keep
    :param validation_set_size: number of validation images
    :return: None
    """
    train_count = 0
    val_count = 0

    # 1. Locate the annotations and images, and open the TFRecord writers
    # Annotation directory
    annotation_dir = os.path.join(data_dir,
                                  'training',
                                  'label_2')

    # Image directory
    image_dir = os.path.join(data_dir,
                             'data_object_image_2',
                             'training',
                             'image_2')

    # os.path.join works whether or not output_path ends with a slash
    train_writer = tf.io.TFRecordWriter(os.path.join(output_path, 'train.tfrecord'))
    val_writer = tf.io.TFRecordWriter(os.path.join(output_path, 'val.tfrecord'))

    # 2. List all images; for each one read the image and its annotations
    #    and write them to a TFRecord file
    images = sorted(os.listdir(image_dir))
    for img_name in images:

        # (1) Extract the image number, used to find the matching label file
        img_num = int(img_name.split('.')[0])

        # (2) Read the label file
        # The number is zero-padded to 6 digits to match the label file name
        img_anno = read_annotation_file(os.path.join(annotation_dir,
                                                     str(img_num).zfill(6) + '.txt'))

        # (3) Filter the labels
        # Drop unused classes and annotations overlapping DontCare regions
        annotation_for_image = filter_annotations(img_anno, classes_to_use)

        # (4) Write to the training or validation TFRecord file
        # Combine the image and its filtered annotations into one example
        image_path = os.path.join(image_dir, img_name)
        example = prepare_example(image_path, annotation_for_image)

        # Images numbered below validation_set_size go to the validation set,
        # all others go to the training set
        is_validation_img = img_num < validation_set_size
        if is_validation_img:
            val_writer.write(example.SerializeToString())
            val_count += 1
        else:
            train_writer.write(example.SerializeToString())
            train_count += 1

    train_writer.close()
    val_writer.close()

def main(args):
    convert_kitti_to_tfrecords(
        data_dir=args.data_dir,
        output_path=args.output_path,
        classes_to_use=args.classes_to_use.split(','),
        validation_set_size=args.validation_set_size)


if __name__ == '__main__':
    args = parser.parse_args(sys.argv[1:])
    main(args)

feature_parse.py

import tensorflow as tf

def int64_feature(value):
    return tf.train.Feature(int64_list=tf.train.Int64List(value=[value]))


def int64_list_feature(value):
    return tf.train.Feature(int64_list=tf.train.Int64List(value=value))


def bytes_feature(value):
    return tf.train.Feature(bytes_list=tf.train.BytesList(value=[value]))


def bytes_list_feature(value):
    return tf.train.Feature(bytes_list=tf.train.BytesList(value=value))


def float_list_feature(value):
    return tf.train.Feature(float_list=tf.train.FloatList(value=value))

def read_examples_list(path):
    """Read list of training or validation examples.

    The file is assumed to contain a single example per line where the first
    token in the line is an identifier that allows us to find the image and
    annotation xml for that example.

    For example, the line:
    xyz 3
    would allow us to find files xyz.jpg and xyz.xml (the 3 would be ignored).

    Args:
      path: absolute path to examples list file.

    Returns:
      list of example identifiers (strings).
    """
    # tf.gfile was moved to tf.io.gfile in TensorFlow 2
    with tf.io.gfile.GFile(path) as fid:
        lines = fid.readlines()
    # Keep only the first token of each line, as described in the docstring
    return [line.strip().split(' ')[0] for line in lines]

def recursive_parse_xml_to_dict(xml):
    """Recursively parses XML contents to python dict.

    We assume that `object` tags are the only ones that can appear
    multiple times at the same level of a tree.

    Args:
      xml: xml tree obtained by parsing XML file contents using lxml.etree

    Returns:
      Python dictionary holding XML contents.
    """
    if not xml:
        return {xml.tag: xml.text}
    result = {}
    for child in xml:
        child_result = recursive_parse_xml_to_dict(child)
        if child.tag != 'object':
            result[child.tag] = child_result[child.tag]
        else:
            if child.tag not in result:
                result[child.tag] = []
            result[child.tag].append(child_result[child.tag])
    return {xml.tag: result}

IoU.py

import numpy as np

def area(boxes):
    """Computes area of boxes.

    Args:
      boxes: Numpy array with shape [N, 4] holding N boxes

    Returns:
      a numpy array with shape [N*1] representing box areas
    """
    return (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])


def intersection(boxes1, boxes2):
    """Compute pairwise intersection areas between boxes.

    Args:
      boxes1: a numpy array with shape [N, 4] holding N boxes
      boxes2: a numpy array with shape [M, 4] holding M boxes

    Returns:
      a numpy array with shape [N*M] representing pairwise intersection area
    """
    [y_min1, x_min1, y_max1, x_max1] = np.split(boxes1, 4, axis=1)
    [y_min2, x_min2, y_max2, x_max2] = np.split(boxes2, 4, axis=1)

    all_pairs_min_ymax = np.minimum(y_max1, np.transpose(y_max2))
    all_pairs_max_ymin = np.maximum(y_min1, np.transpose(y_min2))
    intersect_heights = np.maximum(
        np.zeros(all_pairs_max_ymin.shape),
        all_pairs_min_ymax - all_pairs_max_ymin)
    all_pairs_min_xmax = np.minimum(x_max1, np.transpose(x_max2))
    all_pairs_max_xmin = np.maximum(x_min1, np.transpose(x_min2))
    intersect_widths = np.maximum(
        np.zeros(all_pairs_max_xmin.shape),
        all_pairs_min_xmax - all_pairs_max_xmin)
    return intersect_heights * intersect_widths

def iou(boxes1, boxes2):
    """Computes pairwise intersection-over-union between box collections.

    Args:
      boxes1: a numpy array with shape [N, 4] holding N boxes.
      boxes2: a numpy array with shape [M, 4] holding M boxes.

    Returns:
      a numpy array with shape [N, M] representing pairwise iou scores.
    """
    intersect = intersection(boxes1, boxes2)
    area1 = area(boxes1)
    area2 = area(boxes2)
    union = np.expand_dims(area1, axis=1) + np.expand_dims(
        area2, axis=0) - intersect
    return intersect / union


def ioa(boxes1, boxes2):
    """Computes pairwise intersection-over-area between box collections.

    Intersection-over-area (ioa) between two boxes box1 and box2 is defined as
    their intersection area over box2's area. Note that ioa is not symmetric,
    that is, IOA(box1, box2) != IOA(box2, box1).

    Args:
      boxes1: a numpy array with shape [N, 4] holding N boxes.
      boxes2: a numpy array with shape [M, 4] holding M boxes.

    Returns:
      a numpy array with shape [N, M] representing pairwise ioa scores.
    """
    intersect = intersection(boxes1, boxes2)
    areas = np.expand_dims(area(boxes2), axis=0)
    return intersect / areas

detect.py

import os

# Set before importing TensorFlow so that the C++ log level takes effect
os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"

import time
import logging
import cv2
import numpy as np
import tensorflow as tf
from yolov3_tf2.models import (
    YoloV3, YoloV3Tiny
)
from yolov3_tf2.dataset import transform_images
from yolov3_tf2.utils import draw_outputs
import argparse
import sys

# logging.info() messages are hidden at the default WARNING level
logging.getLogger().setLevel(logging.INFO)

parser = argparse.ArgumentParser()
parser.add_argument('--classes', type=str, default='./data/kitti.names',
                    help='path to the class names file')
parser.add_argument('--weights', type=str, default='./checkpoints/yolov3_train_1.tf',
                    help='path to the trained weights')
# NOTE: with type=bool any non-empty string (even "False") is parsed as True;
# omit the flag to keep the default
parser.add_argument('--tiny', type=bool, default=False,
                    help='model type to load: yolov3 or yolov3-tiny')
parser.add_argument('--size', type=int, default=416,
                    help='image size')
parser.add_argument('--image', type=str, default='./data/kitti/data_object_image_2/testing/image_2/000008.png',
                    help='path to the input image')
parser.add_argument('--output', type=str, default='./output.jpg',
                    help='path to the output image')
parser.add_argument('--num_classes', type=int, default=6,
                    help='total number of classes')

def main(args):
    # 1. Build the model and load the weights
    if args.tiny:
        yolo = YoloV3Tiny(classes=args.num_classes)
    else:
        yolo = YoloV3(classes=args.num_classes)

    yolo.load_weights(args.weights)
    logging.info('weights loaded')

    # Load the class names
    class_names = [c.strip() for c in open(args.classes).readlines()]

    # 2. Load and preprocess the image, then run the model on it
    img = tf.image.decode_image(open(args.image, 'rb').read(), channels=3)
    img = tf.expand_dims(img, 0)
    img = transform_images(img, args.size)

    # Measure the inference time
    t1 = time.time()
    boxes, scores, classes, nums = yolo(img)
    t2 = time.time()
    logging.info('time: {}'.format(t2 - t1))

    logging.info('detections:')
    print(boxes, scores, classes, nums)
    for i in range(nums[0]):
        logging.info('\t{}, {}, {}'.format(class_names[int(classes[0][i])],
                                           np.array(scores[0][i]),
                                           np.array(boxes[0][i])))

    # 3. Draw the detected boxes on the image and write it to the output path
    img = cv2.imread(args.image)
    img = draw_outputs(img, (boxes, scores, classes, nums), class_names)
    cv2.imwrite(args.output, img)
    logging.info('output saved to: {}'.format(args.output))


if __name__ == '__main__':
    args = parser.parse_args(sys.argv[1:])
    main(args)
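A note on boolean command-line options such as `--tiny`: declaring them with `type=bool` is a common argparse pitfall, because the string given on the command line is passed to `bool()`, and any non-empty string is truthy. The sketch below contrasts this with a small converter; `str2bool` is a hypothetical helper, not part of the YOLO source.

```python
import argparse


def str2bool(text):
    """Hypothetical helper: map common spellings to a real boolean."""
    value = text.strip().lower()
    if value in ('1', 'true', 'yes', 'y'):
        return True
    if value in ('0', 'false', 'no', 'n'):
        return False
    raise argparse.ArgumentTypeError('expected a boolean, got {!r}'.format(text))


naive = argparse.ArgumentParser()
naive.add_argument('--tiny', type=bool, default=False)

safer = argparse.ArgumentParser()
safer.add_argument('--tiny', type=str2bool, default=False)

# bool('False') is True because any non-empty string is truthy.
naive_value = naive.parse_args(['--tiny', 'False']).tiny
safer_value = safer.parse_args(['--tiny', 'False']).tiny
print(naive_value, safer_value)
```

An alternative design is `action='store_true'`, which turns the option into a switch that takes no value at all.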

train.py

import os

# Set before importing TensorFlow so that the C++ log level takes effect
os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"

import logging
import tensorflow as tf
import numpy as np
import cv2
import sys
import argparse
from tensorflow.keras.callbacks import (
    ReduceLROnPlateau,
    EarlyStopping,
    ModelCheckpoint,
    TensorBoard
)
from yolov3_tf2.models import (
    YoloV3, YoloV3Tiny, YoloLoss,
    yolo_anchors, yolo_anchor_masks,
    yolo_tiny_anchors, yolo_tiny_anchor_masks
)
from yolov3_tf2.utils import freeze_all
import yolov3_tf2.dataset as dataset

# logging.info() messages are hidden at the default WARNING level
logging.getLogger().setLevel(logging.INFO)

parser = argparse.ArgumentParser()
parser.add_argument('--dataset', type=str, default='./data/kitti_tfrecords/train.tfrecord',
                    help='path to the training dataset')
parser.add_argument('--val_dataset', type=str, default='./data/kitti_tfrecords/val.tfrecord',
                    help='path to the validation dataset')
# NOTE: with type=bool any non-empty string (even "False") is parsed as True;
# omit the flag to keep the default
parser.add_argument('--tiny', type=bool, default=False,
                    help='model type to load: yolov3 or yolov3-tiny')
parser.add_argument('--weights', type=str, default='./checkpoints/yolov3_train_1.tf',
                    help='path to the pretrained weights')
parser.add_argument('--classes', type=str, default='./data/kitti.names',
                    help='path to the class names file')
parser.add_argument('--mode', type=str, default='fit', choices=['fit', 'eager_tf'],
                    help='fit: model.fit mode, eager_tf: custom GradientTape mode')
parser.add_argument('--transfer', type=str, default='none', choices=['none', 'darknet',
                                                                     'no_output', 'frozen',
                                                                     'fine_tune'],
                    help='none: train all layers, '
                         'darknet: freeze darknet and re-initialize the other yolo_ layers, '
                         'no_output: re-initialize the output layers and freeze the rest, '
                         'frozen: transfer and freeze all layers, '
                         'fine_tune: transfer and freeze darknet only')
parser.add_argument('--size', type=int, default=416,
                    help='image size')
parser.add_argument('--epochs', type=int, default=2,
                    help='number of epochs')
parser.add_argument('--batch_size', type=int, default=8,
                    help='batch size')
parser.add_argument('--learning_rate', type=float, default=1e-3,
                    help='learning rate')
parser.add_argument('--num_classes', type=int, default=6,
                    help='number of classes')

def main(args):
    # 1. Build either YoloV3Tiny or the full YoloV3, depending on --tiny
    if args.tiny:
        model = YoloV3Tiny(args.size, training=True,
                           classes=args.num_classes)
        anchors = yolo_tiny_anchors
        anchor_masks = yolo_tiny_anchor_masks
    else:
        model = YoloV3(args.size, training=True, classes=args.num_classes)
        anchors = yolo_anchors
        anchor_masks = yolo_anchor_masks

    # 2. Load the training data given on the command line
    if args.dataset:
        train_dataset = dataset.load_tfrecord_dataset(
            args.dataset, args.classes)
    train_dataset = train_dataset.shuffle(buffer_size=1024)
    train_dataset = train_dataset.batch(args.batch_size)
    # The last argument was hard-coded to 80 (the COCO class count); it is not
    # used inside transform_targets, so num_classes is passed for consistency
    train_dataset = train_dataset.map(lambda x, y: (
        dataset.transform_images(x, args.size),
        dataset.transform_targets(y, anchors, anchor_masks, args.num_classes)))
    train_dataset = train_dataset.prefetch(
        buffer_size=tf.data.experimental.AUTOTUNE)

    # 3. Load the validation data given on the command line
    if args.val_dataset:
        val_dataset = dataset.load_tfrecord_dataset(
            args.val_dataset, args.classes)
    val_dataset = val_dataset.batch(args.batch_size)
    val_dataset = val_dataset.map(lambda x, y: (
        dataset.transform_images(x, args.size),
        dataset.transform_targets(y, anchors, anchor_masks, args.num_classes)))

    # 4. Decide whether to use transfer learning
    if args.transfer != 'none':
        # Load the pretrained weights given by --weights
        model.load_weights(args.weights)
        if args.transfer == 'fine_tune':
            # Freeze darknet only
            darknet = model.get_layer('yolo_darknet')
            freeze_all(darknet)
        elif args.transfer == 'frozen':
            # Freeze all layers
            freeze_all(model)
        else:
            # Build a freshly initialized model to reset part of the network
            if args.tiny:
                init_model = YoloV3Tiny(
                    args.size, training=True, classes=args.num_classes)
            else:
                init_model = YoloV3(
                    args.size, training=True, classes=args.num_classes)

            # Transfer darknet only
            if args.transfer == 'darknet':
                # Re-initialize every yolo_ layer except darknet, freeze the rest
                for l in model.layers:
                    if l.name != 'yolo_darknet' and l.name.startswith('yolo_'):
                        l.set_weights(init_model.get_layer(
                            l.name).get_weights())
                    else:
                        freeze_all(l)
            elif args.transfer == 'no_output':
                # Re-initialize the output layers, freeze the rest
                for l in model.layers:
                    if l.name.startswith('yolo_output'):
                        l.set_weights(init_model.get_layer(
                            l.name).get_weights())
                    else:
                        freeze_all(l)

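    # 5. Define the optimizer and the loss functions (one per output scale)
    # `lr` is a deprecated alias; `learning_rate` is the TF2 argument name
    optimizer = tf.keras.optimizers.Adam(learning_rate=args.learning_rate)
    loss = [YoloLoss(anchors[mask], classes=args.num_classes)
            for mask in anchor_masks]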

    # 6. Training loop in the selected mode
    # Eager mode is easier to debug;
    # the Keras model.fit mode is simpler to use
    if args.mode == 'eager_tf':
        # Metrics that track the average loss
        avg_loss = tf.keras.metrics.Mean('loss', dtype=tf.float32)
        avg_val_loss = tf.keras.metrics.Mean('val_loss', dtype=tf.float32)

        # Optimization loop
        for epoch in range(1, args.epochs + 1):
            for batch, (images, labels) in enumerate(train_dataset):
                with tf.GradientTape() as tape:
                    # Compute the model outputs and the loss
                    outputs = model(images, training=True)
                    regularization_loss = tf.reduce_sum(model.losses)
                    pred_loss = []
                    for output, label, loss_fn in zip(outputs, labels, loss):
                        # Loss of one output scale against its labels
                        pred_loss.append(loss_fn(label, output))
                    # Total loss = sum of the prediction losses + regularization loss
                    total_loss = tf.reduce_sum(pred_loss) + regularization_loss

                # Compute the gradients and update the weights
                grads = tape.gradient(total_loss, model.trainable_variables)
                optimizer.apply_gradients(
                    zip(grads, model.trainable_variables))

                # Log the losses
                logging.info("{}_train_{}, {}, {}".format(
                    epoch, batch, total_loss.numpy(),
                    list(map(lambda x: np.sum(x.numpy()), pred_loss))))
                avg_loss.update_state(total_loss)

            # Evaluate on the validation dataset
            for batch, (images, labels) in enumerate(val_dataset):
                outputs = model(images)
                # Compute the loss
                regularization_loss = tf.reduce_sum(model.losses)
                pred_loss = []
                # Loss of each output scale against its labels
                for output, label, loss_fn in zip(outputs, labels, loss):
                    pred_loss.append(loss_fn(label, output))
                total_loss = tf.reduce_sum(pred_loss) + regularization_loss

                # Log the total loss
                logging.info("{}_val_{}, {}, {}".format(
                    epoch, batch, total_loss.numpy(),
                    list(map(lambda x: np.sum(x.numpy()), pred_loss))))
                avg_val_loss.update_state(total_loss)

            logging.info("{}, train: {}, val: {}".format(
                epoch,
                avg_loss.result().numpy(),
                avg_val_loss.result().numpy()))

            # Reset the metrics and save the weights for this epoch
            avg_loss.reset_states()
            avg_val_loss.reset_states()
            model.save_weights(
                'checkpoints/yolov3_train_{}.tf'.format(epoch))

    else:
        model.compile(optimizer=optimizer, loss=loss)

        callbacks = [
            ReduceLROnPlateau(verbose=1),
            EarlyStopping(patience=3, verbose=1),
            ModelCheckpoint('checkpoints/yolov3_train_{epoch}.tf',
                            verbose=1, save_weights_only=True),
            TensorBoard(log_dir='logs')
        ]

        history = model.fit(train_dataset,
                            epochs=args.epochs,
                            callbacks=callbacks,
                            validation_data=val_dataset)


if __name__ == '__main__':
    args = parser.parse_args(sys.argv[1:])
    main(args)

batch_norm.py

import tensorflow as tf

class BatchNormalization(tf.keras.layers.BatchNormalization):
    """
    Make trainable=False freeze BN for real (the og version is sad)
    """

    def call(self, x, training=False):
        if training is None:
            training = tf.constant(False)
        # Run in training mode only when the layer is also trainable
        training = tf.logical_and(training, self.trainable)
        return super().call(x, training)

dataset.py

import tensorflow as tf

@tf.function
def transform_targets_for_output(y_true, grid_size, anchor_idxs, classes):
    # y_true: (N, boxes, (x1, y1, x2, y2, class, best_anchor))
    N = tf.shape(y_true)[0]

    # y_true_out: (N, grid, grid, anchors, [x, y, w, h, obj, class])
    y_true_out = tf.zeros(
        (N, grid_size, grid_size, tf.shape(anchor_idxs)[0], 6))

    anchor_idxs = tf.cast(anchor_idxs, tf.int32)

    indexes = tf.TensorArray(tf.int32, 1, dynamic_size=True)
    updates = tf.TensorArray(tf.float32, 1, dynamic_size=True)
    idx = 0
    for i in tf.range(N):
        for j in tf.range(tf.shape(y_true)[1]):
            if tf.equal(y_true[i][j][2], 0):
                continue
            anchor_eq = tf.equal(
                anchor_idxs, tf.cast(y_true[i][j][5], tf.int32))

            if tf.reduce_any(anchor_eq):
                box = y_true[i][j][0:4]
                box_xy = (y_true[i][j][0:2] + y_true[i][j][2:4]) / 2

                anchor_idx = tf.cast(tf.where(anchor_eq), tf.int32)
                grid_xy = tf.cast(box_xy // (1/grid_size), tf.int32)

                # grid[y][x][anchor] = (tx, ty, bw, bh, obj, class)
                indexes = indexes.write(
                    idx, [i, grid_xy[1], grid_xy[0], anchor_idx[0][0]])
                updates = updates.write(
                    idx, [box[0], box[1], box[2], box[3], 1, y_true[i][j][4]])
                idx += 1

    # tf.print(indexes.stack())
    # tf.print(updates.stack())

    return tf.tensor_scatter_nd_update(
        y_true_out, indexes.stack(), updates.stack())

def transform_targets(y_train, anchors, anchor_masks, classes):
    y_outs = []
    # Coarsest grid for a 416x416 input (416 / 32); doubled for each finer scale
    grid_size = 13

    # calculate anchor index for true boxes
    anchors = tf.cast(anchors, tf.float32)
    anchor_area = anchors[..., 0] * anchors[..., 1]
    box_wh = y_train[..., 2:4] - y_train[..., 0:2]
    box_wh = tf.tile(tf.expand_dims(box_wh, -2),
                     (1, 1, tf.shape(anchors)[0], 1))
    box_area = box_wh[..., 0] * box_wh[..., 1]
    intersection = tf.minimum(box_wh[..., 0], anchors[..., 0]) * \
        tf.minimum(box_wh[..., 1], anchors[..., 1])
    iou = intersection / (box_area + anchor_area - intersection)
    anchor_idx = tf.cast(tf.argmax(iou, axis=-1), tf.float32)
    anchor_idx = tf.expand_dims(anchor_idx, axis=-1)

    y_train = tf.concat([y_train, anchor_idx], axis=-1)

    for anchor_idxs in anchor_masks:
        y_outs.append(transform_targets_for_output(
            y_train, grid_size, anchor_idxs, classes))
        grid_size *= 2

    return tuple(y_outs)
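The target assignment in `transform_targets` can be followed by hand for a single box. The plain-Python sketch below picks the best anchor by comparing widths and heights only, then finds the output scale and grid cell. The anchor values and masks are the YoloV3 ones listed in models.py; the function names and the sample boxes are assumptions for illustration, and the grid sizes assume a 416x416 input.

```python
# Anchor sizes (width, height) in pixels for a 416x416 input, as in models.py.
ANCHORS = [(10, 13), (16, 30), (33, 23), (30, 61), (62, 45),
           (59, 119), (116, 90), (156, 198), (373, 326)]
ANCHOR_MASKS = [[6, 7, 8], [3, 4, 5], [0, 1, 2]]
INPUT_SIZE = 416


def best_anchor(box_w, box_h):
    """Index of the anchor whose shape has the highest IoU with the box.

    Both shapes are compared as if they shared the same corner, so only
    width and height matter. Inputs are normalized to [0, 1].
    """
    best_idx, best_iou = -1, -1.0
    for idx, (aw, ah) in enumerate(ANCHORS):
        aw, ah = aw / INPUT_SIZE, ah / INPUT_SIZE
        inter = min(box_w, aw) * min(box_h, ah)
        iou = inter / (box_w * box_h + aw * ah - inter)
        if iou > best_iou:
            best_idx, best_iou = idx, iou
    return best_idx


def assign(box):
    """Return (scale, grid_size, grid_x, grid_y, anchor) for a normalized box."""
    x1, y1, x2, y2 = box
    anchor = best_anchor(x2 - x1, y2 - y1)
    grid_size = 13
    for scale, mask in enumerate(ANCHOR_MASKS):
        if anchor in mask:
            cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
            return scale, grid_size, int(cx * grid_size), int(cy * grid_size), anchor
        grid_size *= 2  # 13 -> 26 -> 52


print(assign((0.40, 0.40, 0.70, 0.65)))  # a large box
print(assign((0.10, 0.10, 0.13, 0.14)))  # a small box
```

Large boxes end up on the coarse 13x13 grid with the large anchors, while small boxes are assigned to the fine 52x52 grid.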

def transform_images(x_train, size):
    x_train = tf.image.resize(x_train, (size, size))
    x_train = x_train / 255
    return x_train

# https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/using_your_own_dataset.md#conversion-script-outline-conversion-script-outline

IMAGE_FEATURE_MAP = {
    'image/width': tf.io.FixedLenFeature([], tf.int64),
    'image/height': tf.io.FixedLenFeature([], tf.int64),
    'image/filename': tf.io.FixedLenFeature([], tf.string),
    'image/source_id': tf.io.FixedLenFeature([], tf.string),
    'image/key/sha256': tf.io.FixedLenFeature([], tf.string),
    'image/encoded': tf.io.FixedLenFeature([], tf.string),
    'image/format': tf.io.FixedLenFeature([], tf.string),
    'image/object/bbox/xmin': tf.io.VarLenFeature(tf.float32),
    'image/object/bbox/ymin': tf.io.VarLenFeature(tf.float32),
    'image/object/bbox/xmax': tf.io.VarLenFeature(tf.float32),
    'image/object/bbox/ymax': tf.io.VarLenFeature(tf.float32),
    'image/object/class/text': tf.io.VarLenFeature(tf.string),
    # 'image/object/class/label': tf.io.VarLenFeature(tf.int64),
    'image/object/difficult': tf.io.VarLenFeature(tf.int64),
    # 'image/object/truncated': tf.io.VarLenFeature(tf.int64),
    'image/object/truncated': tf.io.VarLenFeature(tf.float32),
    'image/object/view': tf.io.VarLenFeature(tf.string),
}

def parse_tfrecord(tfrecord, class_table):
    x = tf.io.parse_single_example(tfrecord, IMAGE_FEATURE_MAP)
    # The KITTI records store PNG bytes; decode_jpeg also accepts PNG input
    x_train = tf.image.decode_jpeg(x['image/encoded'], channels=3)
    x_train = tf.image.resize(x_train, (416, 416))

    class_text = tf.sparse.to_dense(
        x['image/object/class/text'], default_value='')
    labels = tf.cast(class_table.lookup(class_text), tf.float32)
    y_train = tf.stack([tf.sparse.to_dense(x['image/object/bbox/xmin']),
                        tf.sparse.to_dense(x['image/object/bbox/ymin']),
                        tf.sparse.to_dense(x['image/object/bbox/xmax']),
                        tf.sparse.to_dense(x['image/object/bbox/ymax']),
                        labels], axis=1)

    # Pad every image to 100 boxes so that examples can be batched
    paddings = [[0, 100 - tf.shape(y_train)[0]], [0, 0]]
    y_train = tf.pad(y_train, paddings)

    return x_train, y_train

def load_tfrecord_dataset(file_pattern, class_file):
    LINE_NUMBER = -1  # TODO: use tf.lookup.TextFileIndex.LINE_NUMBER
    class_table = tf.lookup.StaticHashTable(tf.lookup.TextFileInitializer(
        class_file, tf.string, 0, tf.int64, LINE_NUMBER, delimiter="\n"), -1)

    files = tf.data.Dataset.list_files(file_pattern)
    dataset = files.flat_map(tf.data.TFRecordDataset)
    return dataset.map(lambda x: parse_tfrecord(x, class_table))


def load_fake_dataset():
    x_train = tf.image.decode_jpeg(
        open('./data/girl.png', 'rb').read(), channels=3)
    x_train = tf.expand_dims(x_train, axis=0)

    labels = [
        [0.18494931, 0.03049111, 0.9435849, 0.96302897, 0],
        [0.01586703, 0.35938117, 0.17582396, 0.6069674, 56],
        [0.09158827, 0.48252046, 0.26967454, 0.6403017, 67]
    ] + [[0, 0, 0, 0, 0]] * 5
    y_train = tf.convert_to_tensor(labels, tf.float32)
    y_train = tf.expand_dims(y_train, axis=0)

    return tf.data.Dataset.from_tensor_slices((x_train, y_train))

models.py

from absl import flags

from absl.flags import FLAGS

import numpy as np

import tensorflow as tf

from tensorflow.keras import Model

from tensorflow.keras.layers import (
    Add,
    Concatenate,
    Conv2D,
    Input,
    Lambda,
    LeakyReLU,
    MaxPool2D,
    UpSampling2D,
    ZeroPadding2D,
)
from tensorflow.keras.regularizers import l2
from tensorflow.keras.losses import (
    binary_crossentropy,
    sparse_categorical_crossentropy
)
from .batch_norm import BatchNormalization
from .utils import broadcast_iou

yolo_anchors = np.array([(10, 13), (16, 30), (33, 23), (30, 61), (62, 45),
                         (59, 119), (116, 90), (156, 198), (373, 326)],
                        np.float32) / 416
yolo_anchor_masks = np.array([[6, 7, 8], [3, 4, 5], [0, 1, 2]])

yolo_tiny_anchors = np.array([(10, 14), (23, 27), (37, 58),
                              (81, 82), (135, 169), (344, 319)],
                             np.float32) / 416
yolo_tiny_anchor_masks = np.array([[3, 4, 5], [0, 1, 2]])

def DarknetConv(x, filters, size, strides=1, batch_norm=True):
    if strides == 1:
        padding = 'same'
    else:
        x = ZeroPadding2D(((1, 0), (1, 0)))(x)  # top left half-padding
        padding = 'valid'
    x = Conv2D(filters=filters, kernel_size=size,
               strides=strides, padding=padding,
               use_bias=not batch_norm, kernel_regularizer=l2(0.0005))(x)
    if batch_norm:
        x = BatchNormalization()(x)
        x = LeakyReLU(alpha=0.1)(x)
    return x


def DarknetResidual(x, filters):
    prev = x
    x = DarknetConv(x, filters // 2, 1)
    x = DarknetConv(x, filters, 3)
    x = Add()([prev, x])
    return x


def DarknetBlock(x, filters, blocks):
    x = DarknetConv(x, filters, 3, strides=2)
    for _ in range(blocks):
        x = DarknetResidual(x, filters)
    return x


def Darknet(name=None):
    x = inputs = Input([None, None, 3])
    x = DarknetConv(x, 32, 3)
    x = DarknetBlock(x, 64, 1)
    x = DarknetBlock(x, 128, 2)  # skip connection
    x = x_36 = DarknetBlock(x, 256, 8)  # skip connection
    x = x_61 = DarknetBlock(x, 512, 8)
    x = DarknetBlock(x, 1024, 4)
    return tf.keras.Model(inputs, (x_36, x_61, x), name=name)


def DarknetTiny(name=None):
    x = inputs = Input([None, None, 3])
    x = DarknetConv(x, 16, 3)
    x = MaxPool2D(2, 2, 'same')(x)
    x = DarknetConv(x, 32, 3)
    x = MaxPool2D(2, 2, 'same')(x)
    x = DarknetConv(x, 64, 3)
    x = MaxPool2D(2, 2, 'same')(x)
    x = DarknetConv(x, 128, 3)
    x = MaxPool2D(2, 2, 'same')(x)
    x = x_8 = DarknetConv(x, 256, 3)  # skip connection
    x = MaxPool2D(2, 2, 'same')(x)
    x = DarknetConv(x, 512, 3)
    x = MaxPool2D(2, 1, 'same')(x)
    x = DarknetConv(x, 1024, 3)
    return tf.keras.Model(inputs, (x_8, x), name=name)

def YoloConv(filters, name=None):
    def yolo_conv(x_in):
        if isinstance(x_in, tuple):
            inputs = Input(x_in[0].shape[1:]), Input(x_in[1].shape[1:])
            x, x_skip = inputs

            # concat with skip connection
            x = DarknetConv(x, filters, 1)
            x = UpSampling2D(2)(x)
            x = Concatenate()([x, x_skip])
        else:
            x = inputs = Input(x_in.shape[1:])

        x = DarknetConv(x, filters, 1)
        x = DarknetConv(x, filters * 2, 3)
        x = DarknetConv(x, filters, 1)
        x = DarknetConv(x, filters * 2, 3)
        x = DarknetConv(x, filters, 1)
        return Model(inputs, x, name=name)(x_in)
    return yolo_conv


def YoloConvTiny(filters, name=None):
    def yolo_conv(x_in):
        if isinstance(x_in, tuple):
            inputs = Input(x_in[0].shape[1:]), Input(x_in[1].shape[1:])
            x, x_skip = inputs

            # concat with skip connection
            x = DarknetConv(x, filters, 1)
            x = UpSampling2D(2)(x)
            x = Concatenate()([x, x_skip])
        else:
            x = inputs = Input(x_in.shape[1:])
            x = DarknetConv(x, filters, 1)

        return Model(inputs, x, name=name)(x_in)
    return yolo_conv


def YoloOutput(filters, anchors, classes, name=None):
    def yolo_output(x_in):
        x = inputs = Input(x_in.shape[1:])
        x = DarknetConv(x, filters * 2, 3)
        x = DarknetConv(x, anchors * (classes + 5), 1, batch_norm=False)
        x = Lambda(lambda x: tf.reshape(x, (-1, tf.shape(x)[1], tf.shape(x)[2],
                                            anchors, classes + 5)))(x)
        return tf.keras.Model(inputs, x, name=name)(x_in)
    return yolo_output

def yolo_boxes(pred, anchors, classes):
    # pred: (batch_size, grid, grid, anchors, (x, y, w, h, obj, ...classes))
    grid_size = tf.shape(pred)[1]
    box_xy, box_wh, objectness, class_probs = tf.split(
        pred, (2, 2, 1, classes), axis=-1)

    box_xy = tf.sigmoid(box_xy)
    objectness = tf.sigmoid(objectness)
    class_probs = tf.sigmoid(class_probs)
    pred_box = tf.concat((box_xy, box_wh), axis=-1)  # original xywh for loss

    # !!! grid[x][y] == (y, x)
    grid = tf.meshgrid(tf.range(grid_size), tf.range(grid_size))
    grid = tf.expand_dims(tf.stack(grid, axis=-1), axis=2)  # [gx, gy, 1, 2]

    box_xy = (box_xy + tf.cast(grid, tf.float32)) / \
        tf.cast(grid_size, tf.float32)
    box_wh = tf.exp(box_wh) * anchors

    box_x1y1 = box_xy - box_wh / 2
    box_x2y2 = box_xy + box_wh / 2
    bbox = tf.concat([box_x1y1, box_x2y2], axis=-1)

    return bbox, objectness, class_probs, pred_box
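The decoding performed by `yolo_boxes` can be checked for a single grid cell with the standard library only. The sketch below is a scalar version of the same formulas; `decode_box` and its argument names are assumptions made for this illustration.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def decode_box(raw, cell_x, cell_y, grid_size, anchor_wh):
    """Decode one raw prediction (tx, ty, tw, th) into a normalized corner box."""
    tx, ty, tw, th = raw
    # Center: sigmoid offset inside the cell, plus the cell index, scaled to [0, 1].
    cx = (sigmoid(tx) + cell_x) / grid_size
    cy = (sigmoid(ty) + cell_y) / grid_size
    # Size: the (already normalized) anchor scaled by exp of the raw value.
    w = math.exp(tw) * anchor_wh[0]
    h = math.exp(th) * anchor_wh[1]
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


# All-zero raw outputs in cell (6, 6) of a 13x13 grid with the (116, 90) anchor:
# the box is centered in the cell and has exactly the anchor's size.
anchor = (116 / 416, 90 / 416)
x1, y1, x2, y2 = decode_box((0.0, 0.0, 0.0, 0.0), 6, 6, 13, anchor)
print(x1, y1, x2, y2)
```

Because the sigmoid keeps the offset between 0 and 1, a prediction can only move the center within its own grid cell, while the exponential lets the width and height grow or shrink relative to the anchor.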

def yolo_nms(outputs, anchors, masks, classes):
    # boxes, conf, type
    b, c, t = [], [], []

    for o in outputs:
        b.append(tf.reshape(o[0], (tf.shape(o[0])[0], -1, tf.shape(o[0])[-1])))
        c.append(tf.reshape(o[1], (tf.shape(o[1])[0], -1, tf.shape(o[1])[-1])))
        t.append(tf.reshape(o[2], (tf.shape(o[2])[0], -1, tf.shape(o[2])[-1])))

    bbox = tf.concat(b, axis=1)
    confidence = tf.concat(c, axis=1)
    class_probs = tf.concat(t, axis=1)

    scores = confidence * class_probs
    boxes, scores, classes, valid_detections = tf.image.combined_non_max_suppression(
        boxes=tf.reshape(bbox, (tf.shape(bbox)[0], -1, 1, 4)),
        scores=tf.reshape(
            scores, (tf.shape(scores)[0], -1, tf.shape(scores)[-1])),
        max_output_size_per_class=100,
        max_total_size=100,
        iou_threshold=0.5,
        score_threshold=0.5
    )

    return boxes, scores, classes, valid_detections

def YoloV3(size=None, channels=3, anchors=yolo_anchors,
           masks=yolo_anchor_masks, classes=80, training=False):
    x = inputs = Input([size, size, channels])

    # The backbone returns two intermediate feature maps plus the final one
    x_36, x_61, x = Darknet(name='yolo_darknet')(x)

    x = YoloConv(512, name='yolo_conv_0')(x)
    output_0 = YoloOutput(512, len(masks[0]), classes, name='yolo_output_0')(x)

    x = YoloConv(256, name='yolo_conv_1')((x, x_61))
    output_1 = YoloOutput(256, len(masks[1]), classes, name='yolo_output_1')(x)

    x = YoloConv(128, name='yolo_conv_2')((x, x_36))
    output_2 = YoloOutput(128, len(masks[2]), classes, name='yolo_output_2')(x)

    if training:
        # Training uses the raw head outputs; box decoding and NMS are skipped
        return Model(inputs, (output_0, output_1, output_2), name='yolov3')

    boxes_0 = Lambda(lambda x: yolo_boxes(x, anchors[masks[0]], classes),
                     name='yolo_boxes_0')(output_0)
    boxes_1 = Lambda(lambda x: yolo_boxes(x, anchors[masks[1]], classes),
                     name='yolo_boxes_1')(output_1)
    boxes_2 = Lambda(lambda x: yolo_boxes(x, anchors[masks[2]], classes),
                     name='yolo_boxes_2')(output_2)

    outputs = Lambda(lambda x: yolo_nms(x, anchors, masks, classes),
                     name='yolo_nms')((boxes_0[:3], boxes_1[:3], boxes_2[:3]))

    return Model(inputs, outputs, name='yolov3')
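
To make the three output heads of `YoloV3` more concrete, the sketch below computes the grid size and last-axis width of each scale. It assumes a 416x416 input and the usual YOLOv3 strides of 32, 16 and 8; neither value is stated in this listing, so treat both as assumptions. The helper `head_shapes` is a hypothetical name, and the shape layout follows the `y_pred` comment in `YoloLoss`.

```python
def head_shapes(size=416, classes=80, anchors_per_scale=3, strides=(32, 16, 8)):
    """Per-scale output shape (grid, grid, anchors, 5 + classes), batch axis omitted."""
    return [(size // s, size // s, anchors_per_scale, 5 + classes) for s in strides]


shapes = head_shapes()
# Every grid cell predicts one box per anchor
total_boxes = sum(g * g * a for g, _, a, _ in shapes)

for name, shape in zip(("output_0", "output_1", "output_2"), shapes):
    print(name, shape)
print("candidate boxes before NMS:", total_boxes)
```

For a dataset with a different number of classes only the last dimension changes; the grid sizes depend on the input size alone.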

def YoloV3Tiny(size=None, channels=3, anchors=yolo_tiny_anchors,
               masks=yolo_tiny_anchor_masks, classes=80, training=False):
    x = inputs = Input([size, size, channels])

    x_8, x = DarknetTiny(name='yolo_darknet')(x)

    x = YoloConvTiny(256, name='yolo_conv_0')(x)
    output_0 = YoloOutput(256, len(masks[0]), classes, name='yolo_output_0')(x)

    x = YoloConvTiny(128, name='yolo_conv_1')((x, x_8))
    output_1 = YoloOutput(128, len(masks[1]), classes, name='yolo_output_1')(x)

    if training:
        return Model(inputs, (output_0, output_1), name='yolov3')

    boxes_0 = Lambda(lambda x: yolo_boxes(x, anchors[masks[0]], classes),
                     name='yolo_boxes_0')(output_0)
    boxes_1 = Lambda(lambda x: yolo_boxes(x, anchors[masks[1]], classes),
                     name='yolo_boxes_1')(output_1)

    outputs = Lambda(lambda x: yolo_nms(x, anchors, masks, classes),
                     name='yolo_nms')((boxes_0[:3], boxes_1[:3]))

    return Model(inputs, outputs, name='yolov3_tiny')

def YoloLoss(anchors, classes=80, ignore_thresh=0.5):
    def yolo_loss(y_true, y_pred):
        # 1. transform all pred outputs
        # y_pred: (batch_size, grid, grid, anchors, (x, y, w, h, obj, ...cls))
        pred_box, pred_obj, pred_class, pred_xywh = yolo_boxes(
            y_pred, anchors, classes)
        pred_xy = pred_xywh[..., 0:2]
        pred_wh = pred_xywh[..., 2:4]

        # 2. transform all true outputs
        # y_true: (batch_size, grid, grid, anchors, (x1, y1, x2, y2, obj, cls))
        true_box, true_obj, true_class_idx = tf.split(
            y_true, (4, 1, 1), axis=-1)
        true_xy = (true_box[..., 0:2] + true_box[..., 2:4]) / 2
        true_wh = true_box[..., 2:4] - true_box[..., 0:2]

        # give higher weights to small boxes
        box_loss_scale = 2 - true_wh[..., 0] * true_wh[..., 1]

        # 3. inverting the pred box equations
        grid_size = tf.shape(y_true)[1]
        grid = tf.meshgrid(tf.range(grid_size), tf.range(grid_size))
        grid = tf.expand_dims(tf.stack(grid, axis=-1), axis=2)
        true_xy = true_xy * tf.cast(grid_size, tf.float32) - \
            tf.cast(grid, tf.float32)
        true_wh = tf.math.log(true_wh / anchors)
        true_wh = tf.where(tf.math.is_inf(true_wh),
                           tf.zeros_like(true_wh), true_wh)

        # 4. calculate all masks
        obj_mask = tf.squeeze(true_obj, -1)
        # ignore false positive when iou is over threshold
        true_box_flat = tf.boolean_mask(true_box, tf.cast(obj_mask, tf.bool))
        best_iou = tf.reduce_max(broadcast_iou(
            pred_box, true_box_flat), axis=-1)
        ignore_mask = tf.cast(best_iou < ignore_thresh, tf.float32)

        # 5. calculate all losses
        xy_loss = obj_mask * box_loss_scale * \
            tf.reduce_sum(tf.square(true_xy - pred_xy), axis=-1)
        wh_loss = obj_mask * box_loss_scale * \
            tf.reduce_sum(tf.square(true_wh - pred_wh), axis=-1)
        obj_loss = binary_crossentropy(true_obj, pred_obj)
        obj_loss = obj_mask * obj_loss + \
            (1 - obj_mask) * ignore_mask * obj_loss
        # TODO: use binary_crossentropy instead
        class_loss = obj_mask * sparse_categorical_crossentropy(
            true_class_idx, pred_class)

        # 6. sum over (batch, gridx, gridy, anchors) => (batch, 1)
        xy_loss = tf.reduce_sum(xy_loss, axis=(1, 2, 3))
        wh_loss = tf.reduce_sum(wh_loss, axis=(1, 2, 3))
        obj_loss = tf.reduce_sum(obj_loss, axis=(1, 2, 3))
        class_loss = tf.reduce_sum(class_loss, axis=(1, 2, 3))

        return xy_loss + wh_loss + obj_loss + class_loss

    return yolo_loss
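
Step 3 of `yolo_loss` ("inverting the pred box equations") converts a ground-truth box back into the raw values the network is expected to regress. The sketch below works this out for one box in plain Python. It assumes that both the box and the anchor are normalized to the image size, and it only evaluates the grid cell that contains the box center, whereas the TensorFlow code computes the offsets for every cell and lets `obj_mask` select the relevant one. `encode_target` and the sample numbers are hypothetical.

```python
import math


def encode_target(box, anchor_wh, grid_size):
    """box: normalized (x1, y1, x2, y2); anchor_wh: normalized (w, h)."""
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2                 # box center
    w, h = x2 - x1, y2 - y1                               # box size
    col, row = int(cx * grid_size), int(cy * grid_size)   # cell holding the center
    tx, ty = cx * grid_size - col, cy * grid_size - row   # offset inside that cell
    tw = math.log(w / anchor_wh[0])                       # log-ratio to the anchor
    th = math.log(h / anchor_wh[1])
    box_loss_scale = 2 - w * h                            # small boxes get a larger weight
    return (col, row), (tx, ty, tw, th), box_loss_scale


# Hypothetical box and anchor on a 13x13 grid
cell, (tx, ty, tw, th), scale = encode_target(
    box=(0.40, 0.30, 0.60, 0.70), anchor_wh=(0.20, 0.20), grid_size=13)
print(cell, round(tx, 3), round(ty, 3), round(tw, 3), round(th, 3), round(scale, 3))
```

A box that matches its anchor exactly yields `tw = th = 0`, which is why the loss replaces the `-inf` produced by empty cells (width and height of zero) with zeros before comparing against the prediction.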

utils.py

from absl import logging
import numpy as np
import tensorflow as tf
import cv2

YOLOV3_LAYER_LIST = [
    'yolo_darknet',
    'yolo_conv_0',
    'yolo_output_0',
    'yolo_conv_1',
    'yolo_output_1',
    'yolo_conv_2',
    'yolo_output_2',
]

YOLOV3_TINY_LAYER_LIST = [
    'yolo_darknet',
    'yolo_conv_0',
    'yolo_output_0',
    'yolo_conv_1',
    'yolo_output_1',
]

def load_darknet_weights(model, weights_file, tiny=False):
    # The context manager closes the file even if loading fails part-way
    with open(weights_file, 'rb') as wf:
        # File header: five int32 values that precede the layer weights
        major, minor, revision, seen, _ = np.fromfile(
            wf, dtype=np.int32, count=5)

        if tiny:
            layers = YOLOV3_TINY_LAYER_LIST
        else:
            layers = YOLOV3_LAYER_LIST

        for layer_name in layers:
            sub_model = model.get_layer(layer_name)
            for i, layer in enumerate(sub_model.layers):
                if not layer.name.startswith('conv2d'):
                    continue
                # A conv layer is followed either by batch norm or uses a bias
                batch_norm = None
                if i + 1 < len(sub_model.layers) and \
                        sub_model.layers[i + 1].name.startswith('batch_norm'):
                    batch_norm = sub_model.layers[i + 1]

                logging.info("{}/{} {}".format(
                    sub_model.name, layer.name, 'bn' if batch_norm else 'bias'))

                filters = layer.filters
                size = layer.kernel_size[0]
                in_dim = layer.input_shape[-1]

                if batch_norm is None:
                    conv_bias = np.fromfile(wf, dtype=np.float32, count=filters)
                else:
                    # darknet [beta, gamma, mean, variance]
                    bn_weights = np.fromfile(
                        wf, dtype=np.float32, count=4 * filters)
                    # tf [gamma, beta, mean, variance]
                    bn_weights = bn_weights.reshape((4, filters))[[1, 0, 2, 3]]

                # darknet shape (out_dim, in_dim, height, width)
                conv_shape = (filters, in_dim, size, size)
                # np.prod replaces the deprecated alias np.product
                conv_weights = np.fromfile(
                    wf, dtype=np.float32, count=np.prod(conv_shape))
                # tf shape (height, width, in_dim, out_dim)
                conv_weights = conv_weights.reshape(
                    conv_shape).transpose([2, 3, 1, 0])

                if batch_norm is None:
                    layer.set_weights([conv_weights, conv_bias])
                else:
                    layer.set_weights([conv_weights])
                    batch_norm.set_weights(bn_weights)

        # Every byte of the file should have been consumed by now
        assert len(wf.read()) == 0, 'failed to read all data'
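
The two reordering steps in `load_darknet_weights` are easy to get wrong, so here is a small standard-library sketch of what they do, using nested lists instead of NumPy arrays. The helpers `parse_header`, `reorder_bn` and `to_hwio` are hypothetical names, the header values are made up, and little-endian byte order is assumed for the example.

```python
import struct


def parse_header(raw):
    """Unpack the five int32 values that precede the layer weights."""
    return struct.unpack('<5i', raw[:20])


def reorder_bn(flat, filters):
    """Darknet order [beta, gamma, mean, variance] -> [gamma, beta, mean, variance]."""
    rows = [flat[k * filters:(k + 1) * filters] for k in range(4)]
    return [rows[1], rows[0], rows[2], rows[3]]


def to_hwio(flat, out_dim, in_dim, size):
    """Re-index a flat (out, in, h, w) kernel as nested lists in (h, w, in, out) order."""
    def at(o, i, h, w):
        return flat[((o * in_dim + i) * size + h) * size + w]
    return [[[[at(o, i, h, w) for o in range(out_dim)]
              for i in range(in_dim)]
             for w in range(size)]
            for h in range(size)]


# Made-up header: major, minor, revision, seen, unused
header = parse_header(struct.pack('<5i', 0, 2, 0, 1000, 0))

# Two filters: beta, gamma, mean, variance stored back to back
bn = reorder_bn([0.1, 0.2, 1.0, 1.1, 0.5, 0.6, 2.0, 2.1], filters=2)

# A kernel with 2 output channels, 1 input channel and size 2x2, filled with 0..7
kernel = to_hwio(list(range(8)), out_dim=2, in_dim=1, size=2)

print(header)
print(bn)
print(kernel[0][1][0])  # values of both output channels at h=0, w=1
```

The `transpose([2, 3, 1, 0])` call in the loader performs the same re-indexing as `to_hwio`, only on a NumPy array.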

def broadcast_iou(box_1, box_2):
    # box_1: (..., (x1, y1, x2, y2))
    # box_2: (N, (x1, y1, x2, y2))

    # broadcast boxes
    box_1 = tf.expand_dims(box_1, -2)
    box_2 = tf.expand_dims(box_2, 0)
    # new_shape: (..., N, (x1, y1, x2, y2))
    new_shape = tf.broadcast_dynamic_shape(tf.shape(box_1), tf.shape(box_2))
    box_1 = tf.broadcast_to(box_1, new_shape)
    box_2 = tf.broadcast_to(box_2, new_shape)

    int_w = tf.maximum(tf.minimum(box_1[..., 2], box_2[..., 2]) -
                       tf.maximum(box_1[..., 0], box_2[..., 0]), 0)
    int_h = tf.maximum(tf.minimum(box_1[..., 3], box_2[..., 3]) -
                       tf.maximum(box_1[..., 1], box_2[..., 1]), 0)
    int_area = int_w * int_h
    box_1_area = (box_1[..., 2] - box_1[..., 0]) * \
        (box_1[..., 3] - box_1[..., 1])
    box_2_area = (box_2[..., 2] - box_2[..., 0]) * \
        (box_2[..., 3] - box_2[..., 1])
    return int_area / (box_1_area + box_2_area - int_area)
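
`broadcast_iou` compares every predicted box with every ground-truth box, and `yolo_loss` then keeps the best overlap per prediction to build `ignore_mask`. The plain-Python sketch below reproduces that flow on a handful of boxes: a pairwise IoU table, a maximum over the ground-truth axis, and a threshold test. `pairwise_iou` and the sample boxes are hypothetical, and the threshold of 0.5 mirrors the default `ignore_thresh`.

```python
def pairwise_iou(boxes_1, boxes_2):
    """Return a len(boxes_1) x len(boxes_2) table of IoU values."""
    table = []
    for a in boxes_1:
        row = []
        for b in boxes_2:
            int_w = max(min(a[2], b[2]) - max(a[0], b[0]), 0)
            int_h = max(min(a[3], b[3]) - max(a[1], b[1]), 0)
            int_area = int_w * int_h
            area_a = (a[2] - a[0]) * (a[3] - a[1])
            area_b = (b[2] - b[0]) * (b[3] - b[1])
            row.append(int_area / (area_a + area_b - int_area))
        table.append(row)
    return table


# Hypothetical predictions and ground-truth boxes, (x1, y1, x2, y2)
pred_boxes = [(0, 0, 2, 2), (1, 1, 3, 3), (5, 5, 6, 6)]
true_boxes = [(0, 0, 2, 2), (2, 2, 4, 4)]

iou_table = pairwise_iou(pred_boxes, true_boxes)
best_iou = [max(row) for row in iou_table]                 # like reduce_max(axis=-1)
ignore_mask = [1.0 if v < 0.5 else 0.0 for v in best_iou]  # like best_iou < ignore_thresh

print(best_iou)
print(ignore_mask)
```

A mask value of 1 means the prediction overlaps no ground truth well enough, so it still contributes to the no-object part of `obj_loss`; a value of 0 removes it from that term.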