Article | CSDN Editorial Department

Listing | CSDN (ID: CSDNnews)

This time last year, ChatGPT was launched and took the world by storm, with many saying it meant the iPhone moment of AI was coming. CSDN founder Jiang Tao once predicted: "The next step is the application moment, and a new application era will come... Large models will promote the birth of more AI application programmers."

In the keynote on the third day of the 2023 Amazon Cloud Technology re:Invent global conference, Dr. Swami Sivasubramanian, Vice President of Data and Artificial Intelligence at Amazon Cloud Technology, talked about the latest capabilities of Amazon Cloud Technology's generative AI, the data strategy for the generative AI era, and the use of generative AI applications to improve productivity. From the speech, we could clearly feel that Amazon Cloud Technology has lowered the threshold for generative AI application development, and that the time for new generative AI applications is coming!

Dr. Swami said in his opening remarks: "Today, humans and technology are showing an unprecedentedly close relationship, and generative AI is improving human productivity in many unexpected ways. This relationship allows humans and artificial intelligence to jointly create new innovations full of endless possibilities."

Building on this, Dr. Swami introduced a series of products from Amazon Cloud Technology whose key purpose is to help all developers and enterprises build generative AI applications quickly, safely, and at scale. Moreover, Dr. Swami believes that data is the core advantage in building differentiated generative AI applications, and Amazon Cloud Technology supports enterprises on the data dimension with its generative AI capabilities.

Following Dr. Swami's speech, Song Hongtao (Artificial Intelligence Product Marketing Manager, Amazon Cloud Technology), Troy Cui (Data Analysis and Artificial Intelligence Product Director, Amazon Cloud Technology), Wang Xiaoye (Data Product Technical Director, Amazon Cloud Technology), and Yuan Gungun (Editor-in-Chief of Artificial Intelligence Technology, CSDN) carried out an in-depth interpretation and discussion at the re:Invent global conference to help all developers and enterprises embrace the era of generative AI.

Amazon Cloud Technology’s layout in the field of generative AI

Song Hongtao:Today Dr. Swami explained, from a very unique perspective, how to use data to build your own generative AI applications, and how those applications, once built, can change our lifestyle and improve our work efficiency. At yesterday's conference, Adam Selipsky, CEO of Amazon Cloud Technology, also shared the three-layer architecture of Amazon Cloud Technology's generative AI technology stack. What exactly does this three-layer architecture look like? And what are the highlights of the Amazon Bedrock upgrade?

Wang Xiaoye:Amazon Cloud Technology's involvement in the field of generative AI is in line with our previous practices in cloud computing and other fields. Our goal is to make an extremely complex technology easier to use, lower the barriers to use, and make it easy for anyone to apply.

Based on this premise, we proposed the concept of a three-tier architecture. Such a hierarchical structure helps organize the technology more clearly and helps customers think about problems end to end.

The lowest level is infrastructure. On the one hand, this layer is inseparable from the construction of foundation models. The typical feature of this kind of model is that the parameter scale is relatively large. From training through inference and performance tuning, a model may take several months to complete, and the cost runs into the millions of dollars. Amazon Cloud Technology hopes to provide the best infrastructure during the training and inference stages of foundation models. For example, Amazon SageMaker is the core product for model training. We have also placed it in the infrastructure layer to help customers maximize model performance in both training and inference.

On the other hand, chips also provide strong support. At this conference, we have deepened our cooperation with NVIDIA, and the industry's leading GPUs are available on Amazon Cloud Technology. At the same time, Amazon Cloud Technology has updated its self-developed chips, Trainium and Inferentia, to the second generation.

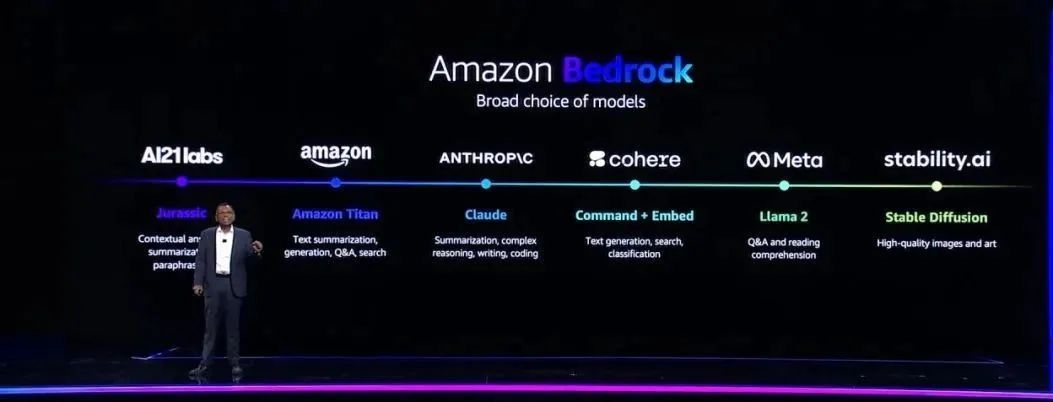

The middle layer is the tools layer. This layer allows customers to better utilize model capabilities. This time, Amazon Bedrock has undergone a new upgrade. On this platform, users can choose the best models and use them in the simplest way. We will continue to expand the functionality of the platform based on users' actual scenarios, such as the Amazon CodeWhisperer code generation assistant, to meet more needs.

The top layer is the application layer. At this conference, Adam released the most important product, Amazon Q, hoping that a generative AI assistant can help users answer questions, like keeping an expert who understands the business always online. The application layer also covers out-of-the-box generative AI applications, giving users and business personnel who lack development skills easy access to services that improve their work efficiency.

Troy Cui:The significance of Amazon Bedrock is that Amazon Cloud Technology selects the best models on the market and provides them to us, so that we no longer need to go through tedious integration steps. These models are now directly accessible through an API.

One of the most immediate improvements to Amazon Bedrock this time is a host of model updates, such as added support for Claude 2.1 and Llama 2 70B. Among them, Claude 2.1 is very powerful at complex summarization and reasoning and supports a 200K-token context window. We have also further strengthened stability and provide strong scalability.

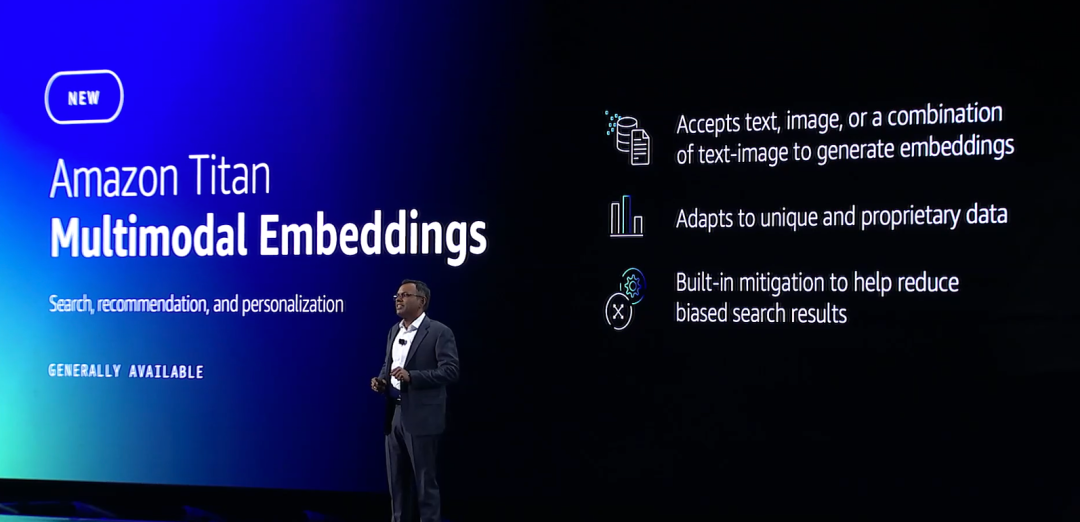

Song Hongtao:Amazon Cloud Technology recently released a new product called Amazon Titan Multimodal Embeddings. Multimodality represents a very important trend in the field of generative AI models. What exactly does Multimodal Embeddings mean for our customers and for foundation models? Embedding is a term that is often mentioned in the technology field, so what exactly is it used for?

Wang Xiaoye:To put it simply, embedding can be regarded as digitization, that is, converting information into numbers. When chat language models became popular, everyone started using tools like vector databases, which store information in numeric form, because only after digitization is it easy to calculate similarity through mathematical methods. So the core of embedding is to convert concepts into numbers to make it easier to find similarities.

What we are releasing this time is multimodal, which may sound a bit abstract. Multimodality covers pictures, sound, video, and other forms, not just text. One of the most useful scenarios is image search on e-commerce websites. When we see a product we like, such as a mobile phone case, and want to find out which e-commerce website sells it, we can take a photo and search. At this point, we rely on the middle layer to convert the image into numbers, which improves the accuracy of the search.

Finding similarities is not particularly difficult at a mathematical level, so the key lies in whether the model can effectively translate the perceived similarity between two pictures, or between similar elements, into numbers. In this step, the performance of the model is crucial, as it needs to integrate multiple dimensions, such as color and scene, so that two similar items end up close to each other after conversion into numbers.

The model we released this time pays more attention to the performance at this level. In addition, it also considers pictures and text descriptions together to more comprehensively present the characteristics of the item.
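
To make the idea of "finding similarities through numbers" concrete, here is a minimal sketch in Python. The four-dimensional vectors and the catalog entries are invented for illustration; in practice an embedding model would produce the vectors (with far more dimensions), and nothing here represents the Amazon Titan Multimodal Embeddings API. Cosine similarity is used as one common way to compare vectors, which is an assumption rather than something stated in the discussion.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings for three catalog items (made-up numbers).
catalog = {
    "red phone case": [0.9, 0.1, 0.8, 0.0],
    "blue phone case": [0.1, 0.9, 0.8, 0.0],
    "red running shoes": [0.9, 0.1, 0.0, 0.8],
}


def most_similar(query_vector, items):
    """Return the catalog key whose embedding is closest to the query vector."""
    return max(items, key=lambda name: cosine_similarity(query_vector, items[name]))


# Stand-in for the embedding of a photo taken by the shopper.
photo_embedding = [0.85, 0.15, 0.75, 0.05]
best = most_similar(photo_embedding, catalog)
print(best)
```

The search itself is a few lines of arithmetic; the quality of the result depends almost entirely on how well the model places similar items close together.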

How to protect privacy and ensure security while accelerating the pace of generative AI?

Song Hongtao:I noticed that Dr. Swami announced updates to several large models. One of them is Amazon Titan Image Generator, a text-to-image model. Dr. Swami mentioned that, as part of responsible AI, features such as invisible watermarks can better protect the copyright of images generated by large models. I know that in the field of document processing, copyright and privacy issues are a very big pain point for many customers.

Yuan Gungun:My job is content creation, and I often use large models to generate text and pictures. OpenAI has released a Copyright Shield program for API developers and enterprise customers: if they face legal disputes over copyright because of generated results, OpenAI will provide them with protection. However, I believe that large models will be applied to all kinds of fields and scenarios in the future, and how long such commitments will hold is uncertain. Image watermarking, by contrast, is built on relatively mature digital watermarking technology, which can protect the security of content generated by large models in many respects.

When you find illegal content on the Internet and suspect that it was generated by a large model, you can use the image watermark to trace the large model that generated it. Another way is to use our large model to generate pictures with digital watermarks, which can protect the author's copyright.

Wang Xiaoye:First of all, Dr. Swami mentioned three current challenges regarding image watermarking technology:

First, the watermark must be invisible, otherwise it will directly affect the image.

Second, adding a watermark consumes some extra computation during inference, but it must not add noticeable latency.

Third, most people edit the image after generating it, and it is hard to ensure that the watermark is still there after the image is edited.

From Dr. Swami's explanation, we can see that some technologies we think of as mature are in fact not easy, are genuinely important, and require special handling. You can also see the difference between this image generator model and other open source models. If companies really want to use image generation, this model may be worth trying first.

How to choose a large model when a hundred flowers are blooming?

Song Hongtao:When faced with so many foundation models, how should customers choose the model that best suits their business scenarios? Does Amazon Cloud Technology already have relevant products or tools to help customers make more informed choices?

Troy Cui:There are several factors to consider when selecting a large model in a production environment.

First of all, the accuracy of the model is particularly important in answering questions.

Secondly, in a production environment, you must pay attention to latency.

Finally, if your product will be used by a large number of users, you also need to consider the cost of operating at scale.

As a large-model ecosystem platform for production use and enterprise customers, Amazon Cloud Technology has released a model evaluation and selection capability, aiming to help enterprise customers make the best choice for their production environment. This tool lets you compare models side by side while choosing the best fit. The comparison takes all three key factors into consideration to help you strike a balance between accuracy, latency, and cost. It gives businesses an easy and convenient path to value and continuous delivery.
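
As a rough illustration of balancing accuracy, latency, and cost, the sketch below ranks candidate models with a weighted score. Everything in it is an assumption made for the example: the model names, the measurements, the weights, and the scoring formula are hypothetical, and it does not reproduce how the Amazon Bedrock evaluation feature works.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    accuracy: float     # fraction of evaluation questions answered correctly
    latency_ms: float   # typical response time in milliseconds
    cost_per_1k: float  # cost per 1,000 requests, in dollars


def score(c, max_latency_ms, max_cost, weights=(0.6, 0.2, 0.2)):
    """Weighted score in [0, 1]; lower latency and lower cost score higher."""
    w_acc, w_lat, w_cost = weights
    latency_score = 1 - c.latency_ms / max_latency_ms
    cost_score = 1 - c.cost_per_1k / max_cost
    return w_acc * c.accuracy + w_lat * latency_score + w_cost * cost_score


# Hypothetical measurements from an evaluation run.
candidates = [
    Candidate("model-a", accuracy=0.92, latency_ms=1800, cost_per_1k=12.0),
    Candidate("model-b", accuracy=0.88, latency_ms=600, cost_per_1k=3.0),
    Candidate("model-c", accuracy=0.70, latency_ms=300, cost_per_1k=1.0),
]

max_latency = max(c.latency_ms for c in candidates)
max_cost = max(c.cost_per_1k for c in candidates)

ranked = sorted(
    candidates,
    key=lambda c: score(c, max_latency, max_cost),
    reverse=True,
)
for c in ranked:
    print(c.name, round(score(c, max_latency, max_cost), 3))
```

With these made-up numbers the most accurate model is not the overall winner, because it is also the slowest and most expensive. Changing the weights to favor accuracy alone reverses the ranking, which is the kind of trade-off a side-by-side comparison is meant to surface.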

Yuan Gungun:In terms of model selection, we just mentioned that Amazon Bedrock now supports Claude 2.1 and Llama 2 70B. Before this, everyone felt that GPT was the large model with the leading edge, and that you could simply choose GPT.

But in fact, as time goes by, other models have greatly improved, and the gap with GPT has gradually narrowed. For example, Claude 2.1's support for long text and for file uploads fits scenarios that need them. Llama 2 is a large open source foundation model with a very complete ecosystem, including many open source tools and components that can improve model results. So GPT is not the only choice.

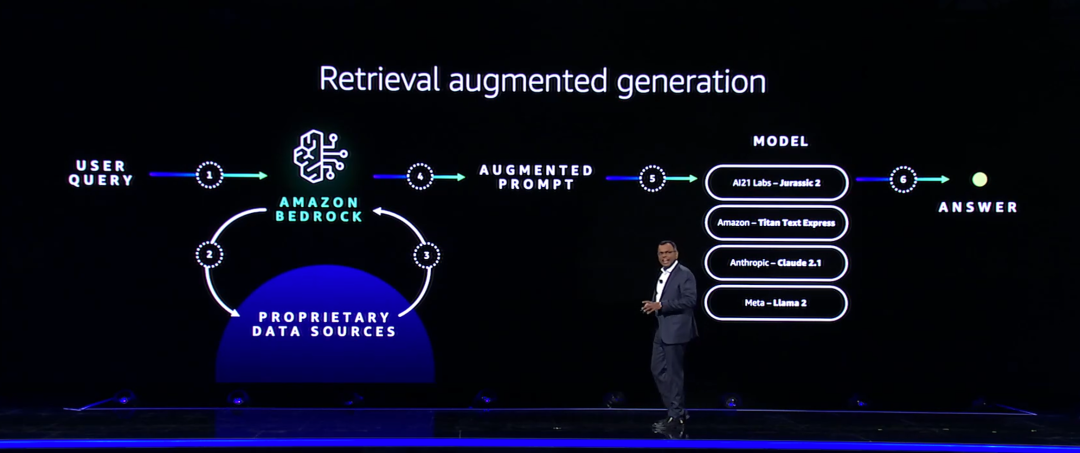

Song Hongtao:In the past six months, we can see that a technology called RAG (Retrieval Augmented Generation) has become the mainstream choice for enterprise users building generative AI applications. Troy, can you introduce the principles of RAG to everyone in a simple but in-depth way?

Troy Cui:The basic capabilities of large language models are understanding and expression, that is, fully understanding what you are saying, your intentions, and so on. On top of this, RAG gives large models the ability to remember. When the large model encounters a question-answering or search scenario, it first understands what the other party means, then retrieves from memory, and finally outputs the result. To put it simply, RAG combines vector databases with large language models to provide this capability.

There are actually many components involved in using RAG. First, we need to use the Embedding model to convert existing content knowledge, including multi-modal content such as text and pictures, into vectors, and then store these vectorized data in a vector database. When you actually face the business process, you still need to deal with many aspects, such as performance optimization, various adjustments, etc., and finally build it into a complete package for external output.

Therefore, although the concept of RAG sounds simple, it actually takes a lot of engineering work to turn it into a service that can support the business.
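
The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a toy under stated assumptions: the "embedding" is just a bag-of-words count, the vector store is an in-memory list, the two knowledge snippets are invented, and the final call to a large language model is left out, so the sketch stops at the assembled prompt.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """Minimal in-memory stand-in for a vector database."""

    def __init__(self):
        self.rows = []  # list of (vector, original text)

    def add(self, text):
        self.rows.append((embed(text), text))

    def search(self, query, k=1):
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def build_prompt(question, store, k=1):
    """Retrieve the k most relevant snippets and place them ahead of the question."""
    context = "\n".join(store.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


store = VectorStore()
store.add("Shoes can be exchanged within 30 days of purchase.")  # invented policy
store.add("Standard shipping takes 3 to 5 business days.")       # invented policy

prompt = build_prompt("Can I exchange my shoes?", store)
print(prompt)
```

Even in this toy form, the engineering questions show up quickly: how to split documents, which embedding to use, how many snippets to retrieve, and how to keep the index in sync with the source data.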

Song Hongtao:Today Dr. Swami announced the availability of a service called Knowledge Bases for Amazon Bedrock. Can this service help customers or application developers, to a certain extent, solve the challenges or difficulties you mentioned above?

Troy Cui:This year, Amazon Cloud Technology has made a lot of releases, many of which are dedicated to helping enterprise customers integrate into specific service scenarios. For example, in a specific scenario, we will provide a relatively practical out-of-the-box tool so that customers can directly enable and go online in this scenario - and Knowledge Bases for Amazon Bedrock provides such a capability.

As we mentioned just now, the knowledge base targets the retrieval scenario. Customers may have already put their data in Amazon S3 (Simple Storage Service). They can convert it directly into vectors through Amazon Bedrock and select a vector database for the embeddings, including OpenSearch, Pinecone, and Redis, with more databases such as Aurora and MongoDB to follow. The vectors are stored in the vector database, and the retrieved content is sent to the model to form a response.

Therefore, Knowledge Bases for Amazon Bedrock is equivalent to having the entire end-to-end work packaged, and enterprises can directly use it for business empowerment.

Build generative AI applications more quickly in a low-code, no-code way

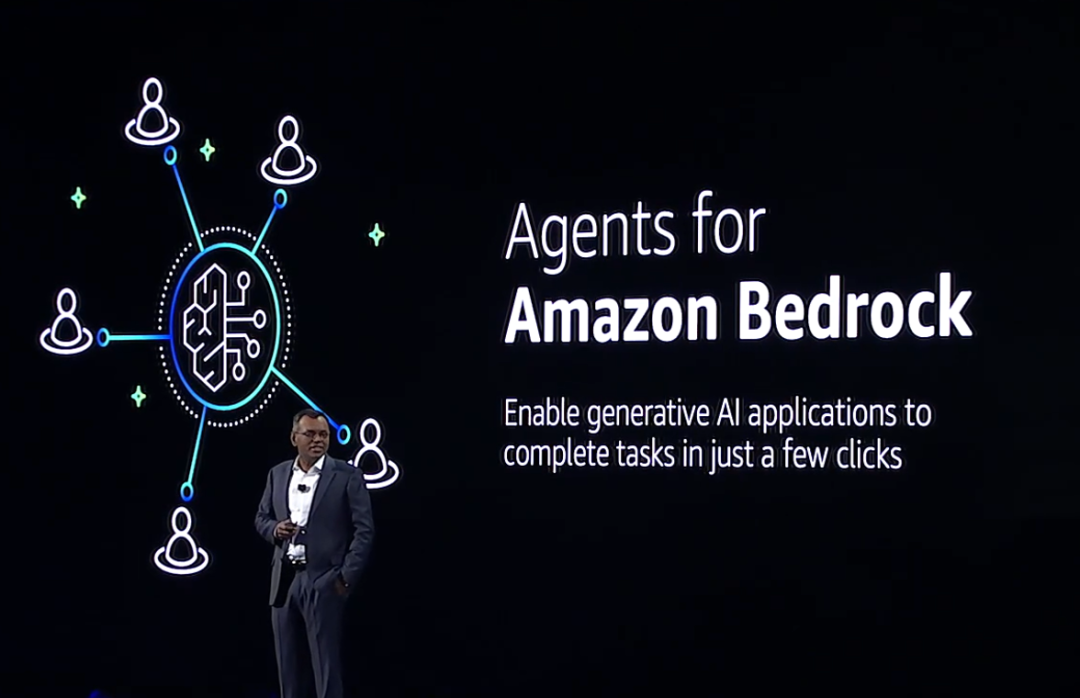

Song Hongtao:I saw that Dr. Swami mentioned a tool called "Agents for Amazon Bedrock" today. I would like to ask Xiaoye to give a brief analysis of Agents for Amazon Bedrock. How will it help developers build generative AI applications in a low-code or even no-code way?

Wang Xiaoye:The implementation logic of Agents for Amazon Bedrock is to help you with task planning first. Dr. Swami often uses exchanging shoes for a different color as an example. When a customer wants to change the color of their shoes, task planning is required: first, the model verifies the purchase record; next, it checks the exchange policy to determine whether the shoes can be exchanged or returned; then it executes the return task, moves on to the logistics task, and finally settles the price difference with the customer.

A microservice is required behind each step, and a serverless computing service like Lambda is the best fit for running such single-purpose functions. The role of Agents for Amazon Bedrock is to string these workflows together and automatically orchestrate the tasks for you: as long as you tell it what each function does, it can basically reason about the steps and carry them out.
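
The shoe-exchange flow can be sketched as a chain of small single-purpose functions run in order. This is only an illustration under assumptions: the plan is hard-coded here, whereas the point of an agent is that the model derives it from the function descriptions; the order data, the 30-day policy, and the prices are invented; and plain Python functions stand in for what would be separate serverless functions.

```python
# Invented sample data for the illustration.
KNOWN_ORDERS = {"A100": {"item": "shoes", "color": "white", "price": 80.0}}
PRICES = {"white": 80.0, "black": 90.0}


def verify_purchase(state):
    """Step 1: look up the purchase record."""
    state["order"] = KNOWN_ORDERS.get(state["order_id"])
    if state["order"] is None:
        state["stop"] = "no purchase record"
    return state


def check_exchange_policy(state):
    """Step 2: apply a hypothetical 30-day exchange window."""
    if state["days_since_purchase"] > 30:
        state["stop"] = "outside exchange window"
    return state


def process_return(state):
    """Step 3: create a return for the original item."""
    state["return_label"] = f"RET-{state['order_id']}"
    return state


def arrange_shipping(state):
    """Step 4: schedule shipment of the replacement."""
    state["shipment"] = f"{state['order']['item']} in {state['new_color']}"
    return state


def settle_price_difference(state):
    """Step 5: compute what the customer owes (negative means a refund)."""
    state["amount_due"] = PRICES[state["new_color"]] - state["order"]["price"]
    return state


PLAN = [
    verify_purchase,
    check_exchange_policy,
    process_return,
    arrange_shipping,
    settle_price_difference,
]


def run_plan(plan, request):
    """Run each step in order, stopping early if a step sets state['stop']."""
    state = dict(request, log=[])
    for step in plan:
        state = step(state)
        state["log"].append(step.__name__)
        if "stop" in state:
            break
    return state


result = run_plan(PLAN, {"order_id": "A100", "days_since_purchase": 5, "new_color": "black"})
print(result["log"], result["amount_due"])
```

Keeping each step as an independent function with a clear description is what makes it possible for an orchestrator to sequence them without bespoke glue code for every workflow.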

Song Hongtao:How to get through the "last three kilometers" of generative AI applications from POC (Proof of Concept) to implementation is currently a headache for many companies. Based on this, Dr. Swami shared a custom model program for Anthropic Claude. Can Mr. Xiaoye introduce it?

Wang Xiaoye:First of all, we strive to lower the threshold for customer development, such as making models more accessible through products, using agents securely to help complete tasks, and making it easier to build generative AI applications. Whether customers judge a product to be effective depends on the final business output, and getting there requires constant iteration.

To this end, we launched this global program and formed an organizational unit, the Generative AI Innovation Center. In essence, the program provides human support: a group of experts helps you tune the model, including changing prompts, doing RAG, and other complex engineering work, so that you can make good use of Anthropic Claude and build customized models for your enterprise business. In China, this team of experts is called Innovation Lab.

Lower the operation and maintenance threshold for algorithm engineers

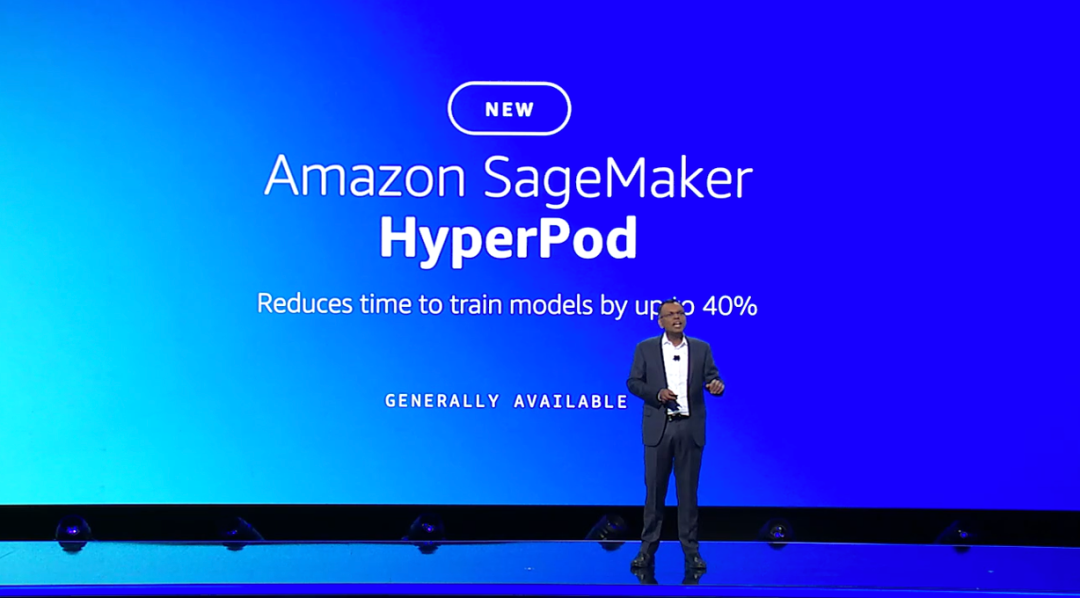

Song Hongtao:For most model providers, Amazon SageMaker HyperPod is a very popular product. In terms of training, deployment, and inference optimization, it can effectively improve development efficiency and reduce complexity. So what do you think about the Amazon SageMaker HyperPod released today?

Yuan Gungun:Dr. Swami mentioned that Amazon SageMaker HyperPod is very well suited to large foundation models and reduces the operation and maintenance burden during the training phase.

Training a large foundation model may take ten days to half a month or even longer, and the cost of computing power is high. There are many complex operation and maintenance problems that algorithm engineers may not be able to solve. For example, after a server crashes, locating the problem requires strong engineering experience and skills: you need to quickly identify whether it is a data problem, an algorithm problem, or a cluster problem, and then fix it.

Amazon SageMaker HyperPod provides a training cluster with highly automated operation and maintenance. It can quickly locate a faulty node, automatically replace it, and then resume the training job from the last checkpoint. I believe that Amazon SageMaker HyperPod can relieve many algorithm engineers of the operation and maintenance pressure during the model training phase.

Amazon SageMaker HyperPod comes preinstalled with Amazon SageMaker's training libraries. So you only need to select the training environment you need, customize the training libraries and tuning tools, then create a new instance and start the training job directly.

Wang Xiaoye:This is indeed very important. There is already a shortage of AI talent in the market, and many of these people are busy training models. It is hard for them to also take on operations work such as maintaining clusters, detecting machine failures, and troubleshooting problems. Often they forget to save a checkpoint, and when they restart they are back to where they were two or three days ago, which greatly delays development progress. The emergence of Amazon SageMaker HyperPod can free data scientists from underlying operation and maintenance issues so they can focus more on work such as model hyperparameter tuning.
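
The value of periodic checkpoints can be shown with a small, self-contained simulation. It is a sketch under assumptions: the "training step" is a placeholder calculation, the failure is injected on purpose, and the checkpoint is a small JSON file. It illustrates the general save-and-resume pattern only and says nothing about how Amazon SageMaker HyperPod implements it.

```python
import json
import os
import tempfile


def save_checkpoint(path, step, loss):
    """Write to a temp file, then rename, so a crash never leaves a half-written file."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"step": step, "loss": loss}, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return the last saved state, or step 0 if no checkpoint exists yet."""
    if not os.path.exists(path):
        return {"step": 0, "loss": None}
    with open(path) as f:
        return json.load(f)


def train(path, total_steps, checkpoint_every, fail_at=None):
    """Run from the last checkpoint to total_steps; return the step resumed from."""
    start = load_checkpoint(path)["step"]
    for step in range(start + 1, total_steps + 1):
        if step == fail_at:
            raise RuntimeError(f"simulated node failure at step {step}")
        loss = 1.0 / step  # placeholder for a real training step
        if step % checkpoint_every == 0:
            save_checkpoint(path, step, loss)
    return start


with tempfile.TemporaryDirectory() as workdir:
    ckpt = os.path.join(workdir, "ckpt.json")
    try:
        train(ckpt, total_steps=100, checkpoint_every=10, fail_at=57)
    except RuntimeError as err:
        print("crashed:", err)
    # Restarting picks up from the most recent checkpoint instead of step 0.
    resumed_from = train(ckpt, total_steps=100, checkpoint_every=10)
    final_step = load_checkpoint(ckpt)["step"]

print(resumed_from, final_step)
```

In this run the failure costs only the steps since the last checkpoint. Without any checkpoint, the restart would begin again from step 0, which is the "back to two or three days ago" situation described above.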

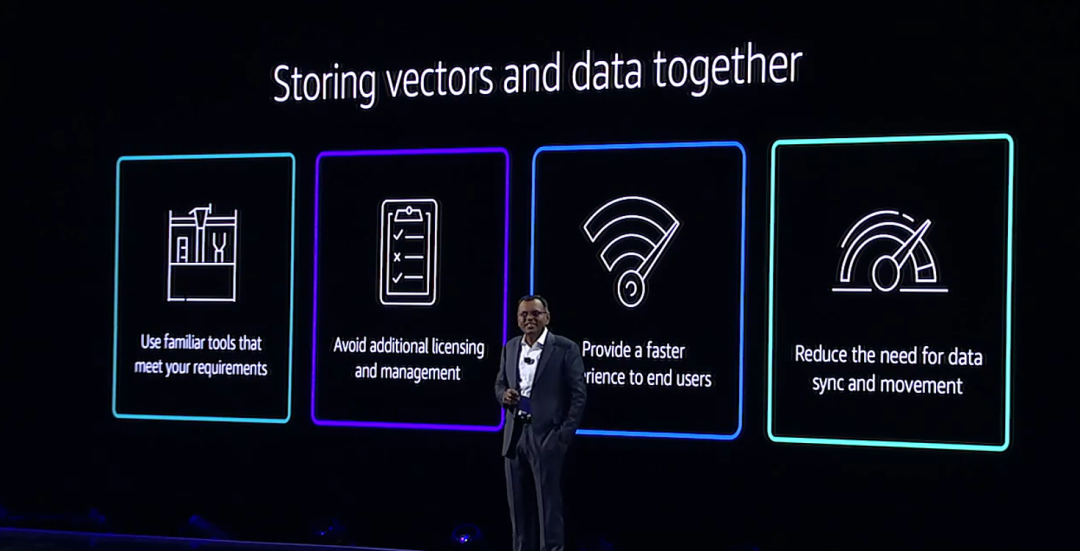

Song Hongtao:From an overall product perspective, the vector database is a very important component. Troy, could you share how Amazon Cloud Technology supports vector capabilities on the database product side?

Troy Cui:I think vectorization means turning all of a customer's data into vectors so that it can better serve the business in the future. This is a major trend right now. In practice, many customers are curious: why does Amazon Cloud Technology not simply make a single standalone vector database product, but instead release vector capabilities in so many places? Our view is that customers should have the right to choose for each kind of data, storing it in the most suitable storage environment, and that we should give that environment vectorization capabilities. In this way, when the business needs vector support, customers do not need to move data from where it is stored into a separate vector database. Based on this idea, we want to assure customers that no matter where their data is stored, they can use vectorization at any time. At this conference, we released vector storage capabilities for several databases. Currently, Amazon Cloud Technology has seven database products with vector storage capabilities, giving customers many choices.

Generative AI is revolutionizing the way developers work

Song Hongtao: Previously, Amazon Cloud Technology launched a product called Amazon CodeWhisperer, which aims to help developers improve the efficiency of writing code from the perspective of code development. Now that Amazon Q has been introduced, combined with Amazon CodeWhisperer, can it further improve developers’ experience and efficiency in code development?

Yuan Gungun:CSDN conducted a community survey a while ago. Thousands of developers filled out questionnaires about how they use code generation tools. The results show that nearly 90% of developers have tried various code generation tools, and nearly 40% now use them every day. We also surveyed which tools people choose, and Amazon CodeWhisperer is far ahead there as well.

What are the application scenarios of code generation, or what content do developers use code generation tools to generate? Common uses are unit testing, code annotation, learning a new language, or writing products across technology stacks.

There is another type of situation: when a programming language changes, especially when a new version introduces major changes, we use code generation tools to understand those changes.

Therefore, developers have very complex usage scenarios and complex usage environments. Some use code generation tools through plug-ins in IDEs, some use them in conversational windows, and some use them through local deployment. Amazon Q can solve this problem in a more fine-grained manner for different user needs.

Wang Xiaoye:When we talk about code scenarios, we usually do not go into detail and simply call everything code generation, but in fact this field covers many different functions. This time, Amazon Q does not provide every possible code generation function. Its core is to start from part of your original code, use a large model to understand what that code does, and on this basis generate new functions and enhance certain code segments.

We would like to provide a new capability in this specific area. In addition, code translation and conversion is also our focus. Of course, there will be more application scenarios in the future, but for now we recommend that you focus on these two core capabilities first to better understand their basic principles.

Amazon Q is positioned as an enterprise-level AI assistant, providing an intelligent assistant for people working on the business side. Users can connect it directly to their business's database, such as one that contains employee salaries, and then talk to the assistant. Amazon Q was designed with data protection and permissions in mind. When you first connect, it integrates with your enterprise's single sign-on (SSO) and learns what you have access to from the role permissions set in SSO. For example, when a regular employee rather than a manager asks about the salaries of other members of the team, it enforces this permission and does not answer the question. In this way, it fully takes enterprise requirements into account.
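
The permission behavior described here amounts to checking the asker's role before any data is looked up. The sketch below shows that pattern with invented roles, scopes, names, and salaries; it is an illustration of role-based filtering in general, not Amazon Q's implementation or its SSO integration.

```python
# Hypothetical role-to-scope mapping, as it might be derived from SSO roles.
ROLE_SCOPES = {
    "manager": {"salary:self", "salary:team"},
    "employee": {"salary:self"},
}

# Invented sample data.
SALARIES = {"alice": 120000, "bob": 95000}

REFUSAL = "Sorry, you do not have permission to view that."


def answer_salary_question(user, role, subject):
    """Answer only if the asker's role grants the scope the question needs."""
    needed = "salary:self" if subject == user else "salary:team"
    if needed not in ROLE_SCOPES.get(role, set()):
        return REFUSAL
    return f"{subject}'s salary is {SALARIES[subject]}."


print(answer_salary_question("bob", "employee", "alice"))   # refused
print(answer_salary_question("bob", "employee", "bob"))     # allowed: own record
print(answer_salary_question("alice", "manager", "bob"))    # allowed: team record
```

The check happens before the data is read, so restricted content never reaches the model's context in the first place.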

In the future, Amazon Q will be developed for more scenarios. Currently, we have launched it on a platform that may be popular with some customers: Amazon Connect, our call center service. At the same time, in the field of business intelligence (BI), we have also introduced an assistant.

Song Hongtao: Helping developers improve personal efficiency through generative AI applications, and continuing to make generative AI accessible to everyone, has always been Amazon Cloud Technology's vision. Based on this, in addition to the above products, Dr. Swami also presented a product with a name similar to Amazon Bedrock in his speech: PartyRock. So, what exactly does this product do?

To use, visit https://partyrock.aws/

Wang Xiaoye:The bottom layer of PartyRock is developed based on Amazon Bedrock. Simply put, it is equivalent to a "playground" that allows developers to feel the huge potential of the capabilities of generative AI+Agent, and allows many developers to use their imagination to innovate and create.

Troy Cui:I think it can be regarded as an experience area. You don't even need an Amazon Cloud Technology account to try it out online on PartyRock.

Yuan Gungun:I highly recommend trying PartyRock, which is a one-stop AI application generation tool based on Amazon Bedrock. You don't even need to register an Amazon Cloud Technology account: you can use Amazon Cloud Technology's cloud resources for free, easily generate impressive web and app applications, and share them with others. What's more interesting is that it already provides some demonstration applications online, some of which are extremely powerful. Under the hood it uses the advanced Claude 2 model, which is a pleasant surprise.

Advice for developers

Song Hongtao:Finally, please summarize your experience of watching Dr. Swami’s speech in one sentence, as well as your suggestions for contemporary developers.

Yuan Gungun:I wrote a sentence: "Cloud service overlord, deeply embracing AI; enterprises and developers, sharing technology dividends." This is a call to our developers. We hope that everyone can seize the opportunity of this wave of artificial intelligence and large model technology to develop more innovative applications on top of the solid cloud services of Amazon Cloud Technology.

Wang Xiaoye:I would like to share the goals we want to achieve based on the positioning of the Amazon Q product, as well as some key dimensions and factors to prioritize when iterating the product. The basis of everything is the needs of the enterprise.

Paying attention to the needs of the enterprise means asking whether the enterprise's data is taken seriously, whether data privacy is protected, and whether business barriers can be broken through personalized data customization. On the premise of meeting the needs of enterprises, we need to responsibly ensure that products provide substantial help to enterprises.

Troy Cui:From a data perspective, Amazon Cloud Technology will further solve privacy and security issues, so that customers do not have to worry about this part at all and can focus more on mining the value of data instead of on time-consuming, repetitive work.

Song Hongtao:Thanks to our three guests for their wonderful summaries. We are very lucky to be living through the explosion of generative AI, and we look forward to generative AI bringing more convenience to our work and life.