Existe un concepto muy importante en la reconstrucción tridimensional en la coincidencia estéreo: el volumen de costos. Los más recientes incluyen transformadores de autoatención y atención cruzada.

Script de prueba para generar nube de puntos:

import sys

sys.path.append('core')

DEVICE = 'cuda'

import os

os.environ['CUDA_VISIBLE_DEVICES'] = '0'

import argparse

import glob

import numpy as np

import torch

from tqdm import tqdm

from pathlib import Path

from igev_stereo import IGEVStereo

from utils.utils import InputPadder

from PIL import Image

from matplotlib import pyplot as plt

import os

import cv2

import time

def load_image(imfile):

img = np.array(Image.open(imfile)).astype(np.uint8)

img = torch.from_numpy(img).permute(2, 0, 1).float()

return img[None].to(DEVICE)

def load_image1(img):

# img = np.array(Image.open(imfile)).astype(np.uint8)

img = torch.from_numpy(img).permute(2, 0, 1).float()

return img[None].to(DEVICE)

def disp2pointcloud(disp, K, disline):

width = disp.shape[1]

height = disp.shape[0]

f = open('pointcloud.txt','w')

print(K[0, 2])

for i in range(width):

for j in range(height):

z = K[0, 0]/disp[j, i]*disline

x = (i-K[0, 2])/K[0, 0]*z

y = (j-K[1, 2])/K[1, 1]*z

f.write('%f %f %f\n' %(x, y, z))

f.close()

def demo(args):

model = torch.nn.DataParallel(IGEVStereo(args), device_ids=[0])

model.load_state_dict(torch.load(args.restore_ckpt))

model = model.module

model.to(DEVICE)

model.eval()

output_directory = Path(args.output_directory)

output_directory.mkdir(exist_ok=True)

with torch.no_grad():

left_images = sorted(glob.glob(args.left_imgs, recursive=True))

right_images = sorted(glob.glob(args.right_imgs, recursive=True))

print(f"Found {

len(left_images)} images. Saving files to {

output_directory}/")

for (imfile1, imfile2) in tqdm(list(zip(left_images, right_images))):

# image1 = load_image(imfile1)

# image2 = load_image(imfile2)

# print(imfile2)

start = time.time()

image1 = cv2.imread(imfile1)

image2 = cv2.imread(imfile2)

width = int(image1.shape[1] * 0.5)

height = int(image1.shape[0] * 0.5)

dim = (width, height)

image1 = cv2.resize(image1, dim, interpolation = cv2.INTER_AREA)

image2 = cv2.resize(image2, dim, interpolation = cv2.INTER_AREA)

#np.asarray(bytearray(req.read()), dtype=np.uint8)

# image1 = np.asarray(image1)

# image2 = np.asarray(image2)

image1 = load_image1(image1)

image2 = load_image1(image2)

padder = InputPadder(image1.shape, divis_by=32)

image1, image2 = padder.pad(image1, image2)

disp = model(image1, image2, iters=args.valid_iters, test_mode=True)

disp = disp.cpu().numpy()

K = np.array([[2.4219981e+03*0.5, 0, 1.2478e+3*0.5], [0, 2.4219981e+03*0.5, 1.00927e+3*0.5],[0, 0, 1]])

disline = 1.4939830129441067e+00

disp2pointcloud(disp.squeeze(), K, disline)

disp = padder.unpad(disp)

end = time.time()

print(end-start)

file_stem = imfile1.split('/')[-2]

filename = os.path.join(output_directory, f"{

file_stem}.png")

plt.imsave(output_directory / f"{

file_stem}.png", disp.squeeze(), cmap='jet')

# disp = np.round(disp * 256).astype(np.uint16)

# cv2.imwrite(filename, cv2.applyColorMap(cv2.convertScaleAbs(disp.squeeze(), alpha=0.01),cv2.COLORMAP_JET), [int(cv2.IMWRITE_PNG_COMPRESSION), 0])

if __name__ == '__main__':

parser = argparse.ArgumentParser()

parser.add_argument('--restore_ckpt', help="restore checkpoint", default='./pretrained_models/sceneflow/sceneflow.pth')

parser.add_argument('--save_numpy', action='store_true', help='save output as numpy arrays')

parser.add_argument('-l', '--left_imgs', help="path to all first (left) frames", default="./demo-imgs/leador/0.jpg")

parser.add_argument('-r', '--right_imgs', help="path to all second (right) frames", default="./demo-imgs/leador/1.jpg")

# parser.add_argument('-l', '--left_imgs', help="path to all first (left) frames", default="/data/Middlebury/trainingH/*/im0.png")

# parser.add_argument('-r', '--right_imgs', help="path to all second (right) frames", default="/data/Middlebury/trainingH/*/im1.png")

# parser.add_argument('-l', '--left_imgs', help="path to all first (left) frames", default="/data/ETH3D/two_view_training/*/im0.png")

# parser.add_argument('-r', '--right_imgs', help="path to all second (right) frames", default="/data/ETH3D/two_view_training/*/im1.png")

parser.add_argument('--output_directory', help="directory to save output", default="./demo-output/")

parser.add_argument('--mixed_precision', action='store_true', help='use mixed precision')

parser.add_argument('--valid_iters', type=int, default=32, help='number of flow-field updates during forward pass')

# Architecture choices

parser.add_argument('--hidden_dims', nargs='+', type=int, default=[128]*3, help="hidden state and context dimensions")

parser.add_argument('--corr_implementation', choices=["reg", "alt", "reg_cuda", "alt_cuda"], default="reg", help="correlation volume implementation")

parser.add_argument('--shared_backbone', action='store_true', help="use a single backbone for the context and feature encoders")

parser.add_argument('--corr_levels', type=int, default=2, help="number of levels in the correlation pyramid")

parser.add_argument('--corr_radius', type=int, default=4, help="width of the correlation pyramid")

parser.add_argument('--n_downsample', type=int, default=2, help="resolution of the disparity field (1/2^K)")

parser.add_argument('--slow_fast_gru', action='store_true', help="iterate the low-res GRUs more frequently")

parser.add_argument('--n_gru_layers', type=int, default=3, help="number of hidden GRU levels")

parser.add_argument('--max_disp', type=int, default=192, help="max disp of geometry encoding volume")

args = parser.parse_args()

Path(args.output_directory).mkdir(exist_ok=True, parents=True)

demo(args)

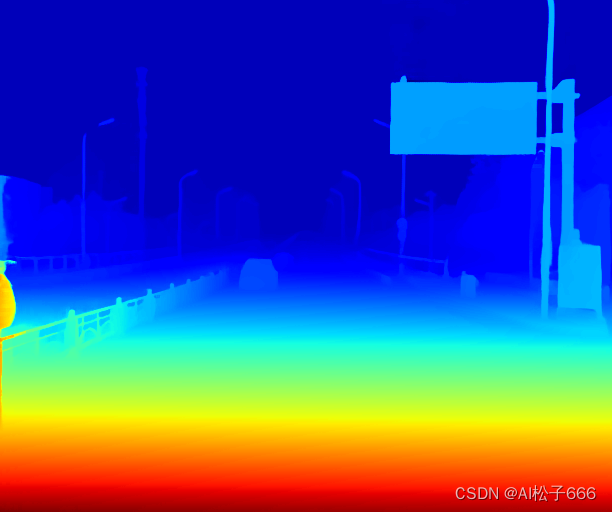

Imágenes de prueba de datos propios.

0.jpg, representa la imagen de la izquierda.

1.jpg, representa la imagen de la derecha

Resultados de la prueba