Binary deployment of a k8s cluster with Ansible

Master K's DevOps Perspective

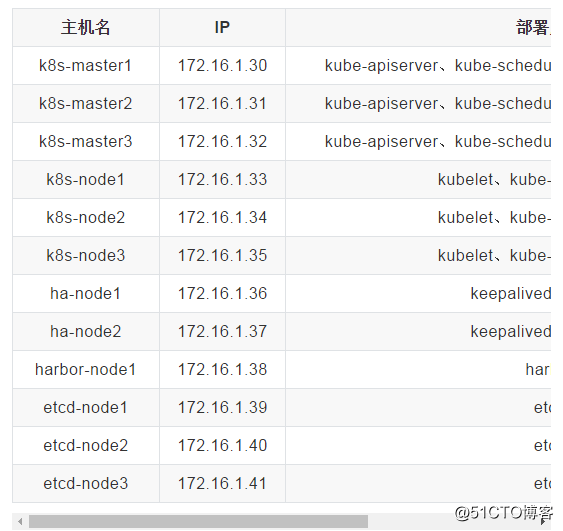

Server deployment

Server environment

root@k8s-master1:~# uname -r

4.15.0-112-generic

root@k8s-master1:~# cat /etc/issue

Ubuntu 18.04.4 LTS \n \l

The basic environment is the same as the one used when deploying the Kubernetes cluster with kubeadm.

Install keepalived and haproxy on ha-node1 and ha-node2. The virtual IP 172.16.1.188 serves as the VIP for the apiserver; ha-node1 acts as the primary (master) node and ha-node2 as the backup node.

Harbor-node1 runs the Harbor service, which stores the images.

The services above are not covered in detail here.

Deploy Ansible

k8s-master serves as the deployment server

Basic environment configuration

- Install python2.7

Install it on the master, node, and etcd hosts

# apt-get install python2.7 -y

# ln -s /usr/bin/python2.7 /usr/bin/python

- Install ansible

# apt install ansible

- Configure passwordless login from the ansible control host

# ssh-keygen

- Distribute the key

root@k8s-master1:~# ssh-copy-id 172.16.1.31

Distribute the key to every master, node, and etcd host
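Repeating `ssh-copy-id` by hand for every host is easy to get wrong, so the distribution step could be wrapped in a small loop. The following is only a sketch: `distribute_key` and the `COPY_CMD` override are hypothetical names introduced here for illustration, and the function assumes that plain `ssh-copy-id <host>` (as used above) is sufficient for each host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run the key-copy command once per host and
# report every host for which it failed.
# COPY_CMD is an assumed override hook (default: ssh-copy-id) so the
# loop can be dry-run with, for example, COPY_CMD=echo.
distribute_key() {
    local cmd="${COPY_CMD:-ssh-copy-id}" host failed=0
    for host in "$@"; do
        if ! "$cmd" "$host"; then
            echo "key distribution failed for $host" >&2
            failed=$((failed + 1))
        fi
    done
    # Succeed only if every host accepted the key.
    [ "$failed" -eq 0 ]
}

# Example call with the master, node, and etcd addresses of this setup:
#   distribute_key 172.16.1.30 172.16.1.31 172.16.1.33 172.16.1.34 \
#                  172.16.1.39 172.16.1.40 172.16.1.41
```

Running it first with `COPY_CMD=echo` prints the host list without touching any machine, which makes it easy to confirm the addresses before the real run.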

Download the project

# export release=2.2.0

# curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/${release}/easzup

# vim easzup

export DOCKER_VER=19.03.12

export KUBEASZ_VER=2.2.0

# chmod +x easzup

# ./easzup -D

Prepare the hosts file

# cd /etc/ansible/

# cp example/hosts.multi-node ./hosts

# vim hosts

# 'etcd' cluster should have odd member(s) (1,3,5,...)

# variable 'NODE_NAME' is the distinct name of a member in 'etcd' cluster

[etcd]

172.16.1.39 NODE_NAME=etcd1

172.16.1.40 NODE_NAME=etcd2

172.16.1.41 NODE_NAME=etcd3

# master node(s)

[kube-master]

172.16.1.30

172.16.1.31

# work node(s)

[kube-node]

172.16.1.33

172.16.1.34

# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'yes' to install a harbor server; 'no' to integrate with existed one

# 'SELF_SIGNED_CERT': 'no' you need put files of certificates named harbor.pem and harbor-key.pem in directory 'down'

[harbor]

#172.16.1.8 HARBOR_DOMAIN="harbor.yourdomain.com" NEW_INSTALL=no SELF_SIGNED_CERT=yes

# [optional] loadbalance for accessing k8s from outside

# External load balancer, used in self-hosted environments to forward traffic to services exposed via NodePort, etc.

[ex-lb]

172.16.1.36 LB_ROLE=master EX_APISERVER_VIP=172.16.1.188 EX_APISERVER_PORT=6443

172.16.1.37 LB_ROLE=backup EX_APISERVER_VIP=172.16.1.188 EX_APISERVER_PORT=6443

# [optional] ntp server for the cluster

[chrony]

#172.16.1.1

[all:vars]

# --------- Main Variables ---------------

# Cluster container-runtime supported: docker, containerd

CONTAINER_RUNTIME="docker"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="flannel"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="172.20.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="10.20.0.0/16"

# NodePort Range

NODE_PORT_RANGE="30000-60000"

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="kevin.local."

# -------- Additional Variables (don't change the default value right now) ---

# Binaries Directory

bin_dir="/usr/bin"

# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl"

# Deploy Directory (kubeasz workspace)

base_dir="/etc/ansible"

The network plugin here is flannel, used as an example; it can be changed to calico.
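The comments in the hosts file require the etcd group to have an odd number of members (1, 3, 5, ...). A quick check before running any playbook could look like the sketch below. The function names `count_group_hosts` and `etcd_count_is_odd` are hypothetical, and the parsing assumes the simple INI layout shown above: one host per line, with `#` marking comments.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count the host lines under one [group] header
# of an INI-style Ansible inventory.
#   $1 = inventory path, $2 = group name (for example: etcd)
count_group_hosts() {
    awk -v grp="[$2]" '
        # Any section header switches the current section on or off.
        $1 ~ /^\[/ { in_grp = ($1 == grp); next }
        # Inside the wanted section, count non-empty, non-comment lines.
        in_grp && NF && $1 !~ /^#/ { n++ }
        END { print n + 0 }
    ' "$1"
}

# Succeed only when the etcd group has an odd, non-zero member count.
etcd_count_is_odd() {
    local n
    n="$(count_group_hosts "$1" etcd)"
    [ "$n" -gt 0 ] && [ $((n % 2)) -eq 1 ]
}

# Example call against the inventory prepared above:
#   etcd_count_is_odd /etc/ansible/hosts || echo "etcd needs an odd member count"
```

The same counting function can be pointed at `kube-master` or `kube-node` to confirm that the inventory lists the hosts you expect.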

Step-by-step deployment

Environment initialization

root@k8s-master1:/etc/ansible# vim 01.prepare.yml

# [optional] to synchronize system time of nodes with 'chrony'
- hosts:
  - kube-master
  - kube-node
  - etcd
  # - ex-lb
  # - chrony
  roles:
  - { role: chrony, when: "groups['chrony']|length > 0" }

# to create CA, kubeconfig, kube-proxy.kubeconfig etc.
- hosts: localhost
  roles:
  - deploy

# prepare tasks for all nodes
- hosts:
  - kube-master
  - kube-node
  - etcd
  roles:
  - prepare

root@k8s-master1:/etc/ansible# apt install python-pip

root@k8s-master1:/etc/ansible# ansible-playbook 01.prepare.yml

Install etcd

root@k8s-master1:/etc/ansible# ansible-playbook 02.etcd.yml

- Verify the etcd service on each etcd server

root@etc-node1:~# export NODE_IPS="172.16.1.39 172.16.1.40 172.16.1.41"

root@etc-node1:~# for ip in ${NODE_IPS};do /usr/bin/etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem endpoint health;done

https://172.16.1.39:2379 is healthy: successfully committed proposal: took = 11.288725ms

https://172.16.1.40:2379 is healthy: successfully committed proposal: took = 14.079052ms

https://172.16.1.41:2379 is healthy: successfully committed proposal: took = 13.639416ms

Install Docker

Docker is deployed in the same way as when deploying the Kubernetes cluster with kubeadm.

Deploy the masters

root@k8s-master1:/etc/ansible# ansible-playbook 04.kube-master.yml

root@k8s-master1:/etc/ansible# kubectl get node

NAME STATUS ROLES AGE VERSION

172.16.1.30 Ready,SchedulingDisabled master 65s v1.17.2

172.16.1.31 Ready,SchedulingDisabled master 65s v1.17.2

Deploy the nodes

- Prepare the image

# docker pull mirrorgooglecontainers/pause-amd64:3.1

# docker tag mirrorgooglecontainers/pause-amd64:3.1 harbor.kevin.com/base/pause-amd64:3.1

# docker push harbor.kevin.com/base/pause-amd64:3.1

- Change the image address

root@k8s-master1:/etc/ansible# vim roles/kube-node/defaults/main.yml

# Base container image

SANDBOX_IMAGE: "harbor.kevin.com/base/pause-amd64:3.1"

root@k8s-master1:/etc/ansible# ansible-playbook 05.kube-node.yml

root@k8s-master1:/etc/ansible# kubectl get node

NAME STATUS ROLES AGE VERSION

172.16.1.30 Ready,SchedulingDisabled master 16m v1.17.2

172.16.1.31 Ready,SchedulingDisabled master 16m v1.17.2

172.16.1.33 Ready node 10s v1.17.2

172.16.1.34 Ready node 10s v1.17.2

Deploy the network components

root@k8s-master1:/etc/ansible# ansible-playbook 06.network.yml

- Verify the network

root@k8s-master1:~# kubectl run net-test1 --image=alpine --replicas=3 sleep 360000

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/net-test1 created

root@k8s-master1:~# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-test1-5fcc69db59-67gtx 1/1 Running 0 37s 10.20.2.3 172.16.1.33 <none> <none>

net-test1-5fcc69db59-skpfl 1/1 Running 0 37s 10.20.2.2 172.16.1.33 <none> <none>

net-test1-5fcc69db59-w4chj 1/1 Running 0 37s 10.20.3.2 172.16.1.34 <none> <none>

root@k8s-master1:~# kubectl exec -it net-test1-5fcc69db59-67gtx sh

/ # ping 10.20.3.2

PING 10.20.3.2 (10.20.3.2): 56 data bytes

64 bytes from 10.20.3.2: seq=0 ttl=62 time=1.272 ms

64 bytes from 10.20.3.2: seq=1 ttl=62 time=0.449 ms

^C

--- 10.20.3.2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.449/0.860/1.272 ms

/ # ping 8.8.8.8

PING 8.8.8.8 (8.8.8.8): 56 data bytes

64 bytes from 8.8.8.8: seq=0 ttl=127 time=112.847 ms

^C

--- 8.8.8.8 ping statistics ---

2 packets transmitted, 1 packets received, 50% packet loss

round-trip min/avg/max = 112.847/112.847/112.847 ms

Once pods can reach each other across nodes and can reach external addresses, the underlying k8s layer is working.
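The ping checks above are read by eye. For a repeatable check, the summary line of the ping output can be parsed instead. The sketch below is only an illustration: `packet_loss` and `loss_ok` are hypothetical helper names, and the pattern assumes the summary format shown in the output above (`N% packet loss`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the packet-loss percentage found in the
# summary line of ping output read from stdin (nothing if no summary).
packet_loss() {
    sed -n 's/.* \([0-9][0-9]*\)% packet loss.*/\1/p' | tail -n 1
}

# Succeed when the measured loss is at most $1 percent.
# Fails if the input contains no ping summary line at all.
loss_ok() {
    local max="$1" loss
    loss="$(packet_loss)"
    [ -n "$loss" ] && [ "$loss" -le "$max" ]
}

# Example call, using a pod name and pod IP from the listing above:
#   kubectl exec net-test1-5fcc69db59-67gtx -- ping -c 3 10.20.3.2 | loss_ok 0 \
#       && echo "pod network OK"
```

Using a fixed count (`-c 3`) rather than interrupting with `^C` avoids the situation seen in the 8.8.8.8 test, where a request that was still in flight at the moment of the interrupt is counted as lost.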

Add a master node

root@k8s-master1:~# easzctl add-master 172.16.1.32

root@k8s-master1:~# kubectl get node

NAME STATUS ROLES AGE VERSION

172.16.1.30 Ready,SchedulingDisabled master 46h v1.17.2

172.16.1.31 Ready,SchedulingDisabled master 46h v1.17.2

172.16.1.32 Ready,SchedulingDisabled master 3m28s v1.17.2

172.16.1.33 Ready node 45h v1.17.2

172.16.1.34 Ready node 45h v1.17.2

Add a worker node

root@k8s-master1:~# easzctl add-node 172.16.1.35

root@k8s-master1:~# kubectl get node

NAME STATUS ROLES AGE VERSION

172.16.1.30 Ready,SchedulingDisabled master 46h v1.17.2

172.16.1.31 Ready,SchedulingDisabled master 46h v1.17.2

172.16.1.32 Ready,SchedulingDisabled master 11m v1.17.2

172.16.1.33 Ready node 46h v1.17.2

172.16.1.34 Ready node 46h v1.17.2

172.16.1.35 Ready node 3m44s v1.17.2

DNS service

Two DNS components are in common use today, kube-dns and CoreDNS; CoreDNS is installed here.

Deploy CoreDNS

root@k8s-master1:~# mkdir /opt/dns/

root@k8s-master1:~# cd /opt/dns/

root@k8s-master1:/opt/dns# git clone https://github.com/coredns/deployment.git

root@k8s-master1:/opt/dns# cd deployment/kubernetes/

root@k8s-master1:/opt/dns/deployment/kubernetes# ./deploy.sh >/opt/dns/coredns.yml

root@k8s-master1:/opt/dns/deployment/kubernetes# cd /opt/dns/

root@k8s-master1:/opt/dns# docker pull coredns/coredns:1.7.0

root@k8s-master1:/opt/dns# docker tag coredns/coredns:1.7.0 harbor.kevin.com/base/coredns:1.7.0

root@k8s-master1:/opt/dns# docker push harbor.kevin.com/base/coredns:1.7.0

root@k8s-master1:/opt/dns# vim coredns.yml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes kevin.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . 223.6.6.6 {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: k8s-app
                  operator: In
                  values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: harbor.kevin.com/base/coredns:1.7.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 512Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 172.20.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP

root@k8s-master1:/opt/dns# kubectl delete -f kube-dns.yaml

root@k8s-master1:/opt/dns# kubectl apply -f coredns.yml

Deploy the dashboard

The dashboard is deployed in the same way as when deploying the Kubernetes cluster with kubeadm.