First, determine the link to the novel

The link used in the code is the chapter index page of a serialized novel picked from https://www.biqukan.com.

```python
from bs4 import BeautifulSoup
import requests

link = 'https://www.biqukan.com/1_1094'
```

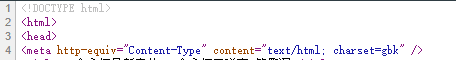

Second, view the page source

Viewing the source shows that:

1. The site is GBK-encoded.

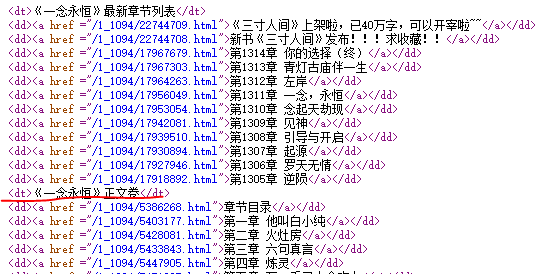

2. Every chapter is an `<a>` tag. The page also has other `<a>` tags, and those need to be filtered out.

3. We only want the chapters from the main-text volume (正文卷) onward, so we cut that range out of the list of links with a slice.
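Setting `res.encoding = 'gbk'` by hand works because the charset was read off the page source. Decoding GBK bytes as UTF-8 instead produces errors or mojibake. Below is a minimal sketch, using only the standard library, of reading the declared charset from the raw bytes instead of hard-coding it. The regex and the names `sniff_charset` and `decode_page` are hypothetical. Requests also offers `res.apparent_encoding`, which guesses the encoding from the content.

```python
import re

# Hypothetical helper pattern: finds charset=... inside a <meta> tag.
# Scanning raw bytes works because GBK, like UTF-8, encodes ASCII characters as themselves.
_META_CHARSET = re.compile(rb'<meta[^>]+charset=["\']?([A-Za-z0-9_-]+)', re.IGNORECASE)


def sniff_charset(raw: bytes, default: str = 'utf-8') -> str:
    """Return the charset declared in the page's <meta> tag, or `default` if none is found."""
    match = _META_CHARSET.search(raw[:4096])  # the declaration normally sits early, in <head>
    return match.group(1).decode('ascii').lower() if match else default


def decode_page(raw: bytes, default: str = 'utf-8') -> str:
    """Decode raw HTML bytes with the charset the page itself declares."""
    return raw.decode(sniff_charset(raw, default), errors='replace')
```

With requests, this would be `res.encoding = sniff_charset(res.content)` before reading `res.text`.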

```python
# Fetch the index page; the site uses GBK encoding, so set it explicitly
res = requests.get(link)
res.encoding = 'gbk'

# Parse the HTML with BeautifulSoup
soup = BeautifulSoup(res.text, 'html.parser')

lis = soup.find_all('a')  # every <a> tag on the page
lis = lis[42:-13]  # drop the links that are not chapters, keeping the main-text volume onward

# urldict stores every {chapter title: chapter URL}
urldict = {}

# Looking at the chapter URLs, each href only needs the site root prepended,
# so keep just split_link = 'https://www.biqukan.com/'
tmp = link.split("/")
split_link = "{0}//{1}/".format(tmp[0], tmp[2])

# Pair each chapter title with its full URL and add it to urldict
for a in lis:
    print({a.string: split_link + a.attrs['href']})
    urldict[a.string] = split_link + a.attrs['href']
```
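The fixed slice `lis[42:-13]` depends on how many non-chapter links happen to surround the chapter list, so it breaks if the page layout changes.

Below is a minimal sketch of an alternative. It swaps in a different technique and is not the author's method. It keeps only the links whose href looks like a chapter page and builds absolute URLs with `urllib.parse.urljoin` instead of string concatenation. The href shape `/1_1094/<digits>.html` is an assumption that has not been verified against the site, and `build_chapter_dict` is a hypothetical name. The function takes `(title, href)` pairs, such as `[(a.string, a.get('href')) for a in soup.find_all('a')]`, so the logic can be tried without network access. If the page lists some chapters twice, a repeated href is moved to its later position.

```python
import re
from urllib.parse import urljoin, urlsplit


def build_chapter_dict(links, page_url):
    """Map chapter title -> absolute chapter URL.

    `links` is an iterable of (title, href) pairs. ASSUMPTION: chapter hrefs look
    like "/<book id>/<digits>.html", where the book id is the last path segment
    of `page_url` (e.g. "1_1094").
    """
    book_id = urlsplit(page_url).path.strip('/').split('/')[-1]
    chapter_href = re.compile(r'^/?' + re.escape(book_id) + r'/\d+\.html$')

    by_url = {}  # absolute URL -> title, in the order the links appear
    for title, href in links:
        if not title or not href or not chapter_href.match(href):
            continue  # not a chapter link (navigation, footer, empty anchor, ...)
        url = urljoin(page_url, href)
        # If the same chapter appears again, drop the earlier copy so the later position wins.
        by_url.pop(url, None)
        by_url[url] = title.strip()
    return {title: url for url, title in by_url.items()}
```

Applied to the index page, `urldict = build_chapter_dict([(a.string, a.get('href')) for a in soup.find_all('a')], link)` would replace both the slice and the `split_link` concatenation, provided the href assumption holds.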

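```python
from tqdm import tqdm  # progress bar over the chapters

for key in tqdm(urldict.keys()):
    tmplink = urldict[key]  # chapter URL
    res = requests.get(tmplink)  # fetch the chapter's HTML
    res.encoding = 'gbk'
    soup = BeautifulSoup(res.text, 'html.parser')  # parse the chapter page
    content = soup.find_all('div', id='content')[0]  # the chapter text is inside <div id="content">
    # One file per chapter, named after its title; 'w' overwrites, so a rerun does not
    # append a second copy. Non-breaking spaces ('\xa0') are stripped from the text.
    with open('text{}.txt'.format(key), 'w', encoding='utf8') as f:
        f.write(content.text.replace('\xa0', ''))
```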
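Naming each file after the chapter title can fail, because titles may contain characters that are not allowed in file names. On Windows, for example, these are `\ / : * ? " < > |`. Below is a minimal sketch, assuming the same `key` (title) and `content.text` values as the loop above. It replaces those characters, prefixes a zero-padded chapter index so the files sort in reading order, and strips non-breaking spaces as the loop already does. `safe_filename`, `clean_chapter_text`, `chapter_path` and `save_chapter` are hypothetical helpers.

```python
import re
from pathlib import Path

# Characters Windows forbids in file names ('/' is forbidden everywhere), plus line breaks and tabs.
_INVALID_CHARS = re.compile(r'[\\/:*?"<>|\r\n\t]')


def safe_filename(title: str, max_len: int = 100) -> str:
    """Replace characters that are illegal in file names with '_'."""
    # Trim surrounding dots/spaces; Windows rejects names ending in either.
    name = _INVALID_CHARS.sub('_', title).strip(' .')
    return name[:max_len] or 'untitled'


def clean_chapter_text(text: str) -> str:
    """Drop non-breaking spaces and blank lines, trimming each remaining line."""
    text = text.replace('\xa0', '')
    lines = (line.strip() for line in text.splitlines())
    return '\n'.join(line for line in lines if line)


def chapter_path(out_dir, index: int, title: str) -> Path:
    """Build out_dir/0001_<title>.txt; the zero-padded index keeps files in chapter order."""
    return Path(out_dir) / f'{index:04d}_{safe_filename(title)}.txt'


def save_chapter(out_dir, index: int, title: str, text: str) -> Path:
    """Write one chapter as UTF-8, overwriting any earlier copy."""
    path = chapter_path(out_dir, index, title)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(clean_chapter_text(text), encoding='utf-8')
    return path
```

Inside the download loop, this would become `for i, key in enumerate(tqdm(urldict), start=1):` followed by `save_chapter('novel', i, key, content.text)`. Because `write_text` overwrites any existing file, re-running the script does not duplicate text.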