At the beginning of Genesis, God said, "Let there be light," and there was light.

We live in a world full of light. Light is at once the most common and the most amazing thing in the world: it is everywhere, yet intangible. Physics tells us that light is an electromagnetic wave, and an electromagnetic wave has a field, so light, too, has a field: the light field.

The light field (Light Field) is the complete collection of light rays in space; capturing and displaying the light field makes it possible to reproduce the real world visually. The plenoptic function (Plenoptic Function), which has seven dimensions, is a mathematical model of the light field. It can be expressed as:

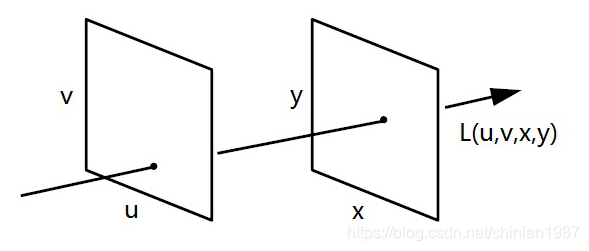

The seven variables are the three coordinates of spatial position, the two angles of direction, the wavelength, and the time. Ordinary photography and display mainly use the position and wavelength of light and rarely use its direction.

Capturing all of the light information in an environment requires a very large amount of data, so M. Levoy and P. Hanrahan of Stanford University simplified the plenoptic function into a four-dimensional signal, $L(u, v, s, t)$, where $L$ represents the intensity of the light and $(u, v)$ and $(s, t)$ are the coordinates at which the ray intersects two planes. In this four-dimensional coordinate space, each ray corresponds to one sample point of the light field, as shown below:
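To make the two-plane idea concrete, here is a minimal sketch that turns a single ray into its $(u, v, s, t)$ coordinates. It assumes the two planes are parallel and sit at `z = z_uv` and `z = z_st`; the function name, the plane positions, and the sample ray are all hypothetical and chosen only for illustration.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def ray_to_two_plane(origin: Vec3, direction: Vec3,
                     z_uv: float = 0.0,
                     z_st: float = 1.0) -> Tuple[float, float, float, float]:
    """Return (u, v, s, t): where the ray crosses the planes z = z_uv and z = z_st."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dz == 0.0:
        raise ValueError("ray is parallel to the planes and never crosses them")
    # Ray parameter at which the ray reaches each plane.
    a_uv = (z_uv - oz) / dz
    a_st = (z_st - oz) / dz
    u, v = ox + a_uv * dx, oy + a_uv * dy
    s, t = ox + a_st * dx, oy + a_st * dy
    return u, v, s, t


# A ray starting one unit behind the first plane, tilted along x.
print(ray_to_two_plane((0.0, 0.0, -1.0), (0.5, 0.0, 1.0)))  # (0.5, 0.0, 1.0, 0.0)
```

Two intersection points are enough to pin down both the position and the direction of a ray, which is why four numbers can describe it as long as wavelength and time are ignored.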

- Insert an image

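In the figure, $(u, v)$ is the plane of the lens pupil and $(s, t)$ is the plane of the sensor. During imaging, light first passes through the lens pupil and then reaches the sensor, so conventional image formation can be expressed, in the usual two-plane notation, as:

$$E(s, t) = \iint L(u, v, s, t)\, du\, dv$$

where $E(s, t)$ is the intensity recorded at the sensor point $(s, t)$.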

The formula above states that the light emitted by an object point in the real world passes through the pupil and converges onto the corresponding point on the image sensor. Such imaging records only the intensity of the light; its direction cannot be recovered.
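In discrete form, conventional imaging can be read as summing the light field over every direction that passes through the pupil. The sketch below assumes a toy light field stored as nested lists indexed `lf[u][v][s][t]`, with `(u, v)` on the pupil and `(s, t)` on the sensor; the layout and the function name are assumptions made for illustration.

```python
def conventional_image(lf):
    """Sum lf[u][v][s][t] over u and v, giving an image indexed by [s][t]."""
    n_s = len(lf[0][0])
    n_t = len(lf[0][0][0])
    image = [[0.0] * n_t for _ in range(n_s)]
    for u_slice in lf:            # every pupil position u
        for view in u_slice:      # every pupil position v
            for s in range(n_s):
                for t in range(n_t):
                    image[s][t] += view[s][t]
    return image


# Toy light field: 2 x 2 directions, 1 x 2 image points.
lf = [[[[1.0, 2.0]], [[3.0, 4.0]]],
      [[[5.0, 6.0]], [[7.0, 8.0]]]]
print(conventional_image(lf))  # [[16.0, 20.0]]
```

After the sum, the four directional samples behind each image point collapse into a single number, which is exactly the loss of direction described above.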

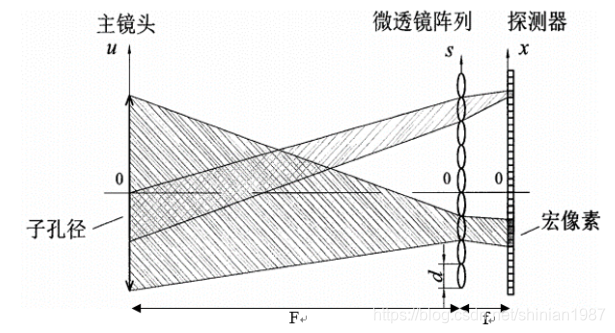

Unlike conventional imaging, a light field camera must record the four-dimensional light field at the image plane with a two-dimensional detector, that is, both the two-dimensional position distribution and the two-dimensional propagation direction. To capture this four-dimensional information, the light field has to be resampled and distributed onto a two-dimensional plane. Light field cameras have several different implementations; the more common ones are camera arrays, microlens arrays, and programmable apertures. The camera array was one of the earliest implementations. Later, Dr. Wu Yiren (Ren Ng), after graduating from Stanford University, founded Lytro, the company that introduced the world's first consumer light field camera. It places a microlens array in front of the sensor, as shown below:

- Insert an image

The positional relationship among the main lens, the microlens array, and the sensor is as follows: the microlens array is placed near the focal plane of the main lens, and the sensor is placed near the focal plane of the microlenses.

Light from different directions enters the camera through the main lens and converges onto different microlenses of the microlens array. Each microlens then spreads the light into several rays that fall onto individual photosensitive elements of the sensor. Each microlens acts as one macro pixel, and behind each microlens lies a group of sensor pixels (photosensitive cells), called meta pixels here. The luminance of a macro pixel is the sum of the luminances of all the meta pixels that belong to it. Each meta pixel records one ray passing through the microlens in front of it. In the Lytro Illum, the $k$ meta pixels behind a microlens record $k$ rays arriving through that microlens from different directions, so the Lytro Illum records a total of $m \times n \times k$ rays, where $m \times n$ is the number of microlenses.
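The macro pixel bookkeeping can be sketched in a few lines. This example assumes an idealized sensor in which every microlens covers an aligned square block of `p` by `p` pixels, so $k = p^2$; a real sensor needs calibration to find the microlens centers, and the function name and the 4 x 4 toy data are hypothetical.

```python
def macro_pixel_luminance(raw, p):
    """Sum each p x p block of sensor pixels into one macro-pixel value."""
    rows, cols = len(raw), len(raw[0])
    if rows % p or cols % p:
        raise ValueError("sensor size must be a multiple of the block size p")
    m, n = rows // p, cols // p
    out = [[0] * n for _ in range(m)]
    for r in range(rows):
        for c in range(cols):
            # Pixel (r, c) belongs to the microlens at (r // p, c // p).
            out[r // p][c // p] += raw[r][c]
    return out


raw = [[1, 2, 3, 4],
       [5, 6, 7, 8],
       [9, 10, 11, 12],
       [13, 14, 15, 16]]
macro = macro_pixel_luminance(raw, 2)
m, n, k = len(macro), len(macro[0]), 2 * 2
print(macro)      # [[14, 22], [46, 54]]
print(m * n * k)  # 16 rays recorded in total
```

With 2 x 2 microlenses and 4 pixels behind each, the toy sensor records 16 rays, one per sensor pixel.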

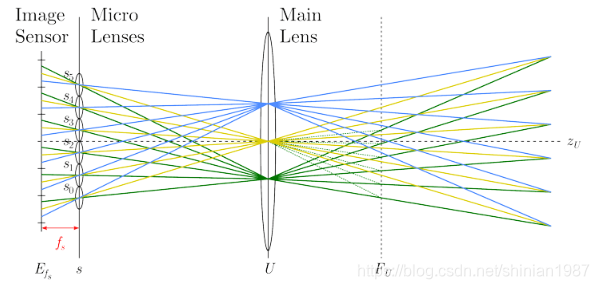

Compare this with conventional imaging. In conventional imaging, all the light emitted from an object point travels as one bundle and converges onto a single image point. In a light field camera, the light from an object point is split into several beams that converge onto different image points. Clearly, the larger the number of directional samples, the more finely the light is divided and the less light each image point receives. The number of directional samples is commonly called the angular resolution, also known as the number of viewpoints, while the number of spatial samples is generally called the image resolution. Equivalently, the main lens can be thought of as divided into sub-apertures, each of which forms an image at the image resolution. As shown below, the main lens has three virtual sub-apertures, and the light passing through each sub-aperture and the microlens array forms an image on a different set of sensor pixels.

- Insert an image

The angular resolution and the image resolution of a light field camera trade off against each other: because the total number of sensor pixels is fixed, increasing the angular resolution reduces the image resolution, and vice versa.
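A little arithmetic shows how steep this trade-off is. The sketch below assumes a hypothetical 4000 x 3000 sensor and an idealized layout where each microlens covers a whole number of pixels; neither figure comes from a real camera.

```python
def spatial_resolution(sensor_w, sensor_h, angular_w, angular_h):
    """Image resolution left when each microlens uses angular_w x angular_h pixels."""
    return sensor_w // angular_w, sensor_h // angular_h


# Hypothetical 12-megapixel sensor; try several angular resolutions.
for a in (1, 5, 10, 20):
    print(a, spatial_resolution(4000, 3000, a, a))
```

An angular resolution of 1 x 1 is an ordinary camera at the full 4000 x 3000, while 10 x 10 viewpoints leave only a 400 x 300 image from the same sensor.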

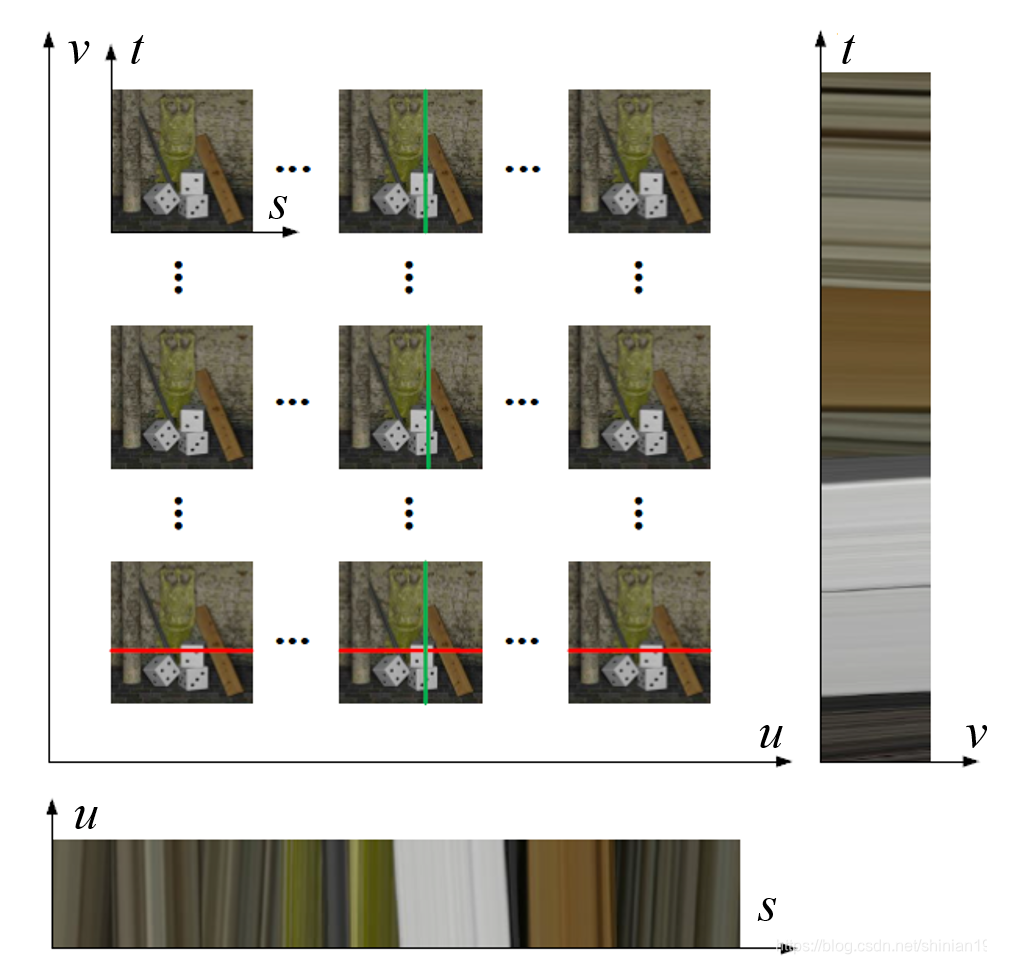

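A light field image can be represented in many different forms, because the full light field is four-dimensional information (five-dimensional if the color channels are counted). Color channels are not considered here, so the light field data can be expressed as $L(u, v, s, t)$, where $(u, v)$ are the angular (viewpoint) coordinates and $(s, t)$ are the spatial (image) coordinates:

- If $(u, v)$ is fixed, we obtain the sub-image seen from one particular viewpoint.

- If $(s, t)$ is fixed, we obtain the macro pixel behind one particular microlens.

- If one angular and one spatial coordinate along the same axis are fixed, for example $(u, s)$, we obtain an epipolar plane image (EPI), built by taking one fixed column from each sub-image in a column of the image array and stitching them together.

- If the other pair, $(v, t)$, is fixed, we likewise obtain an EPI, built by taking one fixed row from each sub-image in a row of the image array and stitching them together.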
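The four cases in the list above all amount to fixing two of the four indices of the light field. Here is a minimal sketch, assuming the light field is stored as nested lists indexed `lf[u][v][s][t]` with `(u, v)` as the viewpoint and `(s, t)` as the pixel inside a sub-image; the function names and the toy data, whose values simply encode their own indices, are hypothetical.

```python
def sub_aperture_image(lf, u, v):
    """Fix the viewpoint (u, v): the 2D image seen from that viewpoint."""
    return [row[:] for row in lf[u][v]]


def macro_pixel(lf, s, t):
    """Fix the image point (s, t): all directional samples behind one microlens."""
    return [[view[s][t] for view in u_slice] for u_slice in lf]


def epi_fixed_u_s(lf, u, s):
    """Fix (u, s): an epipolar plane image over the remaining (v, t)."""
    return [view[s][:] for view in lf[u]]


def epi_fixed_v_t(lf, v, t):
    """Fix (v, t): an epipolar plane image over the remaining (u, s)."""
    return [[row[t] for row in u_slice[v]] for u_slice in lf]


# Toy light field: 2 x 2 viewpoints, 3 x 3 pixels, value = 1000u + 100v + 10s + t.
lf = [[[[1000 * u + 100 * v + 10 * s + t for t in range(3)]
        for s in range(3)] for v in range(2)] for u in range(2)]

print(sub_aperture_image(lf, 1, 0))  # [[1000, 1001, 1002], [1010, 1011, 1012], [1020, 1021, 1022]]
print(macro_pixel(lf, 2, 1))         # [[21, 121], [1021, 1121]]
print(epi_fixed_u_s(lf, 0, 1))       # [[10, 11, 12], [110, 111, 112]]
print(epi_fixed_v_t(lf, 1, 2))       # [[102, 112, 122], [1102, 1112, 1122]]
```

Because each toy value spells out its own indices, it is easy to check by eye which two coordinates stay constant in every slice.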

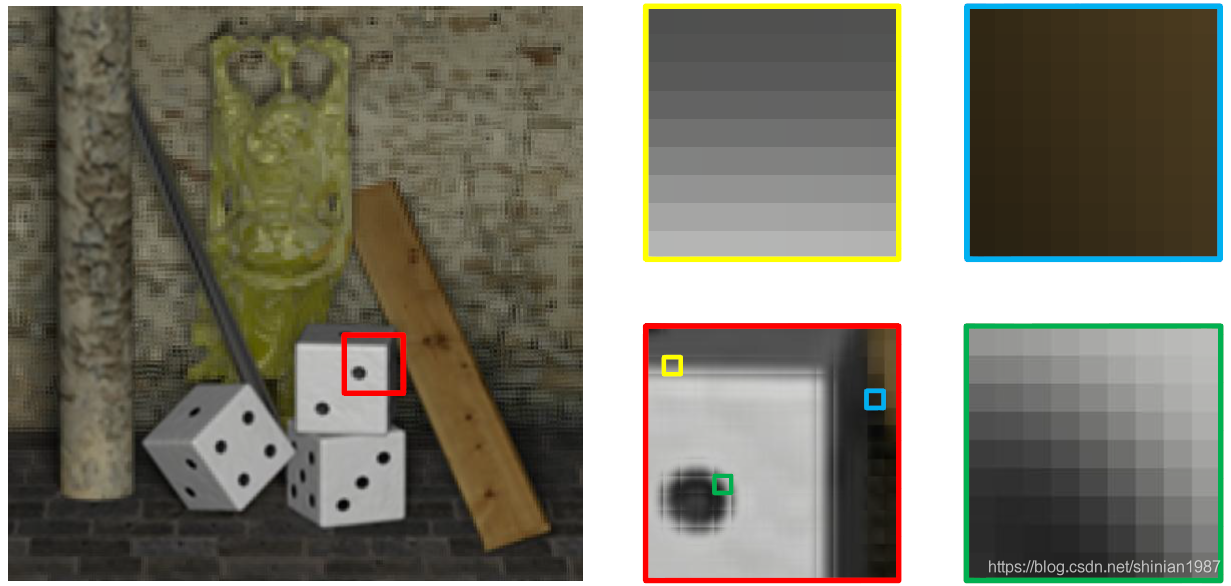

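As shown in the figures below:

In the figure above, the left side corresponds to a sub-image and the right side to a macro pixel.

The figures above correspond to the image array and the epipolar plane images, respectively.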

- References

https://www.vincentqin.tech/posts/light-field-depth-estimation/

https://www.cnblogs.com/riddick/p/6731130.html

http://www.plenoptic.info/pages/refocusing.html