Sarsa & Q-Learning

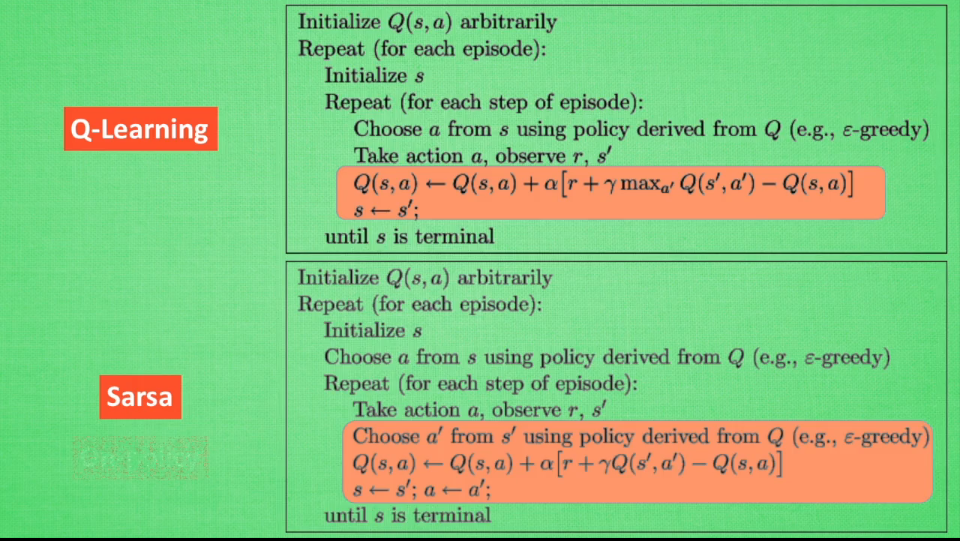

The public table, with respect to the difference Q Sarsa seems that the learning of the update process Sarsa,

Q (s ', a') and the manner of obtaining Q (s, a) the same, are obtained by analogous methods of epsilon-greedy.

And Q-Learning idea is directly grab s ', all corresponding action, select the maximum as the source of learning, i.e., max (a') [Q (s ', a')]

For Q:

We have s, we chose a, and then the resulting s ', using the existing q_table s' information selected as the largest of a 'learning, so that the end may be the fastest approach but when the s.' -> s when, a 'not retained, that is to say, a new step, and may not be selected once a', but re-use policy

For sarsa:

We have s, we chose a, then got s ', directly next round of policy chosen a', as learning content to modify the current weight, and the next state and action have been identified.

So the result is, Q of learning is to go straight to the end, if it is a single reward system, then, is obvious from the forward stepwise transfer, and for sarsa, is gradually test the experience for the first time may not get it soon a second time to spend, the final result is more than enough to try the path, eventually gradually find the optimal solution.