Contents:

1. keepalived

2. Configuration steps

1. keepalived

(1) What keepalived is

keepalived is cluster management software that keeps cluster services highly available. Like heartbeat, it is used to prevent single points of failure.

1. The three core modules of keepalived:

core: the core module

check: health checking

vrrp: Virtual Router Redundancy Protocol

2. The three main functions of the keepalived service:

Managing LVS

Health-checking the LVS cluster nodes

Providing high availability for network system services
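To illustrate the kind of node health check listed above, here is a minimal Bash sketch of a TCP connect check, similar in spirit to keepalived's TCP_CHECK but not keepalived's own code. It assumes Bash's `/dev/tcp` redirection and the coreutils `timeout` command are available; the function name `tcp_check` is hypothetical.

```bash
#!/usr/bin/env bash
# Minimal TCP health check (illustration only, not keepalived's implementation).
# Returns 0 if a TCP connection to host:port succeeds within the timeout.
tcp_check() {
    local host=$1 port=$2 timeout_s=${3:-3}
    # Redirecting to /dev/tcp/HOST/PORT makes Bash open a TCP socket;
    # timeout stops the attempt if the host does not answer in time.
    timeout "$timeout_s" bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}
```

For example, `for rs in 192.168.100.221 192.168.100.222; do tcp_check "$rs" 80 1 && echo "$rs UP" || echo "$rs DOWN"; done` marks each real server up or down, which is roughly the decision the check module makes before adding or removing a node from the LVS table.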

(2) How keepalived works

1. keepalived is built on VRRP, the Virtual Router Redundancy Protocol.

2. VRRP can be thought of as a protocol for making routers highly available. N routers that provide the same function form a router group with one master and several backups. The master holds the VIP and provides the external service (other machines on the LAN use the VIP as their default gateway), and it periodically sends VRRP advertisements by multicast. When the backups stop receiving these VRRP packets, they conclude that the master has failed and elect a new master from among themselves according to priority. This keeps the router highly available.

3. keepalived has three main modules: core, check, and vrrp. The core module is the heart of keepalived: it starts and maintains the main process and loads and parses the global configuration file. The check module performs health checks and supports a variety of common check methods. The vrrp module implements the VRRP protocol.
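The priority-based election described in point 2 can be made concrete with a simplified Bash sketch. It only models the decision and is not how keepalived is implemented. Each router is described by a hypothetical `name:priority:address` string. Following the VRRP specification, when two routers have equal priority, the one with the higher primary IP address wins. The priorities 100 and 90 in the example are assumptions.

```bash
#!/usr/bin/env bash
# Simplified VRRP master election (illustration only, not keepalived code).
# Each argument is "name:priority:address", e.g. "DR2:90:192.168.100.202".
# Highest priority wins; on a tie, the higher IPv4 address wins.

ip_to_int() {
    # Convert a dotted IPv4 address to an integer so addresses compare numerically.
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

elect_master() {
    local best_name="" best_prio=-1 best_ip=-1
    local entry name prio addr ipnum
    for entry in "$@"; do
        IFS=: read -r name prio addr <<< "$entry"
        ipnum=$(ip_to_int "$addr")
        if (( prio > best_prio || (prio == best_prio && ipnum > best_ip) )); then
            best_name=$name best_prio=$prio best_ip=$ipnum
        fi
    done
    echo "$best_name"
}

# Both directors alive: DR1 (priority 100) becomes master.
elect_master "DR1:100:192.168.100.201" "DR2:90:192.168.100.202"   # prints DR1
# DR1 has stopped advertising: only DR2 remains and takes over.
elect_master "DR2:90:192.168.100.202"                             # prints DR2
```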

2. Configuration steps

Lab environment:

(1) Prepare four virtual machines: two scheduling servers (directors) and two node servers;

(2) Deploy LVS and keepalived on the scheduling servers for load balancing and stateful failover;

(3) The client host accesses the back-end web pages through the virtual IP address;

(4) Expected result: if one DR goes down, all services remain accessible as usual.

| Role | IP address |
|---|---|
| DR1 scheduling server (master) | 192.168.100.201 |
| DR2 scheduling server (backup) | 192.168.100.202 |
| Node server web1 | 192.168.100.221 |
| Node server web2 | 192.168.100.222 |
| Virtual IP (VIP) | 192.168.100.10 |
| Client test machine (Windows 7) | 192.168.100.50 |

Step 1: Configure the two DRs

(1) Install the ipvsadm and keepalived packages

yum install ipvsadm keepalived -y

(2) Edit /etc/sysctl.conf and add the following lines, which enable IP forwarding and turn off ICMP redirects:

net.ipv4.ip_forward = 1

net.ipv4.conf.all.send_redirects = 0

net.ipv4.conf.default.send_redirects = 0

net.ipv4.conf.ens33.send_redirects = 0

sysctl -p    # make the settings above take effect
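If you would rather script step (2) than edit the file by hand, the following minimal Bash sketch sets each key idempotently, so re-running it does not add duplicate lines. The function name `set_sysctl` and its optional file argument are hypothetical. It relies on GNU `sed -i`, which CentOS provides.

```bash
#!/usr/bin/env bash
# Idempotently set "key = value" in a sysctl-style config file (sketch).
set_sysctl() {
    local key=$1 value=$2 file=${3:-/etc/sysctl.conf}
    local re=${key//./[.]}   # escape dots so they match literally in grep/sed
    touch "$file"
    if grep -q "^[[:space:]]*${re}[[:space:]]*=" "$file"; then
        # Key already present: rewrite its value in place.
        sed -i "s|^[[:space:]]*${re}[[:space:]]*=.*|${key} = ${value}|" "$file"
    else
        # Key missing: append it.
        echo "${key} = ${value}" >> "$file"
    fi
}
```

As root, calling `set_sysctl net.ipv4.ip_forward 1` and then `set_sysctl` once for each `send_redirects` key with value `0`, followed by `sysctl -p`, gives the same result as the manual edit.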

(3) Configure the virtual interface (ens33:0):

1. Path: /etc/sysconfig/network-scripts/

2. Copy the existing interface file and edit the copy:

cp ifcfg-ens33 ifcfg-ens33:0

vim ifcfg-ens33:0

Delete all of the original content and add the following:

DEVICE=ens33:0

ONBOOT=yes

IPADDR=192.168.100.10

NETMASK=255.255.255.0

3. Bring up the virtual interface:

ifup ens33:0
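As a non-interactive alternative to copying the file and editing it in vim, the ifcfg file can be written directly from a script. The following is a minimal Bash sketch. The function name `write_ifcfg_alias` and its optional directory argument are hypothetical, and it writes only the four keys used above.

```bash
#!/usr/bin/env bash
# Write a minimal ifcfg file for an alias interface such as ens33:0 (sketch).
write_ifcfg_alias() {
    local device=$1 ipaddr=$2 netmask=$3 dir=${4:-/etc/sysconfig/network-scripts}
    # Overwrites any existing file for this device.
    cat > "$dir/ifcfg-$device" <<EOF
DEVICE=$device
ONBOOT=yes
IPADDR=$ipaddr
NETMASK=$netmask
EOF
}
```

On the director, run `write_ifcfg_alias ens33:0 192.168.100.10 255.255.255.0 && ifup ens33:0` as root. The same function could write the lo:0 file on the web servers.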

(4) Write the service startup script in /etc/init.d:

1. Create dr.sh with vim; the script reads as follows:

#!/bin/bash

GW=192.168.100.1

VIP=192.168.100.10

RIP1=192.168.100.221

RIP2=192.168.100.222

case "$1" in

start)

/sbin/ipvsadm --save > /etc/sysconfig/ipvsadm

systemctl start ipvsadm

/sbin/ifconfig ens33:0 $VIP broadcast $VIP netmask 255.255.255.255 up

/sbin/route add -host $VIP dev ens33:0

/sbin/ipvsadm -A -t $VIP:80 -s rr

/sbin/ipvsadm -a -t $VIP:80 -r $RIP1:80 -g

/sbin/ipvsadm -a -t $VIP:80 -r $RIP2:80 -g

echo "ipvsadm starting------------------[ok]"

;;

stop)

/sbin/ipvsadm -C

systemctl stop ipvsadm

ifconfig ens33:0 down

route del $VIP

echo "ipvsadm stopped--------------------[ok]"

;;


status)

if [ ! -e /var/lock/subsys/ipvsadm ];then

echo "ipvsadm stopped--------------------"

exit 1

else

echo "ipvsadm running-------------[ok]"

fi

;;

*)

echo "Usage: $0 {start|stop|status}"

exit 1

esac

exit 0

2. Make the script executable and start it:

chmod +x dr.sh

service dr.sh start

(5) Configure the second DR exactly like the first by repeating the steps above.
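The ipvsadm rules that dr.sh installs follow a simple pattern: one `-A` line creates the virtual service with round-robin scheduling (`-s rr`), and one `-a` line per real server adds it in direct-routing mode (`-g`). The following Bash sketch only prints those commands as a dry run, so you can extend the pattern to more nodes and review it before applying. The function name `lvs_dr_rules` is hypothetical.

```bash
#!/usr/bin/env bash
# Print the ipvsadm commands for an LVS-DR virtual service (dry run, sketch).
# Mirrors dr.sh: round-robin scheduling (-s rr), direct routing (-g).
lvs_dr_rules() {
    local vip=$1 port=$2 rip
    shift 2
    echo "ipvsadm -A -t $vip:$port -s rr"
    for rip in "$@"; do
        echo "ipvsadm -a -t $vip:$port -r $rip:$port -g"
    done
}

lvs_dr_rules 192.168.100.10 80 192.168.100.221 192.168.100.222
# ipvsadm -A -t 192.168.100.10:80 -s rr
# ipvsadm -a -t 192.168.100.10:80 -r 192.168.100.221:80 -g
# ipvsadm -a -t 192.168.100.10:80 -r 192.168.100.222:80 -g
```

After review, the output can be piped to `sh` as root to apply it. `ipvsadm -L -n` then lists the resulting virtual server table.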

Step 2: Configure the first node server, web1

(1) Install httpd

yum install httpd -y

systemctl start httpd.service    # start the service

(2) Create a test page for the site so the results are easy to verify later:

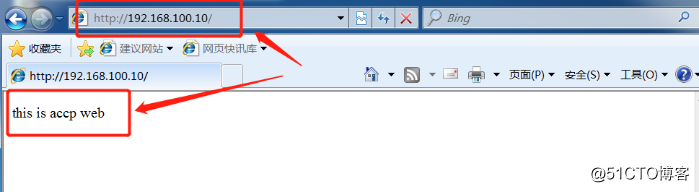

Path: /var/www/html

echo "this is accp web" > index.html

(3) Create a virtual network interface

1. Path: /etc/sysconfig/network-scripts/

2. Copy the existing interface file and edit the copy:

cp ifcfg-lo ifcfg-lo:0

3. Open it with vim ifcfg-lo:0, delete all of the original content, and add the following. Use a /32 mask, matching web.sh, so that only the VIP itself is treated as local on the loopback interface:

DEVICE=lo:0

IPADDR=192.168.100.10

NETMASK=255.255.255.255

ONBOOT=yes

(4) Write the service startup script in /etc/init.d:

1. Create web.sh with vim; the script reads as follows:

#!/bin/bash

VIP=192.168.100.10

case "$1" in

start)

ifconfig lo:0 $VIP netmask 255.255.255.255 broadcast $VIP

/sbin/route add -host $VIP dev lo:0

echo "1" > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" > /proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" > /proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" > /proc/sys/net/ipv4/conf/all/arp_announce

sysctl -p > /dev/null 2>&1

echo "RealServer Start OK "

;;

stop)

ifconfig lo:0 down

route del $VIP > /dev/null 2>&1

echo "0" > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo "0" > /proc/sys/net/ipv4/conf/lo/arp_announce

echo "0" > /proc/sys/net/ipv4/conf/all/arp_ignore

echo "0" > /proc/sys/net/ipv4/conf/all/arp_announce

echo "RealServer Stopped"

;;

*)

echo "Usage: $0 {start|stop}"

exit 1

esac

exit 0

2. Make the script executable and run it:

chmod +x web.sh    # make the script executable

service web.sh start    # start the service
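To confirm that the ARP settings web.sh writes have taken effect on a real server, the read-only Bash sketch below compares the kernel values for `lo` and `all` with the values commonly used for LVS-DR real servers (`arp_ignore=1`, `arp_announce=2`). The function name `check_arp` is hypothetical. The optional root argument exists only so that the check can be pointed at a copy of `/proc/sys/net/ipv4` for testing.

```bash
#!/usr/bin/env bash
# Check LVS-DR ARP settings on a real server (read-only sketch).
# Returns 0 only if lo and all both have arp_ignore=1 and arp_announce=2.
check_arp() {
    local root=${1:-/proc/sys/net/ipv4} status=0 ifc ign ann
    for ifc in lo all; do
        ign=$(cat "$root/conf/$ifc/arp_ignore")
        ann=$(cat "$root/conf/$ifc/arp_announce")
        if [ "$ign" = 1 ] && [ "$ann" = 2 ]; then
            echo "$ifc: ok (arp_ignore=$ign, arp_announce=$ann)"
        else
            echo "$ifc: WRONG (arp_ignore=$ign, arp_announce=$ann)"
            status=1
        fi
    done
    return $status
}
```

On web1 or web2, `check_arp` with no argument reads the live `/proc` values after `service web.sh start`.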

(5) Bring up the virtual interface

ifup lo:0

(6) Check that the test page loads correctly

Step 3: Configure the second node server, web2

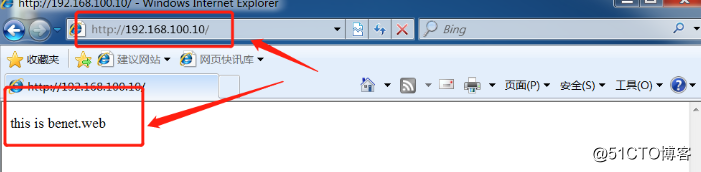

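The second web server is configured exactly like the first. The only difference is that its test page has different content, so the two servers can be told apart during the experiment:

Path: /var/www/html

echo "this is benet web" > index.html

Check that the test page loads correctly: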

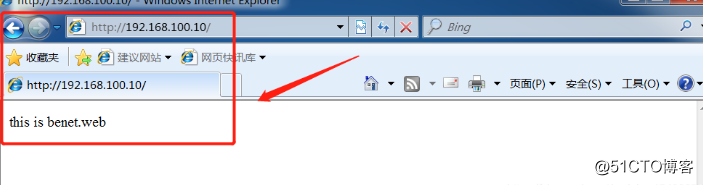

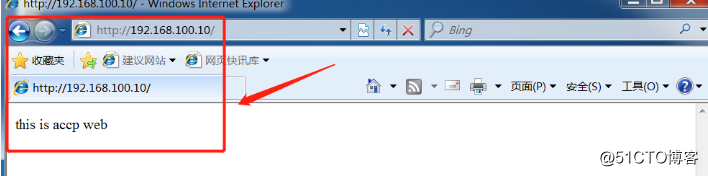

Step 4: Client test

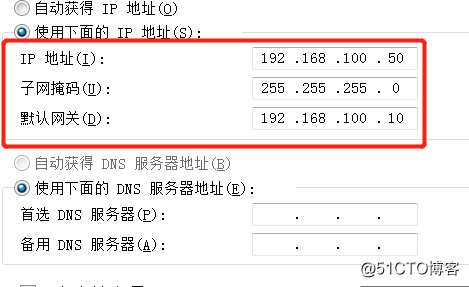

(1) Configure the client's IP address

(2) Test

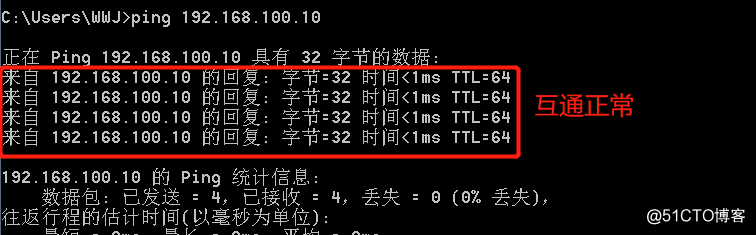

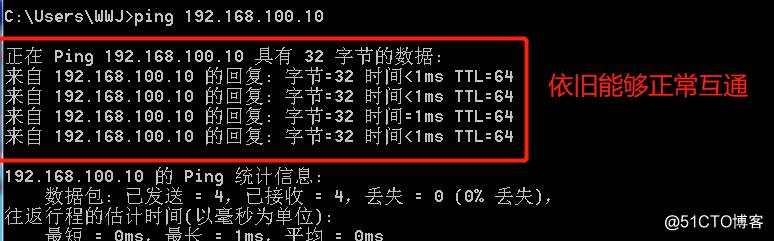

1. Check that the client can reach 192.168.100.10:

2. Check that the web page can be accessed
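The article tests from a Windows 7 client. If a Linux host with curl is available on 192.168.100.0/24 (an assumption), the following Bash sketch requests the VIP several times and counts which test page came back. The function name `probe_vip` is hypothetical. Each curl call opens a new connection, so with the round-robin (`rr`) scheduling set up in dr.sh the two pages should roughly alternate. Persistence settings, if configured, would pin a client to one node instead.

```bash
#!/usr/bin/env bash
# Request the VIP several times and tally which back end answered (sketch).
probe_vip() {
    local url=$1 count=${2:-6} i
    for ((i = 0; i < count; i++)); do
        # -s: silent; --max-time: give up on a dead VIP instead of hanging.
        curl -s --max-time 2 "$url" || echo "(no response)"
    done | sort | uniq -c
}
```

For example, `probe_vip http://192.168.100.10/ 6` should show counts for both "this is accp web" and "this is benet web".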

Step 5: Deploy keepalived

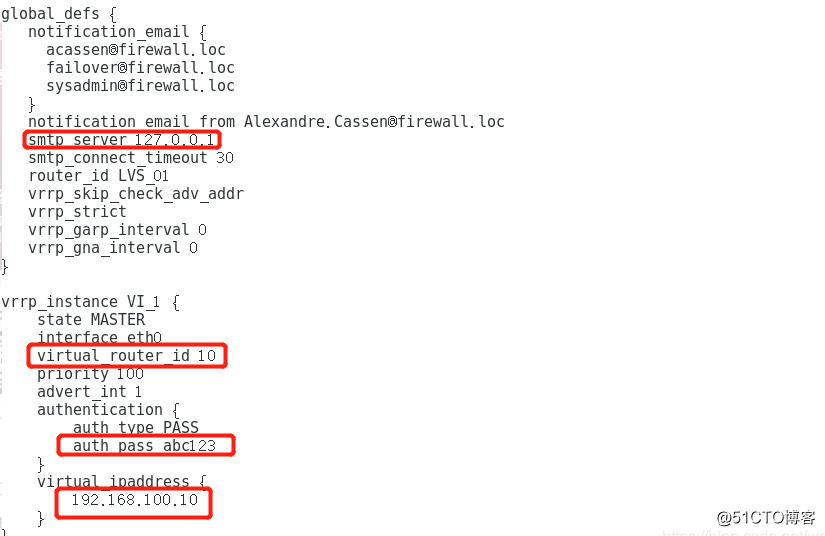

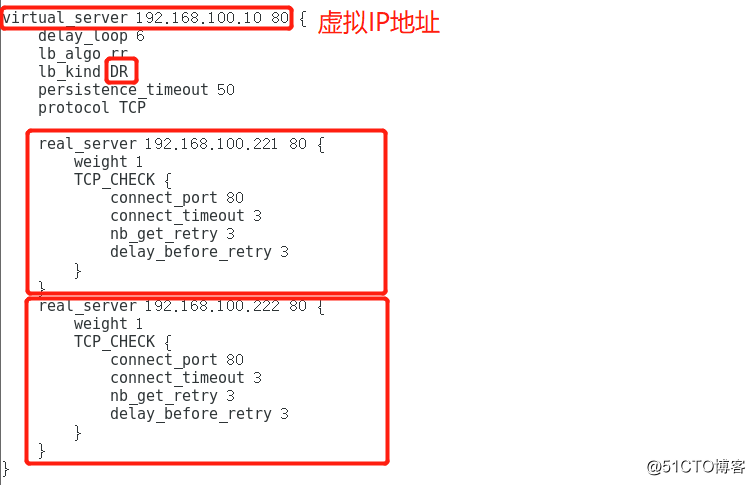

1. Deploy on the first DR:

(1) Edit the keepalived.conf file in /etc/keepalived/

Modify the following content:

(2) Start the service

systemctl start keepalived.service

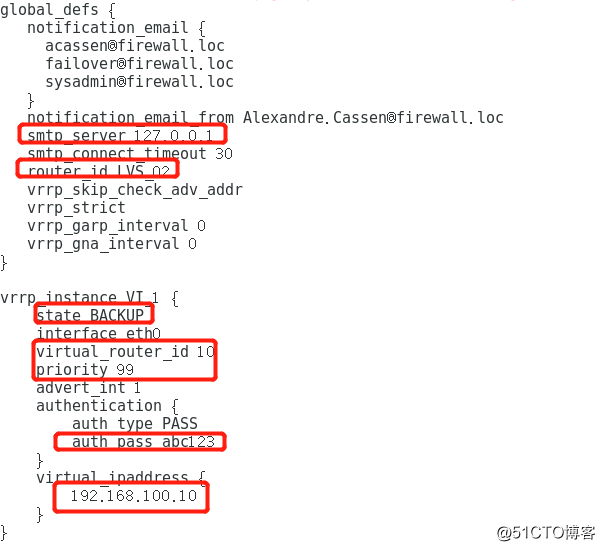

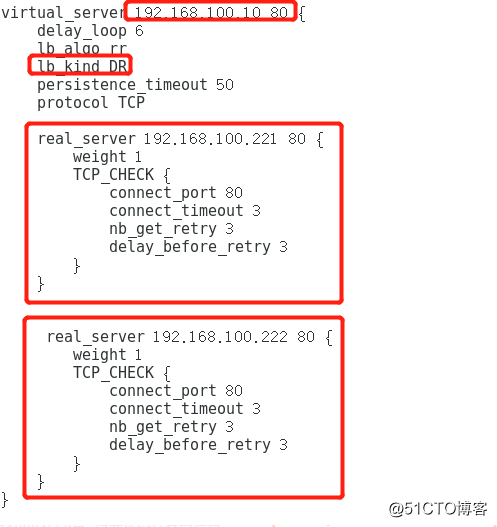
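The exact keepalived.conf changes for this step were not preserved. Purely as a hypothetical reference for the topology in the table above, the Bash sketch below writes a keepalived.conf with a VRRP instance that holds the VIP and an LVS-DR virtual server that health-checks both web nodes over TCP. The `router_id`, `virtual_router_id`, priorities, and `auth_pass` values are assumptions, not the author's settings. The function name `gen_keepalived_conf` is also hypothetical. Check every keyword against the keepalived.conf documentation for your keepalived version before use.

```bash
#!/usr/bin/env bash
# Generate a keepalived.conf for one director (hypothetical example values).
# Usage: gen_keepalived_conf MASTER|BACKUP PRIORITY ROUTER_ID [OUTFILE]
gen_keepalived_conf() {
    local state=$1 priority=$2 router_id=$3 out=${4:-/etc/keepalived/keepalived.conf}
    local vip=192.168.100.10 rs
    {
        cat <<EOF
global_defs {
    router_id $router_id
}
vrrp_instance VI_1 {
    state $state
    interface ens33
    virtual_router_id 10
    priority $priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        $vip
    }
}
virtual_server $vip 80 {
    delay_loop 6
    lb_algo rr
    lb_kind DR
    protocol TCP
EOF
        # One real_server block per web node, each with a TCP health check.
        for rs in 192.168.100.221 192.168.100.222; do
            cat <<EOF
    real_server $rs 80 {
        weight 1
        TCP_CHECK {
            connect_port 80
            connect_timeout 3
        }
    }
EOF
        done
        echo "}"
    } > "$out"
}
```

Under these assumptions, DR1 would use `gen_keepalived_conf MASTER 100 LVS_01` and DR2 would use `gen_keepalived_conf BACKUP 90 LVS_02`. The `virtual_router_id` and `auth_pass` must match on both directors. Restart keepalived.service afterwards.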

2. Deploy on the second DR:

(1) Edit the keepalived.conf file

(2) Start the service

systemctl start keepalived.service

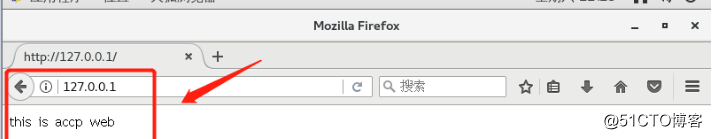

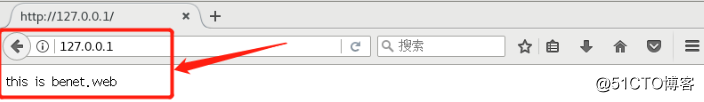

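Step 6: Verify the results

LVS and keepalived were deployed to provide load balancing and hot standby between the two directors.

Now simulate a failure by taking DR1 down. If the client can still reach the virtual IP address and access the website normally, DR2 has taken over from DR1, which shows that the single point of failure has been eliminated.

(1) Simulate a failure: take DR1 down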

ifdown ens33:0

(2) Verify the results

1. Ping the virtual IP from the client

2. The website is still accessible
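To see which director has taken over, you can also check on each DR whether it currently holds the VIP. The following is a minimal Bash sketch that uses the iproute2 `ip` command. The function name `holds_vip` is hypothetical. `ip addr show dev ens33` also lists addresses labelled ens33:0, so it covers both the alias interface and an address that keepalived adds.

```bash
#!/usr/bin/env bash
# Report whether this director currently holds the VIP (sketch).
holds_vip() {
    local iface=$1 vip=$2
    # -o prints one line per address, e.g. "2: ens33    inet 192.168.100.10/32 ...";
    # the trailing "/" keeps 192.168.100.1 from matching 192.168.100.10.
    ip -o -4 addr show dev "$iface" | grep -qF "inet $vip/"
}
```

After the failure, `holds_vip ens33 192.168.100.10 && echo "VIP is here" || echo "VIP not here"` should report the VIP on DR2.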