8.1 Hadoop Optimization and Development

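- Deficiencies and limitations of Hadoop 1.0

- Low level of abstraction: all processing must be hand-coded as MapReduce programs

- Limited expressiveness: every computation has to be forced into Map and Reduce functions

- Developers must manage the dependencies between jobs themselves

- Difficult to see the overall program logic

- Inefficient iterative processing: each iteration runs as a separate MapReduce job that reads its input from HDFS and writes its output back to HDFS

- Wasted resources: the Map and Reduce phases run as two separate stages

- Poor real-time performance: suited to batch processing but not to real-time or interactive data processing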

- Improvements and upgrades to Hadoop

- HDFS 1.0 has a single NameNode, which is a single point of failure; HDFS HA was designed to provide a hot-standby mechanism for the NameNode.

- A single namespace cannot provide resource isolation; HDFS Federation was designed to manage multiple namespaces.

- MapReduce 1.0 manages resources inefficiently; the new resource management framework YARN was designed to address this.

- Pig, a scripting language for large-scale data processing, addresses the low level of abstraction: a few script statements are automatically translated into MapReduce jobs.

- Oozie, a workflow and coordination service engine, coordinates the different tasks running on Hadoop and addresses the lack of a mechanism for managing job dependencies.

- Tez, a computing framework that supports DAG jobs, decomposes job operations and recombines them into a larger DAG job, which removes the repeated operations between different MapReduce tasks.

- Kafka, a distributed publish-subscribe messaging system, usually serves as the data exchange hub of an enterprise big data analytics platform, efficiently exchanging different types of data among components. It addresses the lack of a unified data-exchange intermediary between the components and products of the Hadoop ecosystem.
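The dependency problem that a workflow engine such as Oozie solves can be illustrated by running jobs in an order where every job starts only after the jobs it depends on have finished. This is a minimal Python sketch of that idea, not Oozie's API: `run_workflow` and the job names are hypothetical, and each "job" is just a Python function.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Callable, Dict, List, Set


def run_workflow(jobs: Dict[str, Callable[[], None]],
                 deps: Dict[str, Set[str]]) -> List[str]:
    """Hypothetical helper: run every job after all jobs it depends on.

    `deps` maps a job name to the names of the jobs it depends on.
    Returns the names in the order they were run.
    """
    order: List[str] = []
    # static_order() lists every job after all of its dependencies and
    # raises graphlib.CycleError if the dependencies contain a cycle.
    for name in TopologicalSorter(deps).static_order():
        jobs[name]()
        order.append(name)
    return order


# Hypothetical pipeline: two cleaning jobs feed a join, which feeds a report.
ran: List[str] = []
jobs = {name: (lambda n=name: ran.append(n))
        for name in ["clean_logs", "clean_users", "join", "report"]}
deps = {"join": {"clean_logs", "clean_users"}, "report": {"join"}}
order = run_workflow(jobs, deps)
print(order)
```

Without such an engine, the developer has to encode this ordering by hand in driver code for every pipeline.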

8.2 New Features of HDFS 2.0

HDFS 2.0 adds HDFS HA and HDFS Federation.

HDFS HA

- HDFS 1.0 has a Secondary NameNode, whose role is to periodically fetch the namespace image file (FsImage) and the edit log (EditLog) from the NameNode, merge them, and send the merged FsImage back to the NameNode to replace the original one, which keeps the EditLog from growing too large. The Secondary NameNode also keeps a copy of the merged image, so it can serve only as a cold backup: it cannot take over immediately when the NameNode fails.

- To remove this single point of failure, HDFS 2.0 uses an HA architecture with two NameNodes: one is active and the other is a standby that acts as a hot backup and can take over immediately if the active NameNode fails. The state of the two NameNodes is kept in sync through a shared storage system such as NFS or QJM (Quorum Journal Manager), and ZooKeeper is used to ensure that only one NameNode is active at a time, preventing a "two managers" (split-brain) situation.
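The active/standby arrangement can be illustrated with a toy, single-process simulation: the active NameNode appends edits to a shared log, the standby replays them to stay in sync, and a lock plays the role ZooKeeper plays in allowing only one active node. This is a minimal Python sketch under simplifying assumptions: `SharedEditLog`, `ElectionLock`, and `NameNode` are hypothetical classes, not Hadoop APIs, and real failover also involves health checks, fencing, and DataNode block reports.

```python
from typing import Dict, List, Optional, Tuple


class SharedEditLog:
    """Hypothetical stand-in for shared edit storage such as NFS or QJM."""

    def __init__(self) -> None:
        self.entries: List[Tuple[str, str]] = []  # (operation, path)


class ElectionLock:
    """Hypothetical stand-in for the ZooKeeper lock: at most one holder."""

    def __init__(self) -> None:
        self.holder: Optional[str] = None

    def try_acquire(self, name: str) -> bool:
        if self.holder is None:
            self.holder = name
        return self.holder == name

    def release(self, name: str) -> None:
        if self.holder == name:
            self.holder = None


class NameNode:
    """Hypothetical NameNode holding an in-memory namespace (path -> exists)."""

    def __init__(self, name: str, edits: SharedEditLog) -> None:
        self.name = name
        self.edits = edits
        self.namespace: Dict[str, bool] = {}
        self.applied = 0  # number of shared-log entries already replayed
        self.active = False

    def write(self, op: str, path: str) -> None:
        # Only the active NameNode may change the namespace.
        if not self.active:
            raise RuntimeError(f"{self.name} is standby; write rejected")
        self.edits.entries.append((op, path))
        self.catch_up()

    def catch_up(self) -> None:
        # Replay edits from the shared log that this node has not applied yet.
        for op, path in self.edits.entries[self.applied:]:
            if op == "create":
                self.namespace[path] = True
            elif op == "delete":
                self.namespace.pop(path, None)
        self.applied = len(self.edits.entries)

    def try_become_active(self, lock: ElectionLock) -> bool:
        if lock.try_acquire(self.name):
            self.catch_up()  # apply outstanding edits before serving clients
            self.active = True
        return self.active


edits, lock = SharedEditLog(), ElectionLock()
nn1, nn2 = NameNode("nn1", edits), NameNode("nn2", edits)
nn1.try_become_active(lock)   # nn1 gets the lock and becomes active
nn2.try_become_active(lock)   # lock already held, so nn2 stays standby
nn1.write("create", "/data/a.txt")
nn2.catch_up()                # the standby keeps its namespace in sync

# Simulate nn1 failing: it stops serving and its lock is released.
nn1.active = False
lock.release("nn1")
nn2.try_become_active(lock)   # nn2 takes over as the hot standby
nn2.write("create", "/data/b.txt")
print(nn2.active, sorted(nn2.namespace))
```

Because the standby has already replayed the shared log, it can take over without rebuilding its namespace from scratch; this is what distinguishes a hot standby from the cold backup kept by the Secondary NameNode.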

HDFS Federation

- HDFS 1.0 has only one NameNode and cannot scale horizontally, so overall performance is limited by the throughput of that single NameNode. HDFS HA solves the single point of failure but does not address scalability, performance, or isolation.

- The Federation architecture uses multiple independent NameNodes so that the HDFS naming service can scale horizontally. Each NameNode manages its own namespace and blocks; the NameNodes form a federation and do not need to coordinate with one another.

- Every NameNode in the federation provides namespace and block management functions, and all NameNodes share the underlying storage resources of the DataNodes.

- Clients access the multiple namespaces of an HDFS Federation through a client-side mount table, which also lets them share data.

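- Advantages over HDFS 1.0:

- Scalability: each NameNode is in charge of part of the directory tree, so the cluster can scale out

- Higher performance: multiple NameNodes serve requests at the same time, giving higher read/write throughput

- Good isolation: data for different applications can be assigned to different NameNodes, so the applications interfere with each other very little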

- Note that HDFS Federation does not solve the single point of failure problem. Because each NameNode works independently, each one is still a single point of failure, so a backup NameNode must be deployed for each of them.
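A client-side mount table can be pictured as a map from path prefixes (mount points) to the NameNode that owns each namespace, with every path resolved to its longest matching mount point. This is a minimal Python sketch: the mount points, NameNode names, and `resolve` function are hypothetical. Hadoop implements the client-side mount table idea in ViewFs, which is configured through Hadoop configuration rather than a Python dictionary.

```python
from typing import Dict

# Hypothetical mount table: mount point -> NameNode that owns that namespace.
MOUNT_TABLE: Dict[str, str] = {
    "/user": "namenode1",
    "/data": "namenode2",
    "/data/logs": "namenode3",
}


def resolve(path: str, table: Dict[str, str] = MOUNT_TABLE) -> str:
    """Return the NameNode for the longest mount point that contains `path`."""
    best = ""
    for mount in table:
        # Compare whole path components: "/data" covers "/data/x" but not "/database".
        if path == mount or path.startswith(mount.rstrip("/") + "/"):
            if len(mount) > len(best):
                best = mount
    if not best:
        raise FileNotFoundError(f"no mount point covers {path}")
    return table[best]


for p in ["/data/logs/2024/app.log", "/data/raw/part-0000", "/user/alice/notes.txt"]:
    print(p, "->", resolve(p))
```

Longest-prefix matching lets a subtree such as `/data/logs` be carved out into its own namespace while the rest of `/data` stays on another NameNode.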

8.3 New-Generation Resource Management and Scheduling Framework: YARN

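- MapReduce 1.0 uses a Master/Slave architecture consisting of one JobTracker and multiple TaskTrackers, and it has the following disadvantages:

- Single point of failure

- The JobTracker "takes on everything" (resource management, task scheduling, and task monitoring), so it is overloaded

- Prone to out-of-memory errors, because resource allocation considers only the number of MapReduce tasks, not CPU or memory

- Unreasonable resource partitioning: resources are forcibly divided into Map slots and Reduce slots, which cannot be used interchangeably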

- Design ideas of YARN (Yet Another Resource Negotiator)

- The basic idea is decentralization: the three functions of the original JobTracker are split apart, with resource management going to the ResourceManager and task scheduling and monitoring going to the ApplicationMaster

- ResourceManager: handles client requests; starts and monitors ApplicationMasters; monitors NodeManagers; allocates and schedules resources

- ApplicationMaster: requests resources on behalf of one application and assigns them to its internal tasks; handles task scheduling, monitoring, and fault tolerance

- NodeManager: takes over the task-execution role of the original TaskTracker; manages the resources of a single node; processes commands from the ResourceManager and from the ApplicationMaster

- YARN uses the container (Container) as the unit of dynamic resource allocation; each container encapsulates a certain amount of resources such as CPU and memory

- The YARN ResourceManager is deployed on the same node as the HDFS NameNode

- The YARN ApplicationMaster and NodeManager are deployed together with the HDFS DataNodes
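Because a container bundles several resource types, the ResourceManager can place a request on any NodeManager that has enough free capacity in every dimension. This is a minimal Python sketch of that idea, assuming a simple first-fit policy over memory and CPU cores: `Node`, `ContainerRequest`, and `allocate` are hypothetical names, and YARN's real schedulers (such as the Capacity Scheduler and Fair Scheduler) use far richer policies.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ContainerRequest:
    """Hypothetical resource request for one container."""
    memory_mb: int
    vcores: int


@dataclass
class Node:
    """Hypothetical NodeManager and the resources it still has free."""
    name: str
    free_memory_mb: int
    free_vcores: int
    containers: List[ContainerRequest] = field(default_factory=list)

    def fits(self, req: ContainerRequest) -> bool:
        # A request fits only if every resource dimension has room.
        return (req.memory_mb <= self.free_memory_mb
                and req.vcores <= self.free_vcores)


def allocate(nodes: List[Node], req: ContainerRequest) -> Optional[str]:
    """First-fit: grant the request on the first node that can hold it."""
    for node in nodes:
        if node.fits(req):
            node.free_memory_mb -= req.memory_mb
            node.free_vcores -= req.vcores
            node.containers.append(req)
            return node.name
    return None  # no capacity now; the request would have to wait


nodes = [Node("nm1", 4096, 4), Node("nm2", 8192, 8)]
requests = [ContainerRequest(2048, 1), ContainerRequest(3072, 2),
            ContainerRequest(1024, 4), ContainerRequest(8192, 1)]
placements = [allocate(nodes, r) for r in requests]
print(placements)
```

The third request has enough memory on `nm1` but not enough CPU cores, so it lands on `nm2`; the last request fits nowhere. A fixed slot count cannot express this kind of multi-dimensional check.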

YARN workflow

- The user writes a client application and submits it to YARN. The submission includes the ApplicationMaster program, the command that starts the ApplicationMaster, the user program, and so on.

- The ResourceManager in YARN receives and processes the client's request and starts an ApplicationMaster for the application in a container.

- Once created, the ApplicationMaster first registers with the ResourceManager.

- The ApplicationMaster requests resources from the ResourceManager by polling over the RPC protocol.

- The ResourceManager allocates resources to the ApplicationMaster in the form of containers. Once the ApplicationMaster obtains the resources, it communicates with the NodeManager where the container resides and asks it to start the task.

- When the ApplicationMaster asks a container to start a task, it sets up the task's runtime environment (environment variables, JAR files, binaries, and so on), writes the task's start command into a script, and then starts the task by running that script in the container.

- Each task reports its status and progress to the ApplicationMaster over the RPC protocol, so the ApplicationMaster can track every task and restart any task that fails.

- When the application finishes running, the ApplicationMaster deregisters from the ResourceManager's application manager and shuts itself down.
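The workflow above can be condensed into a toy, single-process simulation that records one event per action; the code comments number the eight workflow bullets as steps 1 to 8, in order. This is a minimal Python sketch, not the YARN API: all classes and methods are hypothetical, RPC calls are plain method calls, and the rule that task `map-1` fails on its first attempt is an assumption made only to exercise the restart step.

```python
from typing import Dict, List


class ResourceManager:
    """Hypothetical stand-in for YARN's ResourceManager; RPCs are method calls."""

    def __init__(self) -> None:
        self.registered: List[str] = []
        self.events: List[str] = []
        self.next_container = 0

    def submit(self, app: str, tasks: List[str]) -> "ApplicationMaster":
        # Steps 1-2: accept the client's submission and start its ApplicationMaster.
        self.events.append(f"RM: accepted {app}, starting ApplicationMaster")
        return ApplicationMaster(app, tasks, self)

    def register(self, app: str) -> None:
        self.registered.append(app)
        self.events.append(f"RM: {app} registered")

    def request_container(self, app: str) -> str:
        # Steps 4-5: resources are granted in the form of containers.
        self.next_container += 1
        container = f"container_{self.next_container:02d}"
        self.events.append(f"RM: granted {container} to {app}")
        return container

    def unregister(self, app: str) -> None:
        self.registered.remove(app)
        self.events.append(f"RM: {app} unregistered")


class ApplicationMaster:
    """Hypothetical per-application master that schedules and monitors tasks."""

    def __init__(self, app: str, tasks: List[str], rm: ResourceManager,
                 max_attempts: int = 4) -> None:
        self.app, self.tasks, self.rm = app, tasks, rm
        self.max_attempts = max_attempts
        self.attempts: Dict[str, int] = {t: 0 for t in tasks}

    def launch(self, task: str, container: str) -> bool:
        # Step 6: the task is started in the container. Assumption for the demo:
        # "map-1" fails on its first attempt so that step 7 has something to do.
        self.attempts[task] += 1
        ok = not (task == "map-1" and self.attempts[task] == 1)
        status = "succeeded" if ok else "failed"
        self.rm.events.append(f"AM: {task} attempt {self.attempts[task]} "
                              f"in {container} {status}")
        return ok

    def run(self) -> None:
        self.rm.register(self.app)  # step 3
        for task in self.tasks:
            # Step 7: monitor each task and restart it in a new container on failure.
            for _ in range(self.max_attempts):
                if self.launch(task, self.rm.request_container(self.app)):
                    break
            else:
                raise RuntimeError(f"{task} failed {self.max_attempts} times")
        self.rm.unregister(self.app)  # step 8


rm = ResourceManager()
am = rm.submit("wordcount", ["map-0", "map-1", "reduce-0"])
am.run()
print("\n".join(rm.events))
```

Note how the ResourceManager only hands out containers and tracks registration, while the per-task retry logic lives entirely in the ApplicationMaster.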

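Advantages of the YARN framework over MapReduce 1.0

- It greatly reduces the resource consumption of the ResourceManager, which provides the central service: the resource-intensive work of task scheduling and monitoring moves to the ApplicationMasters, one per application, so monitoring is distributed.

- MapReduce 1.0 is both a computing framework and a resource management and scheduling framework, but it supports only the MapReduce programming model. YARN, by contrast, is a pure resource scheduling framework on which a variety of computing frameworks can run.

- YARN manages resources more efficiently: it allocates resources in units of containers rather than slots.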
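A small calculation shows why fixed slots can waste capacity. In MapReduce 1.0 a node's capacity is divided in advance into Map slots and Reduce slots, while YARN hands out containers from a shared pool. The numbers here are assumptions for illustration: a node with 4 Map slots and 4 Reduce slots versus the same node offered as 8 interchangeable equal-sized units, running a map-only job with 8 equal-sized tasks. The two functions are hypothetical helpers, sketched in Python.

```python
def busy_units_slots(map_tasks: int, reduce_tasks: int,
                     map_slots: int, reduce_slots: int) -> int:
    """MapReduce 1.0 model: Map tasks use only Map slots, Reduce tasks only Reduce slots."""
    return min(map_tasks, map_slots) + min(reduce_tasks, reduce_slots)


def busy_units_containers(map_tasks: int, reduce_tasks: int, units: int) -> int:
    """Container model (simplified): any task may use any free unit of capacity."""
    return min(map_tasks + reduce_tasks, units)


# Assumed node with 8 units of capacity; map-only job with 8 equal-sized tasks.
slots = busy_units_slots(8, 0, map_slots=4, reduce_slots=4)
containers = busy_units_containers(8, 0, units=8)
print(f"slots: {slots}/8 busy, containers: {containers}/8 busy")
```

With fixed slots, half the node sits idle because the Reduce slots cannot run Map tasks; the shared pool keeps all 8 units busy. Real YARN containers can also differ in size, which this simplified model ignores.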

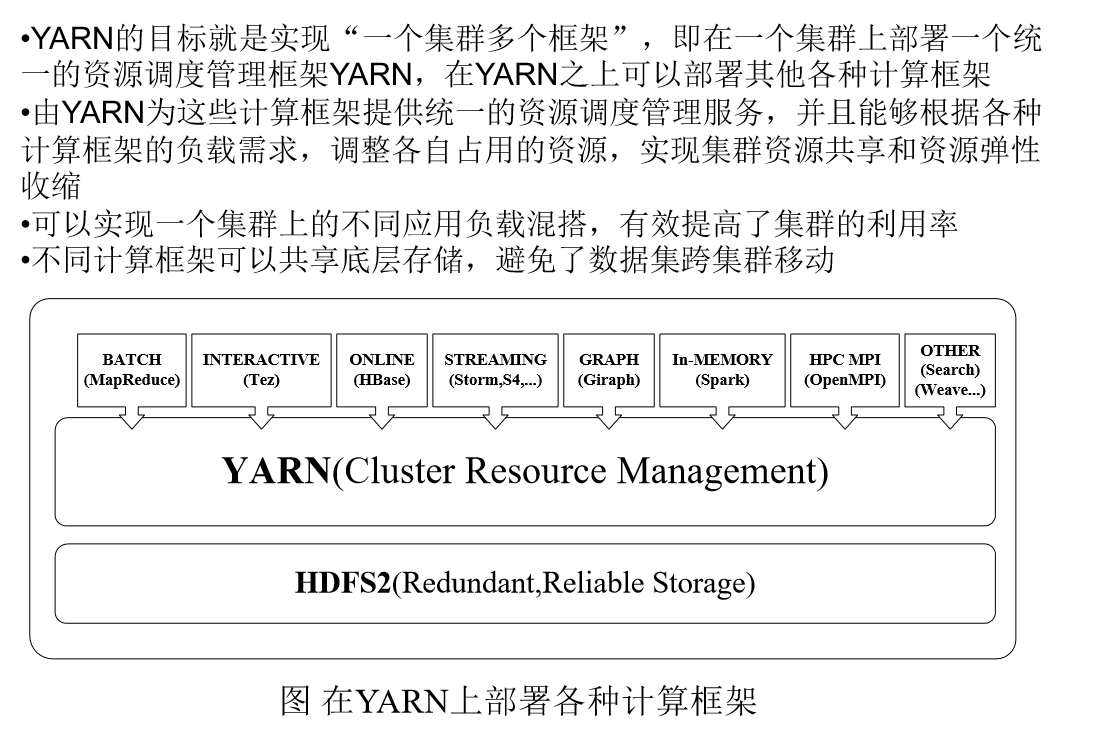

YARN Development Goals