Nonlinear equations

Why do we need activation functions? In one sentence: because reality is not as neat as we would like; it is harsh and varied.

Joking aside, activation functions exist to handle the everyday problems that linear equations cannot describe. That raises the obvious question.

What is a linear equation (linear function)?

We can reduce the whole network to a single formula, y = Wx, where W is the parameter we want to find, y is the predicted value, and x is the input value.

With this formula we can easily describe a linear problem, because the W we solve for can be a fixed number. What it cannot do is make the line bend. Seeing this, the activation function stands up to help and says: "Let me bend it!"
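
To make the point concrete, here is a minimal sketch in plain Python showing that stacking linear layers does not bend anything: two layers without an activation function behave exactly like one layer with a combined W. The weights `W1` and `W2`, the input `x`, and the helper functions are made up for illustration.

```python
def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    """Multiply matrices A and B (lists of rows)."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

# Hypothetical weights for two stacked linear layers (no activation function).
W1 = [[1.0, 2.0], [0.0, -1.0]]
W2 = [[3.0, 0.0], [1.0, 1.0]]
x = [2.0, 5.0]

two_layers = matvec(W2, matvec(W1, x))  # y = W2 (W1 x)
one_layer = matvec(matmul(W2, W1), x)   # y = (W2 W1) x, a single linear layer

print(two_layers, one_layer)  # both are [36.0, 7.0]
```

However many such layers are stacked, the result is still y = Wx for some fixed W, which is why something nonlinear is needed.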

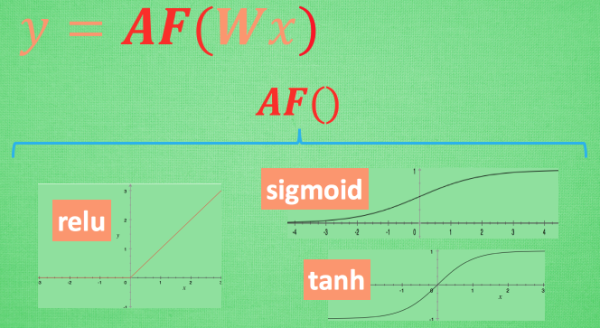

Activation function

Here AF stands for activation function. The activation function brings out what it does best, its "bending tool", and wraps it around the original function, giving y = AF(Wx), so that the original Wx result is bent.

In fact this AF, the bending tool, is nothing mysterious: it is simply another nonlinear function, for example relu, sigmoid, or tanh. Wrapping one of these bending tools around the original result forces the linear result to bend, so the output y takes on nonlinear characteristics as well. In this example I used relu as the bending tool: if Wx is 1, y is still 1, but if Wx is -1, y is 0 rather than -1.
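
The relu example can be checked with a few lines of plain Python. This is only a sketch: the scalar weight `W` is a made-up value, and sigmoid and tanh are included for comparison.

```python
import math

def relu(z):
    """Keep positive values, replace negative values with 0."""
    return max(0.0, z)

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

W = 1.0  # hypothetical fixed weight, chosen so that Wx equals x

for x in (1.0, -1.0):
    z = W * x  # the linear result Wx
    print(f"Wx = {z:+.1f}  relu = {relu(z):.1f}  "
          f"sigmoid = {sigmoid(z):.3f}  tanh = {math.tanh(z):.3f}")
```

With relu, Wx = 1 passes through unchanged while Wx = -1 becomes 0. Sigmoid and tanh bend the line smoothly instead of cutting it off.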

You can even create your own activation function to handle your own problem, but you must make sure it is differentiable (relu, for instance, is differentiable everywhere except at 0, which is enough in practice). During backpropagation, the error can only be passed back through activation functions that are differentiable.
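
As a rough sketch of why differentiability matters, the snippet below defines a hypothetical custom activation (log(1 + e^z), which is the function commonly known as softplus), uses its derivative in the chain rule to get the gradient of a squared error with respect to W, and compares that against a numerical estimate. The loss and the values of `W`, `x`, and `target` are assumptions made up for illustration.

```python
import math

def my_activation(z):
    """Hypothetical custom activation: log(1 + e^z), known as softplus."""
    return math.log1p(math.exp(z))

def my_activation_grad(z):
    """Its derivative, 1 / (1 + e^-z), needed to pass the error backwards."""
    return 1.0 / (1.0 + math.exp(-z))

def loss(W, x, target):
    """Squared error of the prediction y = AF(Wx)."""
    return (my_activation(W * x) - target) ** 2

def loss_grad_W(W, x, target):
    """Chain rule: dL/dW = 2 (y - target) * AF'(Wx) * x."""
    z = W * x
    return 2.0 * (my_activation(z) - target) * my_activation_grad(z) * x

W, x, target = 0.5, 2.0, 3.0  # made-up values for illustration

analytic = loss_grad_W(W, x, target)
eps = 1e-6
# Central finite difference as an independent check of the chain-rule result.
numeric = (loss(W + eps, x, target) - loss(W - eps, x, target)) / (2 * eps)

print(analytic, numeric)  # the two estimates should agree closely
```

The factor `my_activation_grad(z)` is the step that would be unavailable if the activation had no derivative.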

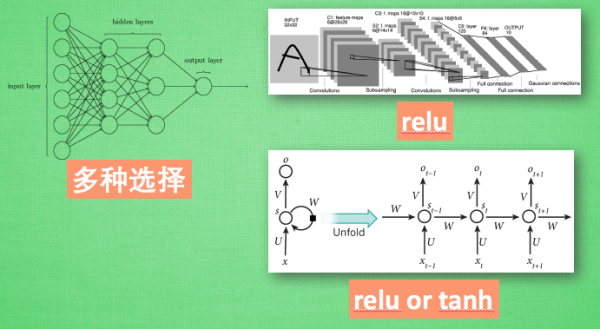

Common choices

There are some tricks to using these activation functions well. When your neural network has only two or three layers, any activation function will do for the hidden layers; bend the line however you like and it will make no great difference. When you use a very deep network with many layers, however, you can no longer pick the bending tool freely, because the choice runs into the exploding gradient and vanishing gradient problems. For reasons of time, we will leave a detailed discussion of that issue for later.
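
Without going into the details, a toy sketch can hint at where the problem comes from. It assumes a chain of one-unit layers with a sigmoid activation, the same hypothetical weight in every layer, and a pre-activation value held at 0 throughout. None of this comes from a real network; it only shows how repeated multiplication behaves during backpropagation.

```python
import math

def sigmoid_grad(z):
    """Derivative of the sigmoid; its largest value is 0.25, reached at z = 0."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)

def backprop_scale(weight, depth, z=0.0):
    """Factor by which an error signal is scaled after passing back through
    `depth` one-unit layers, each contributing weight * sigmoid'(z)."""
    scale = 1.0
    for _ in range(depth):
        scale *= weight * sigmoid_grad(z)
    return scale

for depth in (3, 20):
    small = backprop_scale(1.0, depth)  # per-layer factor 0.25: shrinks
    large = backprop_scale(8.0, depth)  # per-layer factor 2.0: grows
    print(depth, small, large)
```

With three layers both factors stay moderate. With twenty layers one has shrunk to almost nothing and the other has grown past a million, which is why the choice of activation function matters more in deep networks.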

Finally, the default choices for specific cases. In a network with only a few layers, we can try many different types of activation function. For the convolutional layers of a convolutional neural network, relu is the recommended activation function. For recurrent neural networks, tanh or relu is recommended.