Foreword

In recent years, distributed storage has entered the storage market on the strength of its high performance and high availability. Alongside commercial products, open source distributed storage software has become increasingly popular; Lustre, CephFS, and GlusterFS are typical representatives.

1 Introduction

Lustre is an open source, distributed parallel file system software platform that is highly scalable, high performance, and highly available. Lustre is designed to provide a globally consistent, POSIX-compliant namespace for large-scale high-performance computing systems. It supports hundreds of PB of storage space and aggregate concurrent bandwidth from hundreds of GB/s up to several TB/s.

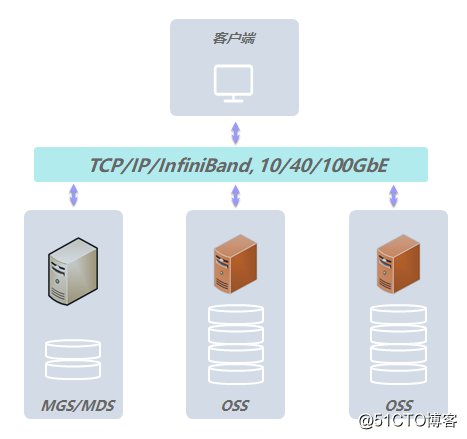

1.1 Environment Architecture

MGS (Management Server): stores the configuration information for all Lustre file systems in the cluster and provides it to the other Lustre components.

MDS (Metadata Server): makes metadata available to clients. Each MDS manages the file and directory names of the Lustre file system.

OSS (Object Storage Server): stores file data and serves client access to it.

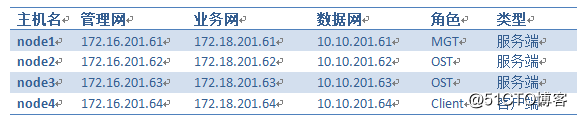

1.2 Network Planning

2. Preparing the Environment

NOTE: Perform the following steps on all hosts.

1. Set the hostname

hostnamectl set-hostname node1

2. Turn off firewalld and SELinux

systemctl stop firewalld && systemctl disable firewalld

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

3. Create a temporary yum repository configuration

cat >/tmp/lustre-repo.conf <<EOF

[lustre-server]

name=lustre-server

baseurl=https://downloads.whamcloud.com/public/lustre/latest-release/el7/server

gpgcheck=0

[lustre-client]

name=lustre-client

baseurl=https://downloads.whamcloud.com/public/lustre/latest-release/el7/client

gpgcheck=0

[e2fsprogs-wc]

name=e2fsprogs-wc

baseurl=https://downloads.whamcloud.com/public/e2fsprogs/latest/el7

gpgcheck=0

EOF

4. Install the required tools

yum install yum-utils createrepo perl linux-firmware -y

5. Download the packages to the local host in advance

mkdir -p /var/www/html/repo

cd /var/www/html/repo

reposync -c /tmp/lustre-repo.conf -n \
    -r lustre-server \
    -r lustre-client \
    -r e2fsprogs-wc

6. Create the local Lustre yum repositories

cd /var/www/html/repo

for i in e2fsprogs-wc lustre-client lustre-server; do

(cd $i && createrepo .)

done

7. Create the configuration file for the local Lustre repositories

cat > /etc/yum.repos.d/CentOS-lustre.repo <<EOF

[lustre-server]

name=lustre-server

baseurl=file:///var/www/html/repo/lustre-server/

enabled=0

gpgcheck=0

[lustre-client]

name=lustre-client

baseurl=file:///var/www/html/repo/lustre-client/

enabled=0

gpgcheck=0

[e2fsprogs-wc]

name=e2fsprogs-wc

baseurl=file:///var/www/html/repo/e2fsprogs-wc/

enabled=0

gpgcheck=0

EOF

8. Review the repositories

yum repolist all

2. Server Configuration

NOTE: Perform the following steps on all server hosts.

1. Install e2fsprogs

yum --nogpgcheck --disablerepo='*' --enablerepo=e2fsprogs-wc \
    install e2fsprogs -y

2. Remove the package that conflicts with the kernel

yum remove selinux-policy-targeted -y

3. Install and upgrade the kernel

yum --nogpgcheck --disablerepo=base,extras,updates \
    --enablerepo=lustre-server install \
    kernel \
    kernel-devel \
    kernel-headers \
    kernel-tools \
    kernel-tools-libs

4. Reboot

reboot

5. Install the ldiskfs kmod packages and Lustre

yum --nogpgcheck --enablerepo=lustre-server install \
    kmod-lustre \
    kmod-lustre-osd-ldiskfs \
    lustre-osd-ldiskfs-mount \
    lustre \
    lustre-resource-agents

6. Load the Lustre modules into the kernel

modprobe -v lustre

modprobe -v ldiskfs

echo 'options lnet networks=tcp0(ens1f1)' > /etc/modprobe.d/lustre.conf

depmod -a

3. Client Configuration

NOTE: Perform the following steps on the client host only.

The Lustre client host only needs the client software. There is no need to upgrade to the Lustre-patched kernel; lustre-client can be installed directly.
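Because only the servers run the Lustre-patched kernel, it can be handy to check which kind of kernel a host is running. The sketch below assumes that patched kernels carry `_lustre` in their release string, as in the `3.10.0-957.10.1.el7_lustre.x86_64` module path that appears in the error log in section 6. The function name `is_lustre_kernel` is made up for illustration.

```shell
#!/bin/sh
# Succeed if the given kernel release string looks like a Lustre-patched
# server kernel. ASSUMPTION: such kernels contain "_lustre" in the release.
is_lustre_kernel() {
    case "$1" in
        *_lustre*) return 0 ;;
        *)         return 1 ;;
    esac
}

# Usage: report on the kernel this host is currently running.
if is_lustre_kernel "$(uname -r)"; then
    echo "patched Lustre server kernel"
else
    echo "stock kernel (sufficient for a client)"
fi
```

On a client host the second message is the expected one.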

1. Install kmod

yum --nogpgcheck --enablerepo=lustre-client install \
    kmod-lustre-client \
    lustre-client

2. Load the Lustre parameters

echo 'options lnet networks=tcp0(ens1f1)' > /etc/modprobe.d/lustre.conf

depmod -a

modprobe lustre

4. Create the Lustre File System

Configuration Description:

--fsname: specifies the name of the Lustre file system to create, such as sgfs. Clients later use this name to mount it, for example: mount -t lustre 192.168.100.1@tcp0:192.168.100.2@tcp0:/sgfs /home

--mgs: designates the MGS partition

--mdt: designates the MDT partition

--ost: designates the OST partition

--servicenode=ServiceNodeIP@tcp0: specifies a node that can take over the service when the current node fails. On an InfiniBand network, replace tcp0 with o2ib

--index: specifies the target index; targets of the same type in one file system must not share an index
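To make the options above concrete, here is a minimal sketch that assembles the format command for one OST and prints it instead of running it. The helper names `make_nid` and `ost_format_cmd`, the `LNET_NET` variable, and the print-only approach are illustrative assumptions and not part of Lustre. Only mkfs.lustre options that appear in this article are used, and `--reformat` is deliberately left out of the printed command.

```shell
#!/bin/sh
# LNet network type: tcp0 for Ethernet, o2ib for InfiniBand.
LNET_NET=${LNET_NET:-tcp0}

# Build a NID of the form <ip>@<network>, e.g. 10.10.201.61@tcp0.
make_nid() {
    printf '%s@%s' "$1" "$LNET_NET"
}

# Print (do not run) the mkfs.lustre command for one OST.
# Arguments: fsname, index, MGS IP, device, then one or more service node IPs.
# Note: plain sh has no local variables, so these names are global.
ost_format_cmd() {
    fsname=$1; index=$2; mgs_ip=$3; device=$4
    shift 4
    cmd="mkfs.lustre --fsname=$fsname --mgsnode=$(make_nid "$mgs_ip")"
    for ip in "$@"; do
        cmd="$cmd --servicenode=$(make_nid "$ip")"
    done
    printf '%s --ost --index=%s %s\n' "$cmd" "$index" "$device"
}

# Example: the first OST of the sgfs file system.
ost_format_cmd sgfs 1 10.10.201.61 /dev/sdb 10.10.201.62 10.10.201.63
```

Printing the command first makes it easy to confirm that every target uses the same file system name and a distinct index before anything is formatted.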

Create the MGS and MDT (Note: run on the MGS server host node1)

mkdir -p /data/mdt

mkfs.lustre --fsname=sgfs --mgs --mdt --index=0 --servicenode=10.10.201.61@tcp0 --reformat /dev/sdb

mount -t lustre /dev/sdb /data/mdt/

Create OST1 (Note: run on the OSS server host node2)

mkdir -p /data/ost1

mkfs.lustre --fsname=sgfs --mgsnode=10.10.201.61@tcp0 --servicenode=10.10.201.62@tcp0 --servicenode=10.10.201.63@tcp0 --ost --reformat --index=1 /dev/sdb

mount -t lustre /dev/sdb /data/ost1/

Create OST2 (Note: run on the OSS server host node3)

mkdir /data/ost2 -p

mkfs.lustre --fsname=sgfs --mgsnode=10.10.201.61@tcp0 --servicenode=10.10.201.63@tcp0 --servicenode=10.10.201.62@tcp0 --ost --reformat --index=2 /dev/sdb

mount -t lustre /dev/sdb /data/ost2/

5. Client Mount Access

On the client, create a mount point directory and mount the file system. (Note: run on the client host node4)

mkdir -p /lustre/sgfs/

mount.lustre 10.10.201.61@tcp0:/sgfs /lustre/sgfs/

If the mount fails, use the lctl command to check that the network connection works, and review the system logs.

lctl ping 10.10.201.61@tcp0

Check whether the mount succeeded:

df -ht lustre

6. Handling Common Problems

Error 1:

[root@node1 ~]# modprobe -v lustre

insmod /lib/modules/3.10.0-957.10.1.el7_lustre.x86_64/extra/lustre/net/libcfs.ko

insmod /lib/modules/3.10.0-957.10.1.el7_lustre.x86_64/extra/lustre/net/lnet.ko

insmod /lib/modules/3.10.0-957.10.1.el7_lustre.x86_64/extra/lustre/fs/obdclass.ko

insmod /lib/modules/3.10.0-957.10.1.el7_lustre.x86_64/extra/lustre/fs/ptlrpc.ko

modprobe: ERROR: could not insert 'lustre': Cannot allocate memory

Cause: The server has two CPUs, and one of them has no memory installed (NUMA node 0 reports 0 MB), as shown below:

[root@node2 ~]# numactl -H

available: 2 nodes (0-1)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 20 21 22 23 24 25 26 27 28 29

node 0 size: 0 MB

node 0 free: 0 MB

node 1 cpus: 10 11 12 13 14 15 16 17 18 19 30 31 32 33 34 35 36 37 38 39

node 1 size: 32654 MB

node 1 free: 30680 MB

node distances:

node 0 1

0: 10 20

1: 20 10

State after reseating and redistributing the memory:

[root@node1 ~]# numactl -H

available: 2 nodes (0-1)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 20 21 22 23 24 25 26 27 28 29

node 0 size: 16270 MB

node 0 free: 15480 MB

node 1 cpus: 10 11 12 13 14 15 16 17 18 19 30 31 32 33 34 35 36 37 38 39

node 1 size: 16384 MB

node 1 free: 15504 MB

node distances:

node 0 1

0: 10 21

1: 21 10

Reference solution:

https://jira.whamcloud.com/browse/LU-11163
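The condition behind this error, a NUMA node that reports no memory, can be detected before loading the module. The following is a minimal sketch that scans `numactl -H` output for nodes whose size is 0 MB. The function name `empty_numa_nodes` is illustrative, and the parsing assumes the output format shown above.

```shell
#!/bin/sh
# Read `numactl -H` output on stdin and print the id of every NUMA node
# whose "size" line reports 0 MB.
empty_numa_nodes() {
    awk '$1 == "node" && $3 == "size:" && $4 == 0 { print $2 }'
}

# Usage on a live host (requires the numactl package):
#   numactl -H | empty_numa_nodes
# Example with the two size lines from the failing host shown above:
printf 'node 0 size: 0 MB\nnode 1 size: 32654 MB\n' | empty_numa_nodes
```

For the failing host the example prints 0, the id of the node without memory. For the corrected layout it prints nothing.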
