Reprinted from: https://blog.csdn.net/qq_30868235/article/details/80370060

1. Data Set

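The dataset is the handwritten digit recognition dataset that ships with sklearn, loaded through datasets.load_digits(). It is often loosely called mnist, though it is sklearn's smaller digits dataset rather than the full MNIST. It has 1797 samples in total, each an 8 * 8 image (64 features), and the labels are the ten digits 0 to 9.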

### load data

from sklearn import datasets  # module that provides the built-in datasets

digits = datasets.load_digits()  # load the handwritten digits dataset

print(digits.data.shape)  # shape of the input features (samples, features)

print(digits.target.shape)  # shape of the labels (samples,)

"""

(1797, 64)

(1797,)

"""

2. Data Set Partitioning

The train_test_split function in sklearn.model_selection splits the dataset. Its test_size parameter is the fraction of the samples assigned to the test set, and random_state is the random seed (set it so that the experimental results can be reproduced).
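As a rough sketch of the split described above, the snippet below calls train_test_split on the digits data. The values test_size=0.2 and random_state=33 are illustrative assumptions, not taken from the original post; the names x_train, x_test, y_train and y_test are chosen to match the model code in the next section.

```python
from sklearn import datasets
from sklearn.model_selection import train_test_split

digits = datasets.load_digits()

# test_size=0.2 and random_state=33 are assumed values for illustration only.
x_train, x_test, y_train, y_test = train_test_split(
    digits.data,
    digits.target,
    test_size=0.2,    # fraction of the samples held out for testing
    random_state=33,  # fixed seed so the same split is produced on every run
)

print(x_train.shape, x_test.shape)
```

Because the seed is fixed, rerunning the script yields the same training and test sets, which is what makes later accuracy numbers comparable between runs.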

3. The model (load the model, train it, make predictions)

The XGBClassifier.fit() function trains the model, and the XGBClassifier.predict() function uses the trained model to make predictions.

### model

from xgboost import XGBClassifier

model = XGBClassifier()  # create the model (named model) with default parameters

model.fit(x_train, y_train)  # train the model on the training set

y_pred = model.predict(x_test)  # predict on the test set; y_pred holds the predicted labels

4. Performance Evaluation

The accuracy_score function in sklearn.metrics measures the accuracy of the model's predictions, that is, the fraction of samples whose predicted label matches the true label.

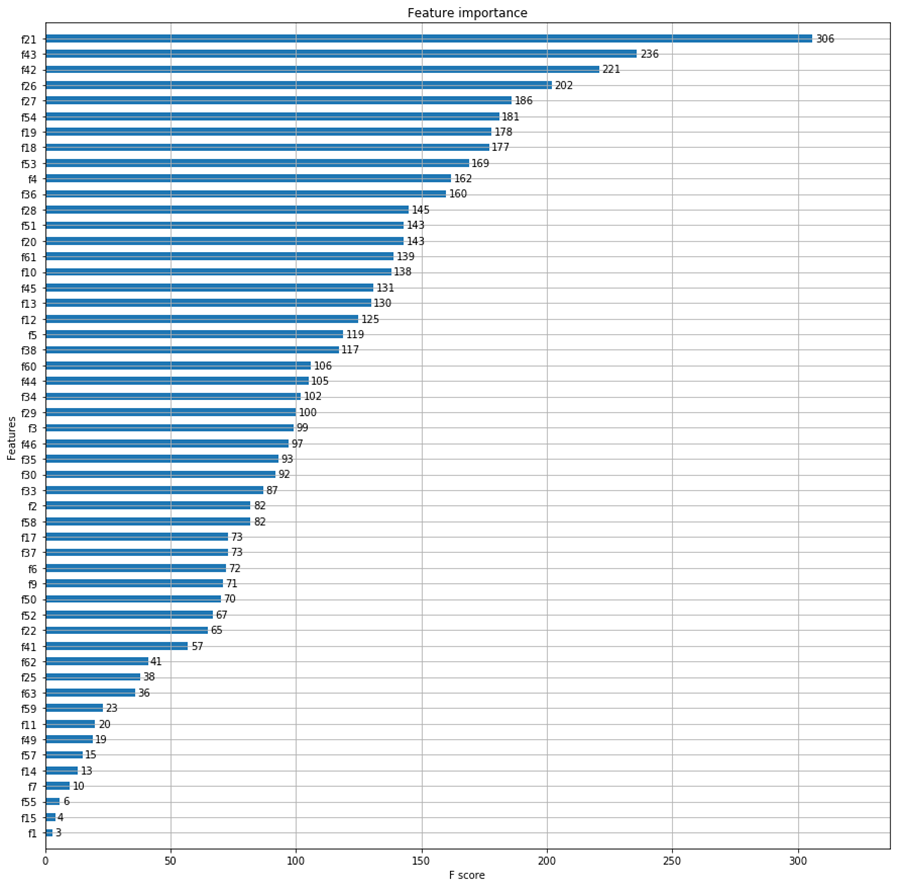
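To make the metric concrete, here is a minimal sketch of accuracy_score on a tiny set of made-up labels. The lists y_true and y_hat are hypothetical and exist only for this illustration; they are not results from the model above.

```python
from sklearn.metrics import accuracy_score

# Hypothetical labels, made up for illustration only.
y_true = [0, 1, 2, 3, 4]
y_hat = [0, 1, 2, 3, 9]  # the last prediction is wrong

# Fraction of positions where the predicted label equals the true label.
acc = accuracy_score(y_true, y_hat)
print("accuracy: %.2f" % acc)  # 4 of 5 correct -> accuracy: 0.80
```

In this post's pipeline the corresponding call would be accuracy_score(y_test, y_pred), with the true labels passed first and the predictions second.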

5. Feature importance

xgboost can analyze how important each feature is to the trained model; the plot_importance function draws the result as a chart.

6. Complete code