Contents:

1. Introduction to Xen

1.1 Xen general structure

1.2 Xen terminology for VMs

1.3 How Xen virtualizes CPU and memory

1.4 How Xen virtualizes IO devices

1.5 Linux kernel support for Xen

1.6 Brief history of Xen releases

1.7 Xen tool stack

1.8 XenStore

1.9 The four network models in virtualization

1.10 Introduction to Xen security issues

2. Xen installation and configuration file description

2.1.1 Requirements for running Xen on CentOS 6.6

2.1.2 Xen configuration

2.2.1 Configuration file for starting a DomU in Xen

2.2.1.1 How to create a Xen PV-mode VM [Note: for creating an HVM-mode VM, see the test section]

3. Using libvirt for graphical management of Xen virtual machines

4. The root filesystem of a PV DomU can be placed in many different ways

Note:

Xen is recommended only for learning its principles; spending time on the details of its day-to-day use is not recommended.

If you want the details of specific usage, see: https://pan.baidu.com/s/1A77aJdoBK7CPIF7FVngudQ

Viewing that file requires the CherryTree document editor.

1. Introduction to Xen

Xen is open-source virtualization software that runs directly on top of the hardware layer. It performs well even on the x86 architecture, which is extremely unfriendly to traditional virtualization techniques. It was developed as open-source software at the University of Cambridge, with the original goal of running hundreds of virtual machines on a single physical machine.

Xen's design is very compact. It is a Type-I hypervisor, since Xen is really a slimmed-down hypervisor layer; compared with Type-II, host-based virtualization (e.g. VMware Workstation), its performance is relatively good. Xen takes over only the CPU and memory, while drivers for all other IO hardware are provided by the first virtual machine running on top of it. The reason is that the Xen developers cannot write drivers for that many IO devices, and hardware vendors will not provide drivers specifically for Xen, so Xen adopts this special design.

By default Xen regards itself as virtualization software running directly on the hardware layer, able to drive the CPU and memory directly. Note that the CPU and memory are things every operating system must support directly in order to run. To keep itself small, however, Xen provides no virtual machine management interface. Instead it takes a unique approach: it runs one privileged virtual machine, and that VM must use a kernel modified to support this role. Open-source Linux is the most suitable choice for the privileged VM, and it also makes it convenient to build the VM management interface with facilities Linux already supports. That interface interacts directly with the Xen hypervisor layer to allocate CPU and memory resources and to create, delete, stop, and start VMs. The privileged VM usually runs a popular Linux distribution because it supports more IO hardware, such as network cards, disks, graphics cards, and sound cards. So far, NetBSD, GNU/Linux, FreeBSD, Plan 9, OpenSolaris, and other systems can run paravirtualized in a Xen DomU. Xen currently supports the x86, x86_64, and ARM platforms and is being ported to IA64 and PPC. Ports to other platforms are technically feasible and may appear in the future.

Xen virtual machines support live migration between physical hosts without being stopped. During migration, the VM's memory is copied to the target machine iteratively while the VM keeps running. Just before the VM starts running at its final destination there is a very brief pause of 60-300 ms to perform the final synchronization, which gives the impression of a seamless migration. A similar technique is used to suspend a running virtual machine to disk and switch to another one; the first virtual machine can be restored later.
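
The iterative copy described above can be illustrated with a small simulation. This is only a sketch under simplifying assumptions, not Xen's actual migration code: the function name, the fixed `dirty_fraction`, and the `stop_threshold` are all hypothetical parameters chosen for illustration.

```python
def simulate_precopy(total_pages, dirty_fraction, stop_threshold, max_rounds=30):
    """Toy model of iterative (pre-copy) live migration.

    Each round sends every pending page while the guest keeps running.
    We assume the guest re-dirties a fixed fraction of the pages sent.
    Returns the pages sent per live round and the pages left for the
    final stop-and-copy phase, during which the guest is briefly paused.
    """
    pending = total_pages              # the first round copies all memory
    live_rounds = []
    for _ in range(max_rounds):
        if pending <= stop_threshold:
            break                      # small enough to copy during the pause
        live_rounds.append(pending)
        pending = int(pending * dirty_fraction)
    return live_rounds, pending


rounds, final_copy = simulate_precopy(1000, 0.1, 20)
print(rounds, final_copy)
```

The smaller the final copy, the shorter the pause; a guest that dirties memory faster than it can be sent never converges, which is why the sketch caps the number of rounds.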

1.1 Xen general structure:

Xen consists of three parts:

(1) The Xen hypervisor layer, directly above the hardware layer: it drives the underlying CPU and memory hardware directly, manages CPU, memory, and interrupts for all other virtual machines, and also provides the hypercall interface.

(2) The first virtual machine: in Xen this is called the privileged virtual machine. It has access to the entire virtualized environment and is responsible for creating user-level virtual machines and assigning I/O device resources to them. Its kernel is specially modified: it can access IO hardware directly, and other user VMs can in turn access it.

(3) The many other virtual machines: these are user-level virtual machines, the VMs actually delivered to users, and they are isolated from one another.

Note: Xen supports three kinds of virtualization; the distinction refers mainly to whether the CPU is paravirtualized or fully virtualized.

<1> Paravirtualization (PV): in this mode the CPU does not need HVM features, but the GuestOS kernel must be modifiable; otherwise the GuestOS cannot run in a DomU. This is because the GuestOS must know that it is running in a virtualized environment, so that when it needs to issue privileged instructions to operate hardware, it sends a hypercall directly to the Xen hypervisor, which carries out the hardware operation.

Operating systems that can run in a DomU under PV include:

Linux, NetBSD, FreeBSD, OpenSolaris

<2> HVM (hardware-assisted full virtualization): this requires the hardware virtualization support of Intel VT-x or AMD-V, together with Qemu to emulate IO hardware, so that a GuestOS can run in a DomU without any kernel modification. This works because the Xen hypervisor runs in CPU ring -1 while the GuestOS kernel runs in ring 0. Every privileged instruction the GuestOS believes it is issuing is trapped by the CPU in ring 0 and handed to the Xen hypervisor in ring -1, which finally executes it on the guest's behalf. So if a DomU issues a shutdown command, Xen powers off only that GuestOS, and other GuestOSes are not affected.

Operating systems that can run in a DomU under HVM include: every OS that supports the x86 architecture.

<3> PV on HVM: I/O devices run paravirtualized while the CPU runs in HVM mode. This approach addresses the poor performance HVM suffers when IO devices must also be fully emulated. The CPU is fully virtualized, while I/O devices are paravirtualized by installing the corresponding PV IO drivers in the GuestOS, which improves efficiency.

Operating systems that can run in a DomU under PV on HVM include:

any OS that can drive PV-interface IO devices.

1.2 Xen terminology for VMs:

Xen calls a VM a Domain.

The first, privileged VM is generally called Domain0 in Xen, abbreviated Dom0; alias: Privileged Domain.

Subsequent user-level VMs are called Domain1, Domain2, ... in Xen; collectively they are called DomU, alias: Unprivileged Domain.

1.3 How Xen virtualizes CPU and memory:

When Xen provides a virtual CPU to a VM, it likewise starts a thread in the Xen hypervisor layer and maps that thread onto a physical core; the cpus option in the DomU configuration file can pin these emulated CPU threads to specific physical cores. Memory is virtualized by page mapping: multiple contiguous or non-contiguous physical memory pages are mapped to the VM, so that the VM sees a complete, contiguous memory space.

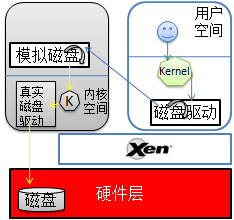
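
The page mapping idea from this section can be sketched in a few lines. This is a toy model, not Xen's data structure: the 4 KiB page size, the `page_table` list, and the `translate` helper are assumptions made for illustration.

```python
PAGE_SIZE = 4096  # assumed page size in bytes

def translate(page_table, guest_addr):
    """Map an address in the guest's contiguous view to a machine address.

    page_table[i] is the machine frame that backs guest page i; the
    frames do not have to be contiguous or ordered.
    """
    page, offset = divmod(guest_addr, PAGE_SIZE)
    return page_table[page] * PAGE_SIZE + offset


# Guest pages 0, 1, 2 are backed by scattered machine frames 7, 2, 9.
page_table = [7, 2, 9]
print(translate(page_table, 0))
print(translate(page_table, PAGE_SIZE + 16))
```

The guest addresses run from 0 to 3 * PAGE_SIZE - 1 without gaps, even though the backing frames are scattered across machine memory.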

1.4 How Xen virtualizes IO devices:

When a user starts a VM (DomU), the CPU and memory it needs are provided by the Xen hypervisor. If it needs an IO device, it sends a request to the privileged VM (Dom0), which creates a thread for the emulated hardware device and runs it in the privileged VM's user space. When the user VM issues a call to that IO hardware, the corresponding emulated device in the privileged VM receives the request and converts it into an operation on the privileged VM's IO hardware, which the privileged VM's kernel then carries out. Note that this virtual IO hardware has to be emulated by Qemu; Xen itself provides no such emulation. (Note: the privileged VM's CPU and memory are also provided by the Xen hypervisor.)

Qemu-emulated IO devices (fully virtualized mode): if a user VM requests a disk from the privileged VM, the privileged VM can take a partition, a file, or similar and have Qemu present it as a disk device. Taking a file as the example: the privileged VM creates an image file, Qemu emulates a disk controller chip for that file, and the result is mapped into the user VM. The emulated disk controller must of course be one of the most common ones, which the user VM's kernel is certain to support. Note that the emulated disk may differ from the actual physical disk, because it has to be as compatible as possible. A write from a user VM to disk therefore proceeds as follows:

User VM app ---> the user VM kernel calls the virtual disk's driver to prepare the write (e.g. which sector of the disk to write to, encoding the data, etc.) --->

the user VM kernel passes the encoded information to the disk emulation process in the privileged VM --->

the disk emulation process in the privileged VM decodes the information and passes it to the privileged VM kernel --->

the privileged VM kernel calls the real physical disk driver to prepare the data for writing ---> finally the disk driver is scheduled and the write to disk completes.

Excerpt (supplement): ( http://my.oschina.net/davehe/blog/94039?fromerr=mOuCyx6W )

Xen provides an abstraction layer to each Domain, which includes the management and virtual hardware APIs. Domain 0 contains the real (native) device drivers and accesses the physical hardware directly. It can interact with the management API that Xen provides, and it uses user-mode management tools (e.g. xm/xend, xl) to manage the Xen virtual machine environment.

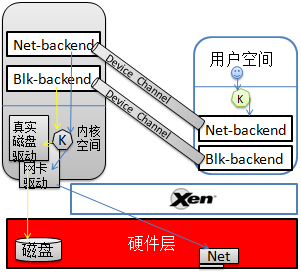

Paravirtualized IO devices: the biggest difference from emulation is that the DomU knows it is running in a virtualized environment and knows that its disk is not a real disk but only a disk frontend driver (Disk Frontend) presented by Xen. When it writes data, it hands the data directly to the Disk Frontend without calling a disk driver to encode it. When the Disk Backend in the privileged VM receives the data from the DomU, it passes it directly to the privileged VM kernel, which calls the physical disk driver to process the raw data and write it to disk.

Excerpt (supplement):

Xen 2.0 and later introduced the split device driver model. This model places a front end for each device in the user domain and a back end in the privileged domain (Dom0). The operating system in a user domain sends requests to the front end just as it would to an ordinary device, and the front end forwards these requests, together with the identity of the user domain, to the back end in the privileged domain through IO request descriptor rings (IO descriptor ring) and device channels (device channel). This design transfers control information and data separately.

The PV block driver in a paravirtualized domain (Domain U PV) receives a request to write data to the local disk and, through the Xen hypervisor, writes the data into local memory that it shares with Domain 0. An event channel exists between Domain 0 and the paravirtualized Domain U (my understanding: the device channel includes the event channel); this channel lets them communicate through asynchronous interrupts delivered via the Xen hypervisor. Domain 0 receives a system interrupt from the Xen hypervisor, which triggers the block backend driver in Domain 0 to access the shared memory, read the data blocks written by the paravirtualized guest, and then write them to the specified location on the local disk.

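Whether emulation or paravirtualization is used, the operation ultimately lands on the physical disk. Suppose there is only one physical disk and many user VMs are issuing large numbers of read and write requests. To keep user VMs from sending unlimited requests to the privileged VM, the privileged VM uses a ring buffer: each arriving IO request is first placed in a slot of the ring buffer, and if the buffer is full, the user VM is told that the IO device is busy. Most other kinds of IO devices are controlled with roughly the same mechanism.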
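
The ring buffer behaviour described above can be sketched as a fixed-size circular queue. This is a minimal illustration, not Xen's shared-memory ring implementation; the class name `IORing` and its methods are hypothetical.

```python
class IORing:
    """Fixed-size ring of IO request slots (toy model)."""

    def __init__(self, slots):
        self._slots = [None] * slots
        self._head = 0    # index of the oldest pending request
        self._count = 0   # number of occupied slots

    def submit(self, request):
        """Queue a request; return False ("device busy") when the ring is full."""
        if self._count == len(self._slots):
            return False
        tail = (self._head + self._count) % len(self._slots)
        self._slots[tail] = request
        self._count += 1
        return True

    def complete(self):
        """Take the oldest pending request, or None when the ring is empty."""
        if self._count == 0:
            return None
        request = self._slots[self._head]
        self._slots[self._head] = None
        self._head = (self._head + 1) % len(self._slots)
        self._count -= 1
        return request


ring = IORing(2)
print(ring.submit("write sector 10"), ring.submit("read sector 3"))
print(ring.submit("write sector 11"))  # ring is full, so the guest is told to retry
```

Because the ring has a fixed number of slots, a busy guest is throttled at the point of submission instead of flooding the privileged VM.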

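1.5 Linux kernel support for Xen:

》Linux 2.6.37: the kernel began to support Xen, and the support was merged into the kernel.

》Linux 3.0: the kernel began optimizing the key parts of its Xen support.

Overview of Xen support in the Red Hat family (RHEL):

RHEL 5.7 and earlier: the default enterprise virtualization technology is Xen.

Red Hat provided two kernels, however:

kernel-... : a kernel for running the RHEL system only; it cannot run in a DomU.

kernel-xen.. : the kernel version to use when deploying XenServer.

RHEL 6 and later: KVM (a virtualization tool acquired from an Israeli company) is supported by default, and Xen is no longer supported in any way, although RHEL itself can still run in a DomU.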

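1.6 Brief history of Xen releases:

April 2010: Xen 4.0.0 was released. With its improvements a DomU can have up to 64 virtual CPUs, a Xen host can support 1 TB of memory and 128 physical CPUs, and disks support snapshots and cloning; HVM guests support virtual memory page sharing.

After Xen 4.1 was released in April 2011, xm/xend began to be flagged as deprecated, and xl, a more lightweight Xen VM management tool, gradually became mainstream.

As of 2015, Xen 4.5 had been released. At the time of writing, the latest Xen available from a yum repository is 4.6.1 (http://mirrors.skyshe.cn/centos/6.7/virt/x86_64/xen-46/).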

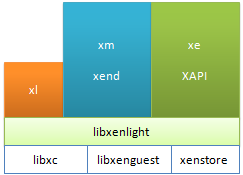

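1.7 Xen tool stack:

xend : a service that runs in Dom0 on the Xen hypervisor; it listens for instructions sent by the xm command and carries out the corresponding actions.

xm : Xen Management, a tool for managing VMs: creating, deleting, starting, snapshotting, stopping, and so on.

xl : a lightweight VM management tool based on the libxenlight library. It first appeared in Xen 4.1 and has been the main VM management tool since 4.3, while the heavyweight xm tool began to be flagged as deprecated. The figure below compares xm and xl:

xm depends on the xend service, whereas xl does not need any service to be running: it calls libxenlight directly to carry out its operations.

xe/XAPI : an API-based management interface, typically used in Xen cloud environments: XenServer, XCP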

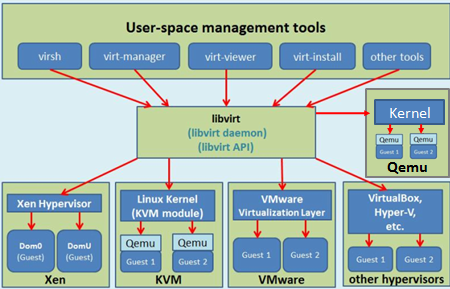

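virsh / libvirt : a set of tools, whose development was initiated by Red Hat, for managing many different kinds of VMs.

virsh : a command-line tool.

libvirt : a library. The libvirtd daemon listens for virsh commands and calls libvirt to carry out the corresponding operations.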

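1.8 XenStore:

XenStore provides storage space for information shared between Domains; it is also a hierarchical namespace database. It runs in Dom0, is managed directly by Dom0, and supports transactions and atomic operations. XenStore is commonly used as the mechanism for controlling devices in DomUs, and it can be accessed in several ways.

Excerpt (supplement) (http://blog.chinaunix.net/uid-26299858-id-3134495.html):

XenStore is an inter-domain shared storage system provided by Xen. It stores, as strings, the configuration information of the management tools and of the frontend and backend drivers. Dom0 manages all of the data, while a DomU uses shared memory to ask Dom0 for the keys related to itself, which is how inter-domain communication is achieved. Xen provides several interfaces for operating on XenStore: the xenstore-* command-line tools, the xs_ family of user-space functions, and the kernel's XenBus interface can all be used to manipulate XenStore data conveniently.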
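
To make the idea of a hierarchical, string-valued store with transactions concrete, here is a toy in-memory model. It is not the XenStore API: the `ToyStore` class and its methods are hypothetical, and the all-or-nothing `transaction` is a simplification of real XenStore transactions.

```python
class ToyStore:
    """In-memory stand-in for a hierarchical key/value store."""

    def __init__(self):
        self._data = {}

    def write(self, path, value):
        self._data[path] = str(value)   # values are kept as strings

    def read(self, path):
        return self._data.get(path)

    def ls(self, path):
        """List the immediate children of a directory-like path."""
        prefix = path.rstrip("/") + "/"
        children = {key[len(prefix):].split("/")[0]
                    for key in self._data if key.startswith(prefix)}
        return sorted(children)

    def transaction(self, writes):
        """Apply every (path, value) pair, or none of them if any is invalid."""
        staged = dict(self._data)
        for path, value in writes:
            if not path.startswith("/"):
                raise ValueError(f"path must be absolute: {path!r}")
            staged[path] = str(value)
        self._data = staged             # commit only after all writes succeeded


store = ToyStore()
store.write("/local/domain/1/name", "web")
store.write("/local/domain/1/memory/target", 524288)
print(store.ls("/local/domain/1"))
```

On a real Dom0 the same kind of tree can be inspected with the xenstore-* commands mentioned above.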

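1.9 The four network models in virtualization

Virtual networking is very important in a virtualized environment but also fairly difficult, and it deserves special attention.

Two tools are commonly used to implement virtual networks on Linux: bridge-utils and openvswitch. The virtual network devices they create are not interchangeable; for example, openvswitch cannot recognize a bridge device created by bridge-utils.

The following figure gives a brief illustration.

1.10 Introduction to Xen security issues

Supplement: see the attachment.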

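2. Xen installation and configuration file description

2.1.1 Requirements for running Xen on CentOS 6.6:

Method 1:

(1) Compile a kernel of version 3.0 or later with Dom0 support enabled.

(2) Compile Xen.

Method 2:

Use a project that quickly deploys a Xen runtime environment:

(1) Xen4CentOS : an open-source project provided officially by Xen.

RPM mirror for deploying the Xen environment: http://mirrors.aliyun.com/centos/6.7/xen4/x86_64/

(2) Xen Made Easy

2.1.2 Xen configuration:

(1) Modify grub.conf

# Xen runs directly on the hardware layer, so grub.conf must be modified by manually adding the following entry: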

title Xen Server Linux 3.7.4

root (hd0,0)

kernel /xen.gz dom0_mem=1024M cpufreq=xen dom0_max_vcpus=2 dom0_vcpus_pin

module /vmlinuz-3.7.4-1.el6xen.x86_64 ro root=/dev/mapper/vg0-root rd_NO_LUKS LANG=en_US.UTF-8 rd_LVM_LV=vg0/swap rd_NO_MD SYSFONT=latarcyrheb-sun16 crashkernel=auto rd_NO_DM KEYBOARDTYPE=pc KEYTABLE=us rd_LVM_LV=vg0/root rhgb quiet

module /initramfs-3.7.4-1.el6xen.x86_64.img

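Note: the kernel line must specify xen*.gz as the kernel to boot. dom0_mem: the amount of memory Dom0 can use after boot.

cpufreq: has Xen manage CPU frequency scaling. dom0_max_vcpus: how many CPUs Dom0 can use.

dom0_vcpus_pin: pins Dom0 to the CPUs assigned to it at boot so that it does not compete for other physical CPU cores, which can then be assigned to DomUs.

For the full list of parameters see: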

http://xenbits.xen.org/docs/unstable/misc/xen-command-line.html

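2.2.1 Configuration file for starting a DomU in Xen

xl list : # the first command you need to know.

Common states of a Xen VM:

r : running

b : blocked

p : paused, similar to sleeping.

s : shutdown (stopped)

c : crashed

d : dying, in the process of shutting down.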
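
The state letters above appear in the State column of `xl list` as a short flag string such as `r-----` or `-b----`. The helper below is a small sketch for decoding such a string; the function name and the human-readable labels are assumptions, not part of xl.

```python
# Flag letters in the order used in the list above.
STATE_FLAGS = [
    ("r", "running"),
    ("b", "blocked"),
    ("p", "paused"),
    ("s", "shutdown"),
    ("c", "crashed"),
    ("d", "dying"),
]

def decode_state(column):
    """Return the names of all state flags set in an `xl list` State column."""
    return [name for flag, name in STATE_FLAGS if flag in column]


print(decode_state("-b----"))
print(decode_state("r-----"))
```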

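2.2.1.1 How to create a Xen PV-mode VM:

1. Kernel and initrd or initramfs: these Linux kernel files are required, but they need not be inside the DomU; they can live in Dom0.

2. DomU kernel modules (i.e. /lib/modules/`uname -r`): these do need to be stored in the DomU root filesystem.

3. Root filesystem

4. Swap device: can also be provided if resources allow.

The four items above must be defined in the DomU configuration file.

Note: the configuration files that xl and xm use to start a DomU differ in some respects.

For a description of the configuration file that xl needs to create a VM, see:

man xl.cfg

# Note: man xl.conf # this documents the configuration file used by the xl command itself.

Description of xl.cfg parameters: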

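name : unique name of the domain.

builder : the VM type. generic: create a PV VM; hvm: create an HVM VM

vcpus : number of CPUs.

maxvcpus : maximum number of CPUs that can be used; the extra CPUs can be brought online manually when needed.

cpus : list of physical cores on which the VM's vCPU threads may run.

# e.g. cpus="0-3,5,^1" means the vCPUs can run on 0, 2, 3, 5 but not on 1.

# Pinning vCPUs to fixed physical cores is recommended, since it reduces vCPU thread switching across physical cores.

memory : memory pre-allocated to the DomU at startup [unit: MB]

maxmem : maximum memory that can be allocated to the DomU [unit: MB]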

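on_poweroff : action actually taken when the DomU powers off.

destroy : destroy the DomU (hard power-off).

restart : restart

rename-restart : rename, then restart

preserve : keep the DomU so that it can be started again later.

coredump-destroy : dump the DomU's in-memory kernel data first, then shut it down.

coredump-restart : dump the kernel data first, then restart.

on_reboot : action actually taken when the DomU reboots.

on_crash : action actually taken when the DomU crashes.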

uuid : UUID of the DomU; optional.

disk : list of the DomU's disks

e.g. disk=[ "/img/disk/DomU1.img" , "/dev/sda3" , ...]

vif : list of the DomU's network interfaces

e.g. vif=[ "NET_SPEC_STRING" , "NET_SPEC_STRING" , ...]

vfb : the DomU's display; vfb: virtual frame buffer

e.g. vfb=[ "VFB_SPEC_STRING" , "VFB_SPEC_STRING" ,...]

pci : list of the DomU's PCI devices.

e.g. pci=[ "PCI_SPEC_STRING" , "PCI_SPEC_STRING" ,...]
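
The cpus syntax shown in the parameter list (ranges, single cores, and `^` exclusions) can be expanded with a short helper. This sketch covers only that subset of the syntax; the function name is hypothetical and other forms accepted by xl are not handled.

```python
def parse_cpus(spec):
    """Expand a cpus string such as "0-3,5,^1" into a sorted list of cores."""
    allowed, excluded = set(), set()
    for part in spec.split(","):
        part = part.strip()
        target = allowed
        if part.startswith("^"):          # "^N" removes a core from the set
            target, part = excluded, part[1:]
        if "-" in part:                   # "A-B" is an inclusive range
            low, high = part.split("-")
            target.update(range(int(low), int(high) + 1))
        else:
            target.add(int(part))
    return sorted(allowed - excluded)


print(parse_cpus("0-3,5,^1"))  # -> [0, 2, 3, 5]
```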

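Directives specific to the PV model:

kernel : path to the kernel file; this is a path in Dom0.

ramdisk : path to the initrd or initramfs file.

root : the root filesystem. This parameter is used only together with kernel and ramdisk: because the kernel and ramdisk live in Dom0, there is no grub.conf to tell the kernel where the root filesystem is, so it must be specified here.

extra : similar to root; it specifies additional parameters passed to the kernel at boot.

# The four parameters above are for the case where the kernel file is in Dom0; the fixed form below is for a DomU that has its own kernel file.

bootloader="pygrub" : if the DomU uses its own kernel and ramdisk, an application in Dom0 is needed to act as its bootloader. root does not need to be specified in this case, because all the files the DomU needs to boot are in its own image file, and the location of the root filesystem can be taken from its grub.conf.

Note:

# To have the DomU install its operating system directly over the network, note the following:

# the files for kernel and ramdisk must be /isolinux/{vmlinuz,initrd.img} from the installation disc directory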

kernel="/images/kernel/vmlinuz"

ramdisk="/images/kernel/initrd.img"

extra="ks=http://1.1.1.1/centos6.6_x86_64.ks" # Note: the kickstart (ks) file passed to the kernel.
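
As a sketch of how the PV directives fit together, the helper below renders a minimal xl configuration as text. The function name and every value in the usage example (domain name, paths, sizes) are hypothetical; only the option names come from the parameter descriptions in this section.

```python
def render_pv_config(name, memory, vcpus, kernel, ramdisk, root, disks, extra=""):
    """Render a minimal PV DomU configuration whose kernel lives in Dom0."""
    lines = [
        f'name = "{name}"',
        f"memory = {memory}",
        f"vcpus = {vcpus}",
        f'kernel = "{kernel}"',      # path in Dom0
        f'ramdisk = "{ramdisk}"',    # path in Dom0
        f'root = "{root}"',          # tells the kernel where its root filesystem is
    ]
    if extra:
        lines.append(f'extra = "{extra}"')
    disk_items = ", ".join(f"'{spec}'" for spec in disks)
    lines.append(f"disk = [ {disk_items} ]")
    return "\n".join(lines) + "\n"


print(render_pv_config(
    name="busybox",
    memory=256,
    vcpus=1,
    kernel="/boot/vmlinuz",          # hypothetical path
    ramdisk="/boot/initramfs.img",   # hypothetical path
    root="/dev/xvda ro",
    disks=["/dev/vg-data/lv-bbox,raw,xvda,rw"],
))
```

The output could be saved to a file and passed to `xl create`; a DomU that boots its own kernel would replace the kernel, ramdisk, and root lines with bootloader="pygrub".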

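Additional note:

The cloud-init*.rpm package can remove instance-specific information (such as MAC addresses and disk information) from an installed OS and regenerate it automatically at the next boot. It is a tool for building cloud template OS images.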

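How to specify disk parameters:

Disk specification format when creating a VM with xl: http://xenbits.xen.org/docs/unstable/misc/xl-disk-configuration.txt

[<target>, [<format>,[<vdev>,[<access>]]]]

target : path to the disk image file or device file:

e.g. /images/xen/linux.img

/dev/vg-xen/lv-linux

format : format of the disk:

e.g. raw, qcow2, etc.; for the full list run: qemu-img --help |grep 'Supported formats'

vdev : the kind of disk the DomU sees the added virtual disk as; supported: hd, sd, xvd (xen-virtual-disk)

Note: it must be written as sda, sdb, etc., that is, you must say which disk it is.

access : access permission; 'rw' for everything except CDROM, which is read-only 'r'

Example: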

disk=["/images/xen/linux.img,qcow2,xvda,rw", "/iso/CentOS6.7.iso,,hdc,devtype=cdrom"]

# Use a block device in Dom0 (a physical disk partition or, as here, a logical volume) as the DomU's storage

disk=['/dev/vg-data/lv-bbox,raw,xvda,rw']
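
The positional disk format can be unpacked with a small parser. This is a simplified sketch, not xl's real parser: the function name is hypothetical, only the four positional fields plus `key=value` tokens are handled, and the defaults (raw format, read-only for a CD-ROM) follow the description in this section.

```python
def parse_disk(spec):
    """Split "<target>,<format>,<vdev>,<access>[,key=value...]" into a dict."""
    positional = ["target", "format", "vdev", "access"]
    fields = {"target": None, "format": "raw", "vdev": None, "access": None}
    extras = {}
    for index, token in enumerate(spec.split(",")):
        token = token.strip()
        if "=" in token:                       # keyword such as devtype=cdrom
            key, value = token.split("=", 1)
            extras[key] = value
        elif token and index < len(positional):
            fields[positional[index]] = token  # empty tokens keep the default
    if fields["access"] is None:
        # CD-ROMs are read-only; everything else defaults to read-write.
        fields["access"] = "r" if extras.get("devtype") == "cdrom" else "rw"
    fields.update(extras)
    return fields


print(parse_disk("/images/xen/linux.img,qcow2,xvda,rw"))
print(parse_disk("/iso/CentOS6.7.iso,,hdc,devtype=cdrom"))
```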

Creating a virtual disk file:

# Note: qemu-img creates disk image files as sparse files, so du -sh linux.img shows a size of 0.

qemu-img create -f raw -o size=2G /images/xen/linux.img

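Creating virtual network interfaces:

# Virtual NICs are created simply by specifying vif in the configuration file.

# Format: vif=[ "NET_SPEC_STRING" , "NET_SPEC_STRING" , ...]

The format of NET_SPEC_STRING is:

key=value

# Keys include:

》mac : MAC address of the NIC. Note: the MAC address should begin with 00:16:3e, the vendor prefix (OUI) assigned to Xen by the IEEE.

》bridge : the bridge device in Dom0 that this NIC is attached to.

》model : chip type of the emulated NIC: [rtl8139 | e1000]

》vifname : interface name shown in Dom0. Note: inside the DomU it still appears as eth0...

》script : script run when the DomU's network interface is created. Note: the script path is also a path in Dom0.

》ip : IP address to inject into the DomU.

》rate : rate limit for the NIC.

e.g. rate=10Mb/s

rate=1MB/s@20ms # in each 20 ms interval at most 1 MB/s x 0.02 s = 20000 bytes can be sent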
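
Two of the vif keys lend themselves to a short worked example: generating a MAC address under the 00:16:3e prefix, and the arithmetic behind a rate such as 1MB/s@20ms. The helper names are hypothetical, and treating 1 MB as 1,000,000 bytes is an assumption that matches the 20000-byte figure above.

```python
import random

XEN_OUI = "00:16:3e"  # vendor prefix mentioned in the mac key description

def random_xen_mac(rng=random):
    """Return a random MAC address that starts with the Xen prefix."""
    tail = [rng.randint(0x00, 0xFF) for _ in range(3)]
    return XEN_OUI + "".join(f":{octet:02x}" for octet in tail)

def bytes_per_interval(rate_bytes_per_s, interval_ms):
    """Bytes that may be sent in one replenish interval at the given rate."""
    return rate_bytes_per_s * interval_ms // 1000


print(random_xen_mac())
print(bytes_per_interval(1_000_000, 20))  # -> 20000
```

A generated address could then be used in a spec string such as "mac=<address>,bridge=<bridge name>", with the bridge name depending on the Dom0 setup.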

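Enabling a graphical window:

Edit the DomU's startup configuration file directly and add one of the following:

(1) vfb=['sdl=1'] # opens a local SDL window that shows the DomU's graphical desktop; only local connections are possible.

(2) Start a VNC server in Dom0 listening on port 5900, reachable with a password.

vfb=['vnc=1,vnclisten=0.0.0.0,vncpasswd=123456,vncunused=1,vncdisplay=:1']

# Note: vncunused: a non-zero value means the VNC server listens on the first unused port above 5900.

# vncdisplay: the VNC display number, by default the ID of the current domain; the domain's VNC server listens on port 5900 + this display number.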

vfb=[ 'sdl=1,xauthority=/home/bozo/.Xauthority,display=:1' ]

# Note: xauthority: the X authority (authentication) file.

# display: the X display on which the SDL window is shown.

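3. Using libvirt for graphical management of Xen virtual machines

# Packages to install

yum install libvirt libvirt-daemon-xen virt-manager python-virtinst libvirt-client

Note: virt-manager: a graphical interface for managing VMs, similar to VMware; it can create, start, and stop VMs, and so on.

python-virtinst: provides the virt-install command-line interface for managing VMs.

libvirt-client: provides the virsh command for managing VMs.

service libvirtd start # this service must be started first in order to use the libvirt tool set.

virsh dumpxml busybox # dumps busybox's definition as an XML configuration file usable by virsh; the file can be edited and used as a template for creating new VM instances.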
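
Turning a dumped definition into a template usually means renaming the domain and dropping the values that must be unique per VM. The sketch below does this with Python's standard XML library. The sample XML, its UUID, MAC address, and bridge name are made up for illustration, and it assumes libvirt regenerates a missing UUID and MAC when the new domain is defined.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily shortened stand-in for `virsh dumpxml busybox` output.
SAMPLE_XML = """<domain type='xen'>
  <name>busybox</name>
  <uuid>4dea22b3-1d52-d8f3-2516-782e98ab3fa0</uuid>
  <memory unit='KiB'>262144</memory>
  <devices>
    <interface type='bridge'>
      <mac address='00:16:3e:12:34:56'/>
      <source bridge='xenbr0'/>
    </interface>
  </devices>
</domain>"""

def make_template(xml_text, new_name):
    """Rename the domain and strip its UUID and MAC addresses."""
    root = ET.fromstring(xml_text)
    root.find("name").text = new_name
    uuid = root.find("uuid")
    if uuid is not None:
        root.remove(uuid)
    # Copy the iterator into a list because the tree is modified in the loop.
    for iface in list(root.iter("interface")):
        mac = iface.find("mac")
        if mac is not None:
            iface.remove(mac)
    return ET.tostring(root, encoding="unicode")


print(make_template(SAMPLE_XML, "busybox2"))
```

The resulting XML could be saved to a file and loaded with `virsh define` or `virsh create`.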

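4. The root filesystem of a PV DomU can be placed in many different ways:

(1) Virtual disk image file

(a) Easy to turn into a general-purpose template

Once a virtual disk image file has been created with qemu-img and an OS installed into it, a tool such as cloud-init can be used to modify it and strip the data that makes the OS unique (such as MAC addresses). Note that a disk image file has a standard format, so once a filesystem has been created on it, the files inside can be modified through the filesystem's API without mounting the image.

(b) Easy to implement live migration

1. Template image files placed on shared storage such as FTP/NFS/Samba, with multiple Xen hypervisors:

Scenario 1: a VM needs to be created quickly.

Suppose the read load on the MySQL replicas is too high and capacity must be added. A script tool developed in-house can determine which Xen hypervisor is currently least loaded, download a template disk image from shared storage, and start it right away. Because this is a preconfigured replica template, it can connect to the primary as soon as it boots, and so on.

Scenario 2: fast migration

Suppose CPU, memory, or other resource usage on a Xen hypervisor suddenly rises. The memory data of some VMs on that hypervisor can be exported to shared storage; then, on another Xen hypervisor with spare capacity, the template image file is downloaded and associated with the memory data exported to shared storage, and the VM is restored on that hypervisor, achieving a fast migration.

2. Disk image files stored on a distributed filesystem, with multiple Xen hypervisors:

Scenario 1: fast failure recovery

Suppose several VMs are running on a Xen hypervisor and the physical machine hosting it suddenly fails. A healthy Xen hypervisor can simply take over the disk image files on the distributed storage system, achieving a fast migration.

Scenario 2: fast migration

This is similar to Scenario 2 above, but here there is no need to download the template disk image: dump the running VM's memory data to shared storage, and the VM can be started directly on another Xen hypervisor.

(2) A free physical disk partition in Dom0

(3) A free logical volume in Dom0

(4) Block-level network storage provided by an iSCSI device, or a distributed filesystem.

(5) A network filesystem such as NFS or Samba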
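
The scenarios under (1)(b) rely on a script that decides which Xen hypervisor has the most spare capacity. A minimal sketch of that decision is shown below; the host records, field names, and selection rule (enough free memory, then lowest CPU load) are all assumptions, and gathering the real figures from each host is left out.

```python
def pick_host(hosts, needed_mem_mb):
    """Return the name of the least-loaded host that can fit the new VM.

    hosts is a list of dicts with hypothetical keys "name",
    "free_mem_mb", and "cpu_load". Returns None if no host has
    enough free memory.
    """
    candidates = [h for h in hosts if h["free_mem_mb"] >= needed_mem_mb]
    if not candidates:
        return None
    return min(candidates, key=lambda h: h["cpu_load"])["name"]


hosts = [
    {"name": "xen-a", "free_mem_mb": 2048, "cpu_load": 0.80},
    {"name": "xen-b", "free_mem_mb": 8192, "cpu_load": 0.35},
    {"name": "xen-c", "free_mem_mb": 512, "cpu_load": 0.10},
]
print(pick_host(hosts, needed_mem_mb=1024))  # -> xen-b
```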