Reprinted from: https://blog.csdn.net/u013109501/article/details/91987180

https://blog.csdn.net/Vancl_Wang/article/details/90349047

bert_utils: https://github.com/terrifyzhao/bert-utils

bert-Chinese-classification-task

First, the environment dependencies:

Install Python and TensorFlow. The versions used here are:

python 3.6.8, tensorflow 1.13.1, bert-serving-server 1.9.1, bert-serving-client 1.9.1

Second, install the packages:

    pip install bert-serving-server
    pip install bert-serving-client
    # install a specific version
    pip install bert-serving-client==1.9.6

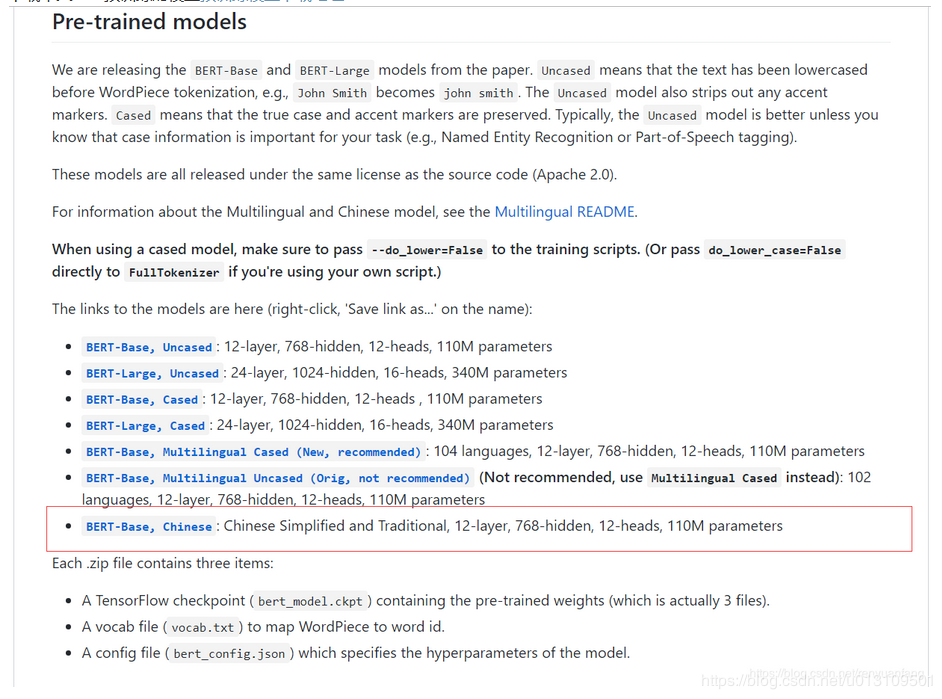

Third, download Google's pre-trained Chinese BERT model ( https://github.com/google-research/bert#pre-trained-models )

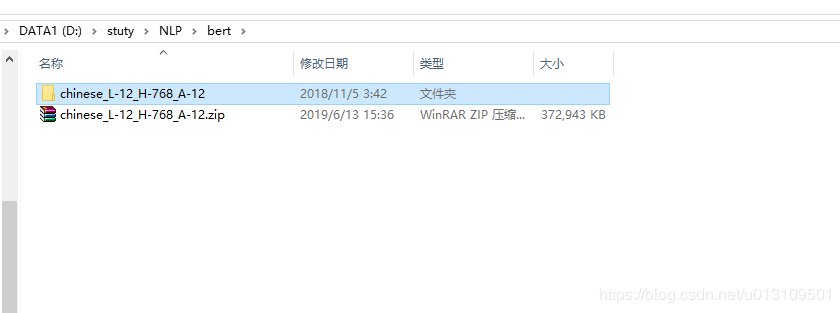

Fourth, once the download has finished, unzip the archive. The unzipped folder is the model directory used in the next step.
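Before starting the server, it can help to confirm that the unzipped folder really is a model directory. The sketch below uses a hypothetical helper, `missing_model_files` (not part of bert-serving), and assumes the file names that Google's released checkpoints such as chinese_L-12_H-768_A-12 ship with.

```python
import os

# Files shipped in Google's released BERT checkpoints
# (e.g. chinese_L-12_H-768_A-12): config, vocabulary and TF checkpoint.
EXPECTED_FILES = (
    "bert_config.json",
    "vocab.txt",
    "bert_model.ckpt.index",
    "bert_model.ckpt.meta",
    "bert_model.ckpt.data-00000-of-00001",
)


def missing_model_files(model_dir):
    """Return the expected files absent from model_dir (hypothetical helper)."""
    if not os.path.isdir(model_dir):
        # Wrong path entirely: everything is missing.
        return list(EXPECTED_FILES)
    present = set(os.listdir(model_dir))
    return [name for name in EXPECTED_FILES if name not in present]
```

An empty list from `missing_model_files(r"D:\stuty\NLP\bert\chinese_L-12_H-768_A-12")` suggests the path is ready to pass to -model_dir; a non-empty list usually means the path points at the zip file or at a parent folder.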

Fifth, start the server by entering the following command in cmd:

    bert-serving-start -model_dir D:\stuty\NLP\bert\chinese_L-12_H-768_A-12 -num_worker=1

The -model_dir parameter specifies the path of the model unzipped in the previous step. The -num_worker parameter sets how many workers are started; for example, num_worker=2 starts two workers, which can handle two requests at the same time. So if a high-spec machine is used as a dedicated BERT server, raising this parameter gives better support for concurrent requests.

(When I set num_worker=4, my computer froze. Also note that -model_dir must point to the unzipped model directory.)
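The same command can also be assembled from Python, for example to keep num_worker small on a desktop machine. This is a minimal sketch with hypothetical helper names (`build_start_command`, `start_server`); it only rebuilds the bert-serving-start command shown above and assumes that command is on PATH after the pip install.

```python
import subprocess


def build_start_command(model_dir, num_worker=1):
    """Assemble the bert-serving-start argument list (hypothetical helper).

    Each worker handles one request at a time, so num_worker is also the
    number of requests served concurrently; keep it low on a desktop.
    """
    if num_worker < 1:
        raise ValueError("num_worker must be at least 1")
    return ["bert-serving-start",
            "-model_dir", model_dir,
            "-num_worker=%d" % num_worker]


def start_server(model_dir, num_worker=1):
    """Launch the server as a background process (needs bert-serving-server)."""
    return subprocess.Popen(build_start_command(model_dir, num_worker))
```

Passing the arguments as a list rather than one string avoids quoting problems with Windows paths such as `D:\stuty\NLP\bert\chinese_L-12_H-768_A-12`.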

Sixth, once the server has started successfully, run the client code (the example comes from a CSDN blog post):

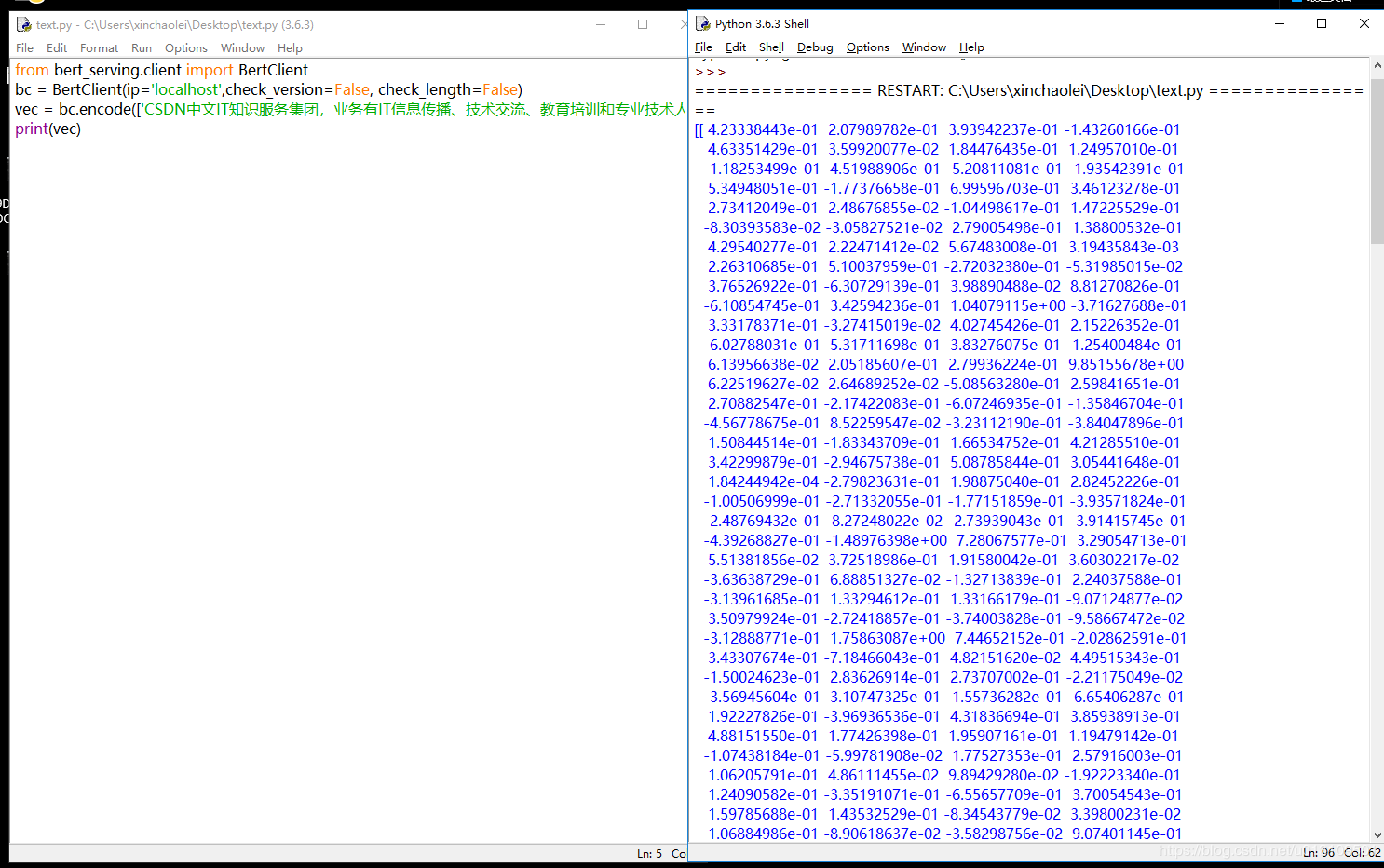

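    from bert_serving.client import BertClient

    # Connect to the local server; skip the client's version and sequence-length checks
    bc = BertClient(ip='localhost', check_version=False, check_length=False)
    # encode() takes a list of sentences and returns one vector per sentence.
    # The sentence describes CSDN as a Chinese IT knowledge-services group.
    vec = bc.encode(['CSDN中文IT知识服务集团,业务有IT信息传播、技术交流、教育培训和专业技术人才服务。旗下有网络社区、学习平台和交流平台。'])
    print(vec)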
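Because `BertClient.encode` takes a list of strings and returns one vector per string, a thin wrapper can catch malformed input before it reaches the server. `encode_texts` below is a hypothetical helper, not part of bert-serving-client; it assumes empty strings should be rejected and that any object with an `encode` method can stand in for the client.

```python
def encode_texts(client, texts):
    """Validate input, then call client.encode (hypothetical wrapper).

    A single sentence is wrapped in a list, because encode() expects a list.
    """
    if isinstance(texts, str):
        texts = [texts]
    texts = list(texts)
    if not texts or not all(isinstance(t, str) and t.strip() for t in texts):
        raise ValueError("texts must be a non-empty list of non-empty strings")
    vectors = client.encode(texts)
    # One row per input sentence is expected back from the server.
    if len(vectors) != len(texts):
        raise RuntimeError("expected one vector per input sentence")
    return vectors
```

With the server running, `encode_texts(bc, ['第一句', '第二句'])` should return two rows, one per sentence.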

Seventh, a screenshot after a successful run