Eager essentials

Eager essentials

Tensorflow eager execution of a command programming environment (imperative programming environment), he can return to the operational particular value, instead of building to run at a later computation graph. So you can easily use TensorFlow and debugging model, and the model can also be reduced.

Eager execution is a flexible machine learning research and experimentation platform, he offers:

- An intuitive interface (intuitive interface) - Construction and use of natural python code python data structure. Rapid iteration and small-scale model of small data sets.

- Easily debugging (easier to debug) - Direct call ops (operations) to check the run or test the model change. Using standard debugging tools for timely python error reporting.

natural control flow (natural control flow) - used to control flow instead of calculating the python FIG flow of control simplifies the specification of the dynamic model.

Installation and basic use

from __future__ import absolute_import, division, print_function, unicode_literals !pip install -q tensorflow-gpu==2.0.0-beta1 import tensorflow as tf import cProfile

In the TensorFlow2.0, eager is enabled by default.

tf.executing_eagerly () # returns renamed eager mode

If eager to open, you can run TensorFlow operation and immediately return the result:

x = [[2.]] m = tf.matmul(x, x) print("hello, {}".format(m)) # hello,[[4.]]

Open eager execution will change the operating behavior of TensorFlow - now they directly calculate their value and returns to the python. Object handle tf.tensor symbol refers to a specific value instead of calculating the figure. Since there is no build in FIG computing session (session), and thus to print () or a debugger to examine the results easily. Calculating, printing and inspection Tensor value does not destroy the calculated gradient flow.

eager execution with the numpy good collaboration. numpy operation accepts tf.tensor parameters. TensorFlow math converted to tf.tensor objects and objects python numpy array. tf.tensor.numpy method returns the value of the object as numpy ndarray.

In addition, eagerexecution support broadcasting. Operator overloading:

a = tf.constant([[1,2], [3,4] ]) print(a) # a tensor include(matrix,shape=(2,2),dtype=int32) b = tf.add(a,1) print(b) # broadingcasting-> [[2,3],[4,5]] print(a*b) # operator overloading import numpy as np c = np.multiply(a,b) # use numpy values print(c) print(a.numpy()) # tensor->numpy

Dynamic flow control

One benefit of using eager execution is to use the full functionality of host language at the time of execution model, such as:

def fizzbuzz(max_num): counter = tf.constant(0) max_num = tf.convert_to_tensor(max_num) for num in range(1, max_num.numpy()+1): num = tf.constant(num) if int(num % 3) == 0 and int(num % 5) == 0: print('FizzBuzz') elif int(num % 3) == 0: print('Fizz') elif int(num % 5) == 0: print('Buzz') else: print(num.numpy()) counter += 1

fizzbuzz(15) # 1 2 Fizz

Eager training

Computing gradients

Automatic differentiation (automatic differentiation) in machine learning algorithm is very useful, such as in the neural network of back-propagation (backpropagation) . In eager execution using tf.GradienTape to calculate the gradient of the tracking operation later.

You can train or calculated gradient tf.GradientTape in eager in. This is useful in the training cycle load.

Because the call is made each time (call), the different operations may occur, are propagated to all the money recorded to a "tape" in order to calculate the gradient, the reverse tape "play" and then discarded. A specific tf.GradientTape only counted once gradient, subsequent calls will lead to runtime errors. (Did not know)

Training model train a model

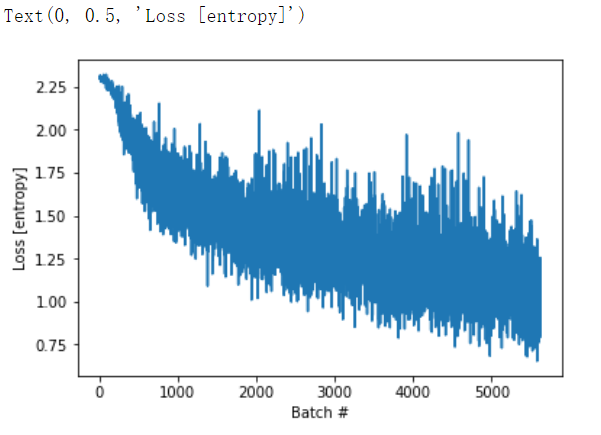

The following example creates a multi-tier model, the standard for digital MNIST handwritten classification. He demonstrated optimizer pooled and convolution API layer or the like can be constructed in a training computation graph execution environment eager.

# Fetch and format the mnist data (mnist_images, mnist_labels), _ = tf.keras.datasets.mnist.load_data() dataset = tf.data.Dataset.from_tensor_slices( (tf.cast(mnist_images[...,tf.newaxis]/255, tf.float32), tf.cast(mnist_labels,tf.int64))) dataset = dataset.shuffle(1000).batch(32) # Build the model mnist_model = tf.keras.Sequential([ tf.keras.layers.Conv2D(16,[3,3], activation='relu', input_shape=(None, None, 1)), tf.keras.layers.Conv2D(16,[3,3], activation='relu'), tf.keras.layers.GlobalAveragePooling2D(), tf.keras.layers.Dense(10) ]) # Even without training, call the model and inspect the output in eager execution: for images,labels in dataset.take(1): print("Logits: ", mnist_model(images[0:1]).numpy())

Although keras model has a built-training cycle (using the fit method), sometimes you need more customization, this is an example of an implementation cycle with eager:

optimizer = tf.keras.optimizers.Adam() loss_object = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True) loss_history = [] def train_step(images, labels): with tf.GradientTape() as tape: logits = mnist_model(images, training=True) # Add asserts to check the shape of the output. tf.debugging.assert_equal(logits.shape, (32, 10)) loss_value = loss_object(labels, logits) loss_history.append(loss_value.numpy().mean()) grads = tape.gradient(loss_value, mnist_model.trainable_variables) optimizer.apply_gradients(zip(grads, mnist_model.trainable_variables)) def train(): for epoch in range(3): for (batch, (images, labels)) in enumerate(dataset): train_step(images, labels) print ('Epoch {} finished'.format(epoch)) train() # Epoch 0 finished;Epoch 1 finished ...

import matplotlib.pyplot as plt plt.plot(loss_history) plt.xlabel('Batch #') plt.ylabel('Loss [entropy]')

Variables and optimizers

During training tf.Variable object store mutable (variable) values tf.Tensor, the automatic differentiation can be made easier, as a variable parameter model can be encapsulated in the class.

Use tf.Variable tf.GradientTape better packaging and model parameters. For example, the example can be rewritten in the automatic differentiation of:

class Model(tf.keras.Model): def __init__(self): super(Model, self).__init__() self.W = tf.Variable(5., name='weight') self.B = tf.Variable(10., name='bias') def call(self, inputs): return inputs * self.W + self.B # A toy dataset of points around 3 * x + 2 NUM_EXAMPLES = 2000 training_inputs = tf.random.normal([NUM_EXAMPLES]) noise = tf.random.normal([NUM_EXAMPLES]) training_outputs = training_inputs * 3 + 2 + noise # The loss function to be optimized def loss(model, inputs, targets): error = model(inputs) - targets return tf.reduce_mean(tf.square(error)) def grad(model, inputs, targets): with tf.GradientTape() as tape: loss_value = loss(model, inputs, targets) return tape.gradient(loss_value, [model.W, model.B]) # Define: # 1. A model. # 2. Derivatives of a loss function with respect to model parameters. # 3. A strategy for updating the variables based on the derivatives. model = Model() optimizer = tf.keras.optimizers.SGD(learning_rate=0.01) print("Initial loss: {:.3f}".format(loss(model, training_inputs, training_outputs))) # Training loop for i in range(300): grads = grad(model, training_inputs, training_outputs) optimizer.apply_gradients(zip(grads, [model.W, model.B])) if i % 20 == 0: print("Loss at step {:03d}: {:.3f}".format(i, loss(model, training_inputs, training_outputs))) print("Final loss: {:.3f}".format(loss(model, training_inputs, training_outputs))) print("W = {}, B = {}".format(model.W.numpy(), model.B.numpy()))

Use objects for state during eager execution

在TF1.x的计算图执行的时候,程序状态(例如 variables)是存储在全局集合中的,其生命周期是由tf.Session对象管理的。相反,在eager模式下,程序状态对象的生命周期是由其相应的python对象的生命周期决定的。

Variables are objects

在eager模式期间,variables在对象的最后一个引用被删除之前将一直存在而不被删除。.

if tf.test.is_gpu_available(): with tf.device("gpu:0"): print("GPU enabled") v = tf.Variable(tf.random.normal([1000, 1000])) v = None # v no longer takes up GPU memory

object-based saving 基于对象的保存检查点

这一节是培训检查点指南的缩写版本。

tf.train.Checkpoint 可以用来save和restore tf.Variables to/from checkpoint:

(变量保存和恢复)

# 首先创建一变量,并常见保存点变量 x = tf.Variable(10.) checkpoint = tf.train.Checkpoint(x=x) x.assign(2.) #赋给x一个新的值,并保存 checkpoint_path = './ckpt/' checkpoint.save('./ckpt/') # 这个地方是./ckpt/而不是./ckpt。 # 所以保存在./ckpt/ 目录下的 -1文件中。 # 如果是./ckpt,则直接保存在当前目录的ckpt-1的文件中 x.assign(11.) # Change the variable after saving. # Restore values from the checkpoint checkpoint.restore(tf.train.latest_checkpoint(checkpoint_path)) print(x) # =><tf.Variable 'Variable:0' shape=() dtype=float32, numpy=2.0>

为了保存和恢复模型,tf.train.Checkpoint存储对象的内部状态,而不需要隐藏变量。要记录一个模型的状态,优化器,以及全局步骤,也需要通过tf.train.Checkpoint来保存:

(模型的保存和恢复)

# save and restore model import os model = tf.keras.Sequential([ tf.keras.layers.Conv2D(16,[3,3],activation='relu'), tf.keras.layers.GlobalAveragePooling2D(), tf.keras.layers.Dense(10) ]) optimizer = tf.keras.optimizers.Adam(learning_rate=0.001) checkpoint_dir = 'path/to/model_dir' if not os.path.exists(checkpoint_dir): os.makedirs(checkpoint_dir) checkpoint_prefix = os.path.join(checkpoint_dir,'ckpt') # print(checkpoint_prefix) # path/to/model_dir/ckpt root = tf.train.Checkpoint(optimizer=optimizer,model=model) root.save(checkpoint_prefix) # ./path/to/ckpt-1.xxxx root.restore(tf.train.latest_checkpoint(checkpoint_dir)) # 恢复变量

注意:在许多训练循环中,在调用tf.train.Checkpoint.restore之后创建变量。 这些变量将在创建后立即恢复,并且可以使用断言来确保检查点已完全加载。 有关详细信息,请参阅培训检查点指南。

高级自动微分主题

相关推荐阅读:https://www.cnblogs.com/richqian/p/4549590.html

https://www.cnblogs.com/richqian/p/4534356.html

https://www.jianshu.com/p/fe2e7f0e89e5

Dynamic models

tf.GradientTape也可用于动态模型。 这是回溯线搜索算法(backtracking line search alg)的示例,尽管控制流很复杂,但它看起来像普通的NumPy代码,除了有自动微分是可区分的:(不会)

def line_search_step(fn, init_x, rate=1.0): with tf.GradientTape() as tape: # Variables are automatically recorded, but manually watch a tensor tape.watch(init_x) value = fn(init_x) grad = tape.gradient(value, init_x) grad_norm = tf.reduce_sum(grad * grad) init_value = value while value > init_value - rate * grad_norm: x = init_x - rate * grad value = fn(x) rate /= 2.0 return x, value

Custom gradients(自定义梯度)

自定义梯度是一种重写梯度的简单方法。根据输入,输出或结果定义梯度。例如这有一种在后向传递中剪切渐变范数的简单方法:

@tf.custom_gradient def clip_gradient_by_norm(x, norm): y = tf.identity(x) def grad_fn(dresult): return [tf.clip_by_norm(dresult, norm), None] return y, grad_fn # 自定义梯度通常用于为一系列操作提供数值稳定的梯度: def log1pexp(x): return tf.math.log(1 + tf.exp(x)) def grad_log1pexp(x): with tf.GradientTape() as tape: tape.watch(x) value = log1pexp(x) return tape.gradient(value, x) # The gradient computation works fine at x = 0. grad_log1pexp(tf.constant(0.)).numpy()

Performance

在eager模式下,计算会自动卸载(offload)到GPU,如果要控制 计算运行的设备,你可以使用tf.device(/gpu:0)快(或等效的CPU设备)中把他包含进去。

import time def measure(x, steps): # TensorFlow initializes a GPU the first time it's used, exclude from timing. tf.matmul(x, x) start = time.time() for i in range(steps): x = tf.matmul(x, x) # tf.matmul can return before completing the matrix multiplication # (e.g., can return after enqueing the operation on a CUDA stream). # The x.numpy() call below will ensure that all enqueued operations # have completed (and will also copy the result to host memory, # so we're including a little more than just the matmul operation # time). _ = x.numpy() end = time.time() return end - start # shape = (1000, 1000)

shape = (50, 50) # 我的电脑貌似只能跑50的,超过100jupyter notebook就会挂掉,另外 我依然不会查看GPU使用率 steps = 200 print("Time to multiply a {} matrix by itself {} times:".format(shape, steps)) # Run on CPU: with tf.device("/cpu:0"): print("CPU: {} secs".format(measure(tf.random.normal(shape), steps))) # Run on GPU, if available: if tf.test.is_gpu_available(): with tf.device("/gpu:0"): print("GPU: {} secs".format(measure(tf.random.normal(shape), steps))) else: print("GPU: not found")

一个tf.tensor对象可以复制到不同的设备上去执行操作:

if tf.test.is_gpu_available(): x = tf.random.normal([10, 10]) x_gpu0 = x.gpu() x_cpu = x.cpu() _ = tf.matmul(x_cpu, x_cpu) # Runs on CPU _ = tf.matmul(x_gpu0, x_gpu0) # Runs on GPU:0