I. Description of the problem

In practice we often face multi-class learning tasks. Some binary classification methods can be extended directly to the multi-class case, but more often we rely on a few basic strategies that use binary learners to solve multi-class problems.

Suppose there are $N$ classes $C_1, C_2, \ldots, C_N$. The basic idea of multi-class learning is "decomposition": split the multi-class task into several binary classification tasks and solve those. Specifically, the problem is first decomposed, and a classifier is trained for each resulting binary task. At test time, the predictions of these classifiers are combined to produce the final multi-class result. How to split the multi-class task is therefore the key question. Here we introduce three classic splitting strategies: One vs One (OvO), One vs Rest (OvR), and Many vs Many (MvM).

II. Splitting strategies

Given a dataset $D = \{(\boldsymbol{x}_1, y_1), (\boldsymbol{x}_2, y_2), \ldots, (\boldsymbol{x}_m, y_m)\}$, where $y_i \in \{C_1, C_2, \ldots, C_N\}$.

1. OvO strategy

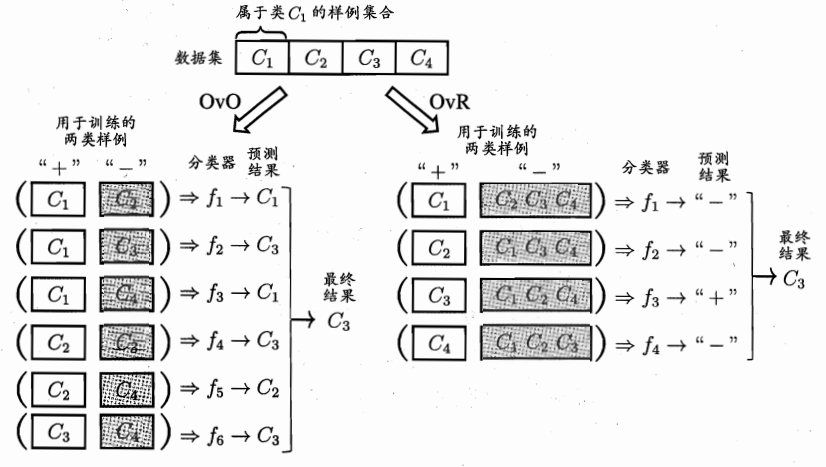

OvO pairs up the $N$ classes two at a time, producing $N(N-1)/2$ binary classification tasks. For example, to distinguish classes $C_i$ and $C_j$, OvO trains a classifier that treats the samples of class $C_i$ in $D$ as positive examples and the samples of class $C_j$ as negative examples. At test time, a new sample is submitted to all classifiers simultaneously, yielding $N(N-1)/2$ classification results. The final result is then produced by voting: the class predicted most often is taken as the final classification result. An example is shown in Figure 1.
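A minimal sketch of OvO training and voting in Python. It assumes a toy nearest-centroid base learner (`train_centroid`, hypothetical; any binary classifier that returns a real-valued score would do), and the names `ovo_train` and `ovo_predict` are likewise illustrative, not a library API.

```python
import math
from collections import Counter
from itertools import combinations


def train_centroid(pos, neg):
    """Hypothetical base learner: a nearest-centroid binary classifier.

    Returns a scoring function whose value is > 0 when x is closer to the
    centroid of the positive samples than to that of the negative samples.
    """
    def mean(points):
        return [sum(col) / len(points) for col in zip(*points)]

    c_pos, c_neg = mean(pos), mean(neg)
    return lambda x: math.dist(x, c_neg) - math.dist(x, c_pos)


def ovo_train(X, y):
    """Train one binary classifier for every pair (C_i, C_j), i < j."""
    classes = sorted(set(y))
    models = {}
    for ci, cj in combinations(classes, 2):
        pos = [x for x, label in zip(X, y) if label == ci]  # C_i: positive examples
        neg = [x for x, label in zip(X, y) if label == cj]  # C_j: negative examples
        models[(ci, cj)] = train_centroid(pos, neg)
    return models


def ovo_predict(models, x):
    """Submit x to all N(N-1)/2 classifiers and return the class with most votes.

    Ties are broken by Counter.most_common, i.e. by the order in which
    classes first received a vote.
    """
    votes = Counter()
    for (ci, cj), score in models.items():
        votes[ci if score(x) > 0 else cj] += 1
    return votes.most_common(1)[0][0]


X = [(0, 0), (0, 1), (5, 5), (5, 6), (10, 0), (10, 1)]
y = ["A", "A", "B", "B", "C", "C"]
models = ovo_train(X, y)
print(len(models))                    # 3 == 3 * (3 - 1) / 2
print(ovo_predict(models, (9, 0.5)))  # C
```

For the test point (9, 0.5), the A-vs-C and B-vs-C classifiers both vote for C, so C wins with two of the three votes.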

2. OvR strategy

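OvR trains $N$ classifiers, each time taking the samples of one class as positive examples and the samples of all other classes as negative examples. At test time, if exactly one classifier predicts the positive class, the corresponding class label is taken as the final classification result. If several classifiers predict positive, the prediction confidence of each is usually considered, and the class label with the highest confidence is chosen. An example is shown in Figure 1.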
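A minimal OvR sketch in Python, again assuming a hypothetical nearest-centroid base learner whose score doubles as a rough confidence. The fallback when zero classifiers predict positive is an assumption of this sketch (the text above only covers one or several positives); here it also picks the most confident classifier.

```python
import math


def train_centroid(pos, neg):
    """Hypothetical base learner: nearest centroid.

    The returned score is > 0 for the positive side; its magnitude is used
    here as a rough confidence.
    """
    def mean(points):
        return [sum(col) / len(points) for col in zip(*points)]

    c_pos, c_neg = mean(pos), mean(neg)
    return lambda x: math.dist(x, c_neg) - math.dist(x, c_pos)


def ovr_train(X, y):
    """Train N classifiers: class c is positive, all other classes are negative."""
    models = {}
    for c in sorted(set(y)):
        pos = [x for x, label in zip(X, y) if label == c]
        neg = [x for x, label in zip(X, y) if label != c]
        models[c] = train_centroid(pos, neg)
    return models


def ovr_predict(models, x):
    scores = {c: score(x) for c, score in models.items()}
    positives = [c for c, s in scores.items() if s > 0]
    if len(positives) == 1:
        # Exactly one classifier says "positive": take its class.
        return positives[0]
    # Several (or, by assumption, zero) positives: take the most confident classifier.
    return max(scores, key=scores.get)


X = [(0, 0), (0, 1), (5, 5), (5, 6), (10, 0), (10, 1)]
y = ["A", "A", "B", "B", "C", "C"]
models = ovr_train(X, y)
print(len(models))                    # 3 classifiers, one per class
print(ovr_predict(models, (9, 0.5)))  # C
```

Note the cost trade-off this makes visible: OvR trains only $N$ classifiers, but each one sees the whole training set, whereas each OvO classifier sees only the samples of two classes.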

Figure 1. Schematic diagram of OvO and OvR

3. MvM strategy

MvM takes several classes as positive and several other classes as negative each time. Clearly, OvO and OvR are special cases of MvM. The positive and negative classes in MvM must be specially designed; they cannot be chosen at random. Here we introduce the most commonly used MvM technique: error-correcting output codes (ECOC).
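To make "OvO and OvR are special cases of MvM" concrete, the sketch below represents every binary split as a pair (positive classes, negative classes). The helper names are hypothetical; in the OvO case, the classes outside the current pair simply do not take part in that split.

```python
from itertools import combinations


def ovr_splits(classes):
    """OvR as MvM: one class on the positive side, all remaining classes negative."""
    return [({c}, set(classes) - {c}) for c in classes]


def ovo_splits(classes):
    """OvO as MvM: one positive class and one negative class per split;
    the other N-2 classes do not take part in that split."""
    return [({ci}, {cj}) for ci, cj in combinations(classes, 2)]


def is_valid_split(pos, neg):
    """A binary split needs non-empty, disjoint positive and negative sides."""
    return bool(pos) and bool(neg) and not (pos & neg)


classes = ["C1", "C2", "C3", "C4"]
general = ({"C1", "C2"}, {"C3", "C4"})  # hypothetical many-vs-many split

for pos, neg in ovr_splits(classes):
    print(sorted(pos), "vs", sorted(neg))
print(len(ovr_splits(classes)), len(ovo_splits(classes)))  # 4 6
print(is_valid_split(*general))                            # True
```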

ECOC brings the idea of coding into class splitting and tolerates errors as far as possible during decoding. The ECOC workflow consists of two steps:

(1) Encoding: partition the $N$ classes $M$ times. Each partition designates some of the classes as positive and the rest as negative, which forms one binary training set. This yields $M$ training sets in total, from which $M$ classifiers are trained.

(2) Decoding: each of the $M$ classifiers makes a prediction on the test sample, and these predictions together form a code. This code is compared with the codeword of each class, and the class whose codeword is at the smallest distance is returned as the final prediction.
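A minimal sketch of both steps in Python. It assumes a hand-picked coding matrix for four classes (the `CODES` table is hypothetical, as are the nearest-centroid base learner and the names `ecoc_train` / `ecoc_predict`) and uses Hamming distance for decoding; Hamming distance is one common choice, Euclidean distance is another.

```python
import math

# Hypothetical coding matrix: one codeword (row) per class, one column per
# binary classifier f1..f5; +1 = that class is positive, -1 = negative.
CODES = {
    "A": [+1, -1, +1, +1, -1],
    "B": [-1, +1, +1, -1, -1],
    "C": [+1, +1, -1, -1, -1],
    "D": [-1, -1, -1, -1, +1],
}


def train_centroid(pos, neg):
    """Hypothetical base learner: nearest centroid; score > 0 means positive."""
    def mean(points):
        return [sum(col) / len(points) for col in zip(*points)]

    c_pos, c_neg = mean(pos), mean(neg)
    return lambda x: math.dist(x, c_neg) - math.dist(x, c_pos)


def ecoc_train(X, y, codes):
    """Encoding: column j splits the classes into positive/negative sides,
    giving M binary training sets and M trained classifiers."""
    m = len(next(iter(codes.values())))
    models = []
    for j in range(m):
        pos = [x for x, label in zip(X, y) if codes[label][j] == +1]
        neg = [x for x, label in zip(X, y) if codes[label][j] == -1]
        models.append(train_centroid(pos, neg))
    return models


def hamming(a, b):
    """Number of positions where two codes differ."""
    return sum(ai != bi for ai, bi in zip(a, b))


def ecoc_predict(models, codes, x):
    """Decoding: the M predictions form a code; return the class whose
    codeword is closest to it (ties go to the first class in `codes`)."""
    predicted = [1 if f(x) > 0 else -1 for f in models]
    label = min(codes, key=lambda c: hamming(predicted, codes[c]))
    return label, predicted


X = [(0, 0), (0, 1), (10, 0), (10, 1), (0, 10), (0, 11), (10, 10), (10, 11)]
y = ["A", "A", "B", "B", "C", "C", "D", "D"]
models = ecoc_train(X, y, CODES)
label, predicted = ecoc_predict(models, CODES, (10, 0.5))
print(predicted)  # [-1, -1, 1, -1, -1]  (B's codeword is [-1, 1, 1, -1, -1])
print(label)      # B
```

Column f2 splits {B, C} against {A, D}. On this toy layout the two groups have the same centroid, so the nearest-centroid learner scores every point 0 and always outputs -1. For the point (10, 0.5) that bit is wrong, yet the predicted code is still closer to B's codeword (distance 1) than to any other (distance 2 or more), so decoding returns B. This is the fault tolerance the decoding step is meant to provide.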