1 Introduction

Deep Reinforcement Learning (DRL) is arguably one of the most cutting-edge areas of deep learning research. Its goal is decision-making and control: giving robots the ability to decide how to act and to control their own movement. The machines mankind has built so far are still far less agile than even some lower organisms, such as bees. DRL aims to close that gap, and its key idea is to use neural networks for decision-making and control.

So, after some thought, I decided to start a "DRL Frontier" series. This first post introduces the latest research results without explaining the specific methods (as the blogger, I cannot fully understand them that quickly myself). It is therefore suitable for readers who know nothing about this area yet, or who are simply interested in it.

Let's get started.

2 Benchmarking Deep Reinforcement Learning for Continuous Control

Article Source: http://arxiv.org/abs/1604.06778

Time: April 25, 2016

Open-source software address: https://github.com/rllab/rllab

This paper does not propose any new algorithm, but it is an extremely important one, as is clear at first glance. It establishes a benchmark for DRL in the field of continuous control, and the key point is that the authors have open-sourced their code. In the authors' own words, the aim is:

To encourage adoption by other researchers!

In this paper, or rather in this open-source software package, the authors reimplemented several mainstream and cutting-edge continuous control algorithms in Python, and then tested them on 31 continuous control problems of varying difficulty.

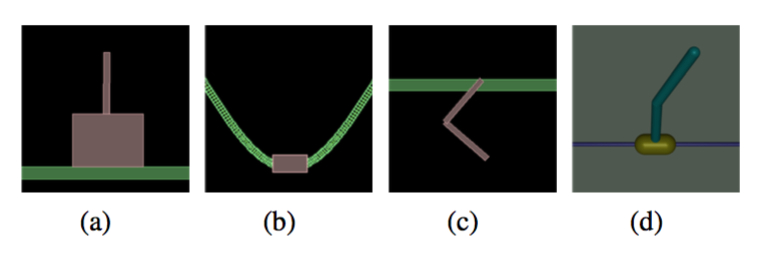

The tasks are divided into four categories:

1) Basic tasks: for example, keeping an inverted pendulum balanced

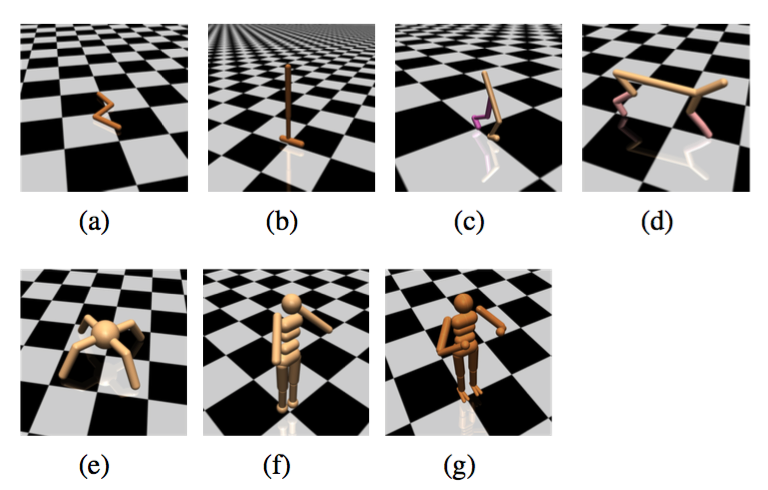

2) Locomotion tasks: make the simulated creature move forward, the faster the better!

3) Partially observable tasks: the simulated creature receives only limited sensory information; for example, it knows the position of each joint but not its velocity

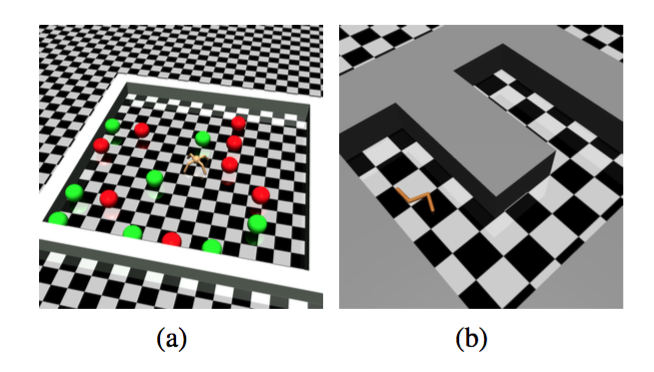

4) Hierarchical tasks: these combine high-level decision-making with low-level control. For example, make a simulated ant find food, or make a simulated snake navigate a maze. These tasks are very difficult.
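To make the third category concrete, here is a minimal sketch of what "knowing the joint positions but not the velocities" could look like in code. The state layout (positions first, then velocities) and the function name `limit_observation` are assumptions made for illustration; they are not taken from the paper or from rllab.

```python
from typing import List, Sequence


def limit_observation(full_state: Sequence[float], n_joints: int) -> List[float]:
    """Return only the joint positions, dropping the velocities.

    Assumed (hypothetical) layout: the first n_joints entries are positions
    and the remaining n_joints entries are velocities.
    """
    if len(full_state) != 2 * n_joints:
        raise ValueError("expected n_joints positions followed by n_joints velocities")
    return list(full_state[:n_joints])


# Toy two-joint state: positions (0.1, -0.4) followed by velocities (1.5, 0.0).
full_state = [0.1, -0.4, 1.5, 0.0]
observation = limit_observation(full_state, n_joints=2)
print(observation)
```

An agent that only sees `observation` has to infer the velocities from how the positions change over time, which is what makes this category harder than the fully observed one.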

With a common test environment, different algorithms can be compared directly.
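The idea of a shared test environment can be sketched roughly as follows. This is only a toy illustration, not the rllab API: the 1-D task, the two hand-written policies, and the names `run_episode` and `benchmark` are all made up for this example. The point is simply that every policy is evaluated on the same task with the same random seeds.

```python
import random
from typing import Callable, Dict

Policy = Callable[[float], float]


def run_episode(policy: Policy, seed: int, horizon: int = 50) -> float:
    """Toy 1-D task: push a point toward the origin; reward is -|position|."""
    rng = random.Random(seed)  # same seed => same start and same noise for every policy
    position = rng.uniform(-1.0, 1.0)
    total_reward = 0.0
    for _ in range(horizon):
        action = max(-0.1, min(0.1, policy(position)))  # clip the continuous action
        position += action + rng.gauss(0.0, 0.01)
        total_reward += -abs(position)
    return total_reward


def benchmark(policies: Dict[str, Policy], seeds: range) -> Dict[str, float]:
    """Average return of each policy over the same set of seeds."""
    return {
        name: sum(run_episode(policy, seed) for seed in seeds) / len(seeds)
        for name, policy in policies.items()
    }


# Two hypothetical policies standing in for the algorithms being compared.
policies = {
    "do_nothing": lambda position: 0.0,
    "proportional": lambda position: -0.5 * position,
}
scores = benchmark(policies, seeds=range(10))
for name, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {score:.2f}")
```

Because the task and the seeds are fixed, any difference in the scores comes from the policies themselves, which is the property a benchmark needs.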

The results of the comparison:

- TNPG and TRPO (proposed by Schulman of UC Berkeley, who is now at OpenAI) perform best, followed by DDPG (proposed by David Silver's team at DeepMind).

- No algorithm is able to complete the hierarchical tasks, which calls for new algorithms.

However, the paper does not test DeepMind's A3C algorithm (http://arxiv.org/pdf/1602.01783), which is the best-performing algorithm according to DeepMind's paper.

3 Summary

I believe UC Berkeley's open-source release will have an important influence on the academic community: many researchers will benefit from the publicly available reimplementations of these algorithms. Future studies will probably be tested on this benchmark.

Reproduced from: https://www.cnblogs.com/alan-blog-TsingHua/p/9733931.html