Retrieval Augmented Generation (RAG) is an AI framework that enhances text generation by combining information retrieval and natural language processing (NLP) capabilities. Specifically, the language model in the RAG system queries and searches a knowledge base or external database through a retrieval mechanism that incorporates the latest information into the generated response, making the final output more accurate and containing more context.

Zilliz Cloud ( https://zilliz.com.cn/cloud) is built on the Milvus ( https://milvus.io/) vector database and provides solutions for storing and processing large-scale vectorized data, which can be used for efficient management and analysis. and retrieve data. Developers can use the vector database function of Zilliz Cloud to store and search massive Embedding vectors, further enhancing the retrieval module capabilities in RAG applications.

AWS Bedrock cloud service ( https://aws.amazon.com/cn/bedrock/) provides a variety of pre-trained basic models that can be used to deploy and expand NLP solutions. Developers can integrate models of language generation, understanding, and translation into AI applications through AWS Bedrock. In addition, AWS Bedrock can generate relevant and context-rich responses to text, further increasing the capabilities of RAG applications.

01. Use Zilliz Cloud and AWS Bedrock to build RAG applications

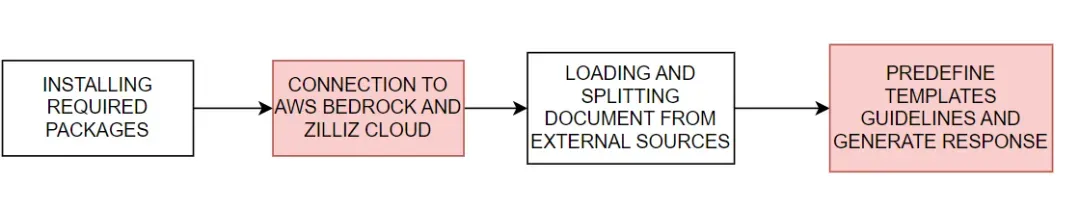

We will demonstrate how to use Zilliz Cloud with AWS Bedrock builds RAG applications. The basic process is shown in Figure 1:

Figure 1. Basic process of building a RAG application using Zilliz Cloud and AWS Bedrock

Figure 1. Basic process of building a RAG application using Zilliz Cloud and AWS Bedrock

#download the packages then import them

! pip install --upgrade --quiet langchain langchain-core langchain-text-splitters langchain-community langchain-aws bs4 boto3

# For example

import bs4

import boto3

Connect to AWS Bedrock and Zilliz Cloud

Next, set the environment variables required to connect to AWS and Zilliz Cloud services. You need to provide the AWS service region, access key, and Zilliz Cloud's Endpoint URI and API key to connect to the AWS Bedrock and Zilliz Cloud services.

# Set the AWS region and access key environment variables

REGION_NAME = "us-east-1"

AWS_ACCESS_KEY_ID = os.getenv("AWS_ACCESS_KEY_ID")

AWS_SECRET_ACCESS_KEY = os.getenv("AWS_SECRET_ACCESS_KEY")

# Set ZILLIZ cloud environment variables

ZILLIZ_CLOUD_URI = os.getenv("ZILLIZ_CLOUD_URI")

ZILLIZ_CLOUD_API_KEY = os.getenv("ZILLIZ_CLOUD_API_KEY")

With the access credentials provided above, we created a boto3 client ( https://boto3.amazonaws.com/v1/documentation/api/latest/index.html) for connecting to the AWS Bedrock Runtime service and integrating AWS Bedrock language model in . Next, we initialize a ChatBedrock instance ( https://python.langchain.com/v0.1/docs/integrations/chat/bedrock/), connect to the client, and specify the language model to use. The model we use in this tutorial anthropic.claude-3-sonnet-20240229-v1:0 . This step helps us set up the infrastructure for generating text responses, and also configures the model's temperature parameters to control the diversity of generated responses. BedrockEmbeddings instances can be used to convert unstructured data such as text ( https://zilliz.com.cn/glossary/%E9%9D%9E%E7%BB%93%E6%9E%84%E5%8C%96%E6 %95%B0%E6%8D%AE) into a vector.

# Create a boto3 client with the specified credentials

client = boto3.client(

"bedrock-runtime",

region_name=REGION_NAME,

aws_access_key_id=AWS_ACCESS_KEY_ID,

aws_secret_access_key=AWS_SECRET_ACCESS_KEY,

)

# Initialize the ChatBedrock instance for language model operations

llm = ChatBedrock(

client=client,

model_id="anthropic.claude-3-sonnet-20240229-v1:0",

region_name=REGION_NAME,

model_kwargs={"temperature": 0.1},

)

# Initialize the BedrockEmbeddings instance for handling text embeddings

embeddings = BedrockEmbeddings(client=client, region_name=REGION_NAME)

Collect and process information

After the Embedding model is successfully initialized, the next step is to load data from an external source. Create a WebBaseLoader instance ( https://python.langchain.com/v0.1/docs/integrations/document_loaders/web_base/) to crawl content from the specified web source.

In this tutorial, we will load content from AI agent related articles. The loader uses SoupStrainer from BeautifulSoup (https://www.crummy.com/software/BeautifulSoup/bs4/doc/) to parse specific parts of the web page - namely with "post-content", "post-title" and "post" -header" section to ensure that only relevant content is retrieved. The loader then retrieves the document from the specified network source, providing a list of related content for subsequent processing. Next, we use the RecursiveCharacterTextSplitter instance ( https://python.langchain.com/v0.1/docs/modules/data_connection/document_transformers/recursive_text_splitter/) to split the retrieved document into smaller text chunks. This can make the content more manageable, and can also pass these text blocks into other components, such as text Embedding or language generation modules.

# Create a WebBaseLoader instance to load documents from web sources

loader = WebBaseLoader(

web_paths=("https://lilianweng.github.io/posts/2023-06-23-agent/",),

bs_kwargs=dict(

parse_only=bs4.SoupStrainer(

class_=("post-content", "post-title", "post-header")

)

),

)

# Load documents from web sources using the loader

documents = loader.load()

# Initialize a RecursiveCharacterTextSplitter for splitting text into chunks

text_splitter = RecursiveCharacterTextSplitter(chunk_size=2000, chunk_overlap=200)

# Split the documents into chunks using the text_splitter

docs = text_splitter.split_documents(documents)

Generate response

The prompt template pre-defines the structure of each response, which can guide the AI to use statistics and numbers when possible and avoid making up answers when relevant knowledge is lacking.

# Define the prompt template for generating AI responses

PROMPT_TEMPLATE = """

Human: You are a financial advisor AI system, and provides answers to questions by using fact based and statistical information when possible.

Use the following pieces of information to provide a concise answer to the question enclosed in <question> tags.

If you don't know the answer, just say that you don't know, don't try to make up an answer.

<context>

{context}

</context>

<question>

{question}

</question>

The response should be specific and use statistics or numbers when possible.

Assistant:"""

# Create a PromptTemplate instance with the defined template and input variables

prompt = PromptTemplate(

template=PROMPT_TEMPLATE, input_variables=["context", "question"]

)

Initialize the Zilliz vector store and connect to the Zilliz Cloud platform. The vector store is responsible for converting documents into vectors for subsequent quick and efficient retrieval of documents. The retrieved documents are then formatted and organized into coherent text, and the AI integrates relevant information into responses, ultimately delivering highly accurate and relevant answers.

# Initialize Zilliz vector store from the loaded documents and embeddings

vectorstore = Zilliz.from_documents(

documents=docs,

embedding=embeddings,

connection_args={

"uri": ZILLIZ_CLOUD_URI,

"token": ZILLIZ_CLOUD_API_KEY,

"secure": True,

},

auto_id=True,

drop_old=True,

)

# Create a retriever for document retrieval and generation

retriever = vectorstore.as_retriever()

# Define a function to format the retrieved documents

def format_docs(docs):

return "\n\n".join(doc.page_content for doc in docs)

Finally, we create a complete RAG link for generating AI responses. This link first retrieves documents related to the user query from the vector store, retrieves and formats them, and then passes them to the prompt template ( https://python.langchain.com/v0.1/docs/modules/model_io/ prompts/) to generate a response structure. This structured input is then passed into a language model to generate a coherent response, which is ultimately parsed into a string format and presented to the user, providing an accurate, context-rich answer.

# Define the RAG (Retrieval-Augmented Generation) chain for AI response generation

rag_chain = (

{"context": retriever | format_docs, "question": RunnablePassthrough()}

| prompt

| llm

| StrOutputParser()

)

# rag_chain.get_graph().print_ascii()

# Invoke the RAG chain with a specific question and retrieve the response

res = rag_chain.invoke("What is self-reflection of an AI Agent?")

print(res)

The following is an example response result:

Self-reflection is a vital capability that allows autonomous AI agents to improve iteratively by analyzing and refining their past actions, decisions, and mistakes. Some key aspects of self-reflection for AI agents include:

1. Evaluating the efficiency and effectiveness of past reasoning trajectories and action sequences to identify potential issues like inefficient planning or hallucinations (generating consecutive identical actions without progress).

2. Synthesizing observations and memories from past experiences into higher-level inferences or summaries to guide future behavior.

02. Advantages of using Zilliz Cloud and AWS Bedrock

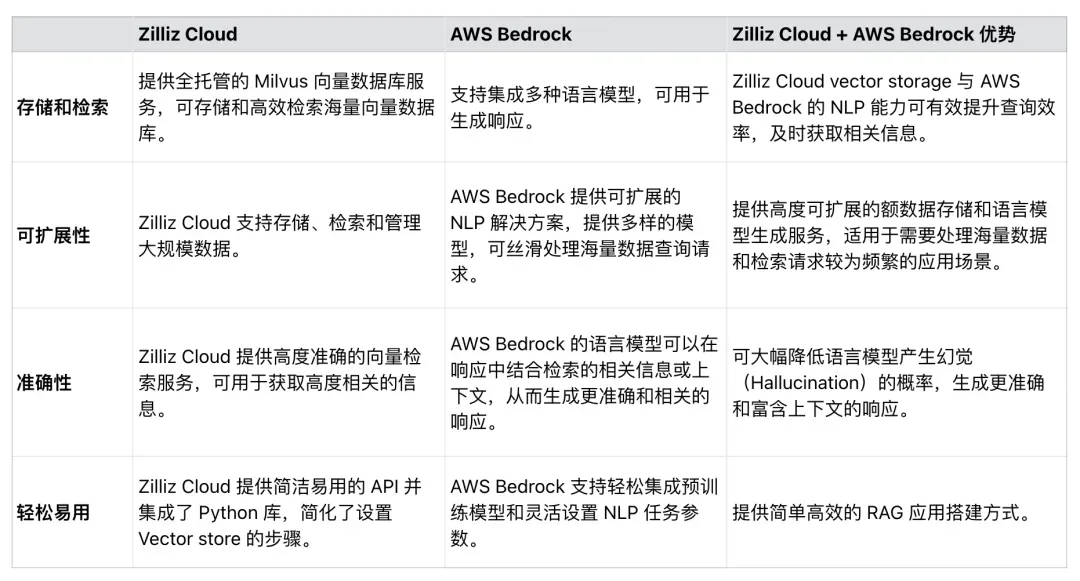

As shown in Table 1, Zilliz Cloud can be seamlessly integrated with AWS Bedrock to enhance the efficiency, scalability, and accuracy of RAG applications. Developers can use these two services to develop comprehensive solutions that process massive data sets, simplify RAG application processes, and improve the accuracy of RAG-generated responses.

Table 1. Benefits of using Zilliz Cloud and AWS Bedrock

Table 1. Benefits of using Zilliz Cloud and AWS Bedrock

03. Summary

This article mainly introduces how to use Zilliz Cloud and AWS Bedrock to build RAG applications.

Zilliz Cloud, a vector database built on Milvus, provides scalable storage and retrieval solutions for Embedding vectors, while AWS Bedrock provides a powerful pre-trained model for language generation. Through sample code, we show how to connect to Zilliz Cloud and AWS Bedrock, load data from external sources, process and split the data, and finally build a complete RAG link. The RAG application built in this article can minimize the probability that LLM will produce hallucinations and provide inaccurate responses, giving full play to the synergy between modern NLP models and vector databases. We hope that this tutorial will inspire others to use similar techniques in building RAG applications.

High school students create their own open source programming language as a coming-of-age ceremony - sharp comments from netizens: Relying on the defense, Apple released the M4 chip RustDesk. Domestic services were suspended due to rampant fraud. Yunfeng resigned from Alibaba. In the future, he plans to produce an independent game on Windows platform Taobao (taobao.com) Restart web version optimization work, programmers’ destination, Visual Studio Code 1.89 releases Java 17, the most commonly used Java LTS version, Windows 10 has a market share of 70%, Windows 11 continues to decline Open Source Daily | Google supports Hongmeng to take over; open source Rabbit R1; Docker supported Android phones; Microsoft’s anxiety and ambitions; Haier Electric has shut down the open platform