01

Background of the project

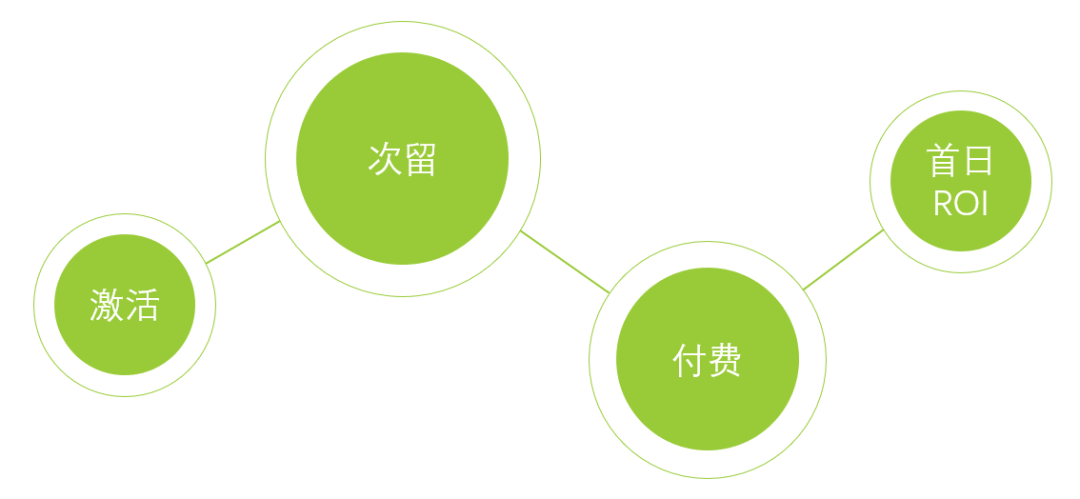

Performance advertising delivery is a game between two parties: the media platform wants to sell its traffic at the highest possible price, while the advertiser wants both advertising cost and back-end effect to meet its targets. As the performance advertising industry has matured, advertisers are no longer satisfied with assessing only shallow conversions such as app wake-up and activation. More and more of them ask media platforms to optimize back-end effects, which include retention rate, payment rate, first-day payment ROI and other deep conversion types.

In this article, we review how we optimized the bidding model for performance advertising.

Advertising media platforms generally use a dual-bid model to protect both the advertiser's shallow conversion cost and its back-end effect. Advertisers' demands include shallow conversion cost compliance, deep conversion cost compliance, and the deep conversion rate compliance that is implicit in the two bids. The media platform therefore needs to design an eCPM (effective cost per mille, the advertising revenue per thousand impressions) bidding formula that meets these requirements while maximizing the platform's revenue.

02

Project history

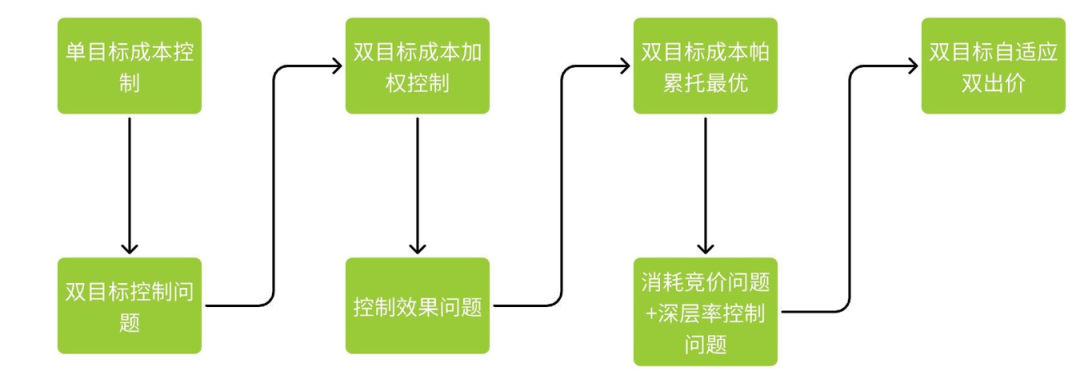

2.1 Traditional single bid

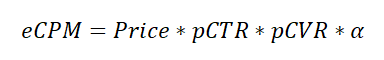

Advertisers bid on a single conversion goal (e.g., download or app wake-up). The media platform uses models to predict the click-through rate and the conversion rate, converting the value of one conversion into the value of one impression. Because these predictions are biased, an additional bid factor α adjusts the bid to protect the advertiser's conversion cost.

The bid factor in the formula above plays two main roles. First, it calibrates the predictions so that the value of an impression can be measured accurately from the predicted click and conversion rates. Second, it screens for high-quality traffic, combining predictions with audience tags to dynamically raise the bidding power of high-quality traffic.
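The formula itself survives only as a figure. A common way to write a single-bid eCPM, which we assume here, is α × bid × pCTR × pCVR × 1000: the expected value of one impression under a cost-per-conversion bid, scaled to a thousand impressions and multiplied by the bid factor. The function name and example numbers below are hypothetical.

```python
def single_bid_ecpm(bid: float, pctr: float, pcvr: float, alpha: float = 1.0) -> float:
    """Hypothetical single-bid eCPM (assumed form, not the production formula).

    bid   : advertiser's bid per conversion (e.g. per download or wake-up)
    pctr  : predicted click-through rate of this impression
    pcvr  : predicted conversion rate given a click
    alpha : bid factor that calibrates prediction bias and favors good traffic
    """
    # Expected value of one impression = bid * P(click) * P(conversion | click);
    # multiply by 1000 to express it per thousand impressions.
    return alpha * bid * pctr * pcvr * 1000.0


# Same impression, with and without a bid factor that trims the bid by 10%.
print(single_bid_ecpm(bid=20.0, pctr=0.02, pcvr=0.10))
print(single_bid_ecpm(bid=20.0, pctr=0.02, pcvr=0.10, alpha=0.9))
```

Keeping α as a separate multiplier means calibration and traffic screening can change the bid without touching the advertiser's stated conversion price.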

Before 2022, we had pushed this formula close to its limits. Bid factor control evolved from inverse-proportional control to calculus-based control, from hourly updates to minute-level updates, from control at a single granularity to multi-dimensional dynamic aggregation, and from a single strategy to one that also handles cold start, regular delivery and aggressive volume acquisition. As prediction models kept improving and advanced model calibration algorithms were built, the bid factor came to serve mainly as a screen for high-quality traffic, while shallow cost stayed stable and controllable thanks to accurate prediction and rapid calibration in the model itself. That series of optimizations is a story of its own, which I hope to share another time.
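The article does not spell out either controller. As a hedged sketch, assuming "calculus-based control" means a PID-style controller (proportional, integral and derivative terms), the bid factor can be recomputed every control interval from the relative gap between target and actual cost. The class name `BidFactorPID`, the gains and the clipping bounds are all illustrative assumptions; the inverse-proportional rule is included for comparison.

```python
from dataclasses import dataclass


@dataclass
class BidFactorPID:
    """Hypothetical PID-style controller for the bid factor alpha.

    Called once per control interval (e.g. every minute) with the target and
    observed conversion cost of one campaign or aggregation bucket.
    """
    kp: float = 0.5              # proportional gain (assumed)
    ki: float = 0.1              # integral gain (assumed)
    kd: float = 0.05             # derivative gain (assumed)
    base_alpha: float = 1.0      # alpha when cost is exactly on target
    alpha_min: float = 0.2       # clipping bounds stop one noisy interval
    alpha_max: float = 3.0       # from swinging the bid too far
    integral_limit: float = 5.0  # anti-windup bound on the accumulated error
    _integral: float = 0.0
    _prev_error: float = 0.0

    def update(self, target_cost: float, actual_cost: float) -> float:
        # Relative error: > 0 when cost is under target (room to bid up),
        # < 0 when cost is over target (bid down).
        error = (target_cost - actual_cost) / target_cost
        self._integral = max(-self.integral_limit,
                             min(self.integral_limit, self._integral + error))
        derivative = error - self._prev_error
        self._prev_error = error
        raw = (self.base_alpha + self.kp * error
               + self.ki * self._integral + self.kd * derivative)
        return min(self.alpha_max, max(self.alpha_min, raw))


def inverse_proportional_alpha(target_cost: float, actual_cost: float) -> float:
    """Simpler inverse-proportional rule for comparison: alpha = target / actual."""
    return target_cost / actual_cost


controller = BidFactorPID()
for observed_cost in (12.0, 11.0, 10.5, 10.0):  # cost drifting back toward a target of 10
    print(observed_cost, round(controller.update(10.0, observed_cost), 3))
```

The integral term corrects persistent bias that a purely proportional rule leaves behind, and the derivative term damps overshoot when cost moves quickly between intervals.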

The challenge arrived in the second half of 2021, when the advertising industry began shifting wholesale to dual-bid advertising. Deep cost control moved to the top of our agenda, and changes in the budget structure made it a hurdle we had to overcome.

2.2 Weighted double bid

In business terms, dual bidding means that the advertiser sets two bid targets, one shallow and one deep, such as activation + retention or wake-up + payment. The advertiser requires the shallow cost to meet its target and also requires the deep effect, especially the deep cost, to meet its target. From the second half of 2021, deep cost control and optimization became our core pain point. To adapt quickly, we took small steps and rapidly launched weighted dual bidding.

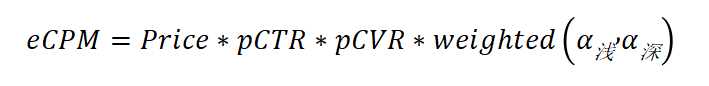

Common approaches to multi-objective optimization include Pareto optimization and weighted optimization. We used the weighted approach: on top of the shallow single bid, we added a deep cost-control factor and weighted the two to obtain the final cost-control factor. This first solved the problem of having no deep cost control at all.
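The weighting itself is only shown in a figure. A minimal sketch, assuming a linear weight between the shallow factor α_shallow and the deep factor α_deep, looks like this; the function names and the weight parameter are hypothetical.

```python
def weighted_bid_factor(alpha_shallow: float, alpha_deep: float, weight: float) -> float:
    """Hypothetical linear weighting of shallow and deep cost-control factors.

    weight = 1 ignores deep cost entirely; weight = 0 ignores shallow cost.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return weight * alpha_shallow + (1.0 - weight) * alpha_deep


def weighted_dual_bid_ecpm(shallow_bid: float, pctr: float, pcvr: float,
                           alpha_shallow: float, alpha_deep: float,
                           weight: float) -> float:
    # The eCPM keeps the shallow single-bid structure; only the factor changes,
    # and no per-impression deep prediction enters the bid.
    alpha = weighted_bid_factor(alpha_shallow, alpha_deep, weight)
    return alpha * shallow_bid * pctr * pcvr * 1000.0


# Shallow controller wants to bid up, deep controller wants to bid down.
print(weighted_bid_factor(alpha_shallow=1.2, alpha_deep=0.8, weight=0.5))
```

With these inputs the combined factor is 1.0 (up to floating-point rounding), so neither cost target receives the correction its own controller asked for; both controllers then push harder in the next interval.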

In the formula above, the control logic of the deep bid factor fully reuses the shallow framework. In practice, this strategy turned out to have the following flaws:

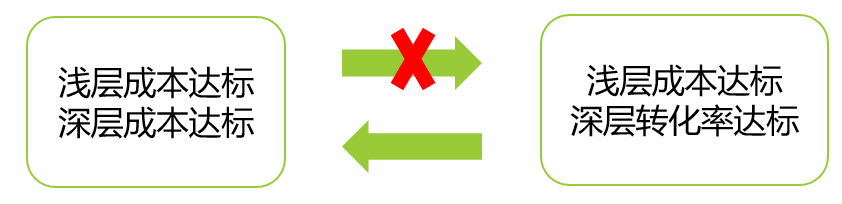

① Under this formula, shallow cost and deep cost affect each other, and weighting easily produces a seesaw effect: when the deep cost improves, the shallow cost worsens, and vice versa. The two can even oscillate cyclically.

② The bid factor is essentially a feedback controller driven by the current day's delivery data. No history-based prediction of each impression's deep effect enters the bid: the predicted deep conversion rate (pDCVR) does not appear in the formula, so bidding is controlled solely by the day's delivery performance.

We kept iterating to address these problems.

2.3 Pareto double bid

The weighted method optimizes shallow and deep cost jointly as one combined objective, whereas requiring each of them to meet its own target is essentially a Pareto optimality problem. We therefore reorganized the strategy around traffic value and target traffic, and used the pDCVR model, whose predictive ability was gradually maturing, to build a new dual-bid strategy that keeps both shallow and deep cost stably under control.

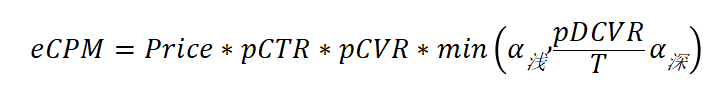

To make shallow cost and deep cost meet their targets at the same time, we introduced the predicted deep conversion rate pDCVR and adjusted the bidding formula to:

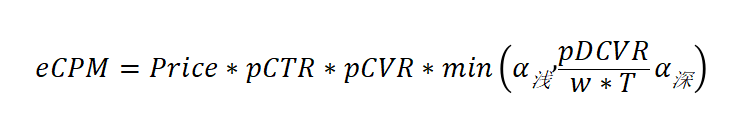

Because deep cost is strictly controlled and deep conversions are usually sparse, data accumulates slowly and volume is hard to scale, which hurts revenue. To address this, we relax the target rate with a correction coefficient w to improve delivery volume. At the same time, to protect the effect, w itself is controlled online in real time.
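The exact formula is only in the figure. One hedged reading, consistent with the problems the text goes on to describe (the shallow bid caps the bid for high-quality traffic, and w relaxes the deep constraint), is to bid the lesser of the shallow bid and the deep bid scaled by pDCVR and w. The functions below are a sketch under that assumption; their names are hypothetical and this is not the production formula.

```python
def pareto_conversion_bid(shallow_bid: float, deep_bid: float,
                          pdcvr: float, w: float) -> float:
    """Hypothetical bid per shallow conversion under the Pareto dual-bid idea.

    shallow_bid : advertiser's bid per shallow conversion (e.g. activation)
    deep_bid    : advertiser's bid per deep conversion (e.g. retained user)
    pdcvr       : predicted deep conversion rate of this impression
    w           : correction coefficient; w > 1 relaxes the deep constraint
    """
    # What one shallow conversion is worth if deep cost is to stay on target.
    deep_compliant = w * deep_bid * pdcvr
    # Taking the minimum keeps both the shallow and the deep cost on target.
    return min(shallow_bid, deep_compliant)


def pareto_dual_bid_ecpm(shallow_bid: float, deep_bid: float, pctr: float,
                         pcvr: float, pdcvr: float, w: float,
                         alpha: float = 1.0) -> float:
    bid = pareto_conversion_bid(shallow_bid, deep_bid, pdcvr, w)
    return alpha * bid * pctr * pcvr * 1000.0


for pdcvr in (0.05, 0.10, 0.30):
    print(pdcvr, pareto_conversion_bid(10.0, 100.0, pdcvr, w=1.0))
```

Under this reading, an impression with pDCVR = 0.3 would be worth 30 per shallow conversion on the deep bid alone, yet it is still bid at 10 because of the shallow cap.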

In theory, this formula strictly controls both deep and shallow cost. Because deep conversion data accumulates more slowly than shallow data, often by one or more orders of magnitude, during this period the pDCVR model ranked traffic fairly well but its absolute predictions were not accurate enough. We therefore used the deep prediction only indirectly, as a basis for judging traffic quality that in turn influences the deep bid factor. This formula solves the two problems from section 2.2 to a certain extent, but new problems arose:

① Balancing strict deep cost control against volume growth relies on the correction coefficient w, but the optimal w that achieves the desired balance is hard to determine.

② For traffic with high deep quality, the bid is capped at the bid that meets the shallow cost target. This cap is too conservative: compared with a deep-only single-bid model, it suppresses high-quality traffic and lowers the deep conversion rate.

③ Even when shallow cost and deep cost both meet their targets, the deep conversion rate is not guaranteed to meet its target. As the industry evolves, advertisers increasingly assess next-day retention rate, long-term retention rate and ROI, which are rate targets rather than simple deep cost targets.
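A small worked example makes problem ③ concrete. Since DCVR = deep conversions / shallow conversions = shallow cost / deep cost, one natural reading of the rate "implicit in the two bids" is shallow bid / deep bid. The numbers below are hypothetical, chosen only to show that both costs can be on target while the rate misses.

```python
def delivery_metrics(spend: float, shallow_convs: int, deep_convs: int):
    """Return (shallow cost, deep cost, actual deep conversion rate)."""
    shallow_cost = spend / shallow_convs
    deep_cost = spend / deep_convs
    dcvr = deep_convs / shallow_convs  # equals shallow_cost / deep_cost
    return shallow_cost, deep_cost, dcvr


SHALLOW_BID, DEEP_BID = 10.0, 100.0
implied_target_rate = SHALLOW_BID / DEEP_BID  # 0.10

shallow_cost, deep_cost, dcvr = delivery_metrics(spend=1000.0, shallow_convs=125, deep_convs=10)
print(shallow_cost <= SHALLOW_BID)   # True: 8.0 <= 10.0
print(deep_cost <= DEEP_BID)         # True: 100.0 <= 100.0
print(dcvr >= implied_target_rate)   # False: 0.08 < 0.10
```

Cheap shallow conversions combined with a deep cost that sits exactly on target push the rate below the implied target, which is why cost compliance alone does not cover rate-based assessments.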

These questions can be summarized as follows: how can we meet the shallow cost target while also meeting the deep conversion rate target? And how can we keep improving the effect while letting spend grow during delivery?

2.4 Adaptive double bidding

To solve these problems, beyond improving the accuracy of the pDCVR model in the long run, a more effective approach is to make breakthroughs in the traffic value evaluation mechanism. We therefore iterated on a new version of the bidding strategy with the following goals:

① Further refine traffic selection to optimize both deep cost and deep conversion rate.

② While refining traffic, strengthen the bidding power of high-quality traffic, so that volume does not shrink when the effect falls short.

On the bidding mechanism side, building on the steadily improving deep prediction model, we strengthened the role of pDCVR in the bid compared with the formula in section 2.3. This leads to a new bidding formula:

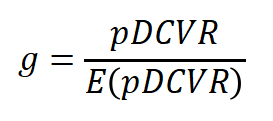

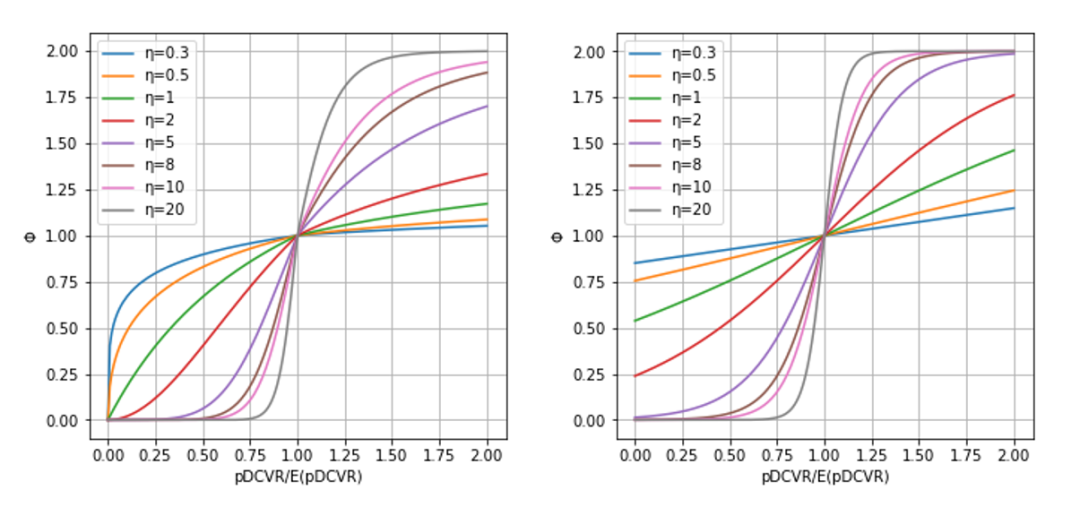

Here φ is an S-shaped function from which the final deep-effect control factor is computed. The figure shows two φ curves with different curvature. g can be selected as

η is the curvature control factor of the function, DCVR is the actual deep conversion rate of the current delivery, and T is the advertiser's target deep conversion rate.
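The article's choice of g is not reproduced here, so the sketch below substitutes a hypothetical normalized score, x = pDCVR / T − 1, and a logistic curve scaled to the range (0, 2). Both choices are assumptions; the point is only to show how a single curvature parameter η can move the curve from flat (no intervention) to steep (strong discrimination by pDCVR).

```python
import math


def phi(x: float, eta: float) -> float:
    """Hypothetical S-shaped control curve with output in (0, 2).

    phi(0, eta) == 1 for every eta, and eta = 0 gives a flat curve equal to 1.
    """
    z = max(-60.0, min(60.0, eta * x))  # clamp so math.exp stays in range
    return 2.0 / (1.0 + math.exp(-z))


def deep_control_factor(pdcvr: float, target_rate: float, eta: float) -> float:
    """Deep-effect multiplier for one impression (assumed form, not the article's g)."""
    x = pdcvr / target_rate - 1.0  # > 0 when predicted quality beats the target
    return phi(x, eta)


for eta in (0.0, 2.0, 10.0):
    factors = [round(deep_control_factor(p, target_rate=0.10, eta=eta), 3)
               for p in (0.05, 0.10, 0.20)]
    print(eta, factors)
```

With η = 0 every impression gets a factor of 1; as η grows, impressions predicted below the target rate are pushed toward 0 and those above it toward 2.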

Its bid control logic is as follows:

(1) When DCVR ≥ T, the curvature η takes its minimum value and the curve essentially lies flat along the x-axis. The value of φ is then roughly the same whether pDCVR is high or low, so there is no intervention in bidding, and the campaign can run as much volume as the shallow cost target allows.

(2) When DCVR < T, the controller raises η and the curve steepens. Traffic with low pDCVR is bid conservatively and traffic with high pDCVR is bid aggressively, so DCVR rises. As DCVR approaches T, the controller lowers η again, reaching a dynamic balance.

(3) When DCVR ≪ T, η is pushed to its maximum value: low-pDCVR traffic is abandoned during bidding and high-pDCVR traffic is bid aggressively. This drives E(pDCVR) up continuously and brings DCVR close to T, after which step (2) repeats to reach dynamic equilibrium.
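The three regimes above can be sketched as a simple curvature controller. The mapping below, from the relative DCVR shortfall to a requested η followed by exponential smoothing, is a hypothetical design chosen to reproduce the described behavior (minimum η on target, maximum η when DCVR ≪ T, η falling as DCVR approaches T); the parameter names and values are illustrative.

```python
def update_eta(eta: float, dcvr: float, target_rate: float,
               eta_min: float = 0.01, eta_max: float = 20.0,
               saturation: float = 0.5, smoothing: float = 0.3) -> float:
    """One control step for the curvature eta (hypothetical design).

    eta         : current curvature, assumed to lie in [eta_min, eta_max]
    dcvr        : actual deep conversion rate observed so far
    target_rate : advertiser's target deep conversion rate T
    saturation  : relative shortfall at which eta_max is requested ("DCVR << T")
    smoothing   : fraction of the way to move toward the requested eta per step
    """
    # Relative shortfall versus target; 0 when DCVR >= T (regime 1).
    shortfall = max(0.0, (target_rate - dcvr) / target_rate)
    # Larger shortfall -> steeper requested curve, capped at eta_max (regime 3).
    severity = min(1.0, shortfall / saturation)
    requested = eta_min + (eta_max - eta_min) * severity
    # Move only part of the way so bids do not jump between control intervals.
    return eta + smoothing * (requested - eta)


eta = 0.01
for observed in (0.04, 0.06, 0.08, 0.095, 0.10, 0.11):  # DCVR recovering toward T = 0.10
    eta = update_eta(eta, observed, target_rate=0.10)
    print(observed, round(eta, 3))
```

Because the requested η shrinks as the shortfall shrinks, η rises while DCVR is far below T and falls back toward its minimum once DCVR reaches T, matching regime (2).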

At this point, whatever the advertiser's back-end delivery goal, whether payment cost, next-day retention rate or first-day ROI, deep conversion prediction models such as payment-rate, next-day-retention-rate and payment-amount prediction let us effectively evaluate the deep value of each individual impression. Adaptive dual bidding uses pDCVR to identify high-quality traffic and relies on a nonlinear bidding strategy: when the effect falls short of the target, it discards some low-quality traffic while bidding more aggressively for high-quality traffic, adjusting itself adaptively. As a result, the effect keeps climbing and spend gradually and automatically shifts toward high-quality traffic. Some smaller details, such as the g function also taking audience tags into account, are beyond the scope of this article.

03

Online effect

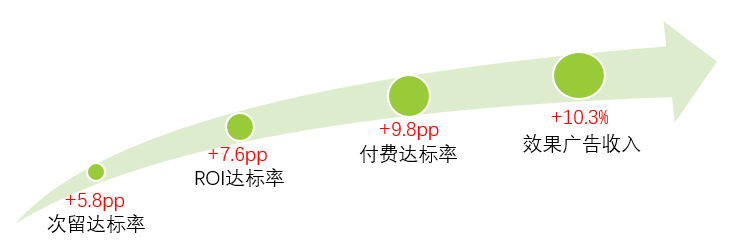

We launched multiple versions iteratively, and their improvements to performance advertising accumulated:

04

Summary and Outlook

Every bidding strategy rests on the predictive ability of the models and is closely tied to audience tag construction, budget allocation logic, bidding strategy design and more. As advertisers' assessment goals grow stricter and bidding models keep evolving, the traffic value evaluation system must be iterated continuously to keep up with business development. In the future, we will optimize further in the following directions:

Deep effect control under extremely sparse data

Effect optimization under deep conversion delay

Effect fluctuations caused by changes in the real-time bidding environment

Context-aware automated bidding framework

This article is shared from the WeChat public account - iQIYI Technology Product Team (iQIYI-TP).
