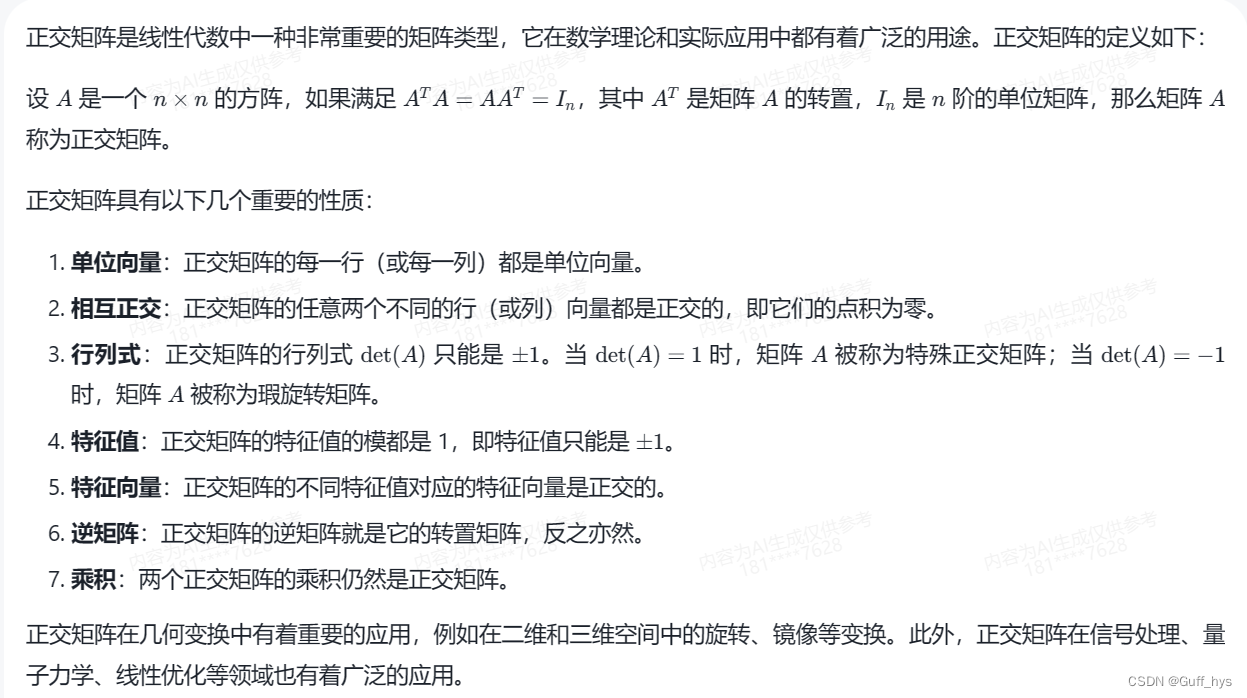

Orthogonal matrices are an important class of matrices in linear algebra, used widely in both mathematical theory and practical applications. An orthogonal matrix is defined as follows:

Let \( A \) be an \( n \times n \) square matrix. If \( A^TA = AA^T = I_n \), where \( A^T \) is the transpose of \( A \) and \( I_n \) is the \( n \times n \) identity matrix, then \( A \) is called an orthogonal matrix.
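
As a minimal sketch of the definition, the Python below checks \( A^TA = AA^T = I_n \) entrywise for a matrix stored as a list of rows. The helper names (`transpose`, `matmul`, `identity`, `is_orthogonal`) and the tolerance `1e-9` are illustrative assumptions, not part of any library; a tolerance is needed because floating-point products of sines and cosines are rarely exactly 0 or 1.

```python
from math import cos, sin, pi

def transpose(a):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*a)]

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def identity(n):
    """The n x n identity matrix I_n."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def is_orthogonal(a, tol=1e-9):
    """Check A^T A = A A^T = I_n entrywise, up to the floating-point tolerance tol."""
    n = len(a)
    if any(len(row) != n for row in a):
        return False  # only square matrices can be orthogonal
    eye = identity(n)
    for product in (matmul(transpose(a), a), matmul(a, transpose(a))):
        for i in range(n):
            for j in range(n):
                if abs(product[i][j] - eye[i][j]) > tol:
                    return False
    return True

theta = pi / 6
rotation = [[cos(theta), -sin(theta)], [sin(theta), cos(theta)]]
print(is_orthogonal(rotation))                  # True
print(is_orthogonal([[1.0, 1.0], [0.0, 1.0]]))  # False: a shear is not orthogonal
```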

Orthogonal matrices have the following important properties:

1. **Unit vectors**: Each row (and each column) of an orthogonal matrix is a unit vector, that is, it has Euclidean length 1.

2. **Mutual orthogonality**: Any two distinct rows (and any two distinct columns) of an orthogonal matrix are orthogonal, that is, their dot product is zero.

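3. **Determinant**: The determinant \( \det(A) \) of an orthogonal matrix can only be \( \pm 1 \), because \( \det(A)^2 = \det(A^T)\det(A) = \det(A^TA) = \det(I_n) = 1 \). When \( \det(A) = 1 \), \( A \) is called a special orthogonal matrix; these are exactly the rotation matrices. When \( \det(A) = -1 \), \( A \) reverses orientation and represents a reflection, possibly combined with a rotation (an improper rotation).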

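4. **Eigenvalues**: Every eigenvalue of an orthogonal matrix has modulus 1, so it lies on the unit circle in the complex plane. In particular, the only possible real eigenvalues are \( \pm 1 \); a 2D rotation by an angle \( \theta \), for example, has the complex eigenvalues \( \cos\theta \pm i\sin\theta \).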

5. **Eigenvectors**: Eigenvectors corresponding to distinct eigenvalues of an orthogonal matrix are orthogonal (with respect to the complex inner product when the eigenvectors are complex).

6. **Inverse matrix**: The inverse of an orthogonal matrix is its transpose, \( A^{-1} = A^T \); conversely, any invertible square matrix whose inverse equals its transpose is orthogonal.

7. **Product**: The product of two orthogonal matrices of the same order is again an orthogonal matrix.
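
Several of these properties can be checked numerically. The following is a small sketch in plain Python, assuming 2x2 matrices stored as lists of rows; the helpers `det2`, `inverse2`, `eigenvalues2`, and `close` are hypothetical names written for this example. The eigenvalues come from the quadratic characteristic polynomial \( \lambda^2 - \operatorname{tr}(A)\lambda + \det(A) = 0 \), which only applies to the 2x2 case.

```python
import cmath
from math import cos, sin, pi, isclose

def transpose(a):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*a)]

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def det2(a):
    """Determinant of a 2x2 matrix."""
    return a[0][0] * a[1][1] - a[0][1] * a[1][0]

def inverse2(a):
    """Inverse of an invertible 2x2 matrix via the adjugate formula."""
    d = det2(a)
    return [[a[1][1] / d, -a[0][1] / d], [-a[1][0] / d, a[0][0] / d]]

def eigenvalues2(a):
    """Roots of lambda^2 - tr(A) lambda + det(A) = 0, possibly complex."""
    tr = a[0][0] + a[1][1]
    disc = cmath.sqrt(tr * tr - 4 * det2(a))
    return ((tr + disc) / 2, (tr - disc) / 2)

def close(a, b, tol=1e-9):
    """Entrywise comparison of two same-shaped matrices up to tol."""
    return all(abs(x - y) <= tol for ra, rb in zip(a, b) for x, y in zip(ra, rb))

theta = pi / 3
rotation = [[cos(theta), -sin(theta)], [sin(theta), cos(theta)]]
reflection = [[1.0, 0.0], [0.0, -1.0]]  # mirror across the x-axis
identity = [[1.0, 0.0], [0.0, 1.0]]

# Property 3: det = +1 for the rotation, -1 for the reflection.
print(isclose(det2(rotation), 1.0), det2(reflection))        # True -1.0
# Property 6: the inverse equals the transpose.
print(close(inverse2(rotation), transpose(rotation)))        # True
# Property 7: the product of two orthogonal matrices is orthogonal.
product = matmul(rotation, reflection)
print(close(matmul(transpose(product), product), identity))  # True
# Property 4: eigenvalues have modulus 1; the rotation's are complex.
print([round(abs(lam), 9) for lam in eigenvalues2(rotation)])  # [1.0, 1.0]
print([lam.real for lam in eigenvalues2(reflection)])          # [1.0, -1.0]
```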

Orthogonal matrices have important applications in geometric transformations, such as rotations and reflections in two- and three-dimensional space. They are also widely used in signal processing, quantum mechanics, linear optimization, and other fields.
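
To illustrate the geometric use, the following hypothetical Python sketch applies a 2D rotation and a reflection to the vector \( (3, 4) \). Because \( \lVert A\mathbf{x} \rVert^2 = \mathbf{x}^T A^T A \mathbf{x} = \mathbf{x}^T \mathbf{x} \) for orthogonal \( A \), both transformations keep its length of 5. The helper names and the reflection formula (reflection across the line through the origin at angle \( \varphi \)) are assumptions chosen for this example.

```python
from math import cos, sin, pi, hypot, isclose

def apply(a, v):
    """Multiply a 2x2 matrix (list of rows) by a 2-vector."""
    return [a[0][0] * v[0] + a[0][1] * v[1], a[1][0] * v[0] + a[1][1] * v[1]]

def rotation(theta):
    """Counterclockwise rotation by theta radians; det = +1."""
    return [[cos(theta), -sin(theta)], [sin(theta), cos(theta)]]

def reflection(phi):
    """Reflection across the line through the origin at angle phi; det = -1."""
    return [[cos(2 * phi), sin(2 * phi)], [sin(2 * phi), -cos(2 * phi)]]

v = [3.0, 4.0]
rotated = apply(rotation(pi / 2), v)  # approximately [-4.0, 3.0]
mirrored = apply(reflection(0.0), v)  # reflected across the x-axis

# Orthogonal transformations preserve length: both results still have norm 5.
print(isclose(hypot(*rotated), 5.0), isclose(hypot(*mirrored), 5.0))  # True True
print(mirrored)  # [3.0, -4.0]
```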