HashMap Analysis

HashMap Source Code Analysis (JDK 1.8), Part 1

Hash

Hashing uses a hash algorithm to convert an input of arbitrary length into an output of fixed length, called the hash value. This conversion is a compressing mapping: the space of hash values is usually much smaller than the space of inputs. Different inputs may therefore hash to the same output, so the input cannot be uniquely determined from its hash value. Put simply, a hash function compresses a message of any length into a fixed-length message digest.
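To make the "different inputs, same output" point concrete, here is a small Java illustration based on String.hashCode(), which maps strings of any length into a 32-bit int. The pair "Aa" / "BB" is a well-known example of two different strings that share a hash code; the class name HashCollisionDemo is only for illustration.

```java
// Shows that a hash function compresses inputs into a fixed-size space
// (a 32-bit int), so two different inputs can produce the same hash value.
class HashCollisionDemo {
    static int hashOf(String s) {
        // String.hashCode() = s[0]*31^(n-1) + s[1]*31^(n-2) + ... + s[n-1]
        return s.hashCode();
    }

    public static void main(String[] args) {
        String a = "Aa";
        String b = "BB";
        System.out.println(a + " -> " + hashOf(a));          // Aa -> 2112
        System.out.println(b + " -> " + hashOf(b));          // BB -> 2112
        System.out.println("same input? " + a.equals(b));    // same input? false
    }
}
```

Since 'A' * 31 + 'a' = 65 * 31 + 97 and 'B' * 31 + 'B' = 66 * 31 + 66 both equal 2112, the hash value alone cannot tell the two strings apart.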

Comparison of the search, insertion and deletion performance of common data structures:

- Array: data is stored in a block of contiguous memory. Lookup by index takes O(1) time (searching for a value takes O(n), or O(log n) with binary search if the array is sorted). Insertion and deletion take O(n), because array elements have to be shifted.
- Linked list: search takes O(n), because the list has to be traversed. Insertion and deletion take O(1) once the position is known, because only the pointers need to be updated.
- Binary tree: for a reasonably balanced ordered binary tree, insertion, search and deletion take O(log n) on average.
- Hash table: adding, deleting and searching are very fast. Ignoring hash collisions, an element is located in a single step, so the time complexity is O(1).

The backbone of a hash table is an array.

For example, to add or find an element, we map the element's key to a position in the array with a function, and then complete the operation with a single access by array index.

Mapping function:

storage location = f(key)

The function f is generally called a hash function, and its design directly affects the quality of the hash table. Searching works the same way: first compute the actual storage address with the hash function, then fetch the element from that position in the array.
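A minimal sketch of storage location = f(key), assuming f(key) = floorMod(key.hashCode(), capacity). DirectHashTable is a hypothetical class, and it deliberately ignores collisions: a second key that lands on an occupied slot simply overwrites it. That problem is what the hash collision discussion below deals with.

```java
// Toy hash table illustrating "storage location = f(key)".
// Collisions are ignored on purpose: a later key mapping to the same slot
// overwrites the earlier one.
class DirectHashTable {
    private final String[] keys;
    private final Integer[] values;

    DirectHashTable(int capacity) {
        keys = new String[capacity];
        values = new Integer[capacity];
    }

    // Assumed hash function f: maps any key to an index in [0, capacity)
    int indexFor(String key) {
        return Math.floorMod(key.hashCode(), keys.length);
    }

    void put(String key, int value) {
        int i = indexFor(key);   // one hash computation...
        keys[i] = key;
        values[i] = value;       // ...and one array access
    }

    Integer get(String key) {
        int i = indexFor(key);
        // Check the stored key so an unrelated key in the slot is not returned
        return key.equals(keys[i]) ? values[i] : null;
    }

    public static void main(String[] args) {
        DirectHashTable t = new DirectHashTable(16);
        t.put("apple", 3);   // "apple".hashCode() = 93029210, slot 10
        t.put("pear", 5);    // "pear".hashCode() = 3436774, slot 6
        System.out.println(t.get("apple")); // 3
        System.out.println(t.get("pear"));  // 5
        System.out.println(t.get("fig"));   // null
    }
}
```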

Hash collision

Hashing improves efficiency by compressing data into a smaller space. However, a hash function can only produce a limited number of values, while the set of possible inputs may be much larger, so different data can still map to the same value after hashing. When this happens, a hash conflict (hash collision) occurs.
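Collisions also happen between keys whose hash codes differ, because the hash code still has to be reduced to a table index. The sketch below imitates how JDK 1.8's HashMap picks a bucket: it mixes the high 16 bits of hashCode() into the low bits (h ^ (h >>> 16), as HashMap.hash does) and then masks with table.length - 1. The class and method names are illustrative only.

```java
// Distinct hash values can still land in the same bucket once they are
// reduced to an index in a small table.
class BucketIndexDemo {
    // Same spreading step that JDK 1.8's HashMap.hash(Object) applies
    static int spread(Object key) {
        int h;
        return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);
    }

    // For a power-of-two table length n, (n - 1) & hash keeps the low bits,
    // which equals Math.floorMod(hash, n)
    static int indexFor(Object key, int tableLength) {
        return (tableLength - 1) & spread(key);
    }

    public static void main(String[] args) {
        int n = 16;
        System.out.println(indexFor(1, n));  // 1
        System.out.println(indexFor(17, n)); // 1  (collides with key 1)
        System.out.println(indexFor(2, n));  // 2
    }
}
```

Integer keys hash to their own value, so 1 and 17 have different hash codes but share bucket 1 in a 16-slot table.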

How to resolve hash collisions:

Open addressing

1) Linear probing: when a collision occurs, check the following slots of the table one by one until an empty slot is found or the whole table has been searched.

2) Quadratic probing: when a collision occurs, probe alternately on both sides of the original position: first add 1², then subtract 1², then add 2², subtract 2², add 3², and so on, until no collision occurs.

3) Pseudo-random probing: when a collision occurs, the probe offset is taken from a pseudo-random number sequence, repeating until no collision occurs.
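A minimal sketch of the first strategy, linear probing, using integer keys and an assumed hash function f(key) = floorMod(key, capacity). LinearProbingTable is a hypothetical name. Deletion is left out: in open addressing it needs a "deleted" marker (tombstone) so that later lookups do not stop early at the emptied slot.

```java
// Open addressing with linear probing: on a collision, try the next slot
// (wrapping around at the end) until a free slot or the same key is found.
class LinearProbingTable {
    private final Integer[] keys;
    private final String[] values;

    LinearProbingTable(int capacity) {
        keys = new Integer[capacity];
        values = new String[capacity];
    }

    // Assumed hash function: the key modulo the table length
    private int home(int key) {
        return Math.floorMod(key, keys.length);
    }

    // Returns false if the table is full and the key is not already present
    boolean put(int key, String value) {
        int start = home(key);
        for (int step = 0; step < keys.length; step++) {
            int i = (start + step) % keys.length;
            if (keys[i] == null || keys[i] == key) {  // free slot or same key
                keys[i] = key;
                values[i] = value;
                return true;
            }
        }
        return false;
    }

    String get(int key) {
        int start = home(key);
        for (int step = 0; step < keys.length; step++) {
            int i = (start + step) % keys.length;
            if (keys[i] == null) return null;      // empty slot: key is absent
            if (keys[i] == key) return values[i];  // found it
        }
        return null;                               // scanned the whole table
    }

    public static void main(String[] args) {
        LinearProbingTable t = new LinearProbingTable(8);
        t.put(1, "a");   // home slot 1
        t.put(9, "b");   // slot 1 taken, stored in slot 2
        t.put(17, "c");  // slots 1 and 2 taken, stored in slot 3
        System.out.println(t.get(9));   // b
        System.out.println(t.get(17));  // c
        System.out.println(t.get(25));  // null
    }
}
```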

-

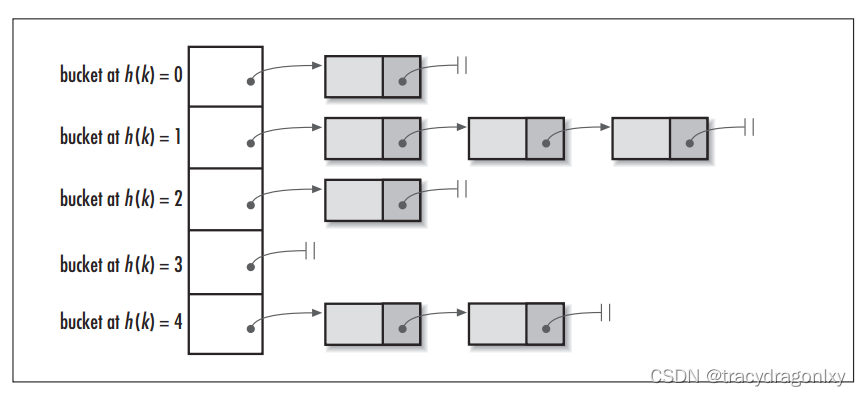

Chain address method (HashMap hash conflict resolution method)

All elements with hash address i form a singly linked list, and the head pointer of the singly linked list is stored in the i-th unit of the hash table, so search, insertion and deletion are mainly performed in the linked list.
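A minimal generic sketch of separate chaining, assuming f(key) = floorMod(key.hashCode(), capacity) and non-null keys. ChainedHashTable and its methods are hypothetical names; for brevity new keys are linked at the head of the chain, whereas JDK 1.8's HashMap appends to the tail.

```java
// Separate chaining: slot i stores the head of a singly linked list holding
// every entry whose key maps to index i.
class ChainedHashTable<K, V> {
    private static final class Node<K, V> {
        final K key;
        V value;
        Node<K, V> next;

        Node(K key, V value, Node<K, V> next) {
            this.key = key;
            this.value = value;
            this.next = next;
        }
    }

    private final Node<K, V>[] table;

    @SuppressWarnings("unchecked")
    ChainedHashTable(int capacity) {
        table = (Node<K, V>[]) new Node[capacity];
    }

    private int indexFor(K key) {
        return Math.floorMod(key.hashCode(), table.length);
    }

    void put(K key, V value) {
        int i = indexFor(key);
        for (Node<K, V> n = table[i]; n != null; n = n.next) {
            if (n.key.equals(key)) {  // existing key: replace the value
                n.value = value;
                return;
            }
        }
        table[i] = new Node<>(key, value, table[i]);  // new key: link at head
    }

    V get(K key) {
        for (Node<K, V> n = table[indexFor(key)]; n != null; n = n.next) {
            if (n.key.equals(key)) return n.value;
        }
        return null;
    }

    int chainLength(int index) {
        int len = 0;
        for (Node<K, V> n = table[index]; n != null; n = n.next) len++;
        return len;
    }

    public static void main(String[] args) {
        ChainedHashTable<Integer, String> t = new ChainedHashTable<>(16);
        t.put(1, "one");
        t.put(17, "seventeen");  // same slot as 1, since 17 mod 16 == 1
        System.out.println(t.get(1));          // one
        System.out.println(t.get(17));         // seventeen
        System.out.println(t.chainLength(1));  // 2
    }
}
```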

Rehashing

When a collision occurs, the key is hashed again with a different hash function, and this is repeated until no collision occurs.
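A minimal sketch of rehashing, assuming the table is given a fixed sequence of hash functions that it tries in order. RehashTable and the two hash functions in main are hypothetical and chosen only so the numbers are easy to follow; a real scheme needs a fallback (such as resizing) once every function has collided.

```java
import java.util.function.IntUnaryOperator;

// Rehashing: keep several different hash functions and, on a collision,
// try the next one until an empty slot is found.
class RehashTable {
    private final Integer[] slots;
    private final IntUnaryOperator[] hashes;

    RehashTable(int capacity, IntUnaryOperator... hashes) {
        this.slots = new Integer[capacity];
        this.hashes = hashes;
    }

    // Returns the slot used, or -1 if every candidate slot is occupied
    int insert(int key) {
        for (IntUnaryOperator h : hashes) {
            int i = Math.floorMod(h.applyAsInt(key), slots.length);
            if (slots[i] == null) {
                slots[i] = key;
                return i;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        // Hypothetical hash functions: h1(k) = k, h2(k) = 31k + 7
        RehashTable t = new RehashTable(10, k -> k, k -> k * 31 + 7);
        System.out.println(t.insert(3));   // 3   (h1)
        System.out.println(t.insert(13));  // 0   (h1 hits slot 3; h2 = 410)
        System.out.println(t.insert(23));  // -1  (slots 3 and 0 both taken)
    }
}
```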

Public overflow area

The hash table is divided into two parts, a base table and an overflow table. Every element that collides in the base table is placed in the overflow table.
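A minimal sketch of a public overflow area for integer keys, assuming f(key) = floorMod(key, capacity). OverflowAreaTable is a hypothetical name, and the overflow area is modeled as a plain ArrayList that is scanned linearly.

```java
import java.util.ArrayList;
import java.util.List;

// Public overflow area: one slot per index in the base table, plus a shared
// overflow list that receives every key colliding in the base table.
class OverflowAreaTable {
    private final Integer[] base;
    private final List<Integer> overflow = new ArrayList<>();

    OverflowAreaTable(int capacity) {
        base = new Integer[capacity];
    }

    void insert(int key) {
        int i = Math.floorMod(key, base.length);
        if (base[i] == null) {
            base[i] = key;        // no conflict: store in the base table
        } else {
            overflow.add(key);    // conflict: store in the overflow area
        }
    }

    boolean contains(int key) {
        int i = Math.floorMod(key, base.length);
        if (base[i] != null && base[i] == key) return true;
        return overflow.contains(key);   // linear scan of the overflow area
    }

    int overflowSize() {
        return overflow.size();
    }

    public static void main(String[] args) {
        OverflowAreaTable t = new OverflowAreaTable(8);
        t.insert(1);   // base slot 1
        t.insert(9);   // collides at slot 1, goes to overflow
        t.insert(17);  // collides at slot 1, goes to overflow
        t.insert(2);   // base slot 2
        System.out.println(t.contains(17));   // true
        System.out.println(t.contains(25));   // false
        System.out.println(t.overflowSize()); // 2
    }
}
```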

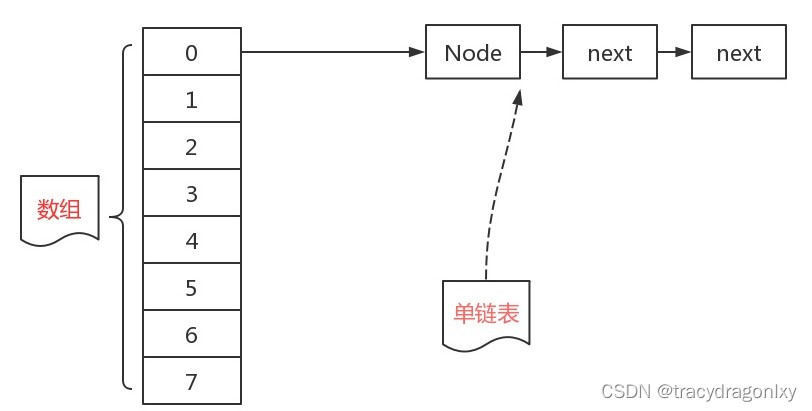

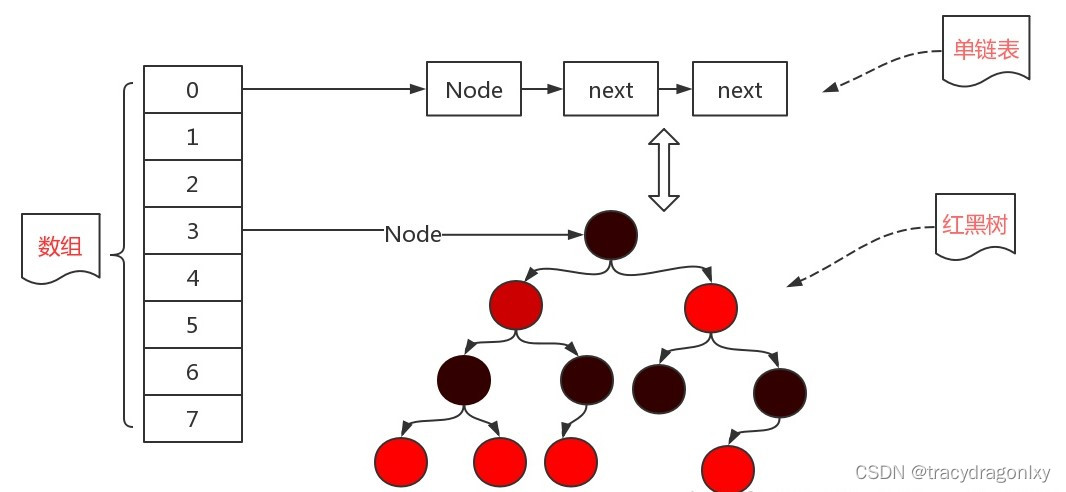

HashMap data structure

Under the hood, HashMap is implemented with an array + linked lists + red-black trees (the red-black tree part was added in JDK 1.8).

As the picture above shows, the left side is an array, and each slot of the array holds a linked list. Every element stored in this structure contains a pointer that links it to the next element in the same list.

The data structure of HashMap in JDK 1.7: array + linked list.

The data structure of HashMap in JDK 1.8: array + linked list + red-black tree. A bin's linked list is converted into a red-black tree only when the list grows beyond 8 nodes (TREEIFY_THRESHOLD) and the array length is at least 64 (MIN_TREEIFY_CAPACITY); while the array is shorter than 64, HashMap resizes the array instead.
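As a hedged illustration of that rule, the hypothetical helper below mirrors, as we read the JDK 1.8 source (putVal and treeifyBin), the decision made right after a node is appended to a bin. Here binLength is assumed to be the list length after the append; TreeifyDecision and Action are illustrative names, not JDK APIs.

```java
// Hypothetical helper mirroring JDK 1.8 HashMap's choice after a node is
// appended to a bin's linked list: keep the list, resize, or treeify.
class TreeifyDecision {
    static final int TREEIFY_THRESHOLD = 8;
    static final int MIN_TREEIFY_CAPACITY = 64;

    enum Action { KEEP_LIST, RESIZE, TREEIFY }

    static Action afterInsert(int binLength, int tableLength) {
        if (binLength <= TREEIFY_THRESHOLD) return Action.KEEP_LIST;
        // Long chain in a small table: resizing spreads the chain across
        // more buckets, so it is preferred over building a tree
        if (tableLength < MIN_TREEIFY_CAPACITY) return Action.RESIZE;
        return Action.TREEIFY;
    }

    public static void main(String[] args) {
        System.out.println(afterInsert(8, 64));  // KEEP_LIST
        System.out.println(afterInsert(9, 16));  // RESIZE
        System.out.println(afterInsert(9, 64));  // TREEIFY
    }
}
```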

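In JDK 1.8 the backbone of HashMap is an array of Node objects (JDK 1.7 called this class Entry). Node is the basic building block of HashMap: each Node holds one key-value pair, together with the key's hash and a next pointer to the following node in the same bucket. Node is a static nested class of HashMap that implements Map.Entry. The code is shown below: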

```java
static class Node<K,V> implements Map.Entry<K,V> {
    final int hash;
    final K key;
    V value;
    Node<K,V> next;

    Node(int hash, K key, V value, Node<K,V> next) {
        this.hash = hash;
        this.key = key;
        this.value = value;
        this.next = next;
    }

    public final K getKey()        { return key; }
    public final V getValue()      { return value; }
    public final String toString() { return key + "=" + value; }

    public final int hashCode() {
        return Objects.hashCode(key) ^ Objects.hashCode(value);
    }

    public final V setValue(V newValue) {
        V oldValue = value;
        value = newValue;
        return oldValue;
    }

    public final boolean equals(Object o) {
        if (o == this)
            return true;
        if (o instanceof Map.Entry) {
            Map.Entry<?,?> e = (Map.Entry<?,?>)o;
            if (Objects.equals(key, e.getKey()) &&
                Objects.equals(value, e.getValue()))
                return true;
        }
        return false;
    }
}
```
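A quick check of the hashCode() and toString() shown above against a real HashMap entry. The entry returned by entrySet() is one of these nodes; the class EntryHashDemo and its helper methods are illustrative names.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Checks that an entry's hash code is hash(key) XOR hash(value) and that
// toString() prints "key=value", as in the Node source above.
class EntryHashDemo {
    static int expectedEntryHash(Object key, Object value) {
        return Objects.hashCode(key) ^ Objects.hashCode(value);
    }

    static Map.Entry<String, Integer> firstEntry() {
        Map<String, Integer> map = new HashMap<>();
        map.put("a", 1);
        return map.entrySet().iterator().next();
    }

    public static void main(String[] args) {
        Map.Entry<String, Integer> e = firstEntry();
        System.out.println(e);              // a=1
        System.out.println(e.hashCode());   // 96  ("a".hashCode() = 97, 97 ^ 1 = 96)
        System.out.println(e.hashCode() == expectedEntryHash(e.getKey(), e.getValue())); // true
    }
}
```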

HashMap related variables

The HashMap class has the following main member variables:

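```java
/**
 * Default initial capacity; must be a power of two.
 */
static final int DEFAULT_INITIAL_CAPACITY = 1 << 4; // aka 16

/**
 * Maximum capacity of the node array: 2^30 = 1073741824.
 * MUST be a power of two <= 1<<30.
 */
static final int MAXIMUM_CAPACITY = 1 << 30;

/**
 * Default load factor, which measures how full the HashMap is allowed to get.
 */
static final float DEFAULT_LOAD_FACTOR = 0.75f;

/**
 * Threshold for converting a linked list into a tree: when a list grows
 * beyond 8 nodes, it is converted into a red-black tree.
 */
static final int TREEIFY_THRESHOLD = 8;

/**
 * Threshold for converting a tree back into a linked list: 6 or fewer nodes.
 * (During a resize transfer, two counters lc and hc, both starting at 0, count
 * the TreeNodes that go to the original bin and to the new bin; if a count is
 * <= UNTREEIFY_THRESHOLD, that bin is untreeified, e.g. untreeify(lo).)
 */
static final int UNTREEIFY_THRESHOLD = 6;

/**
 * The smallest table capacity for which bins may be treeified.
 * (Otherwise the table is resized if too many nodes in a bin.)
 * Should be at least 4 * TREEIFY_THRESHOLD to avoid conflicts
 * between resizing and treeification thresholds.
 */
static final int MIN_TREEIFY_CAPACITY = 64;

/* ---------------- Fields -------------- */

/**
 * The array that stores the nodes; once allocated, its length is always
 * a power of two.
 */
transient Node<K,V>[] table;

/**
 * Holds cached entrySet(). Note that AbstractMap fields are used
 * for keySet() and values().
 */
transient Set<Map.Entry<K,V>> entrySet;

/**
 * Number of key-value pairs in the map.
 */
transient int size;

/**
 * Number of times the map has been structurally modified (entries added or removed).
 *
 * When the map is traversed with an iterator, or with a for-each loop (which
 * calls the iterator methods implicitly), calling put or remove on the map
 * during the traversal changes modCount, while the iterator still holds the
 * value recorded before iteration began (expectedModCount). If the two differ,
 * an exception is thrown, because the map was modified by another path (for
 * example another thread) during iteration. Such modification is risky, so to
 * keep results correct the iterator fails immediately as soon as modCount and
 * expectedModCount disagree.
 *
 * This field is used to make iterators on Collection-views of
 * the HashMap fail-fast. (See ConcurrentModificationException).
 */
transient int modCount;

/**
 * The size at which the next resize happens: when the number of key-value
 * pairs exceeds threshold, HashMap expands its capacity.
 * threshold = capacity * load factor.
 */
int threshold;

/**
 * The load factor for the hash table.
 */
final float loadFactor;
```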
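A worked example of threshold = capacity * load factor with the defaults above (capacity 16, load factor 0.75): the threshold is 12, so inserting the 13th key-value pair (size 13 > 12) triggers a resize to 32. ThresholdDemo is an illustrative name; this toy helper simply multiplies, while the JDK's resize() usually doubles the old threshold instead, which yields the same numbers for these defaults.

```java
// Worked example of threshold = capacity * loadFactor, assuming the default
// capacity (16) and load factor (0.75) and a doubling on each resize.
class ThresholdDemo {
    static int threshold(int capacity, float loadFactor) {
        return (int) (capacity * loadFactor);
    }

    public static void main(String[] args) {
        int capacity = 16;
        float loadFactor = 0.75f;
        for (int i = 0; i < 3; i++) {
            System.out.println("capacity " + capacity + " -> threshold "
                    + threshold(capacity, loadFactor));
            capacity <<= 1;  // HashMap doubles the table length on each resize
        }
        // capacity 16 -> threshold 12
        // capacity 32 -> threshold 24
        // capacity 64 -> threshold 48
    }
}
```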

size and capacity

HashMap can be pictured as a set of "buckets": capacity is the number of buckets (the length of the table array), and size is the number of key-value pairs currently stored in them.

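```java
import java.lang.reflect.Field;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.util.HashMap;

public class CapacityDemo {
    public static void main(String[] args) {
        HashMap<Integer, Integer> map = new HashMap<>();
        map.put(1, 1);
        try {
            Class<?> mapClass = map.getClass();
            // capacity() is a package-private method of HashMap
            Method capacity = mapClass.getDeclaredMethod("capacity");
            capacity.setAccessible(true);
            System.out.println("capacity: " + capacity.invoke(map));
            // size is a private (transient) field of HashMap
            Field size = mapClass.getDeclaredField("size");
            size.setAccessible(true);
            System.out.println("size: " + size.get(map));
        } catch (NoSuchMethodException | IllegalAccessException | InvocationTargetException | NoSuchFieldException e) {
            e.printStackTrace();
        }
    }
}
```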

```text
capacity: 16
size: 1
```

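We create a new HashMap, put one element into it, and then print its capacity and size through reflection: the capacity is 16 and the size is 1. Because capacity() and size are internal members of HashMap, on JDK 16 and later setAccessible(true) throws an InaccessibleObjectException unless the program is run with --add-opens java.base/java.util=ALL-UNNAMED.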

By default, the capacity of a HashMap is 16. If the user passes an initial capacity to the constructor, HashMap uses the smallest power of two that is greater than or equal to that number, computed by tableSizeFor (1 -> 1, 7 -> 8, 9 -> 16).

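```java
/**
 * Returns a power of two size for the given target capacity.
 */
static final int tableSizeFor(int cap) {
    int n = cap - 1;  // subtract 1 so that an exact power of two maps to itself
    // Each step copies the highest 1-bit into the bits below it; after the
    // five steps, every bit below the highest 1-bit is set
    n |= n >>> 1;
    n |= n >>> 2;
    n |= n >>> 4;
    n |= n >>> 8;
    n |= n >>> 16;
    // n now has the form 0...011...1, so n + 1 is a power of two
    return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
}
```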
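To see the bit manipulation step by step, here is a standalone copy of the tableSizeFor logic (with MAXIMUM_CAPACITY = 1 << 30 as above), rewritten as a loop over the same five shifts and instrumented to print n in binary. TableSizeForTrace and the trace flag are illustrative additions, not part of HashMap.

```java
// Standalone copy of tableSizeFor, with an optional trace of n after each
// shift-and-OR step.
class TableSizeForTrace {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    static int tableSizeFor(int cap, boolean trace) {
        int n = cap - 1;
        for (int shift = 1; shift <= 16; shift <<= 1) {  // shifts 1, 2, 4, 8, 16
            n |= n >>> shift;  // smear the highest 1-bit into lower positions
            if (trace) {
                System.out.println(">>> " + shift + ": " + Integer.toBinaryString(n));
            }
        }
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    public static void main(String[] args) {
        // cap = 9: n starts as 8 (1000), becomes 1100, then 1111, result 16
        System.out.println(tableSizeFor(9, true));
        for (int cap : new int[] {1, 7, 8, 9, 16, 17}) {
            System.out.println(cap + " -> " + tableSizeFor(cap, false));
        }
        // 1 -> 1, 7 -> 8, 8 -> 8, 9 -> 16, 16 -> 16, 17 -> 32
    }
}
```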

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.util.HashMap;

public class TableSizeDemo {
    public static void main(String[] args) {
        HashMap<Integer, Integer> map1 = new HashMap<>(1);
        HashMap<Integer, Integer> map2 = new HashMap<>(7);
        HashMap<Integer, Integer> map3 = new HashMap<>(9);
        demoCapacity(map1);
        demoCapacity(map2);
        demoCapacity(map3);
    }

    private static void demoCapacity(HashMap<Integer, Integer> map) {
        try {
            Class<?> mapClass = map.getClass();
            // The table is allocated lazily, so for an empty map capacity()
            // reports the value tableSizeFor() stored in threshold
            Method capacity = mapClass.getDeclaredMethod("capacity");
            capacity.setAccessible(true);
            System.out.println("capacity: " + capacity.invoke(map));
        } catch (NoSuchMethodException | IllegalAccessException | InvocationTargetException e) {
            e.printStackTrace();
        }
    }
}
```

```text
capacity: 1
capacity: 8
capacity: 16
```
