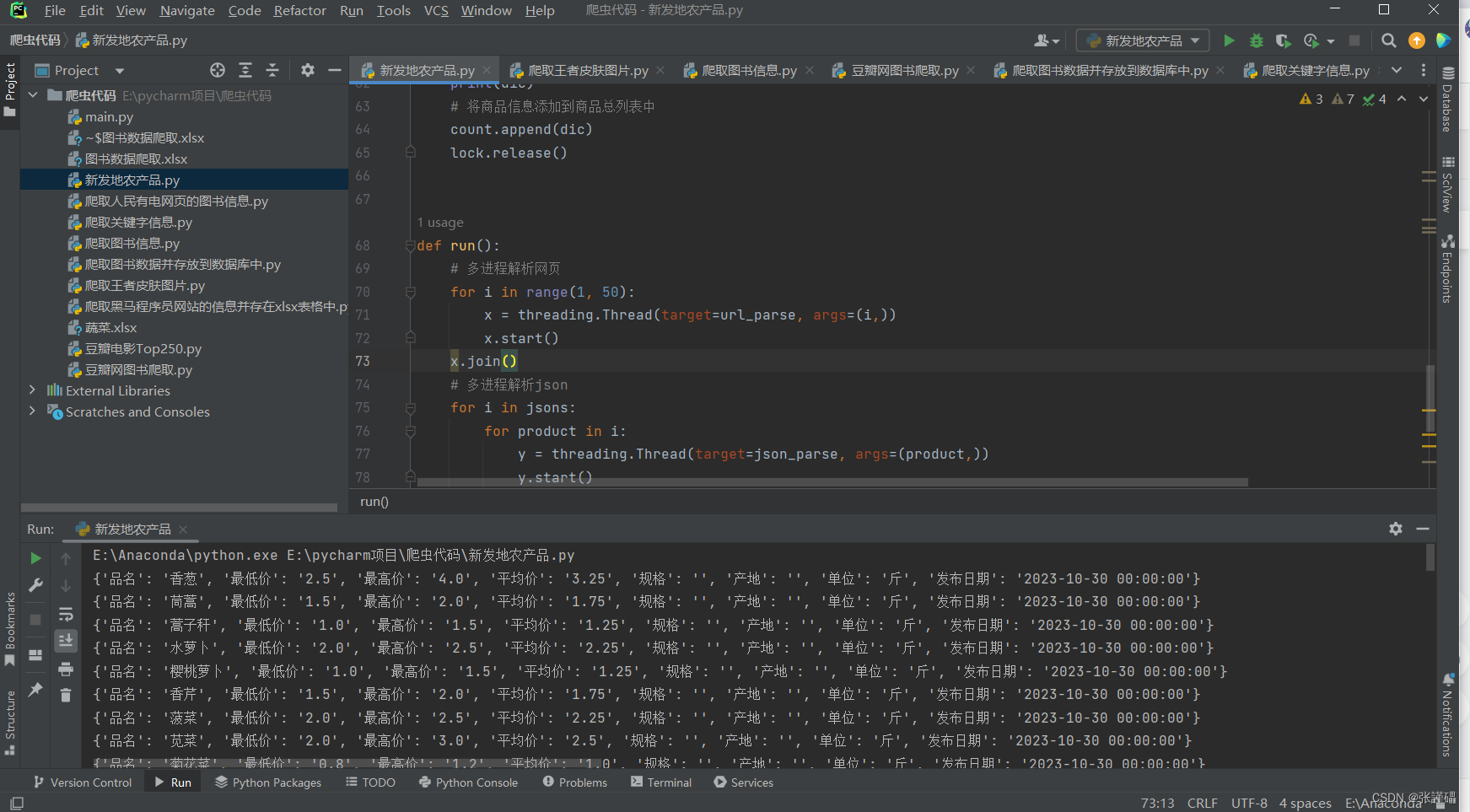

This code is a program that crawls Xinfadi vegetable price information. It uses multi-threading to speed up data acquisition and analysis. The specific steps are as follows:

- Import the required libraries: json, requests, threading and pandas.

- Initialize some variables, including the number of pages, the total list of products, and the list to store json data.

- defines a function

url_parse()for sending requests and parsing web page data. The function uses therequests.post()method to send a POST request, obtain product information, and save it to thejsonslist. - defines a function

json_parse()for parsing json data and saving product information to the total product listcount. - defines a main function

run(), in which multi-threading is used to call the above two functions to achieve concurrent parsing of web pages and json data. - In the main function, first use a loop to create multiple threads to parse web page data concurrently, and implement this by calling the

url_parse()function. - Then, use a loop again to create multiple threads to parse the json data concurrently, and implement this by calling the

json_parse()function. - Finally, convert the total list of products

countinto a DataFrame object, and then use the pandas library to save it as an Excel file.

The code can be divided into the following parts for block analysis:

- Import library

import json

import requests

import threading

import pandas as pd

In this part, the libraries that need to be used are imported json, requests, threading and pandas.

- Define global variables and functions

page = 1

count = list()

jsons = list()

def url_parse(page):

...

def json_parse(product):

...

def run():

...

This part defines global variables page, count and jsons, which are used to store crawled data.

then defines two functions url_parse and json_parse, which are used to parse web pages and parse JSON data respectively.

finally defines the run function, which is used to call the url_parse and json_parse functions, and process and save data.

- Parse web page functions

url_parse

def url_parse(page):

url = 'http://www.xinfadi.com.cn/getPriceData.html'

headers = {

...

}

data = {

...

}

response = requests.post(url=url, headers=headers, data=data).text

response = json.loads(response)['list']

lock = threading.RLock()

lock.acquire()

jsons.append(response)

lock.release()

This function is used to parse web page data. Get the JSON data of the web page by sending a POST request. Then store the product information in JSON as a list and add the list to the global variable jsons .

- Parse JSON function

json_parse

def json_parse(product):

lock = threading.RLock()

lock.acquire()

dic = {'品名': product['prodName'], "最低价": product['lowPrice'], '最高价': product['highPrice'],

'平均价': product['avgPrice'], '规格': product['specInfo'], '产地': product['place'], '单位': product['unitInfo'],

'发布日期': product['pubDate']}

print(dic)

count.append(dic)

lock.release()

This function is used to parse JSON data. For each product, parse its information into a dictionary and add the dictionary to the global variable count .

- Main program part

if __name__ == '__main__':

run()

In this part, first call the run function to execute the main program.

In the main program, the run function is first called using multi-threading. The url_parse function obtains all web page data, and then is called using multi-threading. json_parse The function parses all JSON data.

Finally, convert the count list into pandas of DataFrame and save it as an Excel file.

Complete code:

import json

import requests

import threading

import pandas as pd

# 新发地官网:http://www.xinfadi.com.cn/priceDetail.html

# 页数

page = 1

# 商品总列表

count = list()

# json列表

jsons = list()

# 解析网页函数

def url_parse(page):

# 请求地址

url = 'http://www.xinfadi.com.cn/getPriceData.html'

headers = {

"Accept": "*/*",

"Accept-Encoding": "gzip, deflate",

"Accept-Language": "zh-CN,zh;q=0.9",

"Cache-Control": "no-cache",

"Connection": "keep-alive",

"Content-Length": "89",

"Content-Type": "application/x-www-form-urlencoded; charset=UTF-8",

"Host": "www.xinfadi.com.cn",

"Origin": "http://www.xinfadi.com.cn",

"Pragma": "no-cache",

"Referer": "http://www.xinfadi.com.cn/priceDetail.html",

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.107 Safari/537.36",

"X-Requested-With": "XMLHttpRequest",

}

data = {

"limit": "20",

"current": page,

"pubDateStartTime": "",

"pubDateEndTime": "",

"prodPcatid": "1186", # 商品类id

"prodCatid": "",

"prodName": "",

}

response = requests.post(url=url, headers=headers, data=data).text

# 获取商品信息

response = json.loads(response)['list']

# 生成线程锁对象

lock = threading.RLock()

# 上锁

lock.acquire()

# 添加到json列表中

jsons.append(response)

# 解锁

lock.release()

# 解析json函数

def json_parse(product):

lock = threading.RLock()

lock.acquire()

dic = {'品名': product['prodName'], "最低价": product['lowPrice'], '最高价': product['highPrice'],

'平均价': product['avgPrice'], '规格': product['specInfo'], '产地': product['place'], '单位': product['unitInfo'],

'发布日期': product['pubDate']}

print(dic)

# 将商品信息添加到商品总列表中

count.append(dic)

lock.release()

def run():

# 多进程解析网页

for i in range(1, 50):

x = threading.Thread(target=url_parse, args=(i,))

x.start()

x.join()

# 多进程解析json

for i in jsons:

for product in i:

y = threading.Thread(target=json_parse, args=(product,))

y.start()

y.join()

# 生成excel

data = pd.DataFrame(count)

data.to_excel('蔬菜.xlsx', index=None)

if __name__ == '__main__':

run()

![]()