Ethereum 2.0 basic architecture understanding

It has been four years since the improvements known as Ethereum (hereinafter eth) 2.0 were proposed in 2018. The existing eth 1.0 is a chain based on PoW consensus. PoW consensus has the following main problems:

It wastes energy.

Performance is low: it can process only a dozen or so transactions per second.

Every node has to store the complete data.

Computing power is concentrated: a large chip manufacturer could in theory gather enough computing power for a 51% attack.

The core changes in eth 2.0 are the replacement of PoW with PoS and the introduction of sharding chains. The main goals of eth 2.0 are:

- Shard data reads and writes, so that block data no longer has to be read and written on a single node.

- Improve TPS through the PoS algorithm.

- Prevent over-centralization.

- Be open to ordinary users: any consumer-grade machine (including a Raspberry Pi) can become a validator node as long as it stakes 32 ETH.

The following is the basic architecture of eth 2.0.

In the figure, the beacon chain is a separate chain that coordinates all the sharding chains. Note shard 1 through shard 100 in the figure: they indicate that a beacon chain block is responsible for managing shard blocks 1 to 100.

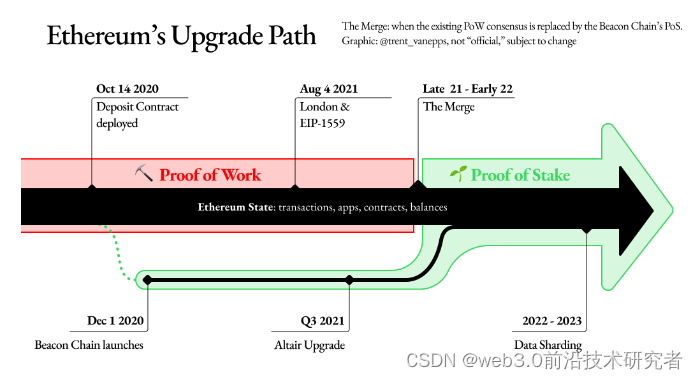

The move from eth 1.0 to 2.0 is rolled out in phases. The schedule starts in 2018 (and has obviously been delayed for a long time).

Phase 0: Build the beacon chain network.

Phase 1: Build a sharded network to allow data to be read and written into shards.

Phase 2: EVM can support sharded networks.

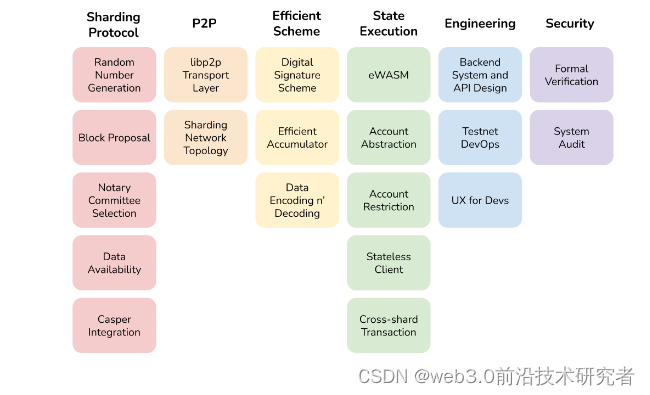

The following are some technical details involved in each upgrade. You can go here to learn more about the specific technologies.

Beacon chain network

The beacon chain network is a critical link in eth 2.0 as a whole. It handles validator registration and staking, and randomly assigns validators to committees for PoS voting. To keep block production random and hard to predict, eth 2.0 introduced RANDAO (a random number generator; the name combines "random" and "DAO"). In brief, it works as follows:

Imagine a group of people sitting in a room, each calling out an arbitrary number. The last person to speak adds their own number to the sum of everyone else's, and the total is used as the random seed. To stop that last person from cheating by choosing a number whose effect they can predict, the seed is passed through a round of VDF (Verifiable Delay Function) computation to obtain the final random number.

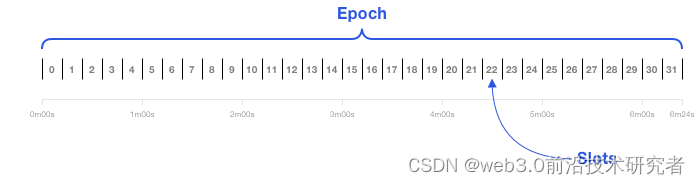
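As a rough illustration of the room analogy, the Python sketch below sums the contributions and then runs the seed through a slow, sequential function. The names `mix_contributions` and `toy_delay_function` are made up for this example, and repeated SHA-256 hashing is only a stand-in for a real VDF: it is slow to compute but, unlike a VDF, not cheap to verify.

```python
import hashlib


def mix_contributions(contributions):
    """Add up every participant's number, as in the room analogy (mod 2**256)."""
    return sum(contributions) % (1 << 256)


def toy_delay_function(seed, iterations=1000):
    """Stand-in for a VDF: a chain of SHA-256 hashes that must run sequentially.

    A real VDF is also cheap to verify; this toy version is not.
    """
    data = seed.to_bytes(32, "big")
    for _ in range(iterations):
        data = hashlib.sha256(data).digest()
    return int.from_bytes(data, "big")


# Three people in the room each call out a number.
seed = mix_contributions([7, 42, 1337])
random_value = toy_delay_function(seed)
```

Because the delay step takes time, the last contributor cannot try many candidate numbers and keep only the one that gives a favorable result.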

The beacon chain is itself a blockchain, so validators must vote on each block through the PoS consensus algorithm. Time is divided into slots of 12 seconds, and one block can be produced in each slot. 32 slots form an epoch. The roles of slots and epochs are explained further below; let's look at the voting process first.
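The timing arithmetic can be sketched in a few lines of Python. The helper names `slot_at` and `epoch_of` are illustrative, and `genesis_time` is assumed to be a Unix timestamp in seconds.

```python
SECONDS_PER_SLOT = 12
SLOTS_PER_EPOCH = 32


def slot_at(genesis_time, timestamp):
    """Slot number that contains the given timestamp."""
    return (timestamp - genesis_time) // SECONDS_PER_SLOT


def epoch_of(slot):
    """Epoch number that contains the given slot."""
    return slot // SLOTS_PER_EPOCH


# One epoch lasts 32 slots of 12 seconds each: 384 seconds, or 6.4 minutes.
epoch_seconds = SECONDS_PER_SLOT * SLOTS_PER_EPOCH
```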

In each epoch, validators are randomly assigned to committees for voting. In each slot one validator is randomly selected as the proposer, who produces the block. The other validators vote (PoS) on whether the block is valid. If more than two-thirds of the votes are in favor, the block passes; if more than one-third are against, consensus cannot be reached (Byzantine consensus). A validator can be both proposer and committee member at the same time, but this is rare.
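The two thresholds can be written as a small Python function. This is a minimal sketch that counts one vote per validator, as the text does; the function name `vote_outcome` and its return labels are invented for the example. Integer arithmetic is used so that "more than two-thirds" is checked exactly, without floating-point rounding.

```python
def vote_outcome(in_favor, against, total):
    """Classify a vote using the two-thirds and one-third thresholds."""
    if 3 * in_favor > 2 * total:
        return "passed"      # more than 2/3 in favor
    if 3 * against > total:
        return "blocked"     # more than 1/3 against: 2/3 can no longer be reached
    return "undecided"
```

For example, `vote_outcome(67, 10, 100)` passes, while exactly two-thirds in favor, as in `vote_outcome(2, 1, 3)`, is still undecided under the strict "more than" reading.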

To prevent collusion, each validator is randomly assigned to only one committee per epoch, so it never votes on two consecutive blocks. eth 2.0 relies on this random assignment to make a committee takeover by colluding validators extremely unlikely: the figure usually quoted is a probability of less than one in a trillion that an attacker holding one-third of all validators ends up controlling two-thirds of a committee.
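A minimal sketch of the "one committee per validator per epoch" rule is shown below. It uses Python's seeded `random.Random` as a stand-in for the beacon chain's own shuffling, and `assign_committees` is a name chosen for this example. In the real protocol the seed would come from RANDAO rather than a hard-coded number.

```python
import random


def assign_committees(validators, committee_count, seed):
    """Shuffle validators with a seed, then deal them into committees."""
    rng = random.Random(seed)
    shuffled = list(validators)
    rng.shuffle(shuffled)
    # Dealing round-robin puts every validator in exactly one committee.
    return [shuffled[i::committee_count] for i in range(committee_count)]


committees = assign_committees(range(128), committee_count=4, seed=2024)
```

Calling the function again with a different seed models the reshuffle that happens at the end of each epoch.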

From the above we can see that slots keep validators synchronized in a distributed environment. Splitting the voting across slots also reduces the opportunity for validators to collude. After each epoch, validators are reshuffled into new committees.

The beacon chain currently runs only PoS voting and verification, and I have not seen any shard block data on it. For details, see beaconscan. I also haven't seen a concrete plan for how the beacon chain and the sharding chains will be combined (please leave a comment if you can add one). However, the community has discussed sharding chains a great deal, so we can take a preliminary look.

Sharding chain

eth 2.0 splits the chain into 64 shards. Each shard is responsible only for reading and writing its own data, and cross-shard reads and writes are coordinated by the beacon chain. One key problem the sharding chains have to solve is that executing transactions and contracts requires global data. How can a shard interact efficiently with other nodes? The community has discussed two options, synchronous and asynchronous:

- Synchronous

  The two shard blocks are changed at the same time. Both sides must communicate to keep their state in sync.

- Asynchronous

  Suppose a cross-shard transaction deducts 10 yuan from account a in shard A and adds 10 yuan to account b in shard B. Shard A first deducts the amount from a and creates a receipt (target account address, target shard, amount). When shard B obtains the receipt, it verifies it first, and after successful verification adds 10 yuan to account b. The receipt must be verifiable, and double spending must be prevented. To that end, each receipt is assigned a unique auto-incrementing ID and stored in shard A. Shard B only needs to track the IDs from source shard A to know whether the money has already been spent.

Note that there is an intermediate state: shard A has deducted the money and created the receipt, but shard B has not yet synchronized the receipt into its own shard.
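The asynchronous flow can be sketched as a toy Python model. Everything here is hypothetical: the `Shard` and `Receipt` classes are invented for illustration, and "verification" is a direct lookup in the source shard, where a real design would check a cryptographic proof instead.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Receipt:
    receipt_id: int          # unique, auto-incrementing per source shard
    source_shard: str
    target_shard: str
    target_account: str
    amount: int


@dataclass
class Shard:
    name: str
    balances: dict = field(default_factory=dict)
    issued: dict = field(default_factory=dict)    # receipts created on this shard
    claimed: dict = field(default_factory=dict)   # source shard -> spent receipt IDs
    next_receipt_id: int = 0

    def debit(self, account, target_shard, target_account, amount):
        """Deduct the amount and store a receipt for the target shard."""
        if self.balances.get(account, 0) < amount:
            raise ValueError("insufficient balance")
        self.balances[account] -= amount
        receipt = Receipt(self.next_receipt_id, self.name,
                          target_shard, target_account, amount)
        self.issued[receipt.receipt_id] = receipt
        self.next_receipt_id += 1
        return receipt

    def credit(self, receipt, source):
        """Verify the receipt against its source shard, then add the amount."""
        if receipt.target_shard != self.name:
            raise ValueError("receipt is addressed to another shard")
        if source.issued.get(receipt.receipt_id) != receipt:
            raise ValueError("receipt not found in source shard")
        spent = self.claimed.setdefault(receipt.source_shard, set())
        if receipt.receipt_id in spent:
            raise ValueError("receipt already spent")
        spent.add(receipt.receipt_id)
        balance = self.balances.get(receipt.target_account, 0)
        self.balances[receipt.target_account] = balance + receipt.amount
```

Between the `debit` call on the source and the `credit` call on the target, the money exists only as a receipt, which is the intermediate state described above. Replaying the same receipt raises `ValueError` because its ID is already recorded as spent.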

The division of labor between the consensus layer and execution layer of Eth 2.0, and the impact of the Merge

The Eth 2.0 client architecture distinguishes a consensus layer (Consensus Layer, hereinafter CL) from an execution layer (Execution Layer, hereinafter EL). The split is designed to separate consensus from execution, although in practice consensus and execution still depend on each other.

What does it mean for consensus and execution to be bound together? The explanation follows in the sections below.

I will first introduce the CL and EL architecture of Eth 2.0, and then the impact on applications and developers once the Merge folds the PoW chain into the PoS chain.

The client is subdivided into CL and EL

The information here draws on Hsiao-Wei Wang's "Ethereum The Merge Technical Lazy Guide" (2022-04 version), which contains many links.

The consensus mechanism of Eth 1.0 and Eth 2.0

The consensus mechanism of Eth 1.0 is composed of PoW and the Fork Choice Rule. When a node receives a new block, it first verifies that the PoW is valid. It then compares the work accumulated by the new chain with that of its current chain, and adopts the new chain if the new chain has more.

Eth 2.0 has the same composition, except that PoW is replaced by PoS, and the fork choice rule weighs chains by the number of validator votes (Attestation) rather than by work. Nodes, including validator nodes, choose the chain with the highest weight.
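The shared shape of the two fork choice rules can be sketched as "pick the chain with the highest weight", with only the weight function changing. This is a deliberately simplified Python model: the `Block` class and the helper names are made up, and the actual PoS rule is more involved than summing attestation counts.

```python
from dataclasses import dataclass


@dataclass
class Block:
    difficulty: int = 0      # PoW: work proven by this block
    attestations: int = 0    # PoS: validator votes recorded for this block


def pow_weight(chain):
    """Eth 1.0 style weight: total work accumulated by the chain."""
    return sum(block.difficulty for block in chain)


def pos_weight(chain):
    """Eth 2.0 style weight (simplified): total attestations on the chain."""
    return sum(block.attestations for block in chain)


def choose_chain(chains, weight):
    """Fork choice: adopt whichever candidate chain weighs the most."""
    return max(chains, key=weight)
```

With the same two candidate chains, `choose_chain(chains, pow_weight)` and `choose_chain(chains, pos_weight)` can pick different winners, which is the point of swapping the weight function.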

Execute and verify transactions

The previous section actually left out one step that a node performs when it receives a new block: executing and verifying the transactions inside the block to make sure they are valid. Accepting a block that contains an invalid transaction would fork your chain away from everyone else's.

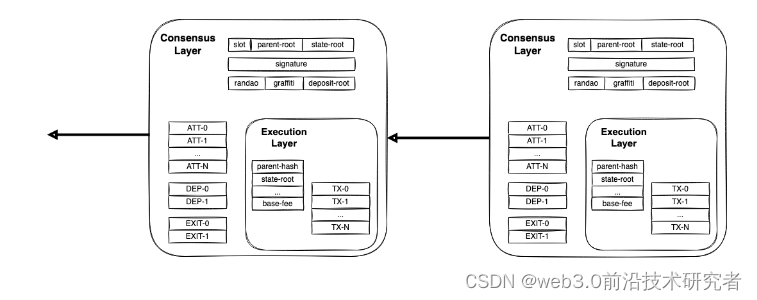

In the Eth 1.0 client, verifying PoW, applying the Fork Choice Rule and executing transactions are bound together: a running client performs all three steps for every new block it receives. In the Eth 2.0 client, these steps are split up: verifying PoS and applying the fork choice rule is handed to the CL, and executing and verifying transactions is handed to the EL.

Block of Eth 1.0

For example, if a current Eth 2.0 client such as Prysm is the CL, which client is the EL? After the Merge, the original Eth 1.0 client Geth no longer verifies PoW or applies the fork choice rule. It is only responsible for executing and verifying transactions, and becomes the EL of Eth 2.0.

In an Eth 2.0 block, you can see that the EL part of the content is the same as an Eth 1.0 block.

How CL and EL cooperate

> Splitting the client into CL and EL components means you can choose to run only the CL yourself (such as Prysm) and share an EL (such as Geth) with others. This is similar to today's mining pools: when you join a pool, you are only responsible for computing PoW, not for packaging transactions. You can of course also run both the CL and the EL yourself.

The CL and EL communicate through a well-defined API (the Engine API). Whenever the CL receives a new block, it sends the EL-related content to the EL, which executes and verifies it. Based on the EL's result and on its own fork choice rule, the CL selects the head of the chain and notifies the EL: "the current head is this block; please apply the transactions inside it, compute the latest state and update it."
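The hand-off between the two layers can be modeled with two toy Python classes. This is only a sketch: the method names `new_payload` and `forkchoice_updated` loosely mirror the Engine API's `engine_newPayload` and `engine_forkchoiceUpdated` calls, but the classes, arguments, and the trivial "execution" check are assumptions made for illustration, not the real interface.

```python
class ExecutionLayer:
    """Toy EL: validates payloads and tracks the head it was told to use."""

    def __init__(self):
        self.valid_payloads = {}
        self.head = None

    def new_payload(self, block_hash, transactions):
        # "Execution" here is only a sanity check on each transfer amount.
        if any(tx["amount"] < 0 for tx in transactions):
            return "INVALID"
        self.valid_payloads[block_hash] = transactions
        return "VALID"

    def forkchoice_updated(self, head_hash):
        if head_hash not in self.valid_payloads:
            raise KeyError(f"unknown payload {head_hash}")
        self.head = head_hash


class ConsensusLayer:
    """Toy CL: forwards payloads to the EL and announces the chosen head."""

    def __init__(self, execution_layer):
        self.el = execution_layer

    def on_block(self, block_hash, transactions, is_new_head):
        if self.el.new_payload(block_hash, transactions) != "VALID":
            return False
        if is_new_head:  # outcome of the CL's own fork choice rule
            self.el.forkchoice_updated(block_hash)
        return True
```

The design point is that the EL never decides which chain is canonical; it only reports whether a payload executes correctly and then follows the head the CL announces.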

Interaction between CL and EL

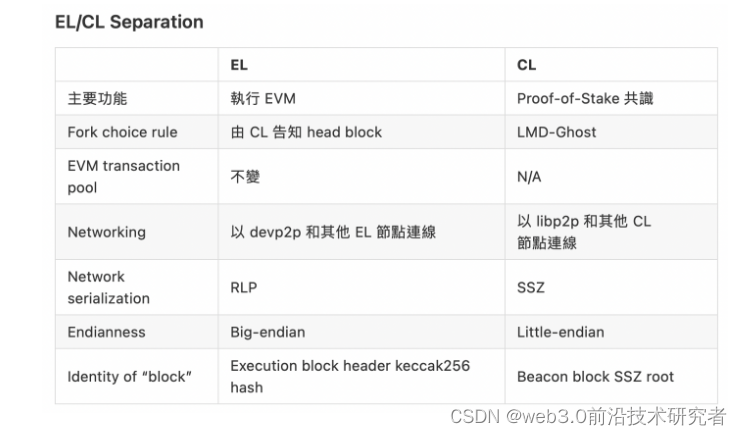

Separating the CL and EL also means the two are independent at the network level: not only are the APIs used to reach each of them independent, the p2p networks they use are independent as well.

Developers will still use web3.eth to obtain on-chain data exactly as before, with web3.eth fetching that data from the EL behind the scenes, while CL data is obtained through web3.beacon. The CL and EL use different p2p networks to communicate with other CLs or ELs: the EL still uses the original devp2p of Eth 1.0, and the CL uses the libp2p of Eth 2.0.

The interaction between CL and EL and the interaction with the outside world

You can picture it as the original Geth node (including its p2p network) staying in place, with only the consensus components (PoW, Fork Choice Rule) removed and handed over to an independent node (Prysm). The web3 libraries developers use, such as web3.js or ethers.js, will automatically direct different requests to the right node, so developers don't need to worry.

One thing to note is that EVM transactions still reach the EL node through the devp2p network, not the CL node. This means that if your CL node is a validator preparing to propose a block, it needs the EL's help to build the part of the block that contains the EVM transactions. What the CL proposes is a CL block, but that block contains both attestations and EVM transactions.

Impact of the Merge

The following covers what changes for developers after the Merge. Although the impact on developers is small, it is worth checking early whether your project or application will be affected and migrating ahead of time. One example is an application that takes its randomness from on-chain values.

How the merger affects Ethereum’s application layer

Note: This article was updated in March 2022 to reflect the latest changes to the specification. This includes renaming.

Block time

In PoS, time is divided into slots of 12 seconds each. Each slot has one validator responsible for proposing a block. If that validator is offline or does not send its block in time, the other validators treat the slot as empty, and the next block has to wait for the next slot, 12 seconds later.
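The effect of an empty slot on block spacing can be checked with a short Python sketch. The function names are illustrative, and `proposed` is an assumed list of booleans marking which slots actually received a block.

```python
SECONDS_PER_SLOT = 12


def block_timestamps(genesis_time, proposed):
    """Timestamps of the blocks that were actually proposed, one flag per slot."""
    return [genesis_time + slot * SECONDS_PER_SLOT
            for slot, was_proposed in enumerate(proposed) if was_proposed]


def gaps(timestamps):
    """Seconds between consecutive blocks."""
    return [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]


# The validator for the third slot (index 2) is offline, so that slot stays empty.
timestamps = block_timestamps(0, [True, True, False, True])
spacing = gaps(timestamps)  # [12, 24]
```

Block spacing is therefore always a multiple of 12 seconds: 12 in the normal case, 24 after one missed slot, and so on.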

The DIFFICULTY opcode is renamed and repurposed

First, because there is no more PoW, the concept of difficulty (Difficulty) no longer exists. Removing the DIFFICULTY opcode outright, however, would break contracts that use it, so the opcode is renamed PREVRANDAO and changed to return a random number produced from the validators' contributions. Contracts that used DIFFICULTY can no longer assume the value behaves like a difficulty figure after the Merge; it is now a random number.

The value of the BLOCKHASH opcode becomes easier to manipulate

Under PoW, the content of a block is decided by the miner, but the block hash is the result of the miner's PoW computation, so it is not easy for the miner to control. If a miner finds a valid PoW but is not satisfied with the resulting block hash, discarding it and recomputing the PoW means giving up that block reward. For this reason, some contracts use the value of the BLOCKHASH opcode as a pseudo-random source.

Under PoS, however, the validator (the PoS counterpart of the miner) decides the content of the block, and with it the block hash, without any such cost. PREVRANDAO still carries some risk of manipulation, but it is harder to manipulate than the block hash, which makes it the better random source.

Because the PREVRANDAO value is mixed from numbers contributed by different validators, the influence of any single validator is limited. The most a validator can do is choose not to propose a block in its own slot, leaving the value unchanged for that slot (if doing so is worth it to them).
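The limited influence described above can be illustrated with a toy mixing step in Python. The sketch assumes the running value is updated by XOR-ing in the hash of each proposer's contribution; the names `mix_in` and `proposer_choices` and the byte strings are invented for the example.

```python
import hashlib


def mix_in(current_mix, reveal):
    """Fold one validator's contribution into the running random value."""
    digest = hashlib.sha256(reveal).digest()
    return bytes(a ^ b for a, b in zip(current_mix, digest))


def proposer_choices(current_mix, reveal):
    """The only two outcomes a single proposer can choose between."""
    return {
        "propose": mix_in(current_mix, reveal),  # block published, value updated
        "skip": current_mix,                     # slot left empty, value unchanged
    }


mix = hashlib.sha256(b"previous mix").digest()
choices = proposer_choices(mix, b"validator reveal")
```

A proposer gets exactly two candidate values, and picking the "skip" branch costs it the reward for that block, which is why the bias is limited rather than absent.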

For detailed security analysis of PREVRANDAO opcode, please refer to the security analysis chapter of EIP 4399.

Finality

This is one of the biggest effects of PoS: besides block confirmations, we now have something more reliable, finality. If the network is operating normally and there is no attacker, a block is finalized after about 2 epochs (2 × 32 slots × 12 s = 768 s, or about 12.8 minutes). Once a block is finalized, it will not be reverted unless an attacker is willing to sacrifice a very large amount of staked ETH.

Fork choice rule and the safe head

Waiting about 12 minutes for finality may be too long for some applications. Is there another option? Blocks are still produced slot by slot and only become finalized about 12 minutes later. During that window the chain can still fork because of network problems, so nodes still rely on the fork choice rule to select a chain.

For applications, blocks in this window are like PoW blocks: confidence has to come probabilistically, through the block confirmation mechanism. However, each block records the validators' attestations, and the number of attestations gives nodes a more precise signal than simply "is the block included or not". For example: "this block has attestations from only 1/6 of the validators, so the probability of it being forked away is quite high", or "this block has attestations from 3/4 of the validators, which is already above 2/3, so the risk of it being forked away is low."

This new fork choice rule therefore brings a new block tag, safe. Until now developers have effectively defaulted to the latest tag, which asks the node for the data in the latest block it has received. The safe tag asks the node for the data in the block that, after running the fork choice rule, it considers reliable enough. Under normal circumstances, the safe block is about four blocks behind latest. So after the Merge, developers should check whether their web3 library uses latest or safe by default, and decide which tag their application should use.
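Choosing the tag explicitly, rather than relying on a library default, might look like the Python sketch below. It only builds the JSON-RPC request body for `eth_getBlockByNumber` and does not send it; the helper name `block_request` and the restriction to the two tags discussed here are choices made for this example.

```python
import json

SUPPORTED_TAGS = ("latest", "safe")


def block_request(tag="safe", request_id=1):
    """Build a JSON-RPC body asking a node for the block behind a tag."""
    if tag not in SUPPORTED_TAGS:
        raise ValueError(f"unsupported block tag: {tag}")
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "eth_getBlockByNumber",
        # Second parameter False: return transaction hashes, not full objects.
        "params": [tag, False],
    })
```

Passing `"latest"` trades reliability for freshness, while `"safe"` accepts a short delay in exchange for a lower chance that the block is later forked away.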