1. Algorithm requirements

Extracting license plate areas from vehicle photos in complex environments based on template matching

2. Problem analysis

Current analysis has found that in vehicle photos, the characteristics of the license plate area and its surrounding areas are as follows:

1. A license plate appears only at the front or rear of the car, and there is a shadow area below it (formed by natural lighting).

2. When the plate is at the rear of the car, the texture around it is relatively uniform; when it is at the front, the texture above the plate is more complex (the engine air vents differ between vehicle models).

3. The characters on the license plate have horizontal edge features (plate characters are always arranged horizontally).

To achieve this goal, first cut out a template that covers a larger area (including the environmental information around the license plate, see characteristic 1 above) and perform coarse-grained matching to obtain the approximate area of the license plate (this step reduces the image to about 1/3 of its original size and filters out interference outside the vehicle, for example a license plate lying on the ground that is not attached to a vehicle). Then analyze smaller images cut from the large template to select the most representative and accurate template, and use it for multi-scale template matching.

Key knowledge

Template matching function: matchTemplate

result = cv2.matchTemplate(image, template, cv2.TM_CCOEFF)

Parameter meaning:

image: the source image to search in; 8-bit integer or 32-bit floating point, single-channel or multi-channel;

template: the template image; same type as the source image, and no larger than the source image;

method: the matching method;

mask: an optional mask;

result: the returned result map, 32-bit floating point. If the source image is W×H and the template is w×h, the result is (W−w+1)×(H−h+1);

There are 6 matching methods (TemplateMatchModes). In the Python interface they are passed as the corresponding cv2.TM_xxx constants:

1. cv::TM_SQDIFF: matches using squared differences. The best match is where the result is 0; the larger the value, the worse the match.

2. cv::TM_SQDIFF_NORMED: matches using normalized squared differences. The best match is also where the result is 0.

3. cv::TM_CCORR: correlation matching. It multiplies the template with the source image patch at each position (cross-correlation), so the best match is at the maximum value; the smaller the value, the worse the match.

4. cv::TM_CCORR_NORMED: normalized correlation matching. As with correlation matching, the best match is at the maximum value.

5. cv::TM_CCOEFF: correlation coefficient matching. It correlates the template minus its mean with the image patch minus its mean. The best match is at the maximum value. The result is not normalized, so its range depends on the image content.

6. cv::TM_CCOEFF_NORMED: normalized correlation coefficient matching. Values lie between -1 and 1: 1 is a perfect match, -1 is the worst match, and 0 means the two are uncorrelated. The larger the value, the better the match.

The selection of matching method depends on the actual situation.
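To make the "minimum is best" versus "maximum is best" distinction concrete, here is a small NumPy-only sketch that computes a squared-difference map and a normalized correlation-coefficient map by brute force. It is an illustration of the score definitions, not OpenCV's implementation; the function name match_scores and the test data are hypothetical.

```python
import numpy as np

def match_scores(image, template):
    """Slide the template over the image and return (sqdiff, ccoeff_normed) maps."""
    image = image.astype(np.float64)
    template = template.astype(np.float64)
    H, W = image.shape
    h, w = template.shape
    t_zero = template - template.mean()          # template minus its mean
    sqdiff = np.empty((H - h + 1, W - w + 1))
    ccoeff = np.empty_like(sqdiff)
    for y in range(H - h + 1):
        for x in range(W - w + 1):
            patch = image[y:y + h, x:x + w]
            sqdiff[y, x] = np.sum((patch - template) ** 2)
            p_zero = patch - patch.mean()        # patch minus its mean
            denom = np.sqrt(np.sum(p_zero ** 2) * np.sum(t_zero ** 2))
            ccoeff[y, x] = np.sum(p_zero * t_zero) / denom if denom > 0 else 0.0
    return sqdiff, ccoeff

rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(40, 60)).astype(np.uint8)
template = image[10:20, 25:40].copy()            # planted at row 10, column 25

sqdiff, ccoeff = match_scores(image, template)
best_sq = np.unravel_index(np.argmin(sqdiff), sqdiff.shape)  # minimum is best
best_cc = np.unravel_index(np.argmax(ccoeff), ccoeff.shape)  # maximum is best
print(best_sq, best_cc)
```

Both maps point at the planted position, but one is read through its minimum and the other through its maximum, which is why the choice of method also decides whether to use the minimum or the maximum location from cv2.minMaxLoc.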

The above content is quoted from: https://blog.csdn.net/guduruyu/article/details/69231259

3. Core ideas

1. Read the image as a grayscale image

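2. Crop the coarse-grained template according to relative sizes (it includes the environmental information around the license plate)

3. Perform simple template matching (use Gaussian filtering to reduce noise, then use Canny to extract edge information for matching) to obtain the coarse area of the license plate

4. Crop the fine-grained template according to relative sizes (it includes the blank area in the neighborhood of the plate); this step may need several attempts

5. Perform multi-scale template matching (use the Sobel operator to extract the horizontal gradient of the plate characters so that they stand out, while filtering out the various y-direction gradient lines above a front license plate)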

4. Specific implementation

4.1 Read the image as grayscale image

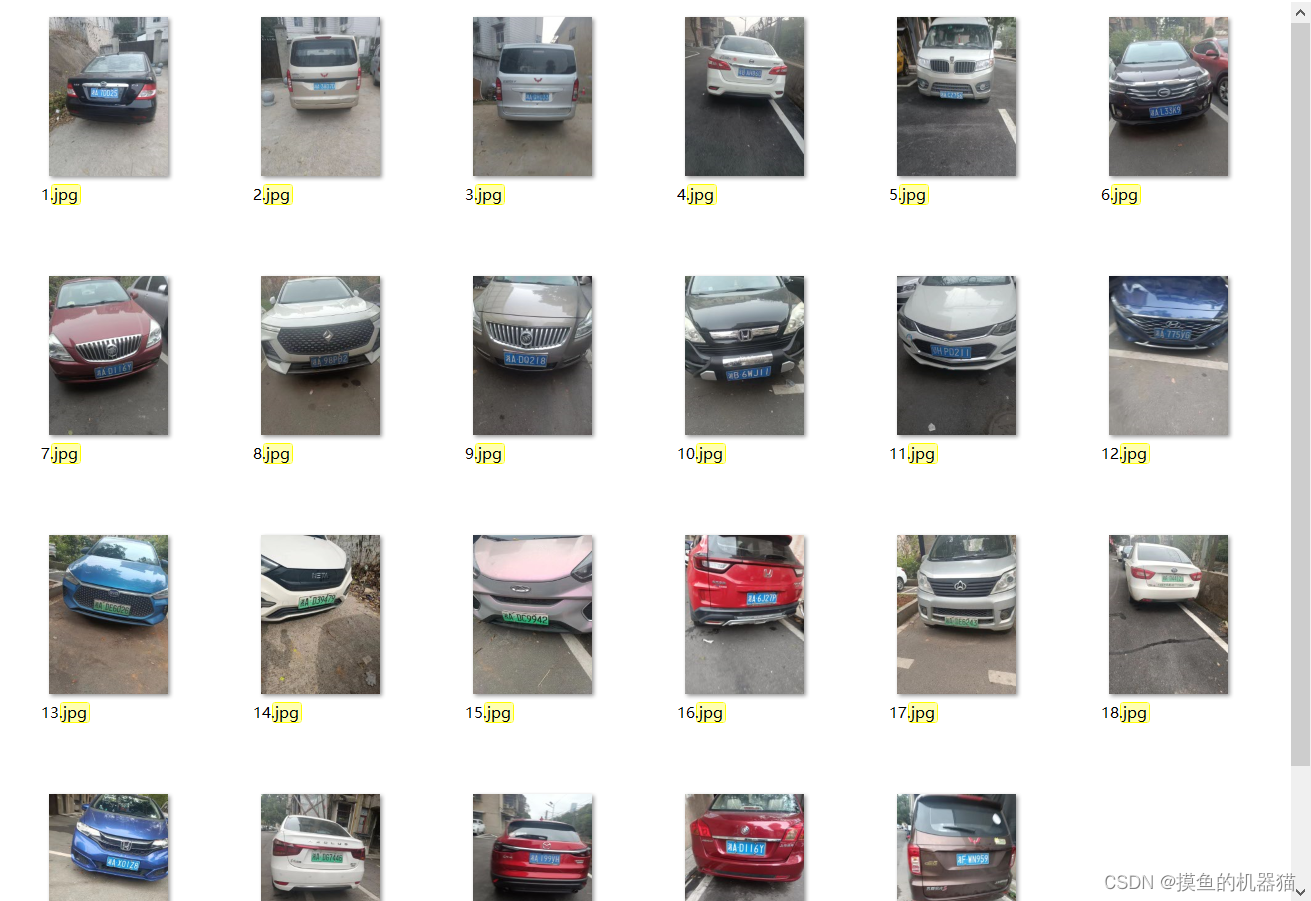

Grayscale images are more conducive to analyzing the characteristics of vehicles. The license plate used for template cutting should have a complete outline, clear writing, and little other obvious interference information around the license plate.

The code and execution results are as follows:

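    import os
    import cv2

    def all_gray(path, path_list):
        """Convert every listed image under `path` to grayscale, overwriting the file."""
        for p in path_list:
            file_path = os.path.join(path, p)
            print(file_path)
            img = cv2.imread(file_path, 1)  # flag 1: load as a 3-channel BGR image
            if img is None:  # skip files that OpenCV cannot read
                continue
            # cv2.imread returns BGR, so use COLOR_BGR2GRAY rather than COLOR_RGB2GRAY
            img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
            cv2.imwrite(file_path, img)

    path = "images"
    path_list = os.listdir(path)
    # Keep x only when ".jpg" appears in it, so path_list2 contains only jpg files
    path_list2 = [x for x in path_list if ".jpg" in x]
    print(path_list)
    print(path_list2)
    all_gray(path, path_list2)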

Grayscale image 1:

Grayscale image 2:

Comparing the two, grayscale image 2 is better suited for cropping templates. In grayscale image 1 the license plate area is very bright while its surroundings are very dark (this does not match the characteristics of most license plate regions), so a template cropped from grayscale image 1 would not suit other vehicles well.

4.2 Intercept coarse-grained templates based on relative sizes

The coarse-grained template should contain the environmental information around the license plate (with a fine plate template alone it is hard to capture the plate region in one pass, and it mismatches on most images). When selecting the template we expand the license plate area as much as possible (here the shadow region below the vehicle is kept). With more spatial information, incorrect matches can be avoided.

The interception code and coarse-grained template are as follows:

    import cv2

    # Save a template with a larger area, which is easier to match to the target
    def save_temp1():
        path = r"Car/2.jpg"
        img = cv2.imread(path, 1)
        # cv2.imread returns BGR, so use COLOR_BGR2GRAY rather than COLOR_RGB2GRAY
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        # cv2.imshow('gray', gray)
        gau_img = cv2.GaussianBlur(gray, (3, 3), 0)  # Gaussian filtering
        # Crop the template according to relative sizes
        hi, wi, c = img.shape
        yr, xr, hr, wr = 0.3, 0.33, 0.45, 0.45
        y, x, h, w = int(yr * hi), int(xr * wi), int(hr * hi), int(wr * wi)
        print(y / hi, x / wi, h / hi, w / wi)
        im = gau_img[y:y + h, x:x + w]
        save_path = r'template1.jpg'
        cv2.imwrite(save_path, im)

    save_temp1()
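The relative-size crop in save_temp1 could be factored into a small helper so that the same ratios can be tried on different images. This is only a sketch: the name crop_by_ratio and the dummy image below are hypothetical and do not appear in the original code.

```python
import numpy as np

def crop_by_ratio(img, yr, xr, hr, wr):
    """Crop a region whose origin and size are given as fractions of the image."""
    hi, wi = img.shape[:2]
    y, x = int(yr * hi), int(xr * wi)   # top-left corner in pixels
    h, w = int(hr * hi), int(wr * wi)   # crop height and width in pixels
    return img[y:y + h, x:x + w]

# Dummy 400x800 grayscale image standing in for a vehicle photo
img = np.zeros((400, 800), dtype=np.uint8)
patch = crop_by_ratio(img, 0.25, 0.25, 0.5, 0.5)
print(patch.shape)  # (200, 400)
```

Working with ratios rather than pixel coordinates keeps the crop meaningful when photos have different resolutions.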

The coarse-grained template looks like this:

4.3 Simple template matching

Through simple template matching, the size of the image can be reduced to 1/3 of the original size, interference factors outside the vehicle can be filtered out, and the preliminary range of the license plate can be determined.

Execute code:

    import glob
    import cv2

    def template_crop(templateo, imageo):
        rate = 5  # downscale factor used for the coarse match
        image = cv2.resize(imageo, None, fx=1 / rate, fy=1 / rate)
        template = cv2.resize(templateo, None, fx=1 / rate, fy=1 / rate)
        tH, tW = template.shape[:2]
        gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
        template = cv2.cvtColor(template, cv2.COLOR_BGR2GRAY)
        # Gaussian filtering to reduce noise, then Canny to extract edges.
        # The template was already blurred when it was saved in 4.2.
        gray = cv2.GaussianBlur(gray, (3, 3), 0)
        gray = cv2.Canny(gray, 50, 200)
        template = cv2.Canny(template, 50, 200)
        # cv2.imshow("template", template)
        # cv2.imshow("gray", gray)
        print(gray.shape, template.shape)
        result = cv2.matchTemplate(gray, template, cv2.TM_CCOEFF)
        (_, maxVal, _, maxLoc) = cv2.minMaxLoc(result)
        print(maxVal)
        # Top-left and bottom-right corners of the match in the downscaled image,
        # widened by 30 pixels on the left and right
        (startX, startY) = (max(int(maxLoc[0] - 30), 0), int(maxLoc[1]))
        (endX, endY) = (int(maxLoc[0] + tW + 30), int(maxLoc[1] + tH))
        # Draw and show the result
        # cv2.rectangle(image, (startX, startY), (endX, endY), (0, 0, 255), 2)
        # cv2.imshow("Image", image)
        # Multiply by the scale factor to crop from the full-resolution image
        crop = imageo[startY * rate:endY * rate, startX * rate:endX * rate]
        crop = crop[50:-50]  # trim 50 rows from the top and the bottom
        return crop

    def get_template():
        # Read the template image
        template = cv2.imread('template1.jpg')
        return template

    template = get_template()
    # Loop over all images and look for the template
    for imagePath in glob.glob("Car/*.jpg"):
        image = cv2.imread(imagePath)
        print(imagePath, image.shape)
        print(template.shape)
        img = template_crop(template, image)
        print(img.shape)
        sname = imagePath.replace('.jpg', '.png')
        cv2.imwrite(sname, img)
        # cv2.imshow("image", image)
        cv2.imshow("image", img)
        cv2.waitKey(0)

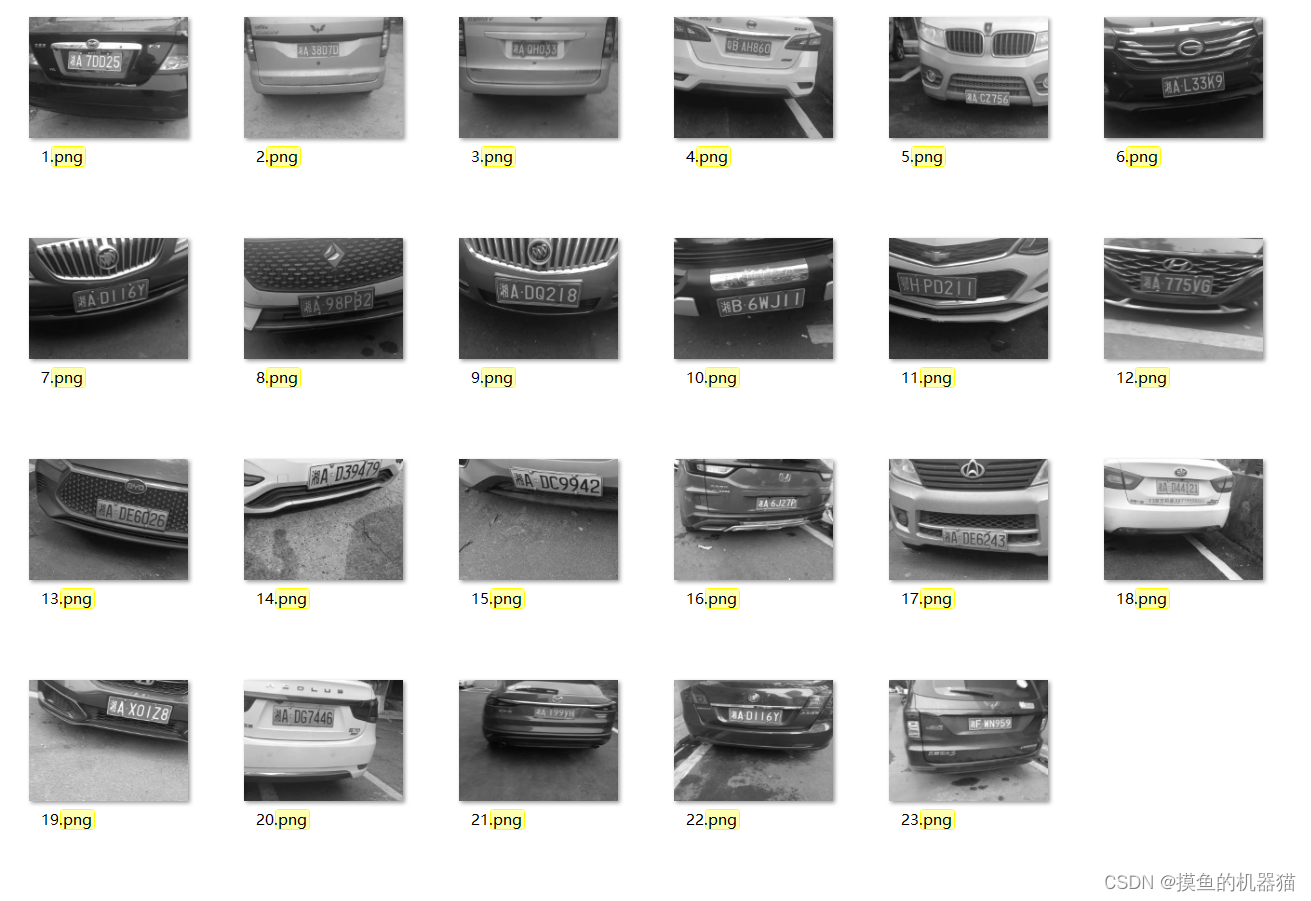

Code execution effect:

4.4 Cut out fine templates based on relative sizes

Through 4.3, we have obtained the range of the license plate and eliminated most of the interference around the license plate. However, in order to further determine the range of the license plate, we need to cut out a fine template based on 4.3 to remove as much interference as possible. The fine template needs to be cut multiple times according to the actual matching situation.

Interception code:

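    import cv2

    # Save the license plate template, used to match the target precisely
    def save_temp2():
        path = r"Car/23.jpg"
        img = cv2.imread(path, 1)
        # cv2.imread returns BGR, so use COLOR_BGR2GRAY rather than COLOR_RGB2GRAY
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        gau_img = cv2.GaussianBlur(gray, (3, 3), 0)  # Gaussian filtering
        # Crop the template according to relative sizes
        hi, wi, c = img.shape
        yr, xr, hr, wr = 0.35, 0.4, 0.15, 0.45
        y, x, h, w = int(yr * hi), int(xr * wi), int(hr * hi), int(wr * wi)
        print(y / hi, x / wi, h / hi, w / wi)
        im = gau_img[y:y + h, x:x + w]
        save_path = r'template2.jpg'
        cv2.imwrite(save_path, im)

    save_temp2()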

Fine template:

4.5 Multi-scale template matching

matchTemplate() can only detect targets that are the same size as the template image; it cannot detect targets of a different size or with a slightly different appearance. In our photos the plates appear in varied poses and at different distances, so fine template matching at a single scale cannot locate the plate accurately in every image.
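One common way to cope with size differences is to keep the template fixed and resize the image over a range of scales. The sketch below only lists which scales remain usable for a given image and template size; the helper name usable_scales and the sizes are hypothetical, and the height computation assumes an aspect-preserving resize.

```python
import numpy as np

def usable_scales(img_w, img_h, tW, tH, scales):
    """List (scale, width, height, r) until the resized image is smaller than the template."""
    out = []
    for scale in scales:
        width = int(img_w * scale)
        height = int(img_h * width / float(img_w))  # keep the aspect ratio
        if height < tH or width < tW:
            break  # the template no longer fits, so smaller scales are useless
        r = img_w / float(width)  # factor that maps coordinates back to the original
        out.append((float(scale), width, height, r))
    return out

# A 1000x400 image and a 300x100 template, scales from 1.0 down to 0.2
pyramid = usable_scales(1000, 400, 300, 100, np.linspace(0.2, 1.0, 10)[::-1])
for entry in pyramid:
    print(entry)
```

With these sizes, 8 of the 10 scales are tried before the resized image becomes narrower than the template. Shrinking the image makes the plate appear smaller relative to the fixed template, so this covers plates that are larger than the template in the original photo.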

To handle this, multi-scale template matching is performed on the image (implemented by changing the size of the image under test). During matching, some errors still occurred (mainly the air vents or logo text above the plate being picked as the match region). Therefore we compute the horizontal gradient of the image, which filters out most textures that do not match the characteristics of plate characters, and perform template matching on the gradient.

Run the code:

    import numpy as np
    import imutils
    import glob
    import cv2

    args = {'template': 'template2.jpg', 'images': "images", 'visualize': False}

    # Read the template image
    template = cv2.imread(args["template"])
    # Convert it to grayscale
    template = cv2.cvtColor(template, cv2.COLOR_BGR2GRAY)
    # Edge extraction: x-direction gradient with the Sobel operator, then closing
    # template = cv2.Canny(template, 50, 200)
    template = cv2.Sobel(template, cv2.CV_64F, dx=1, dy=0, ksize=3)
    template = cv2.convertScaleAbs(template)
    kernel = cv2.getStructuringElement(shape=cv2.MORPH_RECT, ksize=(7, 7))
    template = cv2.morphologyEx(src=template, op=cv2.MORPH_CLOSE, kernel=kernel, iterations=1)
    (tH, tW) = template.shape[:2]
    # Show the template
    cv2.imshow("Template", template)
    print('args.get("visualize", False):====', args.get("visualize", False))

    # Loop over all images and look for the template
    for imagePath in glob.glob(args["images"] + "/*.png"):
        print(imagePath)
        # Read the test image and convert it to grayscale
        image = cv2.imread(imagePath)
        gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
        found = None
        # Loop over the scales, from largest to smallest
        for scale in np.linspace(0.2, 1.0, 10)[::-1]:
            # Resize the input image according to the scale
            resized = imutils.resize(gray, width=int(gray.shape[1] * scale))
            r = gray.shape[1] / float(resized.shape[1])
            # Stop once the resized image is smaller than the template
            if resized.shape[0] < tH or resized.shape[1] < tW:
                break
            # Gaussian filtering first, then the Sobel x-direction gradient, then closing
            resized = cv2.GaussianBlur(resized, (5, 5), 0)
            edged = cv2.Sobel(resized, cv2.CV_64F, dx=1, dy=0, ksize=3)
            edged = cv2.convertScaleAbs(edged)
            edged = cv2.morphologyEx(src=edged, op=cv2.MORPH_CLOSE, kernel=kernel, iterations=1)
            # cv2.imshow("edged", edged)
            # cv2.waitKey()
            # Template matching
            result = cv2.matchTemplate(edged, template, cv2.TM_CCOEFF)
            (_, maxVal, _, maxLoc) = cv2.minMaxLoc(result)
            # Visualize the result
            if args.get("visualize", False):
                # Draw the rectangle and show it
                clone = np.dstack([edged, edged, edged])
                cv2.rectangle(clone, (maxLoc[0], maxLoc[1]), (maxLoc[0] + tW, maxLoc[1] + tH), (0, 0, 255), 2)
                cv2.imshow("Visualize", clone)
                cv2.waitKey(0)
            # Keep the match with the largest correlation value so far
            if found is None or maxVal > found[0]:
                found = (maxVal, maxLoc, r)
        # No scale was tried because the image is smaller than the template
        if found is None:
            continue
        # Compute the top-left and bottom-right corners of the match in the
        # original image by multiplying by the corresponding scale factor
        (_, maxLoc, r) = found
        (startX, startY) = (int(maxLoc[0] * r), int(maxLoc[1] * r))
        (endX, endY) = (int((maxLoc[0] + tW) * r), int((maxLoc[1] + tH) * r))
        # Widen the box by 30 pixels on the left and right
        startX -= 30
        endX += 30
        # Draw and show the result
        cv2.rectangle(image, (startX, startY), (endX, endY), (0, 0, 255), 2)
        cv2.imshow("Image", image)
        save_name = imagePath.replace(".png", ".bmp")
        cv2.imwrite(save_name, image)
        cv2.waitKey(0)
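The last step of the loop maps a match found in the resized image back to the original image by multiplying by r and then widening the box by 30 pixels. The arithmetic can be sketched as a small pure-Python function; the name to_original_box and the clamping to the image bounds are additions for illustration and are not part of the original code.

```python
def to_original_box(max_loc, tW, tH, r, pad_x, img_w, img_h):
    """Map a match found in a resized image back to original-image coordinates."""
    start_x = int(max_loc[0] * r) - pad_x
    start_y = int(max_loc[1] * r)
    end_x = int((max_loc[0] + tW) * r) + pad_x
    end_y = int((max_loc[1] + tH) * r)
    # Clamp to the image so the box can also be used for slicing
    start_x, start_y = max(start_x, 0), max(start_y, 0)
    end_x, end_y = min(end_x, img_w), min(end_y, img_h)
    return start_x, start_y, end_x, end_y

# Match at (50, 20) in an image shrunk by half (r = 2.0), template 100x30
print(to_original_box((50, 20), 100, 30, 2.0, 30, 400, 200))  # (70, 40, 330, 100)
# A match near the left border: the padded start is clamped to 0
print(to_original_box((5, 0), 100, 30, 2.0, 30, 400, 200))    # (0, 0, 240, 60)
```

Clamping does not matter for cv2.rectangle, which tolerates out-of-range corners, but it avoids surprises if the box is later used to crop the plate with array slicing, where a negative start index would count from the end.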

Code running effect: