The relationship between UNIX and Linux is an interesting topic. Among today's mainstream server operating systems, UNIX was born in the late 1960s, Windows in the mid-1980s, and Linux in the early 1990s. UNIX is, so to speak, the "big brother" of the three, and both Windows and Linux later drew on ideas from it.

Modern Windows has developed toward graphical interfaces and now differs greatly from UNIX. On the surface, there is no visible connection between the two at all.

The Troubled History of UNIX

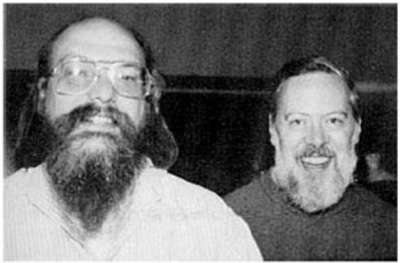

The UNIX operating system was created by Ken Thompson and Dennis Ritchie. Part of its technical roots can be traced back to Multics, a project started in 1965 jointly by Bell Labs, MIT, and General Electric. The goal was an interactive, multiprogramming time-sharing operating system to replace the batch-processing systems widely used at the time.

Note: A time-sharing operating system lets one computer serve multiple users at the same time. Users at terminals connected to the computer issue commands interactively. The operating system divides CPU time into short segments called time slices. It then serves the terminals in rotation, one time slice at a time, and displays the results of each request on the user's terminal.
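The time-slice rotation described above is essentially round-robin scheduling. The Python sketch below simulates it under simplifying assumptions that are not from the original text:

- every user's request arrives at time 0;
- each request needs a known amount of CPU time;
- switching between users costs nothing.

The user names and numbers are made up for illustration.

```python
from collections import deque


def round_robin(bursts: dict[str, int], time_slice: int) -> list[tuple[str, int, int]]:
    """Simulate time-slice rotation over users' CPU demands.

    bursts maps a (hypothetical) user name to the total CPU time that user's
    request needs; time_slice is the length of one slice. Returns a timeline
    of (user, start, end) entries in the order the CPU served them.
    """
    if time_slice <= 0:
        raise ValueError("time_slice must be positive")
    queue = deque(bursts.items())  # ready queue, in arrival order
    clock = 0
    timeline = []
    while queue:
        user, remaining = queue.popleft()
        run = min(time_slice, remaining)  # serve at most one slice
        timeline.append((user, clock, clock + run))
        clock += run
        if remaining > run:
            # Unfinished work goes to the back of the queue and waits its turn.
            queue.append((user, remaining - run))
    return timeline


if __name__ == "__main__":
    for user, start, end in round_robin({"alice": 5, "bob": 2, "carol": 4}, time_slice=2):
        print(f"{start:2d}-{end:2d}  {user}")
```

With `time_slice=2`, the CPU serves alice, bob, carol, alice, carol, alice in turn and finishes all three requests at time 11. No single user monopolizes the processor.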

Unfortunately, the goals of the Multics project were so ambitious and complex that even its developers struggled to pin down what the finished system should look like, and Bell Labs withdrew from the project in 1969.

Learning from the problems of the Multics project, a group of Bell Labs researchers led by Ken Thompson implemented a prototype time-sharing operating system in 1969. In 1970, the system was officially named UNIX.

If you think about the English prefixes Multi- and Uni-, you will understand the hidden meaning of the name UNIX. Multi- means "many", suggesting something large and complex. Uni- means "one", suggesting something small, simple, and clever. This was the original design intention of the UNIX developers, and the idea remains influential today.

Interestingly, Ken Thompson's original motivation for developing UNIX was to run a computer game he had written called Space Travel. The game simulates the motion of bodies in the solar system: players pilot a spacecraft, take in the scenery, and try to land on various planets and moons. He tried running it on several systems, but the performance was unsatisfactory, so he decided to write his own operating system. That is how UNIX was born.

From 1970 on, UNIX gradually became popular among programmers at Bell Labs. Between 1971 and 1972, Thompson's colleague Dennis Ritchie invented the now-legendary C language, a high-level language well suited to writing system software. Its birth was an important milestone in the development of UNIX. It also signaled that assembly language would no longer dominate operating system development.

By 1973, most of the UNIX source code had been rewritten in C. Before then, operating systems were mostly written in assembly language and were highly dependent on their hardware. The rewrite therefore laid the foundation for UNIX's portability and made system software much more efficient to develop. UNIX and C can fairly be called twins, with an inseparable relationship.

The 1970s also brought another great invention in computing: the TCP/IP protocol suite, a set of network protocols developed for ARPANET with funding from the US Department of Defense. TCP/IP was later bundled with UNIX and C, most notably in Berkeley's BSD releases, whose TCP/IP work was funded by the Defense Department. Meanwhile, AT&T licensed UNIX to universities for non-commercial use.

This set off the spread of UNIX, C, and TCP/IP. To this day they shape three fields: operating systems, programming languages, and network protocols. Ken Thompson and Dennis Ritchie received the 1983 Turing Award, often called the highest honor in computer science, for their outstanding contributions to computing.

Many UNIX versions followed. Common ones today include Sun Solaris, FreeBSD, IBM AIX, and HP-UX.

Solaris and FreeBSD

Let's focus first on Solaris, an important branch of UNIX. Besides the SPARC CPU platform, Solaris also runs on x86. Sun's hardware platform is known for high availability and reliability, and Solaris was long the dominant UNIX system in the server market.

Users without easy access to Sun SPARC machines can get a taste of this renowned vendor's commercial UNIX by running Solaris x86. Solaris x86 is also suitable for real production servers. Subject to Sun's licensing terms, it could be used free of charge for study, research, or commercial purposes.

FreeBSD descends from the UNIX version developed at the University of California, Berkeley (BSD). It is developed and maintained by volunteers around the world and supports computer systems of various architectures to varying degrees.

FreeBSD is released under the BSD license. The license lets anyone use and redistribute FreeBSD freely, provided the copyright and license notices are retained. It does not prevent FreeBSD code from being released under other terms, so commercial companies can freely incorporate it into their products. For example, Apple's OS X (now macOS) incorporates a substantial amount of FreeBSD code in its BSD layer.

FreeBSD and Linux share many of the same users, support broadly similar hardware, and run much of the same software. FreeBSD's greatest strengths are stability and efficiency, which make it a good choice for servers. However, its hardware support is less complete than that of Linux, which makes it less suitable as a desktop system.

Other UNIX versions have a relatively limited scope of application and will not be covered in detail here.

The Origins of Linux

The Linux kernel began as a personal hobby project of Linus Torvalds while he was a student at the University of Helsinki. He found Minix, a small UNIX-like operating system used for teaching, unsatisfactory to use, so he decided to write an operating system of his own. The first version was released in September 1991 and contained only about 10,000 lines of code.

Linus Torvalds made the Linux source code public, licensing it under the GNU GPL from 1992, and invited others to improve it. Unlike Windows and other proprietary operating systems, Linux is open source, and anyone can use it free of charge.

It has been estimated that only about 2% of the Linux kernel code is now written by Linus Torvalds himself. Even so, he still holds the Linux trademark and retains the final say over which new code is merged into the kernel (the core part of the operating system). I would rather say that the Linux everyone uses today was developed jointly by Linus Torvalds and the many Linux enthusiasts who joined him later.

Linus Torvalds is undoubtedly one of the greatest programmers in the world. He also created Git, the distributed version control system that GitHub is built on. GitHub is the world's largest code-hosting platform, jokingly nicknamed the world's largest programmer dating community.

The origin of the Linux logo, a penguin, is another interesting topic.

Why a penguin rather than a lion, a tiger, or a white rabbit? Some say Linus Torvalds chose the penguin because he is Finnish. Others say that all the other animals had already been taken, leaving him no choice but the penguin.

I prefer the following explanation. The penguin is the iconic animal of Antarctica. Under the Antarctic Treaty, no new territorial claims may be made there and the continent is reserved for peaceful, shared use, so in that sense it belongs to all humanity rather than to any one country. By choosing the penguin as its logo, Linux signals that open source Linux belongs to everyone and that no company has the right to make it private.

The close relationship between UNIX and Linux

Their relationship is not that of an elder and a younger brother; it is more accurate to say that "UNIX is the father of Linux". I introduce this relationship to show readers that Linux and UNIX have a great deal in common, which makes learning one a good preparation for the other. Simply put, once you have mastered Linux, picking up UNIX will be easy.

There are also two major differences between them:

- Most UNIX systems are tied to specific hardware. AIX, HP-UX, and most other UNIX systems cannot be installed on x86 servers or personal computers, whereas Linux runs on a wide variety of hardware platforms;

- UNIX is commercial software, while Linux is open source software that is free and whose source code is openly available.

Linux is loved by most computer enthusiasts for two main reasons:

- It is open source software. Users can obtain it and its source code without paying anything, modify it to suit their own needs, use it free of charge, and redistribute it freely under the terms of its license;

- It has all the functionality of UNIX, so anyone who uses UNIX or wants to learn it can benefit from Linux.

Open source software follows a different model from commercial software. As the name says, its source code is open, so anyone can inspect it and nothing can be secretly hidden inside. This openness encourages both software innovation and security.

Moreover, open source does not necessarily mean free of charge; it is also a new business model for software. A great deal of software today is open source, and this has had a profound impact on the computer industry and the Internet.

The business models and ideas behind open source software are somewhat subtle. For the purpose of using Linux, a basic understanding is enough.

In recent years, Linux has surpassed its predecessors. It has developed at an extraordinary pace, growing from an ugly duckling into a genuinely excellent, trustworthy operating system with a huge user base. History has made Linux the best heir to UNIX.

Summary: the relationship and differences between Linux and UNIX

Linux is a UNIX-like operating system, and UNIX predates it. Linux was originally meant to replace UNIX while improving its functionality and user experience. Linux therefore imitates UNIX without copying any UNIX source code, which makes it very similar to UNIX in appearance and interaction.

Calling this imitation may draw criticism; you could equally call it micro-innovation or improvement.

Compared with UNIX, Linux's biggest innovation is that it is open source and free, and this is the most important reason for its rapid growth. By contrast, most current UNIX systems are paid products that small companies and individuals often cannot afford.

Precisely because Linux and UNIX are so closely linked, Linux is called a "UNIX-like system", which we will focus on in the next section.

UNIX/Linux system structure

The UNIX/Linux system can be roughly abstracted into three layers, as shown in Figure 3. "Roughly" means the model is not fully detailed or precise, but it helps beginners grasp the key points. The bottom layer is the UNIX/Linux operating system proper, that is, the system kernel. The middle layer is the shell, which interprets commands. The top layer is the application layer.
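One way to see these layers from a running program is to ask the same question, "which kernel is this?", in two ways:

- through the shell layer, which runs the `uname` command;
- directly of the kernel, through a system call.

This Python sketch assumes a UNIX-like system with `/bin/sh` and the standard `uname` command. The function names are illustrative only.

```python
import os
import subprocess


def kernel_name_via_shell() -> str:
    # Application -> shell -> kernel: /bin/sh parses the command line and
    # starts the uname program, which in turn asks the kernel.
    result = subprocess.run("uname -s", shell=True, capture_output=True,
                            text=True, check=True)
    return result.stdout.strip()


def kernel_name_direct() -> str:
    # Application -> kernel: os.uname() wraps the uname(2) system call (UNIX only).
    return os.uname().sysname


if __name__ == "__main__":
    print("via shell:", kernel_name_via_shell())
    print("direct:   ", kernel_name_direct())
```

Both paths end at the kernel and should give the same answer; the shell path simply adds a command-interpretation step in between.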

1) Kernel layer

The kernel layer is the core and foundation of a UNIX/Linux system. It sits directly on top of the hardware platform. It controls and manages the system's resources, both hardware and software, and efficiently organizes the execution of processes. In doing so, it extends what the bare hardware can do and improves resource utilization. It also gives users a convenient, efficient, secure, and reliable environment for running applications.
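Applications obtain the kernel's services through system calls. As a small illustration, consider a pipe. Python's `os.pipe`, `os.write`, and `os.read` are thin wrappers over the POSIX `pipe(2)`, `write(2)`, and `read(2)` calls. Each step below is therefore a request handled by the kernel: allocating a buffer, copying data in, and copying it back out. This is a sketch, not a description of any particular program, and it assumes a small message.

```python
import os


def kernel_round_trip(message: bytes) -> bytes:
    """Send bytes through a kernel-managed pipe and read them back."""
    # pipe(2): the kernel creates two file descriptors sharing one buffer.
    read_fd, write_fd = os.pipe()
    try:
        try:
            # write(2): the kernel copies our bytes into its pipe buffer.
            # Assumes a small message; one larger than the buffer would block here.
            os.write(write_fd, message)
        finally:
            os.close(write_fd)  # closing the write end lets read(2) see end-of-file
        chunks = []
        # read(2): the kernel copies buffered bytes back out; b"" signals EOF.
        while chunk := os.read(read_fd, 4096):
            chunks.append(chunk)
        return b"".join(chunks)
    finally:
        os.close(read_fd)


if __name__ == "__main__":
    print(f"process {os.getpid()} got back:", kernel_round_trip(b"hello, kernel"))
```

The program never touches the buffer memory directly. The kernel owns it and mediates every access, which is the "control and manage resources" role described above.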

2) Shell layer

The shell layer is the interface that interacts directly with the user. The user types a command line at the prompt, and the shell interprets and executes it, then outputs the results or related messages. This is why the shell is also called a command interpreter. With the rich set of commands the system provides, many tasks can be completed quickly and easily.
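The read, interpret, and execute cycle described above can be sketched in Python. This is a deliberately tiny, hypothetical interpreter, not how bash or any real shell is implemented. It splits a line into words with `shlex.split` and runs the named program as a child process with `subprocess.run`. It leaves out pipes, redirection, variables, and built-ins such as `cd`.

```python
import shlex
import subprocess
import sys


def run_command_line(line: str) -> tuple[int, str]:
    """Interpret one command line; return (exit status, captured stdout)."""
    try:
        argv = shlex.split(line)      # "echo 'a b'" -> ["echo", "a b"]
    except ValueError as err:         # e.g. unbalanced quotes
        print(f"syntax error: {err}", file=sys.stderr)
        return 2, ""
    if not argv:
        return 0, ""                  # empty line: nothing to do
    try:
        # Run the program as a child process; its stderr goes straight to ours.
        result = subprocess.run(argv, stdout=subprocess.PIPE, text=True)
    except FileNotFoundError:
        print(f"{argv[0]}: command not found", file=sys.stderr)
        return 127, ""                # conventional "command not found" status
    return result.returncode, result.stdout


def repl() -> None:
    """Read-eval-print loop: show a prompt, interpret a line, print the output."""
    while True:
        try:
            line = input("$ ")
        except EOFError:              # Ctrl-D ends the session
            break
        _status, output = run_command_line(line)
        sys.stdout.write(output)
```

Calling `repl()` shows a `$ ` prompt. Typing `echo hello` prints `hello`, and pressing Ctrl-D ends the session. A real shell adds job control, scripting, and many built-in commands on top of this basic loop.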

3) Application layer

The application layer provides a graphical environment based on the X Window protocol. The X Window protocol specifies the capabilities a windowing system must provide, much as TCP/IP specifies what networking software must do. A system that implements this protocol and complies with the other specifications of the X Consortium can be called an X Window system.

Today most UNIX systems (including Solaris, HP-UX, and AIX) can run the CDE user interface. CDE, the Common Desktop Environment, is a desktop environment originally developed commercially for UNIX. The desktop environments widely used on Linux include GNOME and KDE.

X Window differs greatly from Microsoft's Windows graphical environment:

- UNIX/Linux systems are not bound to X Window: you can choose whether or not to install it. Microsoft's Windows graphical environment, by contrast, is tightly integrated with the kernel.

- UNIX/Linux systems do not depend on a graphical environment. Every system function remains available from the command line, and running without a graphical environment saves a considerable amount of system resources.
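Because the graphical environment is optional, a program that might open windows often checks first whether one is present. A rough heuristic, sketched below, is to look at environment variables. X clients find their X server through `DISPLAY` (for example `:0`). Wayland sessions, which the original text does not cover, set `WAYLAND_DISPLAY`. Treat this as an assumption-laden check rather than a guarantee; the function names are hypothetical.

```python
import os
from collections.abc import Mapping


def has_graphical_session(env: Mapping[str, str] = os.environ) -> bool:
    """Heuristically report whether a graphical display is available.

    Assumption: X clients use DISPLAY and Wayland sessions set WAYLAND_DISPLAY;
    on a server with no graphical environment, neither is normally set.
    """
    return bool(env.get("DISPLAY") or env.get("WAYLAND_DISPLAY"))


def report(env: Mapping[str, str] = os.environ) -> str:
    # Choose an interface based on what the environment offers.
    if has_graphical_session(env):
        return "graphical session detected; GUI tools may be used"
    return "no graphical session; falling back to command-line output"


if __name__ == "__main__":
    print(report())
```

Passing the environment in as a parameter keeps the check easy to test. A typical headless server session would report "no graphical session".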

When Linux is deployed as a server, the graphical environment is usually neither installed nor enabled. The explanations in this tutorial are therefore mostly based on the Linux command line.