Model introduction

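This project uses the BERT model to perform entity relation extraction: given a sentence and two entities that appear in it, the model predicts the relationship between them.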

For an introduction to the BERT model, see this article: "NLP series (2): text classification (Bert) pytorch" on Makizikawa's CSDN blog.

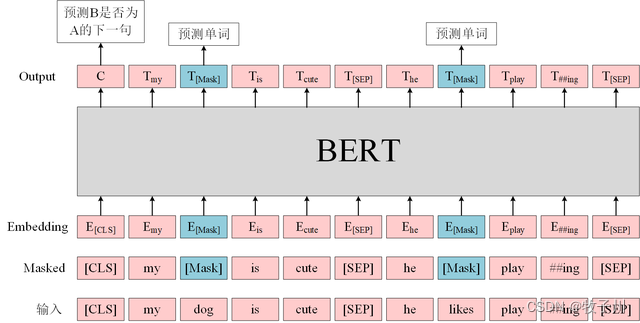

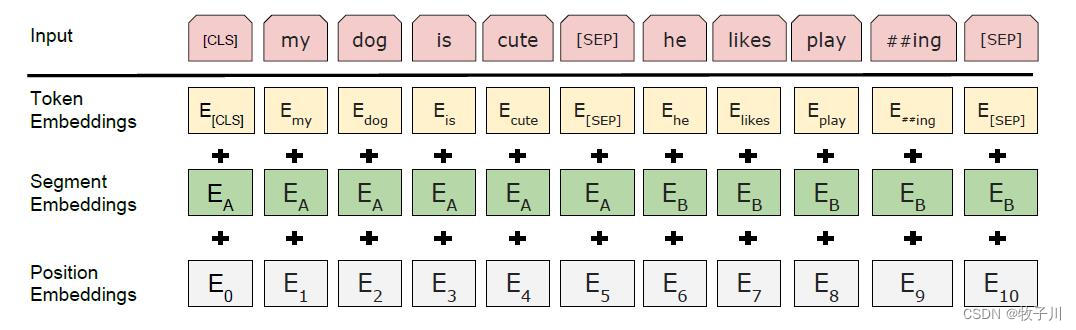

Model structure

Model structure of the BERT model:

Data introduction

Data URL: https://github.com/buppt//raw/master/data/people-relation/train.txt

Each line of the data has four fields, in this order: entity 1, entity 2, relationship, text.
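To make the format concrete, here is a minimal sketch of how one line could be turned into the entity masks used by the batching code. It rests on several assumptions that the article does not confirm: fields are separated by whitespace, tokens are single characters with a [CLS] slot at the front and a [SEP] slot at the end, and only the first occurrence of each entity is marked. The helper names `parse_line` and `entity_mask` are hypothetical, and the sample line is built from the example sentence in the prediction section rather than copied from train.txt.

```python
def parse_line(line):
    """Split one raw line into (entity1, entity2, relation, text)."""
    # Assumption: fields are whitespace-separated; only the text may contain spaces.
    entity1, entity2, relation, text = line.strip().split(maxsplit=3)
    return entity1, entity2, relation, text


def entity_mask(text, entity):
    """Return a 0/1 list marking the entity's characters, with [CLS]/[SEP] slots."""
    # Assumption: one token per character, [CLS] at index 0 and [SEP] at the end.
    mask = [0] * (len(text) + 2)
    start = text.find(entity)  # first occurrence only
    if start == -1:
        raise ValueError(f"entity {entity!r} not found in text")
    for i in range(start, start + len(entity)):
        mask[i + 1] = 1  # +1 skips the [CLS] slot
    return mask


# Illustrative line (hypothetical, not taken from the data file)
sample = "丁一岚 邓拓 夫妻 丁一岚与丈夫邓拓"
e1, e2, relation, text = parse_line(sample)
e1_mask = entity_mask(text, e1)  # [0, 1, 1, 1, 0, 0, 0, 0, 0, 0]
e2_mask = entity_mask(text, e2)  # [0, 0, 0, 0, 0, 0, 0, 1, 1, 0]
```

In a real pipeline the masks would have to follow the tokenizer's actual output, since a BERT tokenizer does not always produce exactly one token per character.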

    import torch
    from torch.nn.utils.rnn import pad_sequence


    def collate_fn(batch_data):  # function name assumed; the original omits the def line
        input_ids_list, token_type_ids_list, attention_mask_list = [], [], []
        e1_masks_list, e2_masks_list, labels_list = [], [], []
        for instance in batch_data:
            # Read each field; padding to the longest sequence in the batch happens below
            input_ids_temp = instance["input_ids"]
            token_type_ids_temp = instance["token_type_ids"]
            attention_mask_temp = instance["attention_mask"]
            e1_masks_temp = instance["e1_masks"]
            e2_masks_temp = instance["e2_masks"]
            labels_temp = instance["labels"]
            # Append to the corresponding lists
            input_ids_list.append(torch.tensor(input_ids_temp, dtype=torch.long))
            token_type_ids_list.append(torch.tensor(token_type_ids_temp, dtype=torch.long))
            attention_mask_list.append(torch.tensor(attention_mask_temp, dtype=torch.long))
            e1_masks_list.append(torch.tensor(e1_masks_temp, dtype=torch.long))
            e2_masks_list.append(torch.tensor(e2_masks_temp, dtype=torch.long))
            labels_list.append(labels_temp)
        # pad_sequence pads every tensor in a list to the maximum length
        # within the batch, filling with padding_value
        return {"input_ids": pad_sequence(input_ids_list, batch_first=True, padding_value=0),
                "token_type_ids": pad_sequence(token_type_ids_list, batch_first=True, padding_value=0),
                "attention_mask": pad_sequence(attention_mask_list, batch_first=True, padding_value=0),
                "e1_masks": pad_sequence(e1_masks_list, batch_first=True, padding_value=0),
                "e2_masks": pad_sequence(e2_masks_list, batch_first=True, padding_value=0),
                "labels": torch.tensor(labels_list, dtype=torch.long)}

Model preparation
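The forward pass in this section calls `self.entity_average`, which the article does not show. The following is a minimal sketch of what such a helper might look like, written as a standalone function: it averages the hidden vectors of the tokens selected by a 0/1 entity mask. The tensor shapes and the `clamp` safeguard against empty masks are assumptions, not the author's confirmed implementation.

```python
import torch


def entity_average(hidden_output, e_mask):
    """Average the token vectors selected by a 0/1 entity mask.

    hidden_output: [batch, seq_len, hidden]; e_mask: [batch, seq_len].
    """
    mask = e_mask.unsqueeze(1).float()                  # [batch, 1, seq_len]
    summed = torch.bmm(mask, hidden_output).squeeze(1)  # [batch, hidden]
    # Assumption: clamp guards against division by zero when a mask is all zeros
    count = e_mask.sum(dim=1, keepdim=True).clamp(min=1).float()
    return summed / count


hidden = torch.tensor([[[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]])  # 1 sentence, 3 tokens
mask = torch.tensor([[0, 1, 1]])                                # entity covers tokens 1 and 2
avg = entity_average(hidden, mask)                              # tensor([[4., 5.]])
```

Because the pooled [CLS] vector and the two entity vectors are concatenated afterwards, the classification layer would take an input three times the width of a single vector, provided the dense layer keeps the hidden size.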

def forward(self, token_ids, token_type_ids, attention_mask, e1_mask, e2_mask):

sequence_output, pooled_output = self.bert_model(input_ids=token_ids, token_type_ids=token_type_ids,

attention_mask=attention_mask, return_dict=False)

# 每个实体的所有token向量的平均值

e1_h = self.entity_average(sequence_output, e1_mask)

e2_h = self.entity_average(sequence_output, e2_mask)

e1_h = self.activation(self.dense(e1_h))

e2_h = self.activation(self.dense(e2_h))

# [cls] + 实体1 + 实体2

concat_h = torch.cat([pooled_output, e1_h, e2_h], dim=-1)

concat_h = self.norm(concat_h)

logits = self.hidden2tag(self.drop(concat_h))

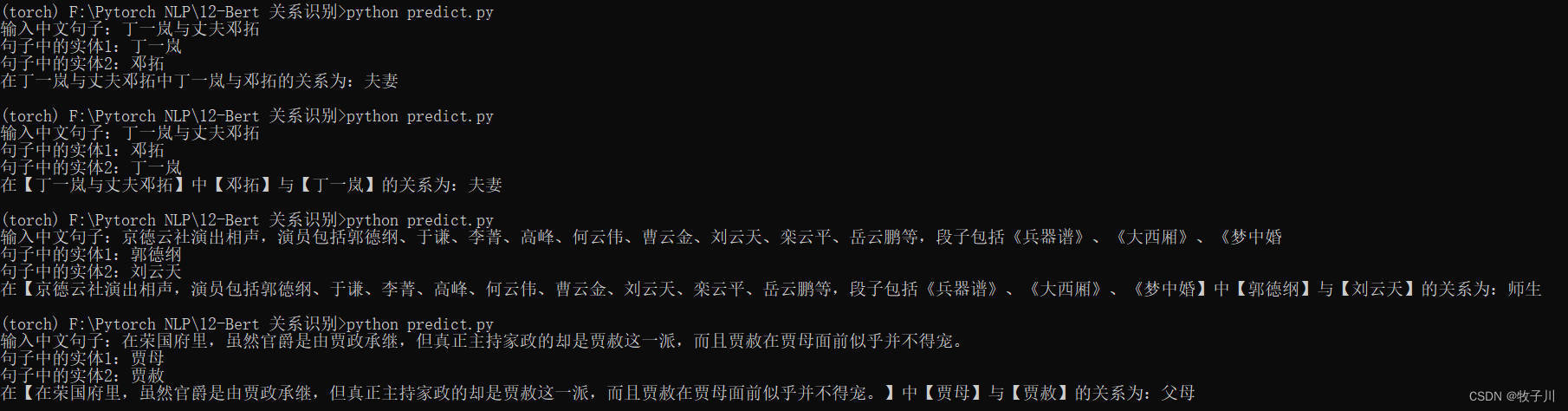

return logitsModel prediction
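The transcripts in this section show only the final relationship label. As a rough sketch of the step in between, the logits returned by the model can be turned into a label by taking a softmax and picking the highest-scoring class. The label list and the logit values here are made up for illustration; the real mapping depends on the labels in the training data. With a PyTorch tensor, `torch.argmax(logits, dim=-1)` would select the same index.

```python
import math

# Hypothetical label list; the real mapping comes from the training data.
ID2LABEL = ["unknown", "husband and wife", "teacher and student", "parents"]


def decode(logits, id2label=ID2LABEL):
    """Return (label, probability) for one row of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]  # subtract the max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)  # index of the largest probability
    return id2label[best], probs[best]


label, prob = decode([0.1, 3.2, 0.4, -1.0])  # made-up logits
print(f"predicted relationship: {label} (p={prob:.2f})")
```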

Enter a Chinese sentence: Ding Yilan and her husband Deng Tuo

Entity 1 in the sentence: Ding Yilan

Entity 2 in the sentence: Deng Tuo

In [Ding Yilan and her husband Deng Tuo], the relationship between [Ding Yilan] and [Deng Tuo] is: husband and wife

Enter a Chinese sentence: Ding Yilan and her husband Deng Tuo

Entity 1 in the sentence: Deng Tuo

Entity 2 in the sentence: Ding Yilan

In [Ding Yilan and her husband Deng Tuo], the relationship between [Deng Tuo] and [Ding Yilan] is: husband and wife

Enter a Chinese sentence: Jingde Yunshe performs cross talk. The actors include Guo Degang, Yu Qian, Li Jing, Gao Feng, He Yunwei, Cao Yunjin, Liu Yuntian, Luan Yunping, Yue Yunpeng, etc. The jokes include "Weapons Book", "The Great Western Chamber", "Dream Marriage"

Entity 1 in the sentence: Guo Degang

Entity 2 in the sentence: Liu Yuntian

In [Jingde Yunshe performs cross talk. The actors include Guo Degang, Yu Qian, Li Jing, Gao Feng, He Yunwei, Cao Yunjin, Liu Yuntian, Luan Yunping, Yue Yunpeng, etc. The jokes include "Weapons Book", "The Great Western Chamber", "Dream Marriage"], the relationship between [Guo Degang] and [Liu Yuntian] is: teacher and student

Enter a Chinese sentence: In the Rongguo Mansion, although the official title was inherited by Jia Zheng, it was Jia She's group who was actually in charge of housekeeping, and Jia She did not seem to be favored in front of Jia Mu.

Entity 1 in the sentence: Jia Mu

Entity 2 in the sentence: Jia She

In [In the Rongguo Mansion, although the official title was inherited by Jia Zheng, it was Jia She's group who was actually in charge of housekeeping, and Jia She did not seem to be favored in front of Jia Mu.], the relationship between [Jia Mu] and [Jia She] is: parents

Source code acquisition

Rigid standards cannot really limit our infinite possibilities, so keep at it, young people!