
Algorithm brief description

basic

Ahem... As for algorithms, we are not CS students, so let me just say this: an algorithm is a specific method for solving a problem that has already been modeled. Studying algorithms means learning mature, well-established computational routines and ideas for solving programming problems.

List of typical algorithms

String-to-string matching

basic

A string is a finite sequence of zero or more characters. Any run of consecutive characters within a string is called a substring of that string. Strings are encoded using a character encoding such as ASCII or Unicode.

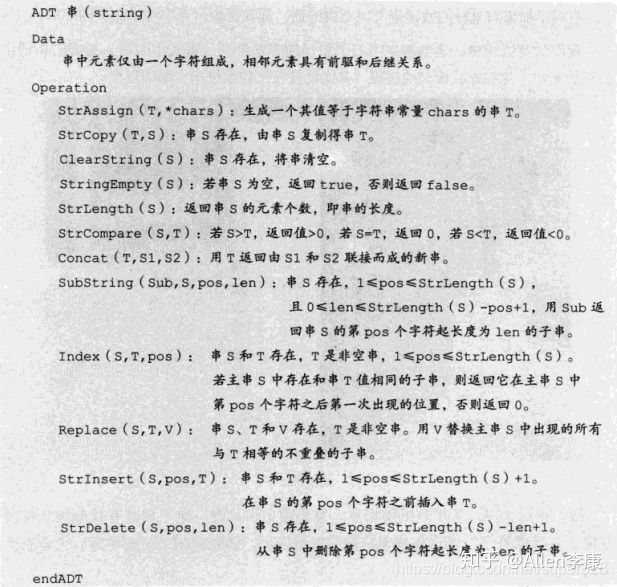

The elements of a string are all characters. String operations mostly act on substrings as a whole rather than on individual elements: finding the position of a substring, obtaining the substring at a specified position, replacing a substring, and so on.

Standard libraries such as C's string.h provide the basic string-operation APIs. For details on using the C standard library, see the "7 Usage of the C Standard Library" section of the article "C & MCU Writing Specifications and Others".

String matching algorithm (also called string pattern matching)

String matching means, for example, finding the position in the main string S = "BBC ABCDAB ABCDABCDABDE" of a substring that is identical to the pattern string P = "ABCDABD".

String pattern matching algorithm: the BF algorithm (brute-force algorithm)

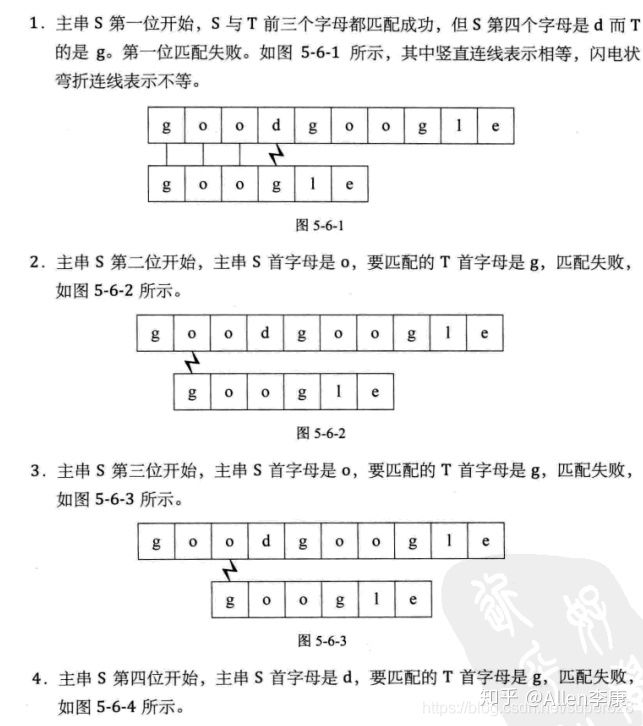

Compare the main string S, starting at position i = 0, with the pattern string P, starting at position j = 0, character by character. If the two characters are the same, increment both i and j and compare the next pair. If they differ, i goes back to the position right after the one where this round of matching started, j goes back to the first position of P, and matching continues from there one character at a time. The picture illustrates the process:

Quoted from: 21 String pattern matching algorithm (BF algorithm) - Zhihu (zhihu.com)
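The BF procedure described above can be sketched in Python as follows. This is a minimal illustration, not a reference implementation: the function name `bf_search` and the convention of returning -1 when there is no match are assumptions made for the example.

```python
def bf_search(s, p):
    """Return the index of the first occurrence of p in s, or -1 if there is none."""
    i = 0  # current position in the main string s
    j = 0  # current position in the pattern string p
    while i < len(s) and j < len(p):
        if s[i] == p[j]:
            # Characters match: advance both positions.
            i += 1
            j += 1
        else:
            # Mismatch: i returns to one past the start of this attempt, j to 0.
            i = i - j + 1
            j = 0
    # The whole pattern was consumed, so the match started at i - j.
    return i - j if j == len(p) else -1
```

For the commonly used example strings S = "BBC ABCDAB ABCDABCDABDE" and P = "ABCDABD", `bf_search` returns 15. In the worst case every starting position is tried and almost the whole pattern is compared each time, which is what KMP improves on.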

Fast string pattern matching algorithm: the KMP algorithm

The main idea: to speed up matching compared with the BF algorithm, exploit regularities in the pattern string. When a match fails, j does not have to return to the beginning of P every time (and i does not have to move backward). Instead, the next array is built from the length of the longest common prefix and suffix of each prefix of the pattern string, and on a mismatch the distance j moves is determined by next. (Note: this is a summary written after understanding the algorithm. If it is unclear, read the tutorial articles listed below.)

- Thoroughly understand KMP from beginning to end - Chris_z - Blog Park (cnblogs.com); Coding-Zuo (cnblogs.com). The article explains it very clearly.

- "Tianqin Open Class" KMP algorithm easy-to-understand version_bilibili_bilibili.
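As a rough sketch of the idea summarized above, the following Python code builds a next array and uses it during matching. Conventions for next differ between tutorials (some shift the array by one position and start it with -1). The version here is an assumption chosen for simplicity: `nxt[j]` directly stores the length of the longest proper prefix of `p[:j+1]` that is also a suffix of it. The names `build_next` and `kmp_search` are made up for this example.

```python
def build_next(p):
    """nxt[j] = length of the longest proper prefix of p[:j+1] that is also its suffix."""
    nxt = [0] * len(p)
    k = 0  # length of the prefix currently matched against the suffix
    for j in range(1, len(p)):
        while k > 0 and p[j] != p[k]:
            k = nxt[k - 1]  # fall back to the next shorter candidate prefix
        if p[j] == p[k]:
            k += 1
        nxt[j] = k
    return nxt


def kmp_search(s, p):
    """Return the index of the first occurrence of p in s, or -1 if there is none."""
    if not p:
        return 0
    nxt = build_next(p)
    j = 0  # number of pattern characters matched so far
    for i, ch in enumerate(s):  # i only ever moves forward
        while j > 0 and ch != p[j]:
            j = nxt[j - 1]  # reuse the part of the pattern that still matches
        if ch == p[j]:
            j += 1
        if j == len(p):
            return i - j + 1
    return -1
```

For P = "ABCDABD", `build_next` gives `[0, 0, 0, 0, 1, 2, 0]`: after matching "ABCDAB" and then failing, j falls back to 2 because the prefix "AB" is already known to match.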

Sorting

- Top Ten Classic Sorting Algorithms (Animation Demonstration) - One Pixel - Blog Park (cnblogs.com) .

- Summary of algorithms - 10 classic sorting algorithms (from small to large)_ Frank's blog - CSDN blog_ Sorting algorithms from small to large .

Depth/Breadth First Search

Graph traversal is usually performed using depth-first search (DFS) and breadth-first search (BFS). "If you think of a tree as a special kind of graph, DFS is preorder traversal."

- Search Thoughts - DFS & BFS (Basic Basics) - Zhihu (zhihu.com) .

- Search Thoughts - DFS & BFS (Basics) - Zhihu (zhihu.com) .

- Depth-first search (DFS) and breadth-first search (BFS) of graphs_Qingpingzhimo's blog-CSDN blog .

- Depth-first traversal and connected components | Rookie Tutorial (runoob.com) , Breadth-first traversal and shortest path | Rookie Tutorial (runoob.com) .
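To make the difference between the two traversals concrete, here is a small Python sketch. The adjacency-list dictionary `graph` (a small tree) and the function names `dfs` and `bfs` are invented for illustration only.

```python
from collections import deque


def dfs(graph, start):
    """Return nodes in depth-first visit order (recursive)."""
    order = []
    seen = set()

    def visit(node):
        seen.add(node)
        order.append(node)
        for neighbor in graph[node]:
            if neighbor not in seen:
                visit(neighbor)  # go as deep as possible before trying siblings

    visit(start)
    return order


def bfs(graph, start):
    """Return nodes in breadth-first visit order (queue based)."""
    order = [start]
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                order.append(neighbor)
                queue.append(neighbor)  # visited after all nodes of the current layer
    return order


# Hypothetical example graph: a tree rooted at "A".
graph = {
    "A": ["B", "C"],
    "B": ["D", "E"],
    "C": ["F"],
    "D": [],
    "E": [],
    "F": [],
}
```

On this tree `dfs(graph, "A")` visits A, B, D, E, C, F, which is the preorder traversal, while `bfs(graph, "A")` visits A, B, C, D, E, F, layer by layer.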

dynamic programming

- What is dynamic programming (Dynamic Programming)? What is the meaning of dynamic programming? - Zhihu (zhihu.com) .

- DP-Dynamic Programming Problem Experience-Zhihu (zhihu.com) .

- One foot into the ocean of DP - Zhihu (zhihu.com) .

- Five commonly used algorithms - detailed explanation of dynamic programming algorithm and classic examples_ Stop Thinking of Better Ways Blog - CSDN Blog_ Classic Examples of Dynamic Programming Algorithm .

One way to understand it: use the idea of "dynamic programming" (writing down the optimization goal and the state transition equation, that is, the recurrence relation) to understand and model the problem, so that the optimal solution can be found without enumerating every possible solution. Branches that cannot lead to an optimal solution are pruned, and overlapping sub-problems are computed only once, which saves time. A common implementation technique is caching: expand the exhaustive computation into a tree, identify the parts that are computed repeatedly, and keep a copy of each such result so that it is never recomputed. There are many other techniques and methods as well.
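The caching idea can be illustrated with a deliberately simple recurrence. The Fibonacci numbers are not taken from the text above; they are used here only because their call tree contains many repeated sub-problems. The `calls` counters are an illustrative device for comparing the two versions.

```python
from functools import lru_cache

# Counts how many times each function body actually runs.
calls = {"naive": 0, "memo": 0}


def fib_naive(n):
    """Exhaustive recursion: the same sub-problems are recomputed many times."""
    calls["naive"] += 1
    if n < 2:
        return n
    return fib_naive(n - 1) + fib_naive(n - 2)


@lru_cache(maxsize=None)
def fib_memo(n):
    """Same recurrence, but each distinct n is computed once and then cached."""
    calls["memo"] += 1  # only reached on a cache miss
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)
```

Calling both with n = 20 returns 6765, but the naive version runs its body 21891 times while the cached version runs it only 21 times, once per distinct sub-problem.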

divide and conquer

The design idea of divide and conquer is to split a large problem that is hard to solve directly into a number of smaller problems of the same kind, so that they can be solved one by one: divide, then conquer.

The divide-and-conquer strategy is: for a problem of size n, if the problem can be solved easily (for example, when n is small), solve it directly. Otherwise, decompose it into k smaller sub-problems that are independent of one another and have the same form as the original problem, solve these sub-problems recursively, and then combine their solutions to obtain the solution to the original problem. This algorithm design strategy is called divide and conquer.

If the original problem can be divided into k sub-problems, 1<k≤n, all of which can be solved, and their solutions can be used to construct the solution to the original problem, then divide and conquer is feasible. The sub-problems it produces are often smaller instances of the original problem, which makes recursion a natural fit. Applying divide and conquer repeatedly keeps the sub-problems of the same type as the original problem while continually shrinking their size, until they are small enough to be solved directly. This naturally leads to a recursive process. Divide and conquer and recursion are like twin brothers: they are often used together in algorithm design and have produced many efficient algorithms.
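Merge sort is one common instance of this strategy with k = 2. The sketch below is only an illustration of the divide, solve recursively, combine pattern; the function name `merge_sort` and the choice to return a new list are assumptions of the example.

```python
def merge_sort(items):
    """Return a new sorted list using divide and conquer."""
    # Small enough to solve directly.
    if len(items) <= 1:
        return list(items)

    # Divide: two independent sub-problems of the same form.
    mid = len(items) // 2
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])

    # Combine: merge the two sorted halves.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged
```

Splitting at the midpoint keeps the two sub-problems close in size, so the recursion depth stays around log2(n).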

Some classic problems that can be solved using the divide-and-conquer method:

- (1) Binary search

- (2) Large integer multiplication

- (3) Strassen matrix multiplication

- (4) Chessboard coverage

- (5) Merge sort

- (6) Quick sort

- (7) Linear time selection

- (8) Closest point pair problem

- (9) Round robin schedule

- (10) Tower of Hanoi

A key point in divide and conquer is whether the sub-problems are of similar size. The closer their sizes, the more efficient the algorithm.

Divide and conquer and dynamic programming both solve sub-problems and then combine their solutions. Divide and conquer looks for sub-problems that are much smaller than the original problem (because small inputs are still very fast for a computer to process), but its efficiency is not necessarily good. The efficiency of dynamic programming depends on the number of distinct sub-problems: when that number is much smaller than the total number of sub-problem occurrences (that is, many sub-problems are repeated), the algorithm is very efficient.

greedy

A greedy algorithm always makes the choice that looks best at the moment when solving a problem. In other words, it does not consider the overall optimum; what it produces is only a locally optimal solution in some sense. A greedy algorithm cannot obtain the overall optimal solution for every problem, but for a wide range of problems it produces the overall optimal solution or a good approximation of it.

The basic idea

Build a mathematical model to describe the problem.

Divide the problem to be solved into several sub-problems.

Solve each sub-problem and obtain the local optimal solution of the sub-problem.

The local optimal solution of the sub-problem is synthesized into a solution of the original problem.

The process of implementing the algorithm

Start from some initial solution to the problem;

while one more step can be taken toward the given overall goal:

find one solution element of a feasible solution;

Finally, combine all the solution elements into a feasible solution to the problem.

Problems with this algorithm

- There is no guarantee that the final solution obtained is the best one;

- It cannot be relied on in general for problems that ask for a maximum or minimum, unless the greedy choice can be shown to be optimal for that problem;

- It can only find feasible solutions within the range allowed by certain constraints.

The following explanation of the shortcomings of the greedy algorithm is quoted from: What is dynamic programming (Dynamic Programming)? What is the meaning of dynamic programming? - Zhihu (zhihu.com):

Let's first look at something we often encounter in life: suppose you are wealthy and carry plenty of banknotes in denominations of 1, 5, 10, 20, 50, and 100 yuan. Your goal is to make up a certain amount w using as few banknotes as possible.

Based on life experience, we can obviously adopt this strategy: use 100 whenever you can, otherwise try 50, and so on. Under this strategy, 666=6×100+1×50+1×10+1×5+1×1, for a total of 10 banknotes.
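The strategy just described can be written down in a few lines of Python. This is a minimal sketch: the function name `greedy_change` and the dictionary it returns (denomination mapped to number of notes) are choices made for the example, and amounts are assumed to be non-negative integers.

```python
def greedy_change(w, denominations):
    """Make up amount w by always using the largest denomination that still fits."""
    used = {}
    for d in sorted(denominations, reverse=True):
        count, w = divmod(w, d)  # take as many notes of value d as possible
        if count:
            used[d] = count
    if w:
        raise ValueError("amount cannot be made up with these denominations")
    return used
```

With the denominations from the text, `greedy_change(666, [1, 5, 10, 20, 50, 100])` returns `{100: 6, 50: 1, 10: 1, 5: 1, 1: 1}`, which is 10 banknotes in total.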

This strategy is called "greedy": if the situation we face is "we need to make up w", the greedy strategy makes the "part that still needs to be made up" smaller as quickly as possible. If w can be reduced by 100, reduce it by 100; the next situation we face is then making up w-100. Long-term life experience shows that the greedy strategy is correct.

However, if we switch to a different set of denominations, the greedy strategy may stop working. If a strange country issues banknotes in denominations of 1, 5, and 11, then the greedy strategy goes wrong when we need to make up 15:

15=1×11+4×1 (the greedy strategy uses 5 banknotes)

15=3×5 (correct strategy, only use 3 bills)

Why is this so? What's wrong with the greedy strategy?

Short-sighted.

As just said, the principle of the greedy strategy is: "make the w you face next as small as possible." So when w=15, the greedy strategy first uses 11 to reduce w to 4; but in this problem making up 4 is expensive, because it takes 4×1. If 5 is used instead, w is reduced to 10. That is not as small as 4, but 10 takes only two 5-yuan notes.

Here we find that greed is a strategy that only considers the immediate situation.
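One way to avoid this short-sightedness, in the spirit of the dynamic programming section, is to compute the fewest notes for every amount from 0 up to w and let each amount try every denomination as its last note. The sketch below assumes positive integer denominations. The name `min_notes` and the table `f` are illustrative, with `f[a]` meaning "fewest notes needed to make up a".

```python
def min_notes(w, denominations):
    """Return the fewest notes needed to make up w, or None if it is impossible."""
    INF = float("inf")
    f = [0] + [INF] * w  # f[0] = 0: zero notes make up the amount 0
    for amount in range(1, w + 1):
        for d in denominations:
            # Try d as the last note; the rest is the already solved sub-problem amount - d.
            if d <= amount and f[amount - d] + 1 < f[amount]:
                f[amount] = f[amount - d] + 1
    return None if f[w] == INF else f[w]
```

`min_notes(15, [1, 5, 11])` returns 3 (three 5s), whereas the greedy strategy needs 5 notes. For the familiar denominations, `min_notes(666, [1, 5, 10, 20, 50, 100])` returns 10, the same as greedy.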

Backtracking

Quoted from: leetcode backtracking algorithm (backtracking) Summary of _wonner_'s blog-CSDN blog_leetcode backtracking .

The backtracking algorithm is also called the trial-and-error method. It is a way of systematically searching for solutions to a problem. Its basic idea is: set off along one path, advance while you can, step back when you cannot, and then try another path.

- Detailed explanation of backtracking algorithm and answers to classic examples of Leetcode_Mango is a blog without blindness-CSDN blog_Backtracking algorithm leetcode .

- Leetcode backtracking algorithm (backtracking) summary_wonner_'s blog-CSDN blog_leetcode backtracking .

- Five commonly used algorithms - Detailed explanation and classic examples of backtracking algorithm_ Stop Thinking of Better Ways Blog - CSDN Blog_ Classic Examples of Backtracking Algorithm .
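The "advance if you can, go back if you can't" idea can be illustrated with the N-queens puzzle. This puzzle is chosen here only as a familiar example and is not taken from the articles listed above. The function name `solve_n_queens` and the representation (`cols[r]` is the column of the queen in row r) are assumptions of the sketch.

```python
def solve_n_queens(n):
    """Return all placements of n non-attacking queens, one column index per row."""
    solutions = []
    cols = []  # cols[r] = column of the queen already placed in row r

    def safe(row, col):
        # A new queen must not share a column or a diagonal with an earlier one.
        for r, c in enumerate(cols):
            if c == col or abs(c - col) == row - r:
                return False
        return True

    def place(row):
        if row == n:
            solutions.append(cols[:])  # every row is filled: record one solution
            return
        for col in range(n):
            if safe(row, col):
                cols.append(col)   # advance: tentatively place a queen
                place(row + 1)
                cols.pop()         # go back: undo the choice and try the next column

    place(0)
    return solutions
```

For n = 4 this finds the two solutions `[1, 3, 0, 2]` and `[2, 0, 3, 1]`; for n = 8 it finds 92. Rows where no column is safe simply return without recording anything, which is the "go back" step.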

branch and bound

Comparison with backtracking

- The goal of backtracking is to find all solutions in the solution space that satisfy the constraints, whereas the goal of branch and bound is to find one solution that satisfies the constraints, or, among the solutions that satisfy the constraints, the one that maximizes or minimizes some objective function, that is, the optimal solution in a certain sense.

- Another very big difference is that the backtracking method searches the solution space in a depth-first manner, while the branch-and-bound method searches the solution space in a breadth-first manner or a minimum-cost-first manner.
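As a hedged illustration of searching in a best-first manner, the sketch below applies branch and bound to the 0/1 knapsack problem. The problem choice is an assumption made for the example and does not come from the text. The bound used is the usual fractional relaxation; the function name `knapsack_branch_and_bound`, the `(value, weight)` item format, and the requirement that all weights are positive are likewise assumptions.

```python
import heapq


def knapsack_branch_and_bound(items, capacity):
    """items: list of (value, weight) pairs with positive weights. Returns the best total value."""
    # Sort by value per unit weight so the fractional bound below is a valid upper bound.
    items = sorted(items, key=lambda vw: vw[0] / vw[1], reverse=True)
    n = len(items)

    def bound(level, value, weight):
        """Upper bound on any completion: fill greedily, allowing a fraction of one item."""
        remaining = capacity - weight
        best_possible = value
        for v, w in items[level:]:
            if w <= remaining:
                remaining -= w
                best_possible += v
            else:
                best_possible += v * remaining / w
                break
        return best_possible

    best = 0  # value of the best feasible solution found so far
    # heapq is a min-heap, so bounds are negated to pop the most promising node first.
    heap = [(-bound(0, 0, 0), 0, 0, 0)]
    while heap:
        neg_bound, level, value, weight = heapq.heappop(heap)
        if -neg_bound <= best or level == n:
            continue  # bound: this node cannot beat the best known solution
        v, w = items[level]
        # Branch 1: take the item at this level, if it fits.
        if weight + w <= capacity:
            best = max(best, value + v)
            b = bound(level + 1, value + v, weight + w)
            if b > best:
                heapq.heappush(heap, (-b, level + 1, value + v, weight + w))
        # Branch 2: skip the item at this level.
        b = bound(level + 1, value, weight)
        if b > best:
            heapq.heappush(heap, (-b, level + 1, value, weight))
    return best
```

For items `[(60, 10), (100, 20), (120, 30)]` and capacity 50 the function returns 220. Unlike backtracking, nodes are not expanded in depth-first order: the priority queue always expands the node with the highest bound, and nodes whose bound cannot beat the current best are discarded.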