Cocos Creator: AR interaction

AR interaction

AR interaction is driven mainly by the cc.ScreenTouchInteractor component, which converts touch events into gestures such as click, drag, and pinch. The interactor passes these gestures to interactable virtual objects, which then carry out the behavior each gesture triggers.

Gesture interaction

The AR gesture interactor component translates screen touches into gestures. Cocos Creator's input system passes the gesture signals to interactable objects, which change their behavior in response to the gesture events. An object can only respond to gestures if the cc.Selectable component is bound to it. For a description of this component's properties, see the interactive component Selectable.
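The idea of turning raw touches into gestures can be illustrated with a minimal sketch. This is not the cc.ScreenTouchInteractor implementation: the types, the `classifyGesture` function, and the 10-pixel threshold are all hypothetical, chosen only to show how one or two touch tracks might be mapped to click, drag, or pinch.

```typescript
// Hypothetical types for illustration; not part of the Cocos Creator API.
interface Point { x: number; y: number; }
interface TouchTrack { start: Point; end: Point; }
type Gesture = "click" | "drag" | "pinch";

// Assumed threshold: movement below this many pixels counts as "no movement".
const MOVE_THRESHOLD_PX = 10;

function distance(a: Point, b: Point): number {
  return Math.hypot(a.x - b.x, a.y - b.y);
}

// Maps one or two finished touch tracks to a gesture, or null if none matches.
function classifyGesture(tracks: TouchTrack[]): Gesture | null {
  if (tracks.length === 1) {
    // One finger: a click if it barely moved, otherwise a drag.
    const moved = distance(tracks[0].start, tracks[0].end);
    return moved <= MOVE_THRESHOLD_PX ? "click" : "drag";
  }
  if (tracks.length === 2) {
    // Two fingers: a pinch if the gap between them changed noticeably.
    const before = distance(tracks[0].start, tracks[1].start);
    const after = distance(tracks[0].end, tracks[1].end);
    return Math.abs(after - before) > MOVE_THRESHOLD_PX ? "pinch" : null;
  }
  return null;
}
```

A real interactor would also track timing and rotation; this sketch only separates the three gestures named above.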

To use the screen gesture interactor, right-click in the hierarchy manager and create XR -> Screen Touch Interactor.

Create any 3D object (a Cube is used here as an example).

Set the Cube's Scale property to (0.1, 0.1, 0.1), which gives it an actual size of 1000 cm³, and set the Position property to (0, -0.1, -0.5), which places it 50 cm away from and 10 cm below the origin of the space. Then add the component XR -> Interaction -> Selectable.
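The size figure above can be checked with a short calculation. The sketch below assumes that the default Cube has edges of 1 world unit and that 1 world unit corresponds to 1 m on the device; both are assumptions implied by the numbers in the text, and the helper names are hypothetical.

```typescript
// Assumption: the default Cube has 1-unit edges and 1 world unit = 1 m = 100 cm.
const UNIT_CUBE_EDGE_CM = 100;

// Volume in cubic centimeters of the unit cube after applying a scale.
function scaledCubeVolumeCm3(scale: [number, number, number]): number {
  const [sx, sy, sz] = scale;
  return (sx * UNIT_CUBE_EDGE_CM) * (sy * UNIT_CUBE_EDGE_CM) * (sz * UNIT_CUBE_EDGE_CM);
}

// Converts a position component from world units (meters) to centimeters.
function metersToCm(meters: number): number {
  return meters * 100;
}

// Scale (0.1, 0.1, 0.1) gives 10 cm edges, so roughly 1000 cm³.
const cubeVolumeCm3 = scaledCubeVolumeCm3([0.1, 0.1, 0.1]);

// Position (0, -0.1, -0.5): 10 cm along -Y and 50 cm along -Z.
const offsetBelowCm = metersToCm(0.1);
const offsetAwayCm = metersToCm(0.5);
```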

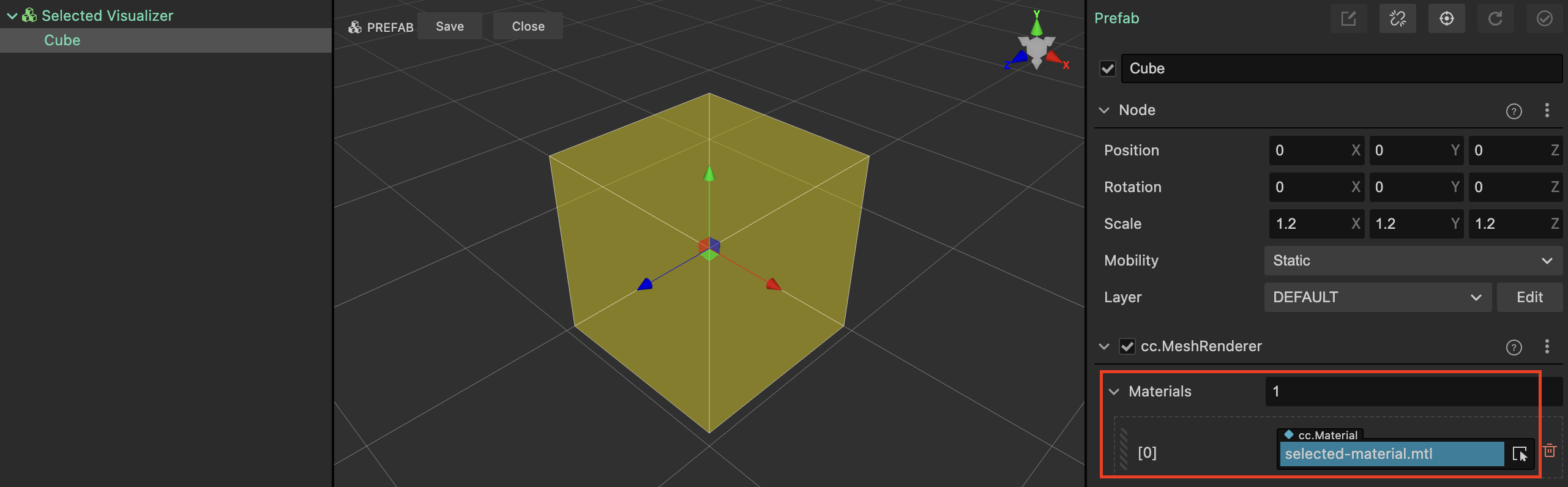

Next, create the selected effect. Create a prefab in the resource folder and name it Selected Visualizer.

Create the same Cube object under the root node of the prefab, and set its Scale to 1.2 times that of the parent node.

Create a new material that highlights the selected state.

When adjusting the material, it is recommended to select builtin-unlit for Effect and 1-transparent for Technique.

After the material is created, apply it to the cc.MeshRenderer of the Cube in the prefab to complete the creation of the selected effect.

Finally, apply the prefab to the Selected Visualization property of cc.Selectable.

At runtime, gestures can be combined to move, rotate, and scale the virtual object.

Placement

When the screen gesture interactor is in use, the device's AR Hit Test capability is enabled. The screen touch position is converted into a ray cast from the camera, and the ray is tested for collision against an AR Plane to obtain the position of the collision point. A virtual object is then placed at that position on the plane. A prefab can only be placed if the cc.Placeable component is mounted on it.
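The collision step can be sketched as a ray-plane intersection. This is a generic geometric illustration, not the AR SDK's hit test: the `Vec3` type and the `hitTestPlane` function are hypothetical, and the plane is treated as infinite, whereas a detected AR Plane has a bounded extent.

```typescript
// Hypothetical vector type for illustration; not the cc.Vec3 class.
interface Vec3 { x: number; y: number; z: number; }

function dot(a: Vec3, b: Vec3): number {
  return a.x * b.x + a.y * b.y + a.z * b.z;
}

function sub(a: Vec3, b: Vec3): Vec3 {
  return { x: a.x - b.x, y: a.y - b.y, z: a.z - b.z };
}

// Returns the point where the ray hits the plane, or null if it never does.
// The plane is given by any point on it and its normal.
function hitTestPlane(
  rayOrigin: Vec3,
  rayDirection: Vec3,
  planePoint: Vec3,
  planeNormal: Vec3,
): Vec3 | null {
  const denom = dot(rayDirection, planeNormal);
  if (Math.abs(denom) < 1e-6) {
    return null; // Ray is parallel to the plane.
  }
  const t = dot(sub(planePoint, rayOrigin), planeNormal) / denom;
  if (t < 0) {
    return null; // Plane is behind the ray origin.
  }
  return {
    x: rayOrigin.x + rayDirection.x * t,
    y: rayOrigin.y + rayDirection.y * t,
    z: rayOrigin.z + rayDirection.z * t,
  };
}

// Example: a camera 1 m above a floor plane at y = 0, looking forward and down.
const hit = hitTestPlane(
  { x: 0, y: 1, z: 0 },
  { x: 0, y: -1, z: -1 },
  { x: 0, y: 0, z: 0 },
  { x: 0, y: 1, z: 0 },
);
```

The returned point is where a placeable prefab would be positioned in this simplified model.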

Taking the Selectable object created in the scene above as an example, the following steps give it the ability to be placed and interacted with.

Select the Cube object in the scene, and add the component XR -> Interaction -> Placeable to it.

Drag this scene node into the resource manager to generate a prefab, and delete the Cube object in the scene.

Assign the newly generated Cube prefab to the Screen Touch Interactor -> Place Action -> Placement Prefab property, and select AR_HIT_DETECTION for Calculation Mode.

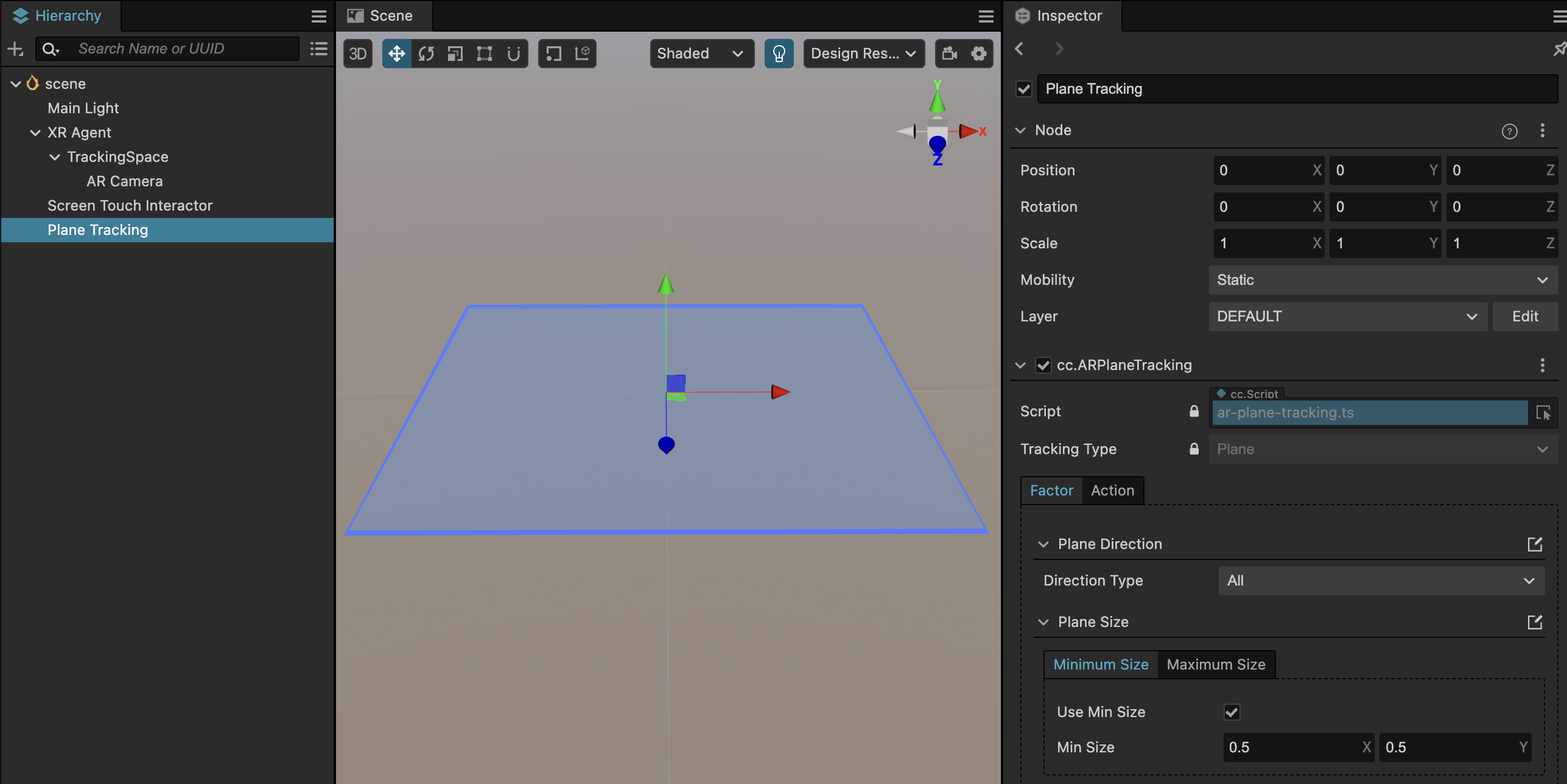

The position calculation for placed objects depends on AR Plane, so a Plane Tracking node must be created to request that the device activate the plane recognition capability of the AR SDK. In the editor's hierarchy manager, right-click and create XR -> Plane Tracking to add a plane proxy node.

Once all the work is done, build and publish the project to see the placement at runtime.