Article Directory

foreword

This article mainly introduces the earlier work of evaluating SAM in the field of medical images after Segment Anything Model has made remarkable achievements in the field of natural image segmentation.

Original paper link: Segment Anything Model for Medical Image Analysis: an Experimental Study

1. Abstraction & Introduction

1.1. Abstraction

Segment Anything Model (SAM)is a base model trained on more than 1 billion annotations (mainly natural images) designed to interactively segment user-defined objects of interest. Despite the impressive performance of the model on natural images, it is unclear how the model would be affected when turned to the domain of medical images.

This paper SAMpresents an extensive evaluation of the ability to segment medical images on 11 medical imaging datasets from different modalities and anatomies. Studies have shown that SAMthe performance of , varies by task and dataset, with impressive performance on some datasets but poor to moderate performance on others.

1.2. Introduction

Developing and training segmentation models for new medical imaging data and tasks is actually challenging because collecting and curating medical images is expensive and time-consuming, and requires careful mask annotation of images by experienced physicians. Base models and zero-shot learning can significantly reduce these difficulties by using neural networks trained on large amounts of data without using traditional supervised training labels.

1.2.1. What is SAM?

Segment Anything Modelis a segmentation model whose purpose is to segment a user-defined object of interest when prompted. Hints can be in the form of a point, a set of points (including the entire mask), a bounding box, or text. The model is required to return valid segmentation masks even when the hint is ambiguous.

For an introduction to the Segment Anything Model, please refer to my other blog: SAM [1]: Segment Anything

1.2.2. How to segment medical images with SAM?

It is technically SAMpossible to run without prompts, but it is not expected to be useful in medical imaging. This is because medical images often have many specific objects of interest in the image, and models need to be trained to recognize these objects.

2. Methodology

2.1. SAM is used in the process of segmentation of medical images

2.1.1. Semi-automated annotation

Manually annotating medical images is one of the main challenges in developing segmentation models in this field, as it often requires doctors to spend valuable time. In this case, SAMit can be used as a tool to speed up labeling.

In the simplest case, a human user SAMprovides a hint for , SAMwhich generates a mask for user approval or modification; in another approach, SAMthe hint is given as a grid across the image and masks are generated for multiple objects , which can then be named, selected, or modified by the user.

2.1.2. SAM assisting other segmentation models

SAMAutomatically segment images together with another algorithm to make up for the SAMinability to understand the lack of semantic information of segmented objects. For example, SAMbased on point cues distributed over an image, multiple object masks can be generated, which are then classified as specific objects by a separate classification model. Likewise, a stand-alone detection model can generate object bounding boxes for images as SAMcues for generating accurate segmentation masks.

In addition, in the process of training the semantic segmentation model, it can be SAMused cyclically with the semantic segmentation model. For example, during training, the masks generated by a segmentation model on unlabeled images can be used as SAMhints to generate more accurate masks for those images, which can be used as an iterative improvement of the model being trained with supervised training example.

2.1.3. New medical image foundation segmentation models

The development process of new medical image-based segmentation models can be SAMguided by the development process of ; alternatively, fine-tuning on medical images and masks from various medical imaging domains SAM, rather than training from scratch, as this may require less image.

2.2. Experiments

2.2.1. Settings

SAMEvaluation is done by creating one or more cues for each object and evaluating the accuracy of the generated masks relative to the ground truth mask annotations for a given dataset and task. Meanwhile, we always use the most confident mask generated by SAM for a given hint.

Choose mIoUas the evaluation metric. However, empirical studies have shown that SAMthe performance of A can vary greatly across different categories of the same image. Therefore, this paper Ntransforms the multi-class prediction problem of n categories into Na binary classification problem, so IoUit is enough to use t as the final evaluation metric.

2.2.2. Prompt Point Generation Scheme

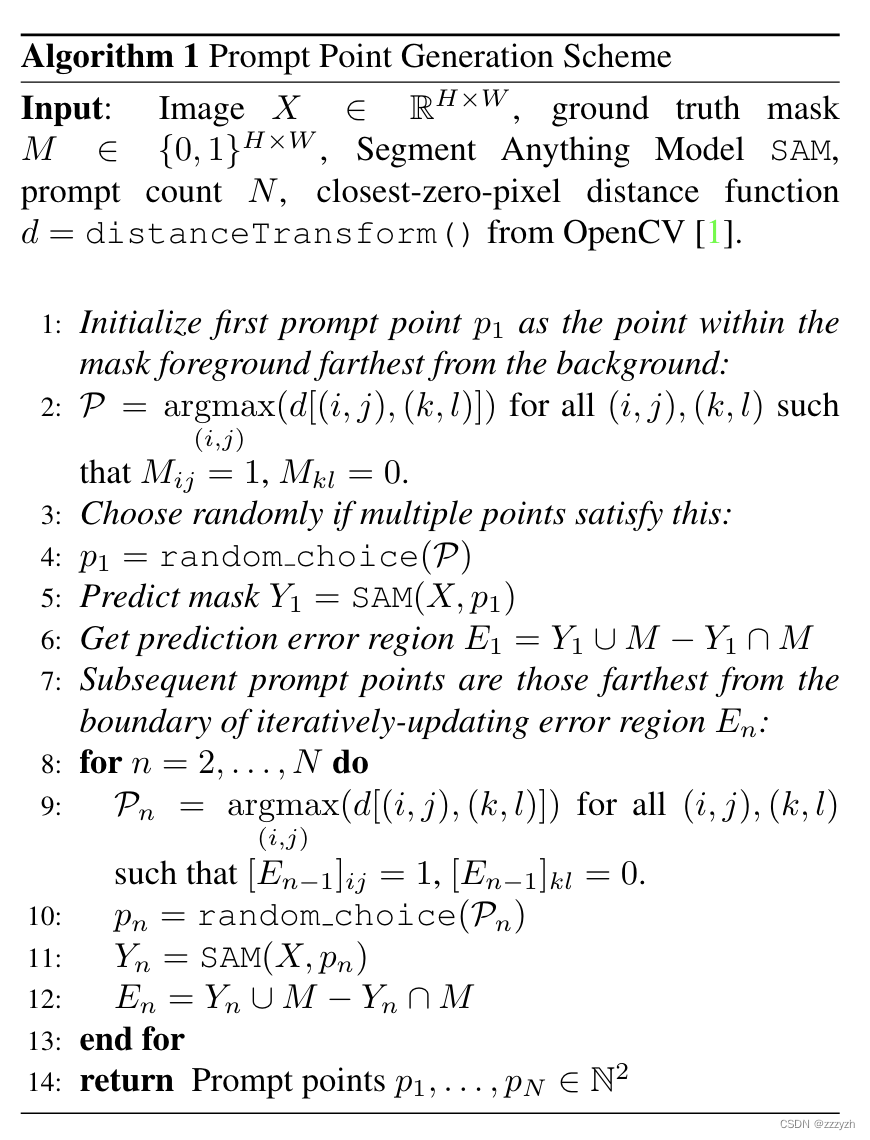

This paper uses a general and intuitive strategy to simulate the generation of realistic point hints, which reflects how users can interactively generate hints, and the implementation details are shown in the following figure:

Generate logic:

- It mainly simulates people's thinking when selecting points: when selecting points, users usually choose points from the center of the most obviously wrong or most correct place. In theory, this can cover the part that users need as much as possible.

- Put the first cue point p 1 p1p 1 is initialized to the point farthest from the background in the foreground of the mask

- That is, the first cue point p 1 p1p 1 is a positive prompt

- It should be noted that

SAMa positive prompt is required to determine the object to be segmented, soSAMthe first point prompt passed in must be a positive prompt

- By the formula P = argmax ( i , j ) ( d [ ( i , j ) , ( k , l ) ] ) \mathcal{P} = argmax_{(i, j)} (d[(i, j), ( k, l)])P=argmax(i,j)(d[(i,j),(k,l )]) get a set of points that meet the above conditions

- Randomly select a qualified point from the above qualified point set as the first input

- Enter the coordinates of the point prompt

SAMto get a mask with the highest prediction score Y 1 Y_1Y1 - Get the region where the prediction is wrong: E 1 = Y 1 ∪ M − Y 1 ∩ M E_1 = Y_1 \cup M - Y_1 \cap ME1=Y1∪M−Y1∩M

- Y 1 ∪ M Y_1 \cup M Y1∪M : The union of the area covered by the predicted mask in the original mask and the foreground point set

- Y 1 ∩ M Y_1 \cap M Y1∩M : the intersection of the area covered by the predicted mask in the original mask and the foreground point set

- E 1 E_1E1: The predicted mask and the part of the foreground point set that are not correctly predicted, that is, the predicted error area

- The subsequent point prompt is the distance iterative update error area E n E_nEnpoint farthest from border

SAMOnly one point prompt can be accepted at a time, and a prediction mask is returned, that is, multiple point prompts areSAMa process of iterating input on the basis of each output prediction mask- Each iteration gets an error region E n E_nEnThe set of points farthest from the boundary

- Randomly select a point from the point set as input

- Get

SAMthe predicted output of - Update Forecast Error Region

- Get the final prediction result

Summarize

This paper is mainly SAMbased on the proposal, and SAMmade an evaluation on whether it can be applied in medical image segmentation. For specific data sets and results, please refer to the result graph in the original paper.

This note focuses on SAMthe application in medical image segmentation and the learning and understanding of fine-tuning. At the same time, it analyzes and records the point prompt generation algorithm proposed in this paper with higher efficiency and more simulated user point selection habits.

But at the same time, this article does not study 3D data sets, but 3D data is very common in the field of medical images, which will also be a key direction of future research.