Source: Jiqizhixin (机器之心)

This article is about 3,300 characters long; suggested reading time is 5 minutes.

This method builds 3D models efficiently and with a high level of detail.

Highly realistic, accurate, and controllable 3D face modeling is one of the key problems in building digital humans. Existing mesh-based face modeling methods require trained professionals to work with complex software and invest considerable time and effort, and even then realistic face rendering is hard to achieve.

Neural radiance fields (NeRF), a newer 3D representation, can synthesize realistic results, but how to precisely control and modify those results to achieve high-quality 3D face synthesis remains an open problem.

Recently, researchers proposed SketchFaceNeRF [1], a line-drawing-based method for generating and editing 3D facial neural radiance fields. The paper was published at SIGGRAPH 2023, the top conference in computer graphics, and included in ACM Transactions on Graphics, a top graphics journal. With this system, users can freely create 3D faces from line drawings, even if they do not know how to use complex 3D software. Let's take a look at faces created with SketchFaceNeRF:

Figure 1: Generating a highly realistic 3D human face using line art.

Furthermore, given a 3D face, the user can add edits from any viewing angle:

Figure 2 Editing 3D faces at any angle using line drawings.

Part 1 Background

AI painting has recently become very popular. Methods such as Stable Diffusion [2] and ControlNet [3] can generate highly realistic 2D images from a text prompt. However, these methods cannot generate high-quality 3D models, and text alone gives little control over the details of the result. Although ControlNet already supports line-drawing control, precisely modifying a local region of the generated result remains very difficult.

With the development of neural radiance fields [4] and generative adversarial networks [5], existing methods such as EG3D [6] have achieved high-quality generation and fast rendering of 3D face models. However, these generative models only support random sampling of faces and offer no control over the result. IDE-3D [7] and NeRFFaceEditing [8] edit 3D faces using semantic label maps, but such methods struggle to provide finer control, for example over the structural details of hair and wrinkles. Moreover, it is hard for users to draw a complex semantic map from scratch, so these methods cannot create a 3D face model from nothing.

Line drawings are a friendlier form of interaction and have already been used for 2D face image generation [9] and editing [10]. However, using line drawings for 3D face generation raises the following problems. First, line drawings vary widely in style and are very sparse, which already makes 2D image generation difficult and 3D model generation harder still. Second, for 3D faces, users often add edits from arbitrary viewing angles, and producing effective editing results while maintaining 3D consistency is a problem that needs to be solved.

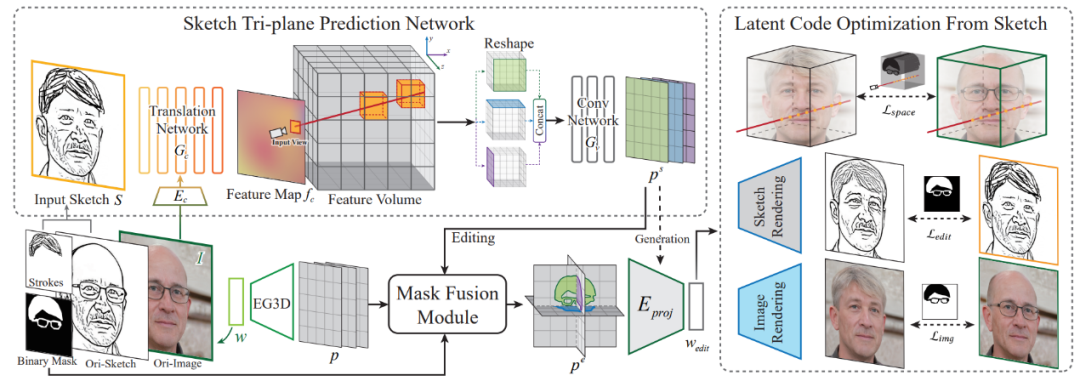

To address these challenges, SketchFaceNeRF adopts the tri-plane representation: it predicts the tri-plane features of the face directly from the line drawing and projects them into the latent space of the generative model to obtain a high-quality face NeRF. Multi-view 3D face editing is formulated as an optimization problem. The initial value is predicted by locally fusing and projecting the tri-planes, and the latent code is then optimized against line-drawing and image constraints to obtain high-quality face NeRF editing results.

Part 2 SketchFaceNeRF Algorithm Principles

Figure 3 SketchFaceNeRF network architecture diagram, generation process.

Controllable Face NeRF Generation

If a single-view hand-drawn line drawing is projected directly into the latent space of the generative model, the synthesized face corresponds poorly to the input and is of low quality. The reason is that the gap between a sparse 2D line drawing and a 3D face is too large, and hand-drawn line drawings come in many styles. To solve these problems, the work proposes a progressive dimension-lifting mapping. Since the input line drawing contains only sparse geometric information while 3D faces vary in appearance, adaptive instance normalization (AdaIN [11]) is first used to convert the input line drawing into a color feature map, injecting color, lighting, and texture information.
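To make the AdaIN step concrete, here is a minimal sketch of the operation itself as defined in [11]: each content channel is re-normalized to the mean and standard deviation of the matching style channel. The toy lists, the function names, and the `eps` value are assumptions for illustration only; the article does not describe how SketchFaceNeRF wires AdaIN into its network.

```python
import math

def channel_stats(channel, eps=1e-5):
    """Return (mean, std) of one channel given as a flat list of values."""
    mean = sum(channel) / len(channel)
    var = sum((v - mean) ** 2 for v in channel) / len(channel)
    return mean, math.sqrt(var + eps)

def adain(content, style, eps=1e-5):
    """Re-normalize each content channel to the style channel's mean and std.

    content, style: lists of channels (hypothetical toy layout, not the
    tensor layout of any real implementation).
    """
    out = []
    for c_chan, s_chan in zip(content, style):
        c_mean, c_std = channel_stats(c_chan, eps)
        s_mean, s_std = channel_stats(s_chan, eps)
        out.append([s_std * (v - c_mean) / c_std + s_mean for v in c_chan])
    return out

# Toy data: two channels of sketch features and of appearance features.
sketch_features = [[0.0, 1.0, 0.0, 1.0], [2.0, 2.0, 4.0, 4.0]]
appearance_features = [[10.0, 20.0, 30.0, 40.0], [-1.0, 0.0, 0.0, 1.0]]
stylized = adain(sketch_features, appearance_features)
```

After the call, each channel of `stylized` keeps the spatial pattern of the sketch features but carries the statistics of the appearance features, which is the sense in which appearance information is injected.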

Next, since the 2D input lacks 3D information, the algorithm constructs a 3D feature volume in the volume rendering space: each 3D point in the space is projected onto the 2D feature map and the corresponding feature is retrieved. Finally, the 3D volume is reshaped along the x, y, and z axes, and a 2D convolutional network produces the tri-plane feature maps from the result. To generate a high-quality face NeRF, the tri-planes are back-projected into the latent space of the generative model, yielding the latent representation of the face NeRF model.
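The lifting from a 2D feature map to three planes can be illustrated with a deliberately simplified sketch. Two assumptions are made here that are not in the article: the camera is orthographic and frontal, so a voxel at (x, y, z) simply looks up pixel (y, x), and a plain average along one axis stands in for the learned 2D convolutional network. All function names are hypothetical.

```python
def lift_to_voxels(feature_map, size):
    """Fill a size^3 grid by projecting each voxel onto the 2D feature map.

    Assumption: orthographic frontal view, so every depth z receives the
    feature stored at pixel (y, x). Result is indexed as voxels[z][y][x].
    """
    return [[[feature_map[y][x] for x in range(size)]
             for y in range(size)]
            for z in range(size)]

def collapse(voxels, axis):
    """Collapse one axis of the grid into a 2D plane by averaging.

    Stand-in for the learned 2D convolution applied to the reshaped volume.
    """
    n = len(voxels)
    plane = [[0.0] * n for _ in range(n)]
    for z in range(n):
        for y in range(n):
            for x in range(n):
                v = voxels[z][y][x] / n
                if axis == "z":
                    plane[y][x] += v   # xy plane
                elif axis == "y":
                    plane[z][x] += v   # xz plane
                else:
                    plane[z][y] += v   # yz plane
    return plane

# Toy single-channel 2x2 feature map.
feature_map = [[1.0, 2.0], [3.0, 4.0]]
voxels = lift_to_voxels(feature_map, size=2)
triplane = {axis: collapse(voxels, axis) for axis in ("z", "y", "x")}
```

With this toy input, the xy plane reproduces the input map, while the xz and yz planes hold column and row averages. In the real system the learned network, not an average, decides what each plane encodes.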

Training proceeds in two steps. First, EG3D is used to construct multi-view training data. After the tri-planes are predicted from an input line drawing, the original EG3D rendering network generates images from other viewpoints, and the ground-truth images serve as supervision to train the line-drawing tri-plane prediction network. Then, with the weights of that prediction network frozen, the projection network is trained to project the tri-plane features into the latent space of EG3D.

Figure 4 SketchFaceNeRF network architecture diagram, editing process.

Precise Face NeRF Editing

To support line-drawing face editing from any viewing angle, this work proposes a 3D-consistent method for rendering face line drawings. It adds a line-drawing generation branch to EG3D that shares the StyleGAN backbone with the image generation branch but has its own decoder and super-resolution module. Training is supervised with ground-truth line drawings, and a regularization term constrains the line drawings to be consistent across viewing angles.

Starting from the rendered 3D-consistent line drawing, the user modifies a local region and draws new lines. Because a single-view line drawing suffers from occlusion and similar problems, it cannot represent the complete original 3D information, so direct inference struggles to keep non-edited regions consistent before and after editing.

To this end, fine-grained face NeRF editing is formulated as an optimization problem. The work first proposes an initial value prediction method: the line-drawing tri-plane prediction network shared with the generation process directly predicts the tri-plane features corresponding to the edited line drawing. To keep the non-edited region unchanged, the tri-planes generated from the line drawing are then fused with the original tri-plane features, and the encoding network shared with the generation process back-projects the fused tri-planes into the latent space of the generative model, yielding the initial value for face editing.
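One simple way to picture the fusion step is a mask-weighted blend of the two feature planes. This is only a sketch under an assumption: the article says the predicted and original tri-planes are fused locally but does not give the formula, so the linear blend and the names `fuse_plane` and `edit_mask` are hypothetical.

```python
def fuse_plane(original, predicted, mask):
    """Blend two feature planes element by element.

    mask = 1 takes the feature predicted from the edited line drawing,
    mask = 0 keeps the original feature, and values in between mix them.
    """
    return [[m * p + (1.0 - m) * o
             for o, p, m in zip(o_row, p_row, m_row)]
            for o_row, p_row, m_row in zip(original, predicted, mask)]

# Toy 2x2 single-channel plane; only the right column is being edited.
original = [[1.0, 1.0], [1.0, 1.0]]
predicted = [[5.0, 6.0], [7.0, 8.0]]
edit_mask = [[0.0, 1.0], [0.0, 0.5]]
fused = fuse_plane(original, predicted, edit_mask)
```

In this toy case the left column stays at its original value, the top-right entry takes the predicted value, and the bottom-right entry is an even mix. The same blend would be applied to each of the three planes.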

Furthermore, an inverse optimization method is proposed to achieve fine-grained editing of the 3D face. Specifically, the algorithm renders a synthetic line drawing through the line-drawing generation branch and measures its similarity to the hand-drawn line drawing in the edited region. Meanwhile, in the non-edited region, the image generation branch renders the face image and its similarity to the original image is measured. To ensure spatial consistency before and after editing, the features of the ray sampling points in the non-edited region are further constrained to remain the same. Under these constraints, the latent code is optimized to achieve fine-grained face editing.
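The three constraints can be combined into a single objective, sketched below on flat toy lists. The article does not specify the similarity measures or the weighting, so the masked mean squared error, the weighted sum, the reuse of the pixel mask for the feature term, and all names here are assumptions for illustration.

```python
def masked_mse(a, b, mask):
    """Mean squared error restricted to entries where mask is non-zero."""
    weight = sum(mask)
    if weight == 0:
        return 0.0
    return sum(m * (x - y) ** 2 for x, y, m in zip(a, b, mask)) / weight

def editing_objective(sketch_render, sketch_user, image_render, image_orig,
                      feats_render, feats_orig, edit_mask,
                      weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the three constraints described in the text."""
    keep_mask = [1.0 - m for m in edit_mask]
    # Edited region: rendered line drawing should match the user's drawing.
    sketch_term = masked_mse(sketch_render, sketch_user, edit_mask)
    # Non-edited region: rendered image should match the original image.
    image_term = masked_mse(image_render, image_orig, keep_mask)
    # Non-edited region: sampled point features should stay unchanged.
    feature_term = masked_mse(feats_render, feats_orig, keep_mask)
    w_s, w_i, w_f = weights
    return w_s * sketch_term + w_i * image_term + w_f * feature_term

# Toy example with four "pixels"; the first two are in the edited region.
loss = editing_objective(
    sketch_render=[0.0, 1.0, 0.0, 0.0], sketch_user=[1.0, 1.0, 0.0, 1.0],
    image_render=[0.2, 0.2, 0.5, 0.5], image_orig=[0.9, 0.9, 0.5, 1.5],
    feats_render=[0.0, 0.0, 2.0, 2.0], feats_orig=[3.0, 3.0, 2.0, 4.0],
    edit_mask=[1.0, 1.0, 0.0, 0.0], weights=(1.0, 1.0, 0.5),
)
```

In an actual system the rendered quantities would be differentiable functions of the latent code, and an optimizer would adjust that code to reduce this value. The sketch only shows how the edited and non-edited regions enter different terms.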

Part 3 Results

As shown in Figure 5, given a hand-drawn line drawing, this method generates a high-quality facial neural radiance field. Selecting different appearance reference images specifies the appearance of the generated face. Users can freely change the viewing angle and obtain high-quality rendering results.

Figure 5 The 3D face generated based on the line drawing.

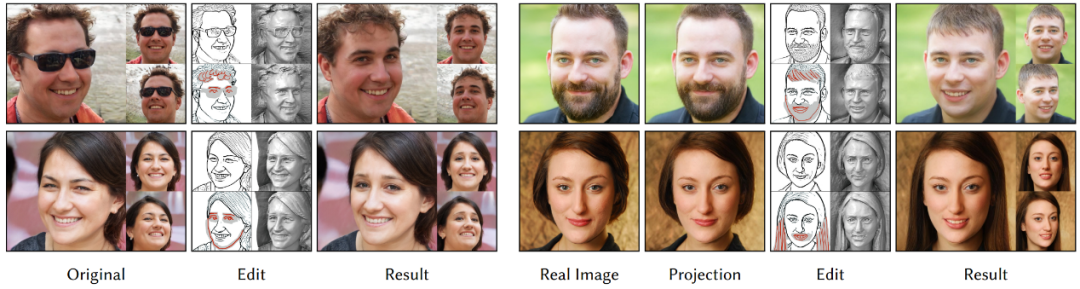

As shown in Figure 6, given a 3D face, the user can choose any viewing angle and modify the rendered line drawing to edit the face NeRF. The left side shows edits to randomly generated faces. The right side shows a given face image that is first back-projected into the face generative model and then edited.

Figure 6 3D face editing results based on line drawings.

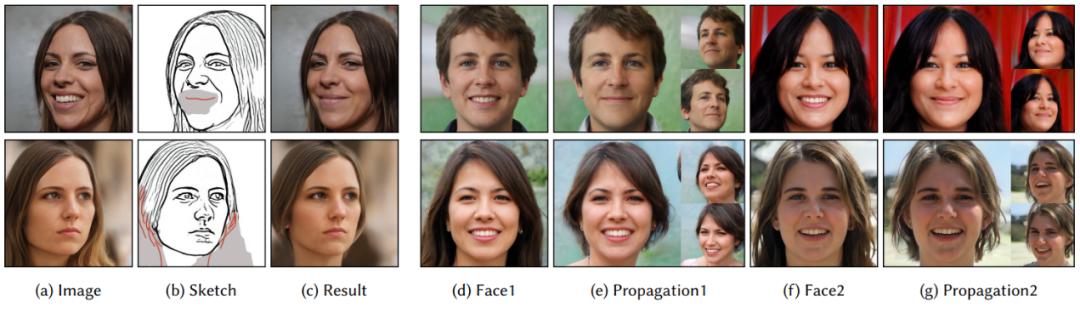

As shown in Figure 7, the user can apply successive edits to a face NeRF from different viewing angles. The method produces good editing results, and the features of the non-edited 3D regions are well preserved.

Figure 7 Continuous editing operations on faces based on line drawings.

As shown in Figure 8, thanks to the well-behaved latent space of the generative model, once an edit has been applied to a specific person, the difference between the latent codes before and after the edit can be computed and used as an edit vector. In some cases this vector can be applied directly to other people to obtain a similar editing effect.

Figure 8 Edit propagation results: the effect of the editing operation on the left can be propagated to the face on the right.
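The edit propagation described above amounts to simple vector arithmetic on latent codes. The sketch below uses short toy lists in place of real latent codes; the function names and the `strength` parameter are hypothetical additions for illustration.

```python
def edit_vector(code_before, code_after):
    """Difference between the latent codes after and before an edit."""
    return [after - before for before, after in zip(code_before, code_after)]

def apply_edit(code, delta, strength=1.0):
    """Shift another latent code along the edit vector.

    strength is a hypothetical scaling factor, not part of the article.
    """
    return [c + strength * d for c, d in zip(code, delta)]

# Toy 3-dimensional latent codes.
person_a_before = [0.5, -1.0, 2.0]
person_a_after = [0.5, 0.0, 1.5]
delta = edit_vector(person_a_before, person_a_after)

person_b = [1.0, 1.0, 1.0]
person_b_edited = apply_edit(person_b, delta)
```

As the article notes, this transfer only works in some cases, since it relies on the latent space behaving similarly around both faces.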

Part 4 Conclusion

With the rapid development of artificial intelligence, many new AI painting methods have emerged. Compared with generating 2D images, generating 3D digital content is a more challenging problem. SketchFaceNeRF provides a feasible solution: based on hand-drawn line drawings, users can generate high-quality face models and edit them at a fine-grained level from any viewing angle.

With this system, there is no need to install complicated 3D modeling software, learn complicated skills, or spend hours of time and effort. Just by sketching simple lines, ordinary users can easily build the face model they have in mind and get high-quality rendering results.

SketchFaceNeRF has been accepted by ACM SIGGRAPH 2023 and will be published in the journal ACM Transactions on Graphics.

SketchFaceNeRF is currently available as an online service. The online system is supported by MLOps, the Information High-Speed Rail training and inference platform of the Institute of Computing Technology, Chinese Academy of Sciences, and engineering support for the online deployment is provided by the Nanjing Institute of Information and High-speed Railway, Chinese Academy of Sciences.

Links to online services:

http://geometrylearning.com/SketchFaceNeRF/interface

For more details about the paper, and downloads of papers, videos, and codes, please visit the project homepage:

http://www.geometrylearning.com/SketchFaceNeRF/

See open source code:

https://github.com/IGLICT/SketchFaceNeRF

References:

[1] Lin Gao, Feng-Lin Liu, Shu-Yu Chen, Kaiwen Jiang, Chunpeng Li, Yu-Kun Lai, and Hongbo Fu. SketchFaceNeRF: Sketch-based Facial Generation and Editing in Neural Radiance Fields. ACM TOG, 2023.

[2] Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. High-Resolution Image Synthesis with Latent Diffusion Models. CVPR, 2022.

[3] Lvmin Zhang and Maneesh Agrawala. Adding Conditional Control to Text-to-Image Diffusion Models. arXiv, 2023.

[4] Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, and Ren Ng. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. Commun. ACM 65, 1 (Dec. 2021), 99–106.

[5] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative Adversarial Nets. In Advances in Neural Information Processing Systems, Vol. 27, Z. Ghahramani, M. Welling, C. Cortes, N. Lawrence, and K.Q. Weinberger (Eds.). Curran Associates, Inc., 2014.

[6] Eric R. Chan, Connor Z. Lin, Matthew A. Chan, Koki Nagano, Boxiao Pan, Shalini de Mello, Orazio Gallo, Leonidas Guibas, Jonathan Tremblay, Sameh Khamis, Tero Karras, and Gordon Wetzstein. Efficient Geometry-Aware 3D Generative Adversarial Networks. CVPR, 2022.

[7] Jingxiang Sun, Xuan Wang, Yichun Shi, Lizhen Wang, Jue Wang, and Yebin Liu. IDE-3D: Interactive Disentangled Editing for High-Resolution 3D-Aware Portrait Synthesis. ACM TOG, 2022.

[8] Kaiwen Jiang, Shu-Yu Chen, Feng-Lin Liu, Hongbo Fu, and Lin Gao. NeRFFaceEditing: Disentangled Face Editing in Neural Radiance Fields. SIGGRAPH Asia, 2022.

[9] Shu-Yu Chen, Wanchao Su, Lin Gao, Shihong Xia, and Hongbo Fu. DeepFaceDrawing: Deep Generation of Face Images from Sketches. ACM TOG, 2020.

[10] Shu-Yu Chen, Feng-Lin Liu, Yu-Kun Lai, Paul L. Rosin, Chunpeng Li, Hongbo Fu, and Lin Gao. DeepFaceEditing: Deep Face Generation and Editing with Disentangled Geometry and Appearance Control. ACM TOG, 2021.

[11] Xun Huang and Serge Belongie. Arbitrary Style Transfer in Real-Time with Adaptive Instance Normalization. CVPR, 2017.

Editor: Wen Jing