
How much potential does AI have? "Mind reading" may now be arriving: without a person saying a word, AI can tell what they are thinking. More importantly, this is the first time that AI has learned to "read minds" through a non-invasive method.


The study comes from a team at the University of Texas at Austin and has been published in the journal Nature Neuroscience. The team developed a GPT-based decoder that can convert brain activity into a continuous stream of text, which may offer patients who cannot speak a new way to communicate with the outside world.

According to the reported experimental results, the accuracy of the GPT-based model on perceived speech can reach as high as 82%, which is remarkable.

1

The Exploration of "Mind Reading"

In fact, the exploration of "mind reading" in the technology circle has not just started recently.

Neuralink, the neurotechnology company founded by Musk, has also been looking for ways to implement brain-computer interfaces efficiently. It cooperated with the University of California, Davis, on experiments in which monkeys controlled computers with their brains, with the eventual aim of implanting chips into the brain and using "filaments" to detect neuronal activity.

However, it is worth noting that Neuralink's solution is invasive. Invasive means that the brain-computer interface is implanted directly into the gray matter of the brain, so the quality of the neural signals obtained is relatively high. The disadvantage of this method is that it can easily trigger an immune reaction and scar tissue, which causes the signal quality to decline or even disappear.

In contrast, a non-invasive brain-computer interface is a human-computer interaction technology that establishes direct communication between the human brain and external devices without implantation, and it has the advantages of convenient operation and low risk.

Previously, researchers could capture crude, colorful snapshots of human brain activity with functional magnetic resonance imaging (fMRI). While this particular type of MRI has transformed cognitive neuroscience, it has never been a mind-reading machine: neuroscientists cannot use a brain scan to tell what someone is seeing, hearing or thinking in a scanner.

Since then, neuroscientists have hoped that non-invasive techniques such as fMRI can be used to decipher the voices inside the human brain without surgery.

Now, with the release of the paper "Semantic reconstruction of continuous language from non-invasive brain recordings" (https://www.nature.com/articles/s41593-023-01304-9.epdf), lead author Jerry Tang and colleagues show that combining fMRI's ability to detect neural activity with the predictive power of AI language models makes it possible to recreate, with striking accuracy, the stories people hear or imagine in a scanner. The decoder can even guess the story behind a clip someone is viewing in the scanner; the accuracy is lower, but it is still a big improvement. This also means that the AI system can decode thoughts in the brain without implanting any device in the participants.

2

How did the AI know what it hadn't said?

In the few months since the release of ChatGPT and GPT-4, we have watched large models continuously generate content from prompts.

As for how the AI system understands thoughts in the human brain, the researchers explain in the paper that participants first listen to new stories while functional magnetic resonance imaging (fMRI) records the activity of their brains. The newly developed semantic decoder then generates candidate word sequences from this activity. For each candidate, it compares the brain response predicted for that sequence with the brain response actually recorded, and uses the degree of similarity to judge how likely the candidate is to match the actual word sequence. The decoded result is finally checked against the actual words to see how accurate it is and whether it can "read minds".

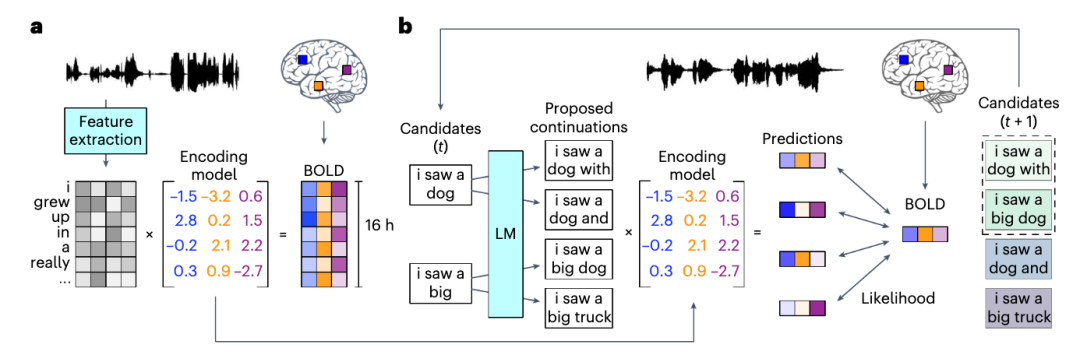

Specifically, to collect data on brain activity, the researchers had subjects listen to audio stories inside an fMRI scanner while the scanner recorded how their brains responded to the words. As shown in Figure a, the researchers recorded the fMRI responses of 3 subjects as they listened to 16 hours of narrated stories.

The MRI data is then sent to a computer system. In the process, the researchers used a decoding framework based on Bayesian statistics. The large language model GPT-1 helps in the natural language processing part of the system. Since this neural language model is trained on a large dataset of natural English word sequences, it excels at predicting the most likely word.

Next, the researchers trained an encoding model on this dataset. At test time, as shown in panel b, subjects listen to test stories that were not used for model training, and their brain responses are recorded for decoding.
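An encoding model of this kind predicts brain responses from features of the words being heard. The following is a minimal sketch, assuming a linear model fit by ridge regression; the article does not say what form the model takes, and the toy feature values, the three "voxels", and the names `fit_ridge` and `predict` are all hypothetical:

```python
def fit_ridge(X, Y, alpha=1.0):
    """Fit weights W (features x voxels) minimizing ||XW - Y||^2 + alpha * ||W||^2."""
    d, v = len(X[0]), len(Y[0])
    # Normal equations: (X^T X + alpha * I) W = X^T Y
    A = [[sum(x[i] * x[j] for x in X) + (alpha if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    B = [[sum(x[i] * y[k] for x, y in zip(X, Y)) for k in range(v)]
         for i in range(d)]
    # Solve with Gauss-Jordan elimination on the augmented matrix [A | B].
    M = [A[i] + B[i] for i in range(d)]
    for c in range(d):
        pivot_row = max(range(c, d), key=lambda r: abs(M[r][c]))
        M[c], M[pivot_row] = M[pivot_row], M[c]
        pivot = M[c][c]
        M[c] = [m / pivot for m in M[c]]
        for r in range(d):
            if r != c:
                factor = M[r][c]
                M[r] = [a - factor * b for a, b in zip(M[r], M[c])]
    return [row[d:] for row in M]


def predict(W, x):
    """Predict one response per voxel for a single feature vector x."""
    return [sum(xi * W[i][k] for i, xi in enumerate(x)) for k in range(len(W[0]))]


# Toy training set (hypothetical): 2 word features -> 3 voxel responses.
X_TRAIN = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
W_TRUE = [[1.0, -2.0, 0.5], [0.0, 3.0, 1.0]]
Y_TRAIN = [predict(W_TRUE, x) for x in X_TRAIN]

W_FIT = fit_ridge(X_TRAIN, Y_TRAIN, alpha=1e-9)
PREDICTED = predict(W_FIT, [1.0, 2.0])  # response to features not seen in training
```

With noise-free toy data and a very small `alpha`, the fitted weights land close to `W_TRUE`; with real recordings, a larger `alpha` would typically be chosen to control overfitting.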

In turn, the semantic decoder generates word sequences from participants' brain activity: a language model (LM) proposes continuations for each candidate sequence, and the encoding model scores the likelihood of the recorded brain responses under each continuation.

In simple terms, the semantic decoder learns to match specific brain activity with a specific stream of words. It then tries to reproduce the stories from the matched word stream.
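This scoring can be summarized with Bayes' rule. The notation here ($S$ for a candidate word sequence, $R$ for the recorded brain responses) is introduced for illustration and does not appear in the article:

$$
P(S \mid R) \;\propto\; P(R \mid S)\, P(S)
$$

Under this reading, $P(S)$ is the prior supplied by the language model, $P(R \mid S)$ is the likelihood supplied by the encoding model, and the decoder searches for the sequence $S$ with the highest product of the two.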
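The propose-and-score loop can be sketched as a small beam search. This is a toy illustration under stated assumptions, not the team's code: the bigram table `LM`, the per-word response table `ENCODE`, the Gaussian scoring in `log_likelihood`, and the sample `RESPONSES` are all hypothetical stand-ins for a real language model, a real encoding model, and real fMRI data.

```python
import math

# Hypothetical toy language model: P(next word | previous word).
LM = {
    "<s>": {"i": 0.6, "she": 0.4},
    "i": {"saw": 0.5, "opened": 0.5},
    "she": {"saw": 0.5, "opened": 0.5},
    "saw": {"nothing": 0.7, "eyes": 0.3},
    "opened": {"nothing": 0.2, "eyes": 0.8},
}

# Hypothetical toy encoding model: the 2-D response predicted for each word.
ENCODE = {
    "i": (1.0, 0.0), "she": (0.0, 1.0),
    "saw": (0.5, 0.5), "opened": (0.9, 0.1),
    "nothing": (0.0, 0.0), "eyes": (1.0, 1.0),
}


def log_likelihood(word, response, sigma=0.5):
    """Gaussian log-likelihood (up to a constant) of a recorded response given a word."""
    squared_error = sum((p - r) ** 2 for p, r in zip(ENCODE[word], response))
    return -squared_error / (2 * sigma ** 2)


def decode(responses, beam_width=2):
    """Keep the beam_width best word sequences after each recorded response."""
    beams = [(0.0, ["<s>"])]
    for response in responses:
        candidates = []
        for score, seq in beams:
            # The language model proposes continuations of the sequence...
            for word, prob in LM.get(seq[-1], {}).items():
                # ...and the encoding model scores each against the recording.
                new_score = score + math.log(prob) + log_likelihood(word, response)
                candidates.append((new_score, seq + [word]))
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = candidates[:beam_width]
    return beams[0][1][1:]  # best sequence without the start token


# Hypothetical recorded responses, one per word position.
RESPONSES = [(0.9, 0.1), (0.6, 0.4), (0.1, 0.0)]
DECODED = decode(RESPONSES)
```

Keeping several candidates alive at each step, rather than only the single best one, lets a sequence that looks slightly worse early on recover once later responses are taken into account.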

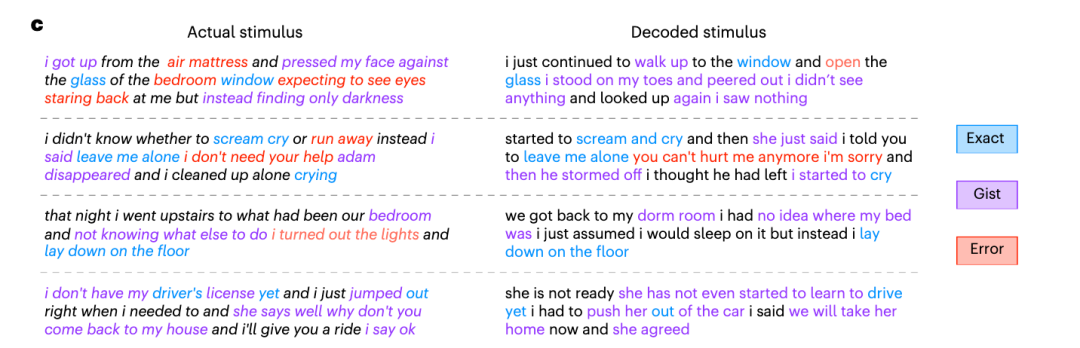

However, the semantic decoder mainly captures the gist of participants' thoughts rather than reproducing them word for word. For example, a participant heard, "I got up off my air mattress and pressed my face against the glass of my bedroom window, expecting to see a pair of eyes staring at me, only to find there was darkness."

But the decoded version was, "I keep going to the window, I open the window, I don't see anything, I look up, I don't see anything."

For another example, if the participant heard, "I don't have a driver's license yet", the version decoded by the semantic decoder might be, "She hasn't learned to drive yet."

Semantic decoder captures participants' thoughts

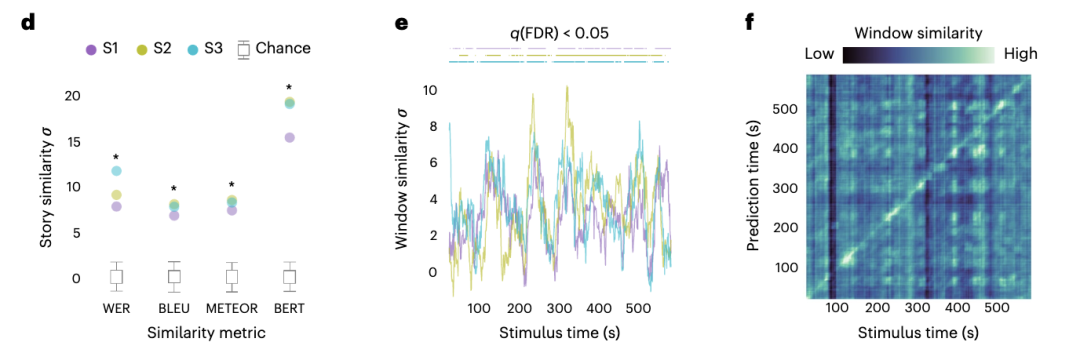

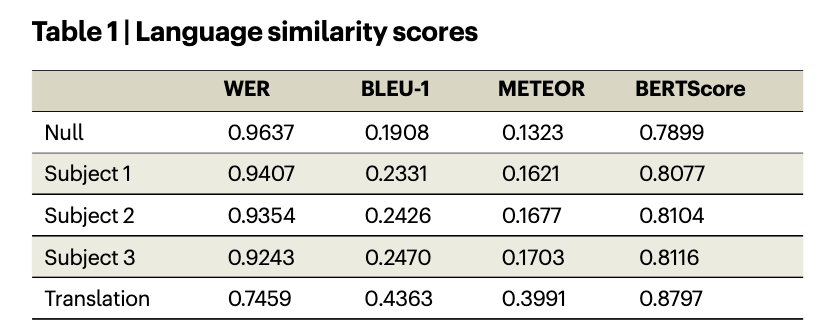

With this approach, the semantic decoder's predictions for the test story were significantly more similar to the actual stimulus words than would be expected by chance, across a range of linguistic similarity metrics. The reported accuracy is as high as 82%.
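One way to read "more similar than expected by chance" is to compare each decoded sentence with the sentence that was actually heard, and then with sentences that were not. The sketch below assumes a simple word-overlap (Jaccard) score as a stand-in, since the article does not list the metrics used; the sentence pairs are the two examples quoted above, and the function names are hypothetical.

```python
def jaccard(a, b):
    """Word-overlap similarity between two sentences (0 = disjoint, 1 = same word set)."""
    set_a, set_b = set(a.split()), set(b.split())
    return len(set_a & set_b) / len(set_a | set_b)


# (actual stimulus, decoded text) pairs, lowercased with punctuation removed.
PAIRS = [
    ("i got up off my air mattress and pressed my face against the glass of my "
     "bedroom window expecting to see a pair of eyes staring at me only to find "
     "there was darkness",
     "i keep going to the window i open the window i don't see anything i look up "
     "i don't see anything"),
    ("i don't have a driver's license yet",
     "she hasn't learned to drive yet"),
]


def mean(values):
    return sum(values) / len(values)


# Matched: each decoded sentence against the stimulus it was decoded from.
MATCHED = mean([jaccard(actual, decoded) for actual, decoded in PAIRS])

# Chance baseline: each decoded sentence against every other stimulus.
MISMATCHED = mean([
    jaccard(PAIRS[i][0], PAIRS[j][1])
    for i in range(len(PAIRS))
    for j in range(len(PAIRS))
    if i != j
])
```

On this toy set the matched score comes out higher than the mismatched baseline. A real evaluation would use many more segments and a proper significance test, and a metric that credits paraphrases, since word overlap undervalues pairs that share meaning but few words.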

Alexander Huth, another author of the paper, said they were surprised by how well the system performed. They found that the decoded word sequences often accurately captured words and phrases. They also found that they could extract continuous language information separately from different regions of the brain.

Additionally, to test whether the decoded text accurately captured the meaning of the story, the researchers conducted a behavioral experiment in which subjects who had only read the decoded words were asked a series of questions. These subjects were able to answer more than half of the questions correctly without being exposed to the original story.

3

The semantic decoder is just getting started, and the road ahead is long and difficult

Currently, however, the semantic decoder cannot be used outside of the lab because it relies on fMRI equipment.

For future work, the researchers hope that rapid advances in natural language neural networks will lead to better accuracy. So far, they've found that larger, modern language models work better, at least for the encoding part. They also want to be able to use larger datasets, say 100 or 200 hours of data per subject.

While this non-invasive approach may be of great benefit to medical research and to patients, allowing them to communicate intelligibly with others, it also raises many problems, such as privacy, ethical scrutiny, inequality and discrimination, abuse, and human rights violations, so applying it in the real world is very difficult.

At the same time, the researchers showed that the semantic decoder works well only on the people it was trained on, and only with their cooperation: a model trained for one person does not work for another, and generalization is not currently possible.

"While the technology is in its infancy, it's important to regulate what it can and cannot do," warns Jerry Tang, lead author of the paper. "If it ends up being used without an individual's permission, there must be a (rigorous) regulatory process because there could be negative consequences if the predictive framework is misused."

The team has made its custom decoding code available on GitHub: github.com/HuthLab/semantic-decoding. The team is also reported to have filed a patent application directly related to this research, with support from the University of Texas System.

More content can be viewed in the complete paper:

https://www.nature.com/articles/s41593-023-01304-9
