Foreword

At first, I planned to understand everything mentioned in the article, just as I did for my articles about ByteByteGo, where I worked through every sentence. But for "Phoenix Architecture" this is too time-consuming and unnecessary. Some things may never be used in practice; the article mentions them only to introduce a topic completely. So I only make extra notes for my own questions or for things I want to know more about.

My initial idea was to summarize the article as a blog post, but in the process I found it hard to condense: every sentence in the book is necessary. The book is dense and packed with substance.

Summary

The original distributed era: Computer performance was seriously insufficient, so people made preliminary explorations of distributed computing.

Monolithic system era: A small monolithic system is easy to develop, test, and deploy. For a large system, the biggest problem is not that it cannot be split or is hard to scale: a monolith still follows a layered architecture as a whole, its code is still divided by module and function for reuse and management, it can be packaged as multiple Jar, War, or other modules, and several copies can be deployed behind a load balancer to scale out. Its real problems are: first, it cannot stop the spread of errors, so a defect in any part of the code may affect the entire program; second, it is inconvenient to update dynamically, since a single module cannot be stopped and updated on its own, which hurts maintainability; third, technical heterogeneity is hard to achieve, because every module has to use the same language or even the same framework. There is a more fundamental reason why microservices are replacing it: a change in thinking, from "pursuing as little error as possible" to accepting that "errors are inevitable". Tolerating errors is the biggest advantage of microservices.

SOA era: Short for service-oriented architecture. Based on remote service invocation, it explored distributed systems comprehensively, put forward very clear guiding principles, successfully solved the main technical problems of a distributed environment, and also proposed a software development methodology. It paid attention not only to technology but also to the research and development process. Its shortcomings were that the specifications were too strict, the complexity too high, and the general applicability too weak, so in the end it failed.

The era of microservices: A single application is built by combining multiple small services. Its characteristics include decentralized governance and heterogeneous technology; remote invocation; product thinking, similar to agile development, where everyone not only develops but also tests, operates, and maintains; decentralized data; smart endpoints and dumb pipes, meaning a simplified communication mechanism in RESTful style; fault tolerance and evolutionary design; and automation. Together these make software development freer. The disadvantage is that without unified specifications and constraints, software architecture design becomes much harder.

Post-microservice era: In the microservice era, distributed problems were solved at the software code level. In the post-microservice era, Kubernetes provides fine-grained distributed management directly at the infrastructure level, so technical problems that have nothing to do with the business are stripped out of the software, and software development can focus only on the business.

Serverless era: The vision of serverless is that developers focus purely on the business, without considering technical components, deployment, computing power, or operation and maintenance, all of which are handled by the cloud provider. However, the technology is not yet mature, and it is not yet suited to applications with complex business logic or a dependence on server-side state.

Main content

Service Architecture Evolution History:

The original distributed era:

In the early days, computer performance was seriously insufficient. To increase performance and computing power, people began to explore distributed computing and ran into questions such as: where the remote service is (service discovery); how many instances there are (load balancing); what to do about network partitions, timeouts, or service errors (circuit breaking, isolation, degradation); how to express method parameters and return values (serialization protocol); how to transmit information (transport protocol); how to manage service permissions (authentication, authorization); how to secure communication (network security layer); and how to make calls to services on different machines return the same result (distributed data consistency). This exploration produced many theories that remained highly influential afterwards.

The era of monolithic systems:

According to Wikipedia's definition, "Monolith means self-contained. A monolithic application describes a single-layer software composed of different components of the same technology platform."

First of all, the benefits of a small monolithic system are obvious: it is easy to develop, test, and deploy. Because every call between functions, modules, and methods is an in-process call, there is no inter-process communication, and no call has to consider network partitions, object copying, or the resulting performance loss. Therefore it runs very efficiently.

As for the shortcomings of a monolithic system, they are only worth discussing once the performance requirements of the software exceed a single machine and the size of the development team significantly exceeds a "two-pizza team" (a measure of team size proposed by the founder of Amazon: the number of people two pizzas can feed, roughly 6 to 12).

For a large monolithic system, people say that it is indivisible and hard to scale, so it cannot support ever-growing software. This idea is actually biased. Viewed vertically, a monolith follows a layered architecture: an external request is passed between layers as different data structures and, after reaching the database at the bottom, the response travels back in reverse order. Viewed horizontally, a monolith also supports splitting the software into modules by technology, function, and responsibility, so that code can be reused and managed. A monolith does not have to be packaged as one single program either; it can consist of multiple Jar, War, or other module formats. With load balancing, several copies of the same monolith can be deployed at the same time to share the traffic, which is a very common way to scale out. Its real problems are the following:

1) It is hard for a monolith to block error propagation. Even after it is split into modules, autonomy and isolation cannot be achieved. A defect in any part of the code, such as a memory leak, thread explosion, blocking, or an infinite loop, has a global impact that is hard to contain and affects the entire program. If the problem involves a higher-level shared resource, such as a port number or a leaked database connection pool, it can even affect the whole machine or the other copies of the monolith in the cluster. (My question: with multiple Wars and Jars scaled out horizontally as mentioned above, if one Jar breaks, do the others break too? That is not what this point is about; it is about a true monolith, where everything runs in one process.)

2) It is inconvenient to update the program dynamically. Because the code cannot be isolated (OSGi can do this, but it is very complicated), a single part cannot be stopped, updated, or upgraded on its own. Maintainability is therefore poor, and grayscale release and A/B testing are hard to support.

3) It makes technical heterogeneity difficult. The lack of isolation also means that the code of every module usually has to be written in the same programming language, or even the same framework (JNI lets Java mix in C or C++, but this is usually a last resort rather than an elegant choice).

The problems listed above are not the root cause of today's trend of replacing monolithic systems with microservices. The author believes the most important reason is the underlying concept of the monolithic style: it hopes that every part of the system, every piece of code, is as reliable as possible, and that a reliable system is built by having no or few defects. However, no amount of tactical excellence can make up for a strategic shortcoming. Relying on high quality to ensure high reliability works well for small software, but the larger the system, the harder it is to deliver a reliable monolith. It is precisely the shift in the concept of building reliable systems, from "pursuing as little error as possible" to facing the fact that "errors are inevitable", that gives the microservice architecture the confidence to challenge and gradually replace the monolithic architecture that has been in use for decades.

The simplicity of running everything in one process is an advantage when the system is small, but when the system is large or the program needs to be modified, the costs of deployment and of migrating to upgraded technology become much higher. Allowing programs to fail, gaining isolation and autonomy, and achieving technical heterogeneity are the reasons programs chose distribution once again, after the earlier reasons of performance and computing power.

SOA Era:

SOA stands for Service-Oriented Architecture. To split a large monolithic system so that each subsystem can be deployed, run, and updated independently, developers tried many architectures before SOA arrived. The following are three representative ones:

1) Chimney architecture (also known as an information silo): a design pattern in which a system does not interoperate or coordinate with other related information systems at all. Taking enterprises and departments as an example, is there really a department that does not interact with any other? A system that is "split independently" and "never interacts with the others" is obviously not what an enterprise hopes to see.

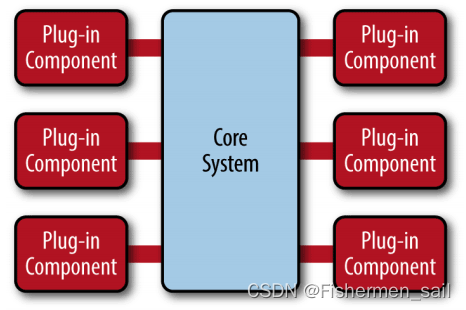

2) Microkernel architecture: Even in the chimney architecture, systems with no business relationship may still need to share public master data such as personnel, organizations, and permissions. So these master data, together with the other public services, data, and resources that subsystems may use, might as well be gathered into a core (kernel) that all business systems depend on. The specific business systems then exist as plug-in modules, which gives the system extensibility, flexibility, and natural isolation. This is the microkernel architecture.

This pattern is well suited to desktop applications and is also often used in web applications. However, the microkernel architecture has its limitations and prerequisites. It assumes that the plug-in modules do not know about each other and that it is unpredictable which modules will be installed. Plug-ins can therefore access public resources in the kernel but do not interact with each other directly. Whether in enterprise information systems or Internet applications, this premise does not hold in many scenarios. We must find a way not only to split out independent systems, but also to let the split subsystems call and communicate with each other smoothly.

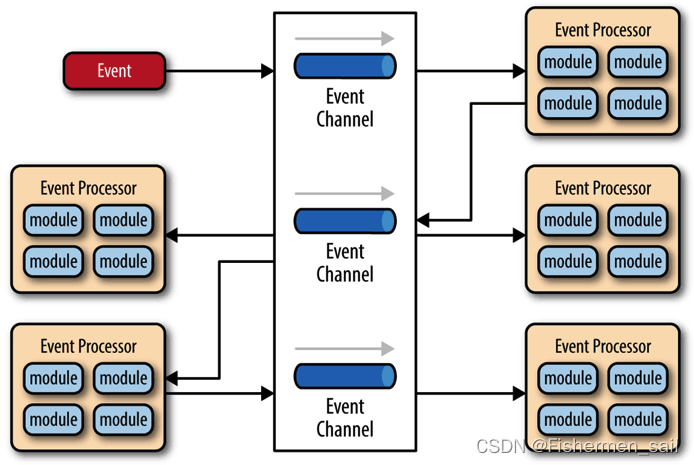

3) Event-driven architecture: In order to allow subsystems to communicate with each other, a feasible solution is to establish a set of event queue pipelines between subsystems. Messages from outside the system will be sent to the pipeline in the form of events, and each subsystem will obtain the event messages it is interested in and needs to process from the pipeline. Based on this, each message handler is independent and highly decoupled, but can interact with other handlers through the event pipeline.
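The event pipeline described above can be sketched in a few lines of Python. This is only an in-memory illustration, not how a real message queue works: the `EventBus` class, the `order_created` event name, and the two subscriber lists are all hypothetical names chosen for the example.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Handler = Callable[[Dict[str, str]], None]


class EventBus:
    """In-memory stand-in for the event queue pipeline between subsystems."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        # A subsystem registers only for the events it is interested in.
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: Dict[str, str]) -> None:
        # The publisher does not know who (if anyone) is listening.
        for handler in list(self._handlers[event_type]):
            handler(payload)


bus = EventBus()
shipped: List[str] = []
invoiced: List[str] = []

# Two hypothetical subsystems that both care about "order_created".
bus.subscribe("order_created", lambda event: shipped.append(event["order_id"]))
bus.subscribe("order_created", lambda event: invoiced.append(event["order_id"]))

bus.publish("order_created", {"order_id": "A-1"})
bus.publish("user_registered", {"user_id": "u-9"})  # no subscriber, nothing happens
```

The shipping and invoicing handlers never reference each other; they are coupled only to the event type, which is the decoupling the text describes.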

Later, remote service invocation saw the birth of the SOAP protocol, and with it software officially entered the SOA era. Most of the problems faced in the later microservice era are difficulties that were already foreseeable when distributed services were first proposed, and SOA explored them more systematically and more specifically.

"More specific" means that SOA is more actionable: it has clear guiding principles for software design, such as service encapsulation, autonomy, loose coupling, reusability, composability, and statelessness; it explicitly adopts SOAP as the remote invocation protocol; it uses a message pipeline called the enterprise service bus (ESB) for communication between subsystems; and so on. With this whole set of closely cooperating technical components, and judged purely on technical feasibility, SOA can be said to have successfully solved the main technical problems of the distributed environment.

"More systematic" refers to SOA's grand ambition. Its ultimate goal was to distill a top-down software development methodology, so that an enterprise could solve the whole software development process in one package just by following SOA. SOA therefore pays attention not only to technology but also to the requirements, management, processes, and organization involved in research and development.

But overly strict specifications bring excessive complexity, and the many superstructures built on SOAP, such as ESB and BPM, aggravate it further. After all, developing information systems is not writing formulaic essays. Overly elaborate processes and theories also need professionals who understand complex concepts to handle them. SOA can achieve complex integration and interaction between multiple heterogeneous large-scale systems, but it is hard to promote as a widely applicable software architecture style, and in the end it did not succeed.

The era of microservices:

Microservices is an architectural style for building a single application through the composition of multiple small services, which are built around business capabilities rather than specific technical standards. Each service can use different programming languages, different data storage technologies, and run in different processes. The service adopts a lightweight communication mechanism and an automated deployment mechanism to achieve communication and operation and maintenance. It has the following characteristics:

1) Built around business capabilities. This emphasizes the importance of Conway's Law: when an organization designs a system, it is constrained by its own communication structure, so the product inevitably mirrors that structure.

2) Decentralized governance. Microservices emphasize that when technical heterogeneity is really needed, a team should have the right to choose "non-unification".

3) Independent, autonomous components through services. The reason for building components as a "Service" rather than a "Library" is that a class library is statically linked into the program at compile time and provides functions through local calls, while a service is an out-of-process component that provides functions through remote calls.

4) Product thinking. Under the monolithic architecture, the scale of the program meant that not everyone could pay attention to the complete product; the organization had a fine division of labor into development, operation and maintenance, support, and so on, and each member focused only on their own piece of work. Under microservices, it is feasible for everyone on the development team to have product thinking and care about all aspects of the product.

5) Decentralized data. Microservices explicitly advocate that data be managed, updated, maintained, and stored in a decentralized manner. In a monolith, the functional modules of a system usually share one database. Centralized storage does make it inherently easier to avoid consistency problems, but the abstract form of the same data entity often differs from the perspective of different services. For example, for the books in a bookstore, the sales domain cares about the price, the warehouse domain about the stock quantity, and the product display domain about the introduction of the book. With centralized storage, all domains must modify and map to the same entity, which makes different services likely to affect each other and lose their independence. Consistency in a distributed environment is quite hard to handle and often cannot be guaranteed with traditional transactions, but choosing the lesser of two evils, some necessary costs are still worth paying.

6) Smart endpoints and dumb pipes. "Dumb pipes" is almost a direct attack on the pile of complex communication mechanisms around SOAP and ESB. Functions built into the communication pipeline may be necessary for some services in some system, but they are an imposed burden for most others. If a service needs such additional communication capabilities, they should be handled at the service's own endpoint rather than bundled into the pipeline. Microservices advocate simple, direct communication similar to classic UNIX filters, so RESTful communication is a more suitable choice.

7) Fault-tolerant design. Microservice design requires an automatic mechanism that can quickly detect failures in the services it depends on, isolate them when errors persist, and reconnect when they recover.

8) Evolutionary design. Fault-tolerant design admits that services will fail; evolutionary design admits that services will be retired. A well-designed service should be able to be scrapped, not expected to live forever. If a system contains services that cannot be changed or replaced, that does not show how excellent or important those services are; it is a sign of fragility in the system design. The independence and autonomy pursued by microservices is a counter to exactly this fragility.

9) Infrastructure automation. The rapid development of infrastructure automation, such as CI/CD, significantly reduces the complexity of building, releasing, and operating software. Because the number of objects to operate under microservices is an order of magnitude larger than under a monolith, teams using microservices depend more on infrastructure automation; it is hard for people to support hundreds, thousands, or even tens of thousands of services by hand.
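The bookstore example under data decentralization can be made concrete with a small sketch. It assumes two hypothetical services, `SalesService` and `WarehouseService`, each owning its own view of a book and sharing nothing but the identifier; the class names, fields, and the `isbn-001` key are illustrative, not taken from the book.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict


@dataclass
class SalesBook:
    """The sales domain only cares about the price."""
    isbn: str
    price: Decimal


@dataclass
class WarehouseBook:
    """The warehouse domain only cares about the stock quantity."""
    isbn: str
    stock: int


class SalesService:
    def __init__(self) -> None:
        self._books: Dict[str, SalesBook] = {}   # private store of this service

    def set_price(self, isbn: str, price: Decimal) -> None:
        self._books[isbn] = SalesBook(isbn, price)

    def price_of(self, isbn: str) -> Decimal:
        return self._books[isbn].price


class WarehouseService:
    def __init__(self) -> None:
        self._books: Dict[str, WarehouseBook] = {}  # a separate private store

    def receive(self, isbn: str, quantity: int) -> None:
        book = self._books.setdefault(isbn, WarehouseBook(isbn, 0))
        book.stock += quantity

    def stock_of(self, isbn: str) -> int:
        return self._books[isbn].stock


sales = SalesService()
warehouse = WarehouseService()
sales.set_price("isbn-001", Decimal("59.00"))
warehouse.receive("isbn-001", 10)
warehouse.receive("isbn-001", 5)
```

Changing the shape of `WarehouseBook` cannot break `SalesService`, which is the independence the text argues for. The price is that keeping the two stores consistent becomes a separate problem the sketch does not address.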

From the definition and characteristics above, it should be obvious that microservices pursue a freer architectural style and abandon almost every constraint and regulation of SOA that can be discarded. But without unified specifications and constraints, won't the distributed-service problems that SOA once solved suddenly reappear? Indeed, microservices no longer offer a unified solution to these problems. Even if we only discuss microservices within the Java world, just the one problem of remote calls between services has a candidate list of solutions that includes RMI (Sun/Oracle), Thrift (Facebook), Dubbo (Alibaba), gRPC (Google), and more.

The freedom brought by microservices is a double-edged sword. On the one hand, it cuts away the complex technical standards set by SOA. A simple service does not necessarily face every distributed problem at once, so there is no need to carry SOA's heavy technical baggage around like a bag of tricks. Whatever problem needs solving, introduce whatever tool solves it. On the other hand, because of this freedom, software architecture design has become much more difficult.

Post-microservice era:

In the microservice era, people chose to solve these distributed problems at the software code level rather than at the hardware infrastructure level, largely because infrastructure made of hardware could not keep up with the flexibility of application services made of software; it was a choice made out of necessity. Software can be split into different services with a few keystrokes, and a service can be scaled out simply by copying and starting it. Could the hardware, that is, the corresponding application servers, load balancers, DNS servers, and network links, also be changed with a few keystrokes? (That is, let the software consider only the business.)

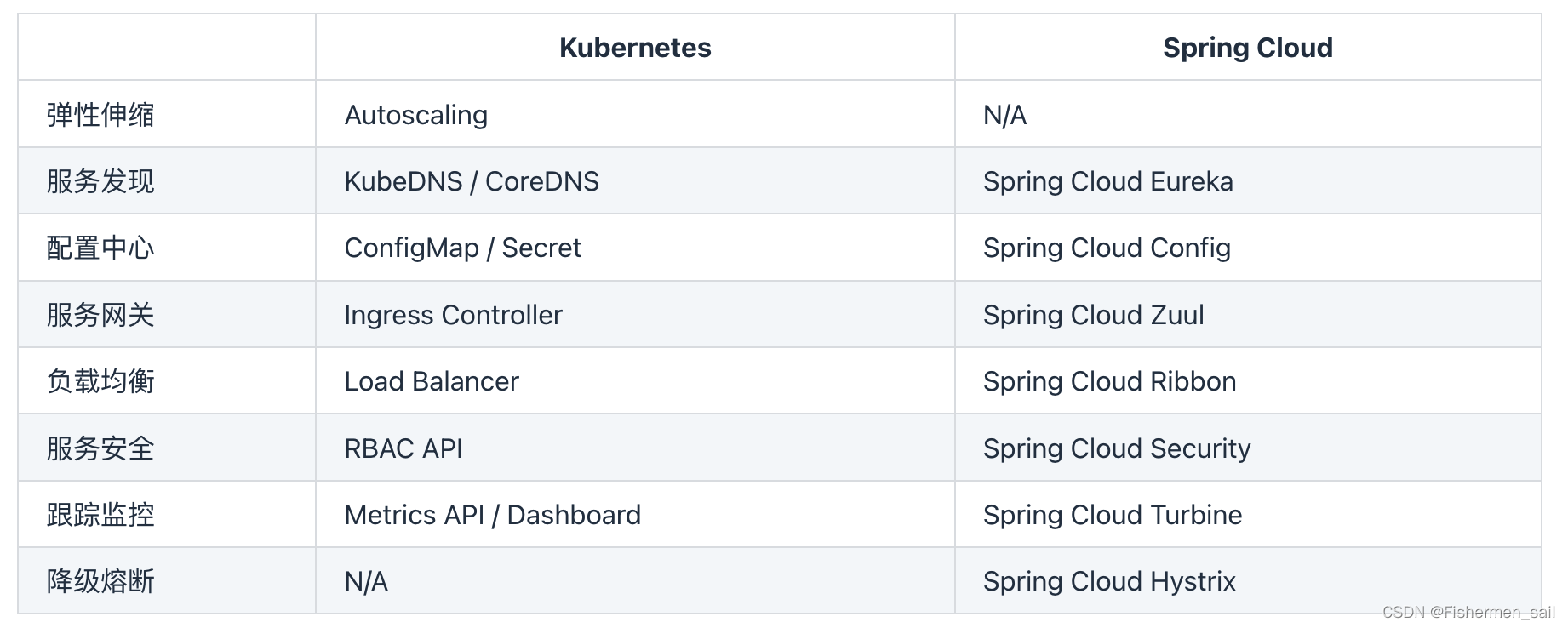

The answer to this question is the container. Early containers, however, were only used as a quick-start runtime environment for services, to make programs easier to distribute and deploy. At that stage a container packaged a single application and did not really take part in solving distributed problems. The real change came with the success of Kubernetes. The following figure compares how Kubernetes and the traditional microservice solutions address distributed problems. Because their starting points differ, the methods and effects differ too, but Kubernetes undoubtedly offers a new and more promising way of solving these problems.

When virtualized hardware can keep up with the flexibility of software, technical issues that have nothing to do with the business can be stripped from the software level and solved quietly inside the infrastructure, letting the software focus only on the business. Moving from software dealing alone with the problems of a distributed architecture to an era in which application code and infrastructure, software and hardware, work together on architectural problems is what the media now often call "cloud native", a rather abstract name.

However, Kubernetes still does not solve every distributed problem perfectly. "Imperfect" here means that, in functional terms alone, plain Kubernetes is not as good as the earlier Spring Cloud solution. Some problems sit on the boundary between the application and the infrastructure, which makes them hard to handle precisely at the infrastructure level. For example, microservice A calls two services published by microservice B, called B1 and B2. Suppose B1 behaves normally but B2 keeps returning 500 errors. After a certain threshold is reached, B2 should be circuit-broken to avoid an avalanche effect. Handled only at the infrastructure level, this is a dilemma: cutting the network path from A to B also blocks the normal calls to B1, while leaving it open means A keeps being affected by B2's errors.

Such problems are not hard to handle in microservices implemented in application code, as with Spring Cloud. Since program code solves the problem, anything logically possible can be implemented, limited only by the developer's imagination and technical ability. Infrastructure, in contrast, manages whole containers; its granularity is relatively coarse, reaching only the container level, which makes it hard to manage and control an individual remote service effectively.
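To illustrate why the B1/B2 case is easy in application code, here is a minimal circuit breaker sketch that keeps one breaker per remote endpoint. It is a simplification written for this note, not the Spring Cloud implementation: it only counts consecutive failures and omits the half-open recovery state. `call_b1`, `call_b2`, and `invoke` are hypothetical stand-ins for real remote calls.

```python
from typing import Callable, Dict


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the breaker is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3) -> None:
        self.failure_threshold = failure_threshold
        self.consecutive_failures = 0

    @property
    def is_open(self) -> bool:
        return self.consecutive_failures >= self.failure_threshold

    def call(self, func: Callable[[], str]) -> str:
        if self.is_open:
            # Fail fast instead of hitting the broken endpoint again.
            raise CircuitOpenError("circuit open, call rejected")
        try:
            result = func()
        except Exception:
            self.consecutive_failures += 1
            raise
        self.consecutive_failures = 0  # any success resets the counter
        return result


def call_b1() -> str:
    return "B1 ok"


def call_b2() -> str:
    raise RuntimeError("HTTP 500 from B2")


# One breaker per endpoint, not one per service or per container.
breakers: Dict[str, CircuitBreaker] = {"B1": CircuitBreaker(), "B2": CircuitBreaker()}


def invoke(endpoint: str, func: Callable[[], str]) -> str:
    try:
        return breakers[endpoint].call(func)
    except CircuitOpenError:
        return f"{endpoint} fallback"
    except RuntimeError:
        return f"{endpoint} failed"


b2_results = [invoke("B2", call_b2) for _ in range(5)]
b1_result = invoke("B1", call_b1)
```

After three failures the B2 breaker opens and later calls get the fallback immediately, while B1 keeps working because its breaker has its own state. That per-endpoint granularity is exactly what a container-level network cut cannot express.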

To solve this kind of problem, the "Sidecar Proxy" pattern, known today as the "Service Mesh", was introduced. A communication proxy is injected into the Kubernetes Pod and quietly takes over all external communication of the application without the application being aware of it. Besides carrying normal inter-service communication (data plane communication), the proxy also receives instructions from a controller (control plane communication) and analyzes and processes data plane traffic according to the control plane's configuration, to provide additional functions such as circuit breaking, authentication, metrics, monitoring, and load balancing. No extra processing code is needed at the application level, yet the management capability is almost as fine-grained as that of program code.

Conceptually it is hard to say whether a proxy running in the same resource container as the application is software or infrastructure, but it is transparent to the application, and service governance can be achieved without changing any application code. That is enough. Technical questions such as "which communication protocol to choose", "how to schedule traffic", and "how to authenticate and authorize" are separated from the program code, replacing most of the components in today's Spring Cloud suite. Microservices then only need to consider their own business logic, which is the ideal smart endpoint solution.
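The split between data plane and control plane can be sketched as a toy, in-process model. Real sidecars are separate processes that intercept network traffic; here `SidecarProxy`, `apply_config`, `fake_network`, and `list_books` are hypothetical names, and the only point is that the business function never sees authentication, load balancing, or metrics.

```python
from typing import Callable, Dict, List

Request = Dict[str, str]
Response = Dict[str, str]


class SidecarProxy:
    """Hypothetical proxy that sits between one application and the network."""

    def __init__(self, transport: Callable[[str, Request], Response]) -> None:
        self._transport = transport      # data plane: actually delivers the request
        self._targets: List[str] = []
        self._token = ""
        self._next = 0
        self.request_count = 0           # a simple metric collected by the proxy

    def apply_config(self, targets: List[str], token: str) -> None:
        """Control plane: a controller pushes routing and auth configuration."""
        self._targets = list(targets)
        self._token = token

    def send(self, request: Request) -> Response:
        if not self._targets:
            raise RuntimeError("no targets configured")
        target = self._targets[self._next % len(self._targets)]  # round-robin
        self._next += 1
        self.request_count += 1
        enriched = {**request, "auth": self._token}  # credentials added outside the app
        return self._transport(target, enriched)


def fake_network(target: str, request: Request) -> Response:
    # Stand-in for a real network hop to an instance of service B.
    return {"served_by": target, "auth_seen": request["auth"]}


def list_books(send: Callable[[Request], Response]) -> Response:
    # Business code: no knowledge of tokens, instances, or counters.
    return send({"path": "/books"})


proxy = SidecarProxy(fake_network)
proxy.apply_config(targets=["b-1", "b-2"], token="demo-token")
first = list_books(proxy.send)
second = list_books(proxy.send)
```

Changing the targets or the token only requires another `apply_config` call from the controller side; `list_books` stays untouched.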

The serverless era:

There is no particularly authoritative "official" definition of serverless yet, but the concept is not as complicated as the previous architectures. Simplicity has been its main selling point from the start, and it involves only two things: backend facilities and functions.

Backend facilities are databases, message queues, logs, storage, and so on: components that support the running of business logic but carry no business meaning themselves. These backend facilities all run in the cloud and are called "Backend as a Service" (BaaS).

Functions are the business logic code. The concept and granularity of a function here are very close to those of a function in programming. The difference is that a serverless function runs in the cloud, with no need to consider computing power or capacity planning. This is called "Function as a Service" (FaaS).

The vision of serverless is that developers only need to focus on the business and do not need to consider technical matters. The backend components are ready-made and can be used directly, without the trouble of procurement, licensing, and technology selection. Deployment needs no thought, because it is fully hosted and completed automatically by the cloud. Computing power needs no thought, because it is backed by an entire data center and can be treated as unlimited. Operation and maintenance need no thought either: keeping the system running continuously and stably is the responsibility of the cloud provider, no longer of the developer.

The long-term prospect of serverless architecture looks good, but the author is not so optimistic about its short-term development. It is not suitable for applications that have complex business logic, depend on server-side state, are sensitive to response time, or need long-lived connections. This is because the assumption of "unlimited computing power" means serverless must be billed by usage to control how much computing power is consumed. A function therefore does not stay active on the server all the time; it only starts running when a request arrives. This makes it inconvenient for a function to depend on server-side state, and it also gives the function a cold start time, so response performance cannot be very good (currently a serverless cold start takes roughly tens to hundreds of milliseconds; for applications with poor start-up performance, such as Java ones, it can approach a second).
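The effect of "the function does not stay on the server" can be shown with a toy scheduler. This is a simulation under stated assumptions, not any cloud provider's API: `Platform`, `FunctionInstance`, and the 60-second `idle_timeout` are made up, and time is passed in explicitly so the behavior is deterministic.

```python
from typing import Dict, Optional


class FunctionInstance:
    """One running copy of a function; its memory vanishes when it is evicted."""

    def __init__(self) -> None:
        self.local_counter = 0  # in-memory state, not durable

    def handle(self, event: Dict[str, str]) -> int:
        self.local_counter += 1
        return self.local_counter


class Platform:
    """Hypothetical scheduler that keeps an instance only while it is being used."""

    def __init__(self, idle_timeout: float) -> None:
        self.idle_timeout = idle_timeout
        self._instance: Optional[FunctionInstance] = None
        self._last_used = 0.0
        self.cold_starts = 0

    def invoke(self, event: Dict[str, str], now: float) -> int:
        expired = now - self._last_used > self.idle_timeout
        if self._instance is None or expired:
            # Cold start: a fresh instance is created and start-up cost is paid here.
            self._instance = FunctionInstance()
            self.cold_starts += 1
        self._last_used = now
        return self._instance.handle(event)


platform = Platform(idle_timeout=60.0)
first = platform.invoke({}, now=0.0)     # cold start
second = platform.invoke({}, now=10.0)   # warm: same instance, counter continues
third = platform.invoke({}, now=500.0)   # idle too long: new instance, counter reset
```

The third call sees the counter start over, which is why a function cannot rely on in-memory state between requests and why anything that must survive has to live in a backend facility.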

Key sentences

- The most important driving force of architecture evolution, or the most fundamental driving force of this "from big to small" change trend, is always to facilitate the smooth "death" and "rebirth" of a certain service. The change of life and death of individual services is a key factor related to whether the entire system can survive reliably.

- Architecture is not invented, but the result of continuous evolution.

- It is precisely the change in the concept of building reliable systems that came with the evolution of software architecture, from "pursuing as little error as possible" to facing the fact that "errors are inevitable", that gives the microservice architecture its foundation for challenging and gradually replacing the monolithic architecture.

- The design purpose of the distributed service proposed by Unix DCE: "Let developers not care whether the service is remote or local, and can transparently call the service or access the resource".

- Business and technology are completely separated, and remote and local are completely transparent. Perhaps this is the best era.

Other

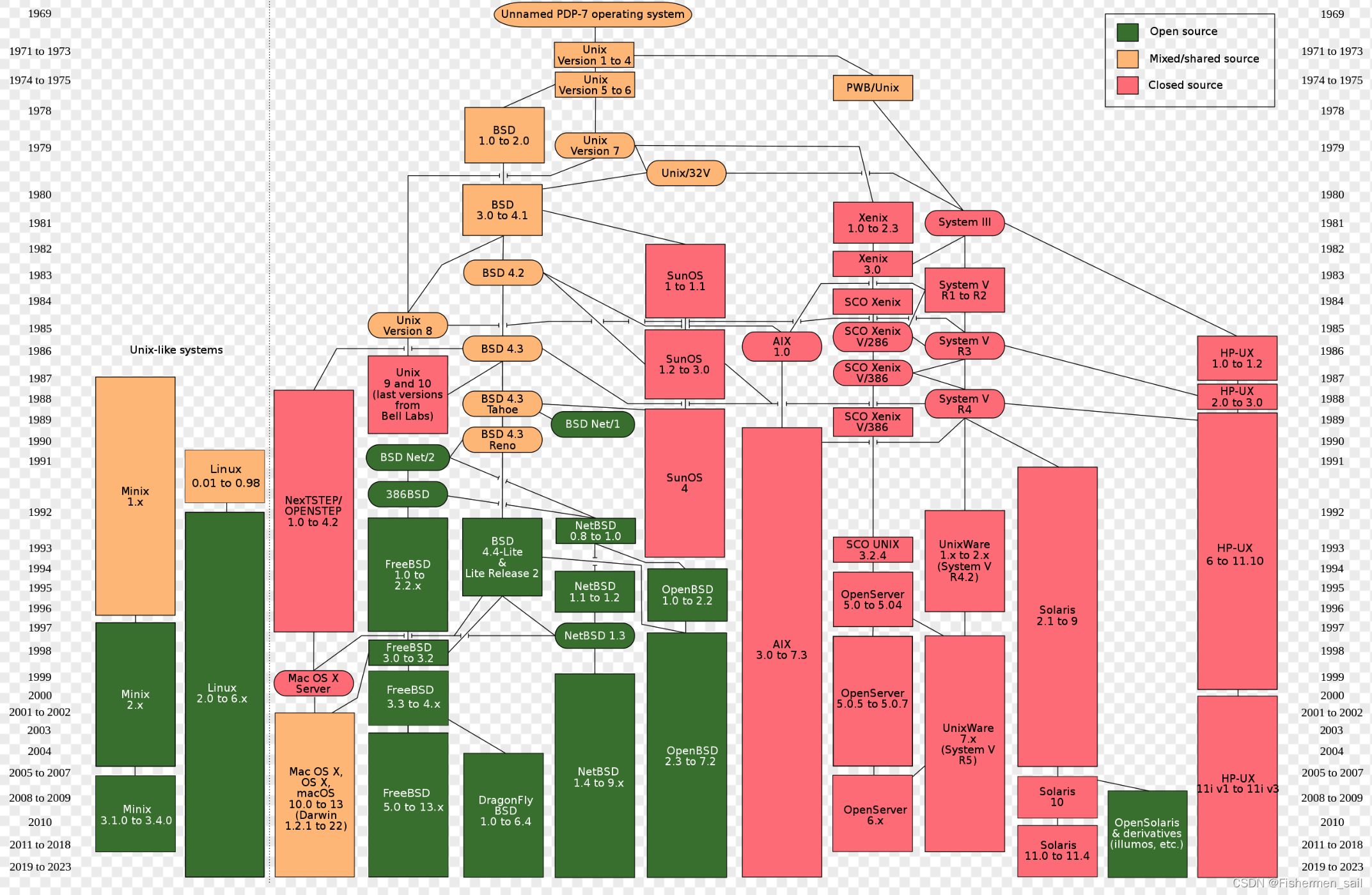

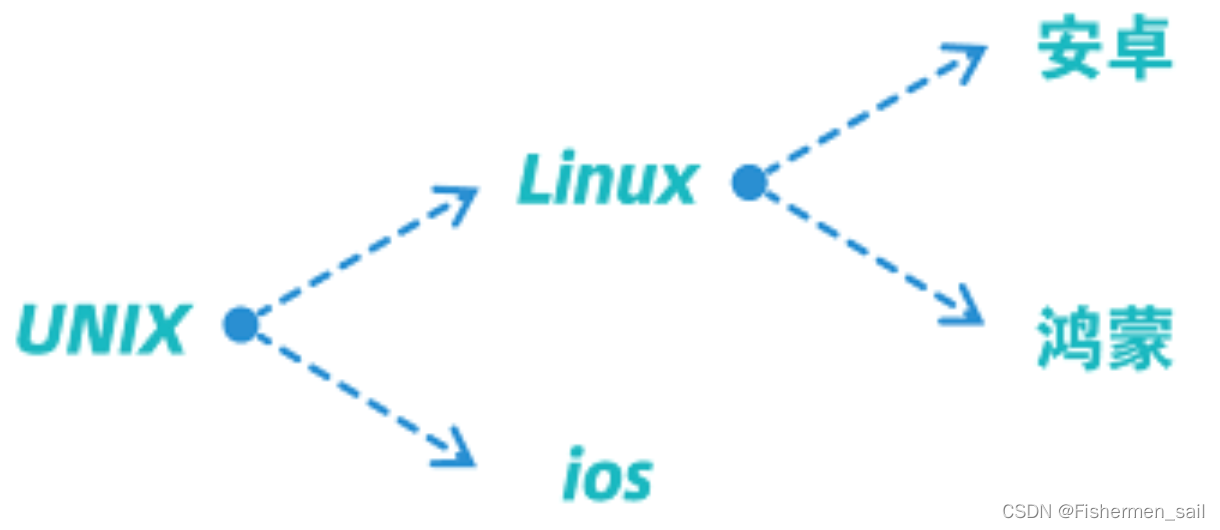

What is Unix?

Simply put, Unix is the ancestor of most of today's operating systems (Windows is an exception). The following is based on an answer from Zhihu. Among current systems such as Windows, Mac OS, and Linux with its many distributions, Windows is the one whose kernel does not come from Unix: its early versions ran on top of MS-DOS, and modern versions use the NT kernel. Mac OS uses a hybrid kernel that combines BSD Unix code with the Mach kernel developed at Carnegie Mellon University. Linux is a Unix-like system written from scratch rather than derived from Unix source code.

When it comes to Unix, there are many associated words, such as Mac OS, Linux, AIX, HP-UX, and Solaris, all of which are Unix-like systems.

Unix-like systems (English: Unix-like; often referred to as UN X or nix) refer to various Unix-derived systems, such as FreeBSD, OpenBSD, SUN's Solaris, and various traditional Unix-like systems, such as Minix, Linux , QNX, etc. Although some of them are free software and some are proprietary software, they all inherit the characteristics of the original UNIX to a considerable extent, have many similarities, and all comply with the POSIX specification to a certain extent.

The UNIX trademark is owned by The Open Group. Only systems that conform to the Single UNIX Specification may use the name UNIX; all others can only be called Unix-like.

Simpler graph:


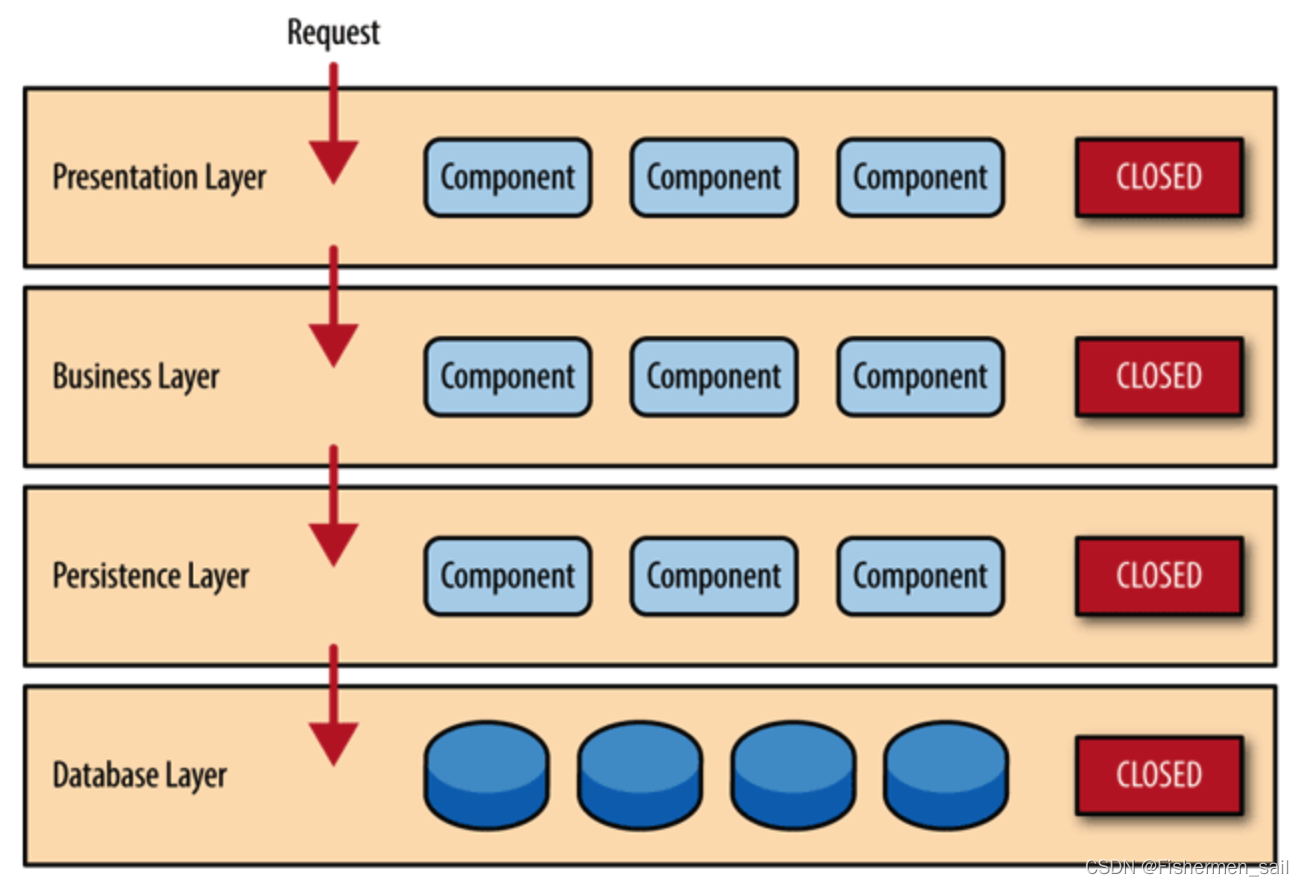

The meaning of layering

This includes the layered architectures we are most familiar with, such as MVC: Model, View, Controller. What is the point of these layers? The essence of layered design is to simplify complex problems. The following is from an answer on Zhihu:

- High cohesion: layered design simplifies system design by letting each layer focus on one kind of work.
- Low coupling: layers interact through interfaces or APIs, so the calling layer does not need to know the details of the layer it depends on.
- Reuse: code or functionality can be reused once it is separated into layers.
- Scalability: a layered architecture makes it easier to scale the system out.
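A small sketch helped me see the "low coupling" point. All names here (`UserRepository`, `InMemoryUserRepository`, `GreetingService`, `handle_request`) are hypothetical, and the three layers are deliberately tiny. Each layer talks only to the interface of the layer below it.

```python
from typing import Dict, Optional, Protocol


class UserRepository(Protocol):
    """Interface the business layer depends on; it hides storage details."""

    def find_name(self, user_id: int) -> Optional[str]: ...


class InMemoryUserRepository:
    """Data layer: one possible implementation of the interface."""

    def __init__(self, rows: Dict[int, str]):
        self._rows = rows

    def find_name(self, user_id: int) -> Optional[str]:
        return self._rows.get(user_id)


class GreetingService:
    """Business layer: knows the rules, not where the data lives."""

    def __init__(self, repo: UserRepository):
        self._repo = repo

    def greet(self, user_id: int) -> str:
        name = self._repo.find_name(user_id)
        return f"Hello, {name}!" if name else "Hello, guest!"


def handle_request(service: GreetingService, user_id: int) -> str:
    """Presentation layer (the controller role in MVC): formats the response."""
    return f"<p>{service.greet(user_id)}</p>"


service = GreetingService(InMemoryUserRepository({1: "Ada"}))
```

Swapping `InMemoryUserRepository` for a database-backed class would not require touching `GreetingService` or `handle_request`, as long as the new class keeps the `find_name` method.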

JAR, WAR

JAR and WAR files are both archives in the ZIP format. Packaging means bundling the compiled code and the things it depends on into a single file.

A JAR (Java Archive) is the general Java package, and a WAR (Web Application Archive) can be understood as a Java web package. A JAR typically contains only compiled classes plus some metadata and resource files. A WAR contains everything a web project needs: the class files compiled from the project's code, dependent libraries, configuration files, and all web pages, including HTML, JSP, and so on. A WAR can be understood as a complete web project in a single file.
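Since both formats are ZIP archives, their layout can be imitated with any ZIP library. The sketch below uses Python's `zipfile` to build two in-memory archives that follow the conventional JAR and WAR layouts. The file names under `com/example/` and the placeholder contents are my own assumptions, and the `.class` entries are not real bytecode.

```python
import io
import zipfile


def build_archive(entries: dict) -> bytes:
    """Write the given {path: content} entries into an in-memory ZIP archive."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for path, content in entries.items():
            archive.writestr(path, content)
    return buffer.getvalue()


def list_entries(data: bytes) -> list:
    """Return the entry names stored in a ZIP-format archive."""
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        return archive.namelist()


# Hypothetical contents; the class files are placeholders, not real bytecode.
jar_bytes = build_archive({
    "META-INF/MANIFEST.MF": "Manifest-Version: 1.0\n",
    "com/example/App.class": b"placeholder",
})

# A WAR adds web pages, a deployment descriptor, and bundled dependency JARs.
war_bytes = build_archive({
    "META-INF/MANIFEST.MF": "Manifest-Version: 1.0\n",
    "index.jsp": "<html></html>",
    "WEB-INF/web.xml": "<web-app/>",
    "WEB-INF/classes/com/example/AppServlet.class": b"placeholder",
    "WEB-INF/lib/dependency.jar": jar_bytes,
})
```

Calling `list_entries(war_bytes)` shows the difference described above: the WAR carries pages, configuration under `WEB-INF/`, and its dependencies as nested JARs under `WEB-INF/lib/`.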

Grayscale release

Grayscale release is a release method in which some users keep using product feature A while others start using product feature B. If users have no objections to B, the scope is gradually expanded until all users are migrated to B. This approach allows new features or changes to be tested and validated without affecting the entire system.
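One common way to decide which users see B is to hash the user ID into a fixed number of buckets and compare the bucket with a rollout percentage. This is only a sketch under that assumption. `bucket` and `choose_feature` are hypothetical helpers, and real systems often also route by region, account type, or whitelist.

```python
import hashlib


def bucket(user_id: str, buckets: int = 100) -> int:
    """Map a user to a stable bucket in [0, buckets) using a hash of the ID."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def choose_feature(user_id: str, rollout_percent: int) -> str:
    """Return "B" for users inside the rollout percentage, otherwise "A"."""
    return "B" if bucket(user_id) < rollout_percent else "A"


users = [f"user-{i}" for i in range(1000)]
on_b_at_10 = {u for u in users if choose_feature(u, 10) == "B"}
on_b_at_50 = {u for u in users if choose_feature(u, 50) == "B"}
```

Because the bucket depends only on the user ID, a user who already sees B at 10% still sees B at 50%. Raising the percentage only adds users, which matches the "gradually expand the scope" step.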

The difference between multithreading and concurrency

First of all, a thread is a unit of execution within a process and the entity that gets scheduled inside it. A process is more like an isolated environment provided to each application.

As for concurrency and parallelism: concurrency means that multiple tasks are executed alternately within the same period of time while sharing computing resources, so the focus is on task scheduling and resource management. Parallelism means that multiple tasks are executed at the same instant and independently of each other, using multiple processing units or computing resources, so the focus is on how tasks are divided and executed.

Multithreading is generally used to achieve parallel computing: the operating system can assign the threads to multiple CPU cores so that they run simultaneously. On a single-core CPU, threads can only take turns, so they run concurrently but not in parallel.
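The "executed alternately" part of concurrency can be shown without any threads at all. In this sketch, which is my own toy example with the hypothetical names `task` and `run_round_robin`, two generator-based tasks take turns on a single thread. It is concurrency with no parallelism.

```python
from collections import deque


def task(name, steps, log):
    """A task that records each step, then yields control back to the scheduler."""
    for step in range(1, steps + 1):
        log.append(f"{name}{step}")
        yield


def run_round_robin(tasks):
    """Run generator-based tasks alternately on one thread until all finish."""
    queue = deque(tasks)
    while queue:
        current = queue.popleft()
        try:
            next(current)          # let the task run one step
            queue.append(current)  # then send it to the back of the line
        except StopIteration:
            pass                   # task finished; drop it


log = []
run_round_robin([task("A", 3, log), task("B", 2, log)])
# log is now ["A1", "B1", "A2", "B2", "A3"]
```

Both tasks make progress over the same period, but at any instant only one of them is running. Parallelism would require a second processing unit actually executing the other task at the same moment.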

I suddenly have a question, why do we often hear about high concurrency but not high parallelism?

High concurrency means that the system can handle a large number of requests within the same period of time, while high parallelism refers to how many tasks the system can actually run at the same instant. A request may require multiple tasks to complete, or multiple requests may share the same task. So high parallelism is just one means of achieving high concurrency.

High concurrency is a concept relative to users or clients, while high parallelism is a concept relative to servers or processors. What users or clients care about is whether the system can respond to their requests quickly, and they don't care how the system internally allocates and executes tasks. What the server or processor cares about is how to use multiple cores or multiple machines to improve the execution speed of tasks. Therefore, high concurrency can better reflect the external service quality of the system, while high parallelism can better reflect the internal working mode of the system.
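To see the two numbers side by side, here is a rough sketch in which 100 requests arrive at once but only 4 workers handle them. The numbers and the names `InFlightCounter` and `handle` are my own assumptions. Also note that in standard CPython the global interpreter lock keeps threads from running Python bytecode truly in parallel, so the worker count here only stands in for the server's degree of parallelism.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

WORKERS = 4     # assumed degree of parallelism on the server side
REQUESTS = 100  # assumed number of requests arriving at once


class InFlightCounter:
    """Track how many requests are being handled at once, and the peak."""

    def __init__(self):
        self._lock = threading.Lock()
        self.current = 0
        self.peak = 0

    def __enter__(self):
        with self._lock:
            self.current += 1
            self.peak = max(self.peak, self.current)
        return self

    def __exit__(self, *exc):
        with self._lock:
            self.current -= 1
        return False


counter = InFlightCounter()


def handle(request_id: int) -> int:
    """Simulate handling one request."""
    with counter:
        time.sleep(0.001)  # stand-in for real work
    return request_id


with ThreadPoolExecutor(max_workers=WORKERS) as pool:
    results = list(pool.map(handle, range(REQUESTS)))
```

From the client's side, all 100 requests were accepted and answered, which is the concurrency view. Inside the server, `counter.peak` never exceeds `WORKERS`, which is the parallelism view.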