Table of contents

1. Install Kafka on the server with Docker

2. Integrate Kafka with Spring Boot to implement producers and consumers

1. Install Kafka on the server with Docker

①. Install Docker (Docker works somewhat like a software store for Linux: many applications can be pulled from it as ready-to-run images)

a. Automatic installation

curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun

b. Start docker

sudo systemctl start docker

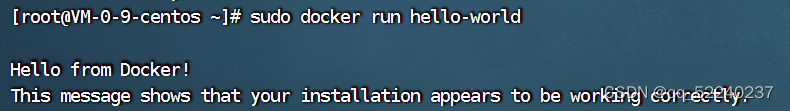

c. Verify that Docker Engine-Community is installed correctly by running the hello-world image.

# Pull the image

sudo docker pull hello-world

# Run the image

sudo docker run hello-world

d. If the command prints a "Hello from Docker!" message, the installation succeeded.

②. Pull the ZooKeeper image

a. docker search zookeeper

b. docker pull zookeeper

③. Pull the Kafka image

a. docker search kafka

b. docker pull wurstmeister/kafka

④. Run ZooKeeper

a. docker run -d --restart=always --log-driver json-file --log-opt max-size=100m --log-opt max-file=2 --name zookeeper -p 2181:2181 -v /etc/localtime:/etc/localtime zookeeper

⑤. Run Kafka

a. docker run -d --restart=always --log-driver json-file --log-opt max-size=100m --log-opt max-file=2 --name kafka -p 9092:9092 -e KAFKA_BROKER_ID=0 -e KAFKA_ZOOKEEPER_CONNECT=42.194.238.131:2181/kafka -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://42.194.238.131:9092 -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 -v /etc/localtime:/etc/localtime wurstmeister/kafka

b. Parameter description


-e KAFKA_BROKER_ID=0 In a Kafka cluster, each broker has a unique BROKER_ID that identifies it.

-e KAFKA_ZOOKEEPER_CONNECT=42.194.238.131:2181/kafka The ZooKeeper address, followed by the path (/kafka) under which Kafka keeps its data in ZooKeeper. Replace the IP with your own server's.

-e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://42.194.238.131:9092 The address and port that Kafka registers in ZooKeeper and hands out to clients. For remote access, for example from a Java program on another machine, this must be the server's external IP; otherwise the client cannot connect.

-e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 The address and port Kafka listens on; 0.0.0.0 means all network interfaces.

-v /etc/localtime:/etc/localtime Keeps the container's local time in sync with the host's.
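Since clients connect to the address given in KAFKA_ADVERTISED_LISTENERS, a quick TCP check from the client machine can show whether that host and port are reachable at all before looking at the Spring Boot side. The following is only a minimal sketch using the JDK; the class name BrokerReachability and the 2-second timeout are illustrative assumptions, and a successful connect only proves the port is open, not that the broker is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper (not part of Kafka): checks that a TCP port accepts connections.
final class BrokerReachability {

    // Returns true if a TCP connection to host:port succeeds within timeoutMillis.
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host could not be resolved
            return false;
        }
    }

    public static void main(String[] args) {
        // Pass the host and port from KAFKA_ADVERTISED_LISTENERS as arguments.
        String host = args.length > 0 ? args[0] : "localhost";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 9092;
        System.out.println(host + ":" + port + " reachable: " + isReachable(host, port, 2000));
    }
}
```

If this prints false from the machine running the Java program, the usual suspects are the advertised IP, the -p 9092:9092 port mapping, or a firewall rule.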

⑥. Check whether kafka can be used

docker exec -it kafka bash

cd /opt/kafka_2.13-2.8.1/

cd bin

a. Run the kafka producer and send messages

./kafka-console-producer.sh --broker-list localhost:9092 --topic test

b. Open another terminal, enter the container the same way, and run the Kafka consumer to receive messages

./kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic test --from-beginning

⑦. Messages typed into the producer terminal appear in the consumer terminal.

⑧. Each message belongs to a topic, and a consumer specifies which topic it listens to. A message is consumed only if it arrives on a topic the consumer subscribed to (here, the --topic option sets the topic for both the producer and the consumer).

⑨. If the consumer goes down, the producer can keep sending and the messages are not lost: Kafka stores them, and after the consumer restarts it receives everything that was sent while it was down.
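This behavior can be pictured with a toy in-memory model: the topic is an append-only log, and each consumer group only remembers the offset of the next message it will read. This is a simplified sketch for illustration, not Kafka's API; TopicLog, append, and poll are hypothetical names, and real Kafka adds partitions, retention limits, and explicit offset commits.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model of a single topic; hypothetical, for illustration only.
final class TopicLog {
    private final List<String> messages = new ArrayList<>();
    // Next offset to read, tracked separately for each consumer group.
    private final Map<String, Integer> nextOffset = new HashMap<>();

    // Producer side: appending never depends on a consumer being online.
    void append(String message) {
        messages.add(message);
    }

    // Consumer side: returns everything the group has not read yet
    // and advances the group's offset to the end of the log.
    List<String> poll(String groupId) {
        int from = nextOffset.getOrDefault(groupId, 0);
        List<String> batch = new ArrayList<>(messages.subList(from, messages.size()));
        nextOffset.put(groupId, messages.size());
        return batch;
    }

    public static void main(String[] args) {
        TopicLog topic = new TopicLog();
        topic.append("m1");
        System.out.println(topic.poll("my-group")); // prints [m1]
        topic.append("m2"); // the consumer is down, the producer keeps sending
        topic.append("m3");
        System.out.println(topic.poll("my-group")); // prints [m2, m3]
    }
}
```

In this model a group that has never polled starts from offset 0, so a second group would see every message again from the start.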

2. Integrate Kafka with Spring Boot to implement producers and consumers

①. Add the dependency to pom.xml

<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
    <version>2.7.8</version>
</dependency>

②. Configure Kafka

a. Add the following configuration to the application.yml file. (Note: duplicate keys at the same level of a YAML file cause an error, for example two spring: entries, so merge this into your existing spring: block if you have one.)

spring:
  kafka:
    # The IP address and port of your own Kafka broker
    bootstrap-servers: localhost:9092
    consumer:
      # A group-id identifies a consumer group; the same message can be consumed by several consumer groups
      group-id: my-group
      auto-offset-reset: earliest
    producer:
      # Serializers for message keys and values
      value-serializer: org.apache.kafka.common.serialization.StringSerializer
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
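The auto-offset-reset setting only comes into play when the group has no committed offset for a partition, for example the first time my-group reads the topic. A rough sketch of that decision follows; OffsetResetSketch and startingOffset are hypothetical names and not part of the Kafka client, and the sketch leaves out the case where a committed offset has become invalid.

```java
import java.util.OptionalLong;

// Hypothetical illustration of how a consumer's starting position is chosen.
final class OffsetResetSketch {

    // committed: the group's committed offset for the partition, if any
    // logStart:  first offset still available in the partition
    // logEnd:    offset that the next produced message will receive
    static long startingOffset(OptionalLong committed, String autoOffsetReset,
                               long logStart, long logEnd) {
        if (committed.isPresent()) {
            return committed.getAsLong(); // a committed offset always wins
        }
        switch (autoOffsetReset) {
            case "earliest":
                return logStart; // read everything that is still retained
            case "latest":
                return logEnd;   // read only messages produced from now on
            default:
                // Kafka's third option, "none", makes the consumer fail instead of guessing.
                throw new IllegalStateException(
                        "no committed offset and reset policy is " + autoOffsetReset);
        }
    }

    public static void main(String[] args) {
        System.out.println(startingOffset(OptionalLong.empty(), "earliest", 0, 42)); // prints 0
        System.out.println(startingOffset(OptionalLong.empty(), "latest", 0, 42));   // prints 42
        System.out.println(startingOffset(OptionalLong.of(17), "latest", 0, 42));    // prints 17
    }
}
```

With earliest, as configured above, a brand-new group also receives messages that were produced before it first connected.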

b. Create a producer

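import java.util.HashMap;
import java.util.Map;

import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.common.serialization.StringSerializer;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.core.DefaultKafkaProducerFactory;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.core.ProducerFactory;

@Configuration
public class KafkaProducerConfig {

    // Broker address, read from application.yml
    @Value("${spring.kafka.bootstrap-servers}")
    private String bootstrapServers;

    // Producer settings: broker address plus String serializers for keys and values
    @Bean
    public Map<String, Object> producerConfigs() {
        Map<String, Object> props = new HashMap<>();
        props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);
        props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
        props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
        return props;
    }

    @Bean
    public ProducerFactory<String, String> producerFactory() {
        return new DefaultKafkaProducerFactory<>(producerConfigs());
    }

    // KafkaTemplate is the object the application uses to send messages
    @Bean
    public KafkaTemplate<String, String> kafkaTemplate() {
        return new KafkaTemplate<>(producerFactory());
    }
}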

The sendMessage method is used to send messages to Kafka.

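import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class KafkaController {

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    // POST /send publishes the request body to the topic my-topic
    @PostMapping("/send")
    public void sendMessage(@RequestBody String message) {
        kafkaTemplate.send("my-topic", message);
    }
}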
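To try the endpoint, any HTTP client will do. Below is a minimal sketch with the JDK's java.net.http.HttpClient (Java 11 or later). It assumes the application is running at http://localhost:8080, Spring Boot's default port, which this article does not state; SendClient and buildSendRequest are hypothetical names.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical test client for the /send endpoint.
final class SendClient {

    // Builds a POST to <baseUrl>/send with the message as a plain-text body.
    static HttpRequest buildSendRequest(String baseUrl, String message) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/send"))
                .header("Content-Type", "text/plain")
                .POST(HttpRequest.BodyPublishers.ofString(message))
                .build();
    }

    public static void main(String[] args) throws Exception {
        // Assumed base URL; adjust host and port to your deployment.
        HttpRequest request = buildSendRequest("http://localhost:8080", "hello kafka");
        HttpResponse<String> response =
                HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println("HTTP status: " + response.statusCode());
    }
}
```

Each successful request should lead to one message on my-topic, which the consumer in the next step prints.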

c. Create a consumer

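import java.util.HashMap;
import java.util.Map;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.common.serialization.StringDeserializer;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.annotation.EnableKafka;
import org.springframework.kafka.config.ConcurrentKafkaListenerContainerFactory;
import org.springframework.kafka.core.ConsumerFactory;
import org.springframework.kafka.core.DefaultKafkaConsumerFactory;

@Configuration
@EnableKafka
public class KafkaConsumerConfig {

    @Value("${spring.kafka.bootstrap-servers}")
    private String bootstrapServers;

    @Value("${spring.kafka.consumer.group-id}")
    private String groupId;

    // Consumer settings: broker address, group, start position, and String deserializers
    @Bean
    public Map<String, Object> consumerConfigs() {
        Map<String, Object> props = new HashMap<>();
        props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);
        props.put(ConsumerConfig.GROUP_ID_CONFIG, groupId);
        props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");
        props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
        props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
        return props;
    }

    @Bean
    public ConsumerFactory<String, String> consumerFactory() {
        return new DefaultKafkaConsumerFactory<>(consumerConfigs());
    }

    // Container factory used by methods annotated with @KafkaListener
    @Bean
    public ConcurrentKafkaListenerContainerFactory<String, String> kafkaListenerContainerFactory() {
        ConcurrentKafkaListenerContainerFactory<String, String> factory = new ConcurrentKafkaListenerContainerFactory<>();
        factory.setConsumerFactory(consumerFactory());
        return factory;
    }
}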

The @KafkaListener annotation declares a consumer method that receives messages from the topic my-topic.

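import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Service;

@Service
public class KafkaConsumer {

    // groupId matches spring.kafka.consumer.group-id in application.yml
    @KafkaListener(topics = "my-topic", groupId = "my-group")
    public void consume(String message) {
        System.out.println("Received message: " + message);
    }
}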