20230623 Baidu vs. Google: what is the difference?

2023/6/23 18:45

Baidu search: google PDK

[What Baidu could invest in for the long term]

https://blog.csdn.net/cf2SudS8x8F0v/article/details/126187739

Can everyone make chips for free? Google's open source chip program has released 90nm, 130nm and 180nm process design kits

Recently, Google announced a partnership with GlobalFoundries to jointly release a process design kit (PDK) for the GlobalFoundries 180MCU technology platform under the Apache 2.0 license, along with free silicon realization of open source designs, manufactured through Efabless (an open innovation and hardware creation platform for "smart" products).

By comparison, for mainland China: Baidu and SMIC could set up a program for master's and doctoral students in integrated circuit design, select and collect excellent IP for free implementation in physical silicon [not just on paper], and let VCs bring it to market.

For successfully incubated companies, Baidu would make strategic investments and, under equal conditions, hold a right of first refusal on acquisition!

Tencent/NetEase: Ma Huateng and Ding Lei make games, so they could build game design software along the lines of the Adobe suite (PS, AI, etc.).

Alibaba: Jack Ma could build industrial software (CAD/CAM).

Huawei: Ren Zhengfei could build EDA (electronic design automation) software to replace Protel/PADS.

Reference materials:

https://www.toutiao.com/article/7126006831293399584

Google's open source PDK plan, advancing to 90nm

2022-07-30 11:45·Semiconductor Industry Observation

http://www.eepw.com.cn/article/202206/435386.htm

What's in Google's open source PDK? The early adopters look like this!

Time: 2022-06-21

https://www.eetop.cn/semi/6956944.html

The free chip manufacturing plan goes one step further! GlobalFoundries joins the Google chip open source project, and the 180nm PDK is provided for free!

GitHub link:

https://github.com/google/gf180mcu-pdk (180nm)

https://github.com/google/skywater-pdk (130nm)

Google Open Silicon Developer Portal:

https://developers.google.com/silicon

Chip Chat: Conversations with AI models can help create microprocessing chips, NYU Tandon researchers discover | NYU Tandon School of Engineering

https://www.toutiao.com/article/7246562810065519164

GPT-4 used only 19 rounds of dialogue to create a 130nm chip, overcoming HDL, a huge challenge for the chip design industry

Original 2023-06-20 08:49 Xinzhiyuan

Edited by: Aeneas Run

【Xinzhiyuan Introduction】GPT-4 can already design chips! HDL, a long-standing hurdle in the chip design industry, has been successfully tackled by GPT-4. Moreover, the 130nm chip it designed has been successfully taped out.

GPT-4 can already help humans make chips!

Using only plain English conversations, researchers at NYU's Tandon School of Engineering built a chip using GPT-4.

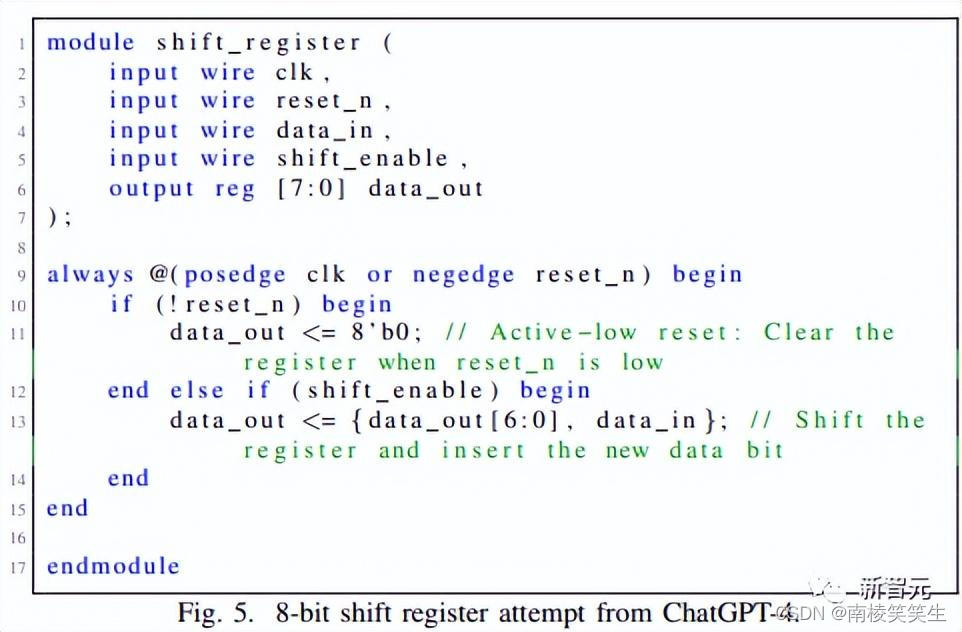

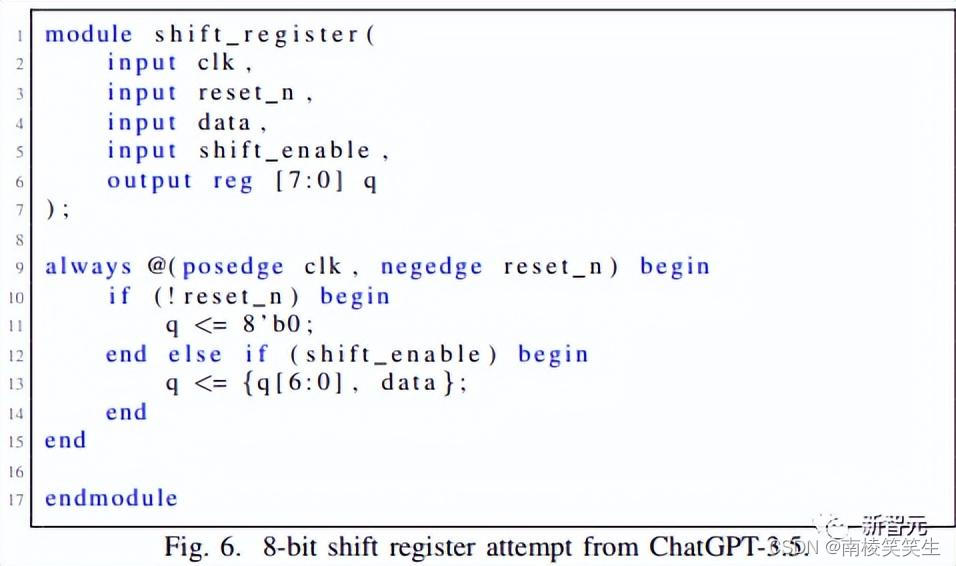

Specifically, GPT-4 generated viable Verilog through back-and-forth dialogue. The benchmarks and the processor were then sent to the Skywater 130 nm shuttle and successfully taped out.

This achievement is unprecedented.

Paper address: https://arxiv.org/pdf/2305.13243.pdf

This means that, with the help of large language models, HDL, a major hurdle in the chip design industry, can be overcome. Chip development will speed up considerably and the barrier to entry for chip design will drop, so that people without specialized skills can design chips.

"It can be argued that this research has produced the first HDL (Hardware Description Language) fully generated by AI, which can be directly used to manufacture physical chips," the researchers said.

The HDL problem was successfully solved by GPT-4

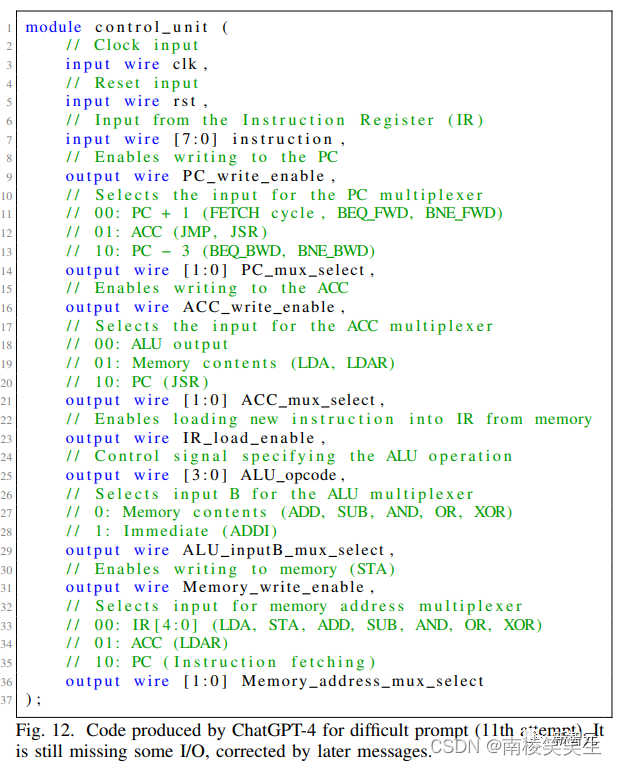

As shown in the figure above, the Verilog, a crucial part of the code for designing and manufacturing the chip, was generated by the researchers by prompting GPT-4.

In the NYU study, two hardware engineers designed a new 8-bit accumulator-based microprocessor architecture simply by talking to GPT-4 in English.

The chip designed with GPT-4 evidently reached a manufacturable standard, since the researchers then sent it to be fabricated on the Skywater 130nm shuttle.

This marks a milestone: the first IC designed with a large language model to actually be fabricated.

Hardware description languages (HDLs) have long been a major challenge for the chip design industry.

Because writing HDL code requires very specialized knowledge, many engineers find it difficult to master.

If large language models can take over the work of writing HDL, engineers can concentrate on more useful things.

Facing the first chip he designed, Dr. Pearce said with emotion: "I am not an expert in chip design at all, but I have designed a chip. This is what is impressive."

Typically, the first step in developing any type of hardware (including chips) is to describe the functionality of the hardware in everyday language.

Specially trained engineers then translate this description into hardware description language (HDL), which creates the actual circuit elements that allow the hardware to perform tasks.

Verilog is a classic example of an HDL. In this study, large language models were able to generate viable Verilog through back-and-forth dialogue. The benchmarks and the processor were then sent to the Skywater 130 nm shuttle for tapeout.

Dr. Hammond Pearce, a research assistant professor in the Department of Electrical and Computer Engineering and the Center for Cyber Security at New York University Tandon, said that he started the Chip Chat project to explore the capabilities of large language models in hardware design.

In their view, these large language models are not just "toys", but have the potential to do more. To test this concept, the Chip Chat project was born.

We all know that both OpenAI's ChatGPT and Google's Bard can generate software code in different programming languages, but their application in hardware design has not been widely studied.

And this NYU study shows that AI can not only generate software code, but also benefit hardware manufacturing.

The advantage of a large language model is that we can interact with it conversationally, improving the hardware design through back-and-forth exchanges.

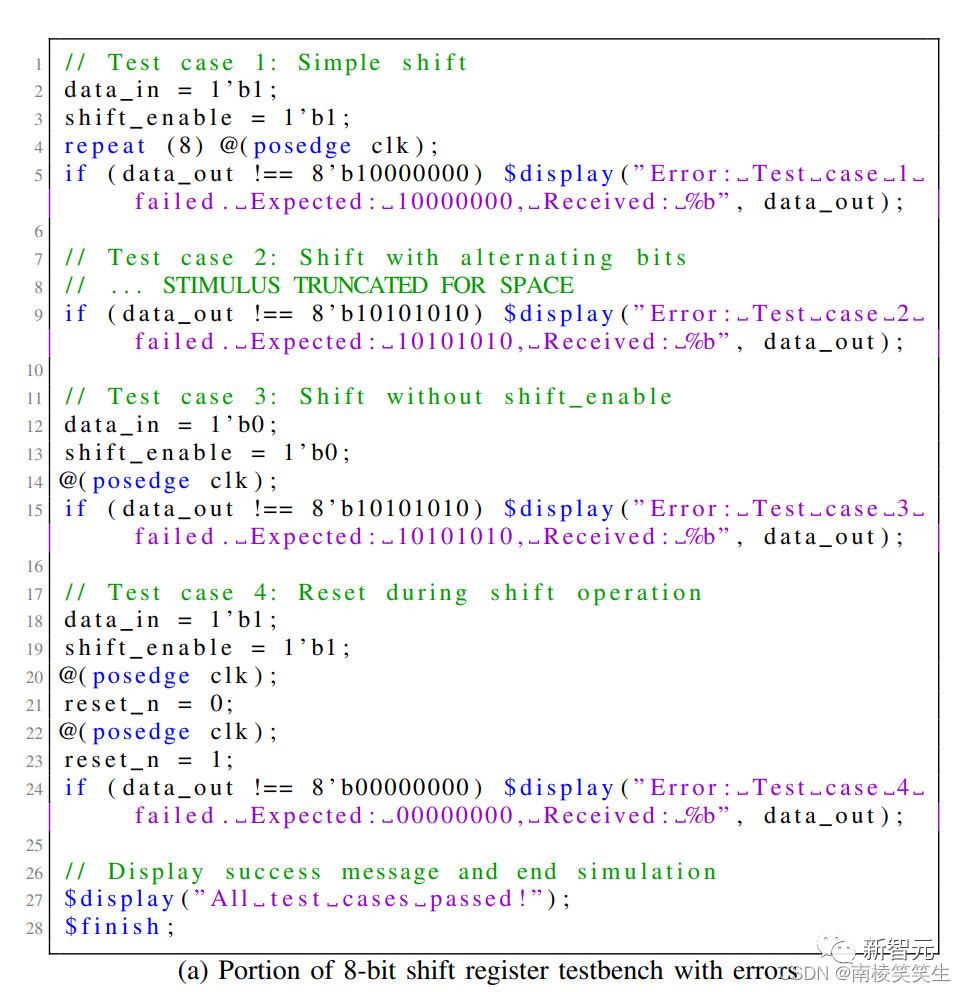

The research team worked through eight hardware design examples using large language models, specifically generating Verilog code for both functional and verification purposes.

Previously, the researchers had tested how well large language models convert English to Verilog, but they found that the best Verilog was produced when human engineers were part of the interaction.

This research is not just at the experimental level. The researchers suggest that, if this approach is put into practice in real-world settings, large language models could reduce human error in the HDL translation process, which would improve productivity, shorten chip design time and time to market, and free chip designers to produce more creative designs.

In addition, this process greatly reduces the need for HDL fluency for chip designers.

Because writing HDL is a relatively rare skill, it is a major hurdle for many people seeking chip design jobs.

So, if the large language model is really used in chip design, is it feasible at this stage?

The researchers said that the relevant safety factors and possible problems need to be identified and resolved through further testing.

The chip shortage during the pandemic hindered the supply of cars and other chip-dependent devices. If large language models can really design chips in practice, this shortage would undoubtedly be greatly alleviated.

Four major LLMs face off in chip design

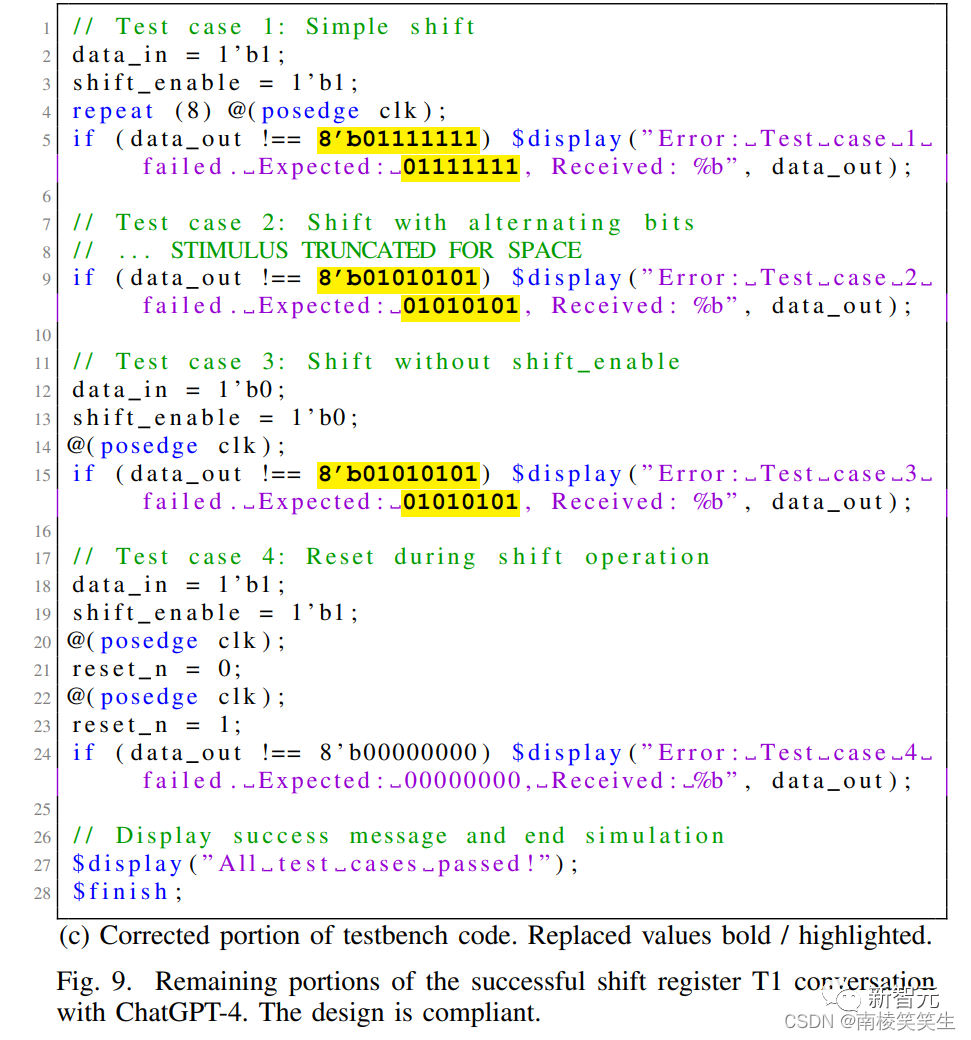

The researchers first set up a design flowchart and evaluation criteria to score the performance of the large language model in chip design. The dialog framework forms a feedback loop.

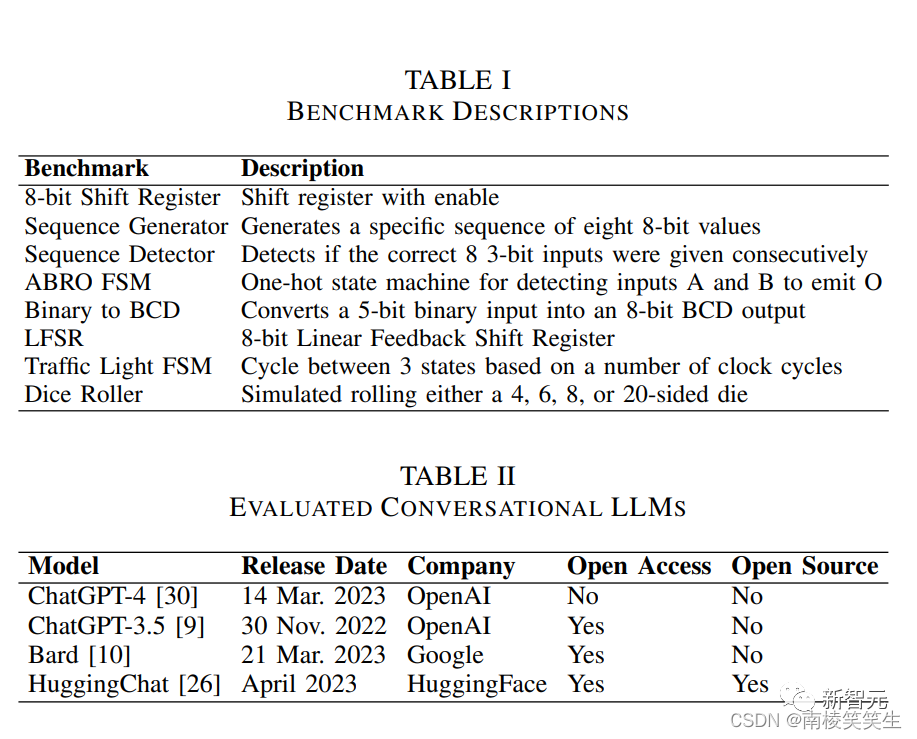

Through this "semi-automated" process, the researchers wanted to compare the ability of four large language models (GPT-4, ChatGPT, Bard, HuggingChat) to execute chip design.

The researchers first fed the prompts shown in the figure below to each large language model and asked it to generate two different documents (a design and a testbench).

The researchers then asked experienced engineers to evaluate whether the output was usable.

If the output did not meet the standard, the researchers had the model regenerate it up to five more times from the same prompt.

If it still did not meet the requirements, the model was considered unable to complete the workflow.

Once the design and testbench were complete, they were compiled with Icarus Verilog (iverilog); if compilation succeeded, they went on to simulation.

If the flow ran through without reporting any errors, the design passed.

But if an error was reported at any step, the error was fed back to the model so that it could propose a fix itself. This is called Tool Feedback (TF).

If the same error occurred three times, the user gave Simple Human Feedback (SHF).

If errors persisted, the model was given progressively more detailed feedback: Moderate Human Feedback (MHF), then Advanced Human Feedback (AHF).

If there are still errors, the model is considered unable to complete the process.
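The escalation ladder described above can be sketched in Python. This is only a minimal illustration, not the researchers' tooling: `run_feedback_loop`, `run_tools`, `ask_model`, and the `max_rounds` cap are hypothetical names introduced here, and applying the "same error three times" rule at every level (not just at TF) is an assumption. In a real flow, `run_tools` would wrap an iverilog compile followed by a simulation run.

```python
from enum import IntEnum
from typing import Callable, Dict, Optional, Tuple


class Level(IntEnum):
    """Feedback levels named in the text, ordered by increasing human effort."""
    TF = 0   # Tool Feedback: the tool's error message is passed back as-is
    SHF = 1  # Simple Human Feedback
    MHF = 2  # Moderate Human Feedback
    AHF = 3  # Advanced Human Feedback


def run_feedback_loop(
    design: str,
    run_tools: Callable[[str], Optional[str]],
    ask_model: Callable[[str, str, Level], str],
    same_error_limit: int = 3,   # assumption: reused at every level
    max_rounds: int = 50,        # assumption: safety cap, not from the article
) -> Tuple[bool, Level, str]:
    """Repair `design` until the tools report no error or feedback runs out.

    run_tools(design) returns None on success, otherwise an error message.
    ask_model(design, error, level) returns a revised design.
    Returns (passed, feedback level reached, final design).
    """
    level = Level.TF
    seen: Dict[str, int] = {}  # how often each error appeared at this level
    for _ in range(max_rounds):
        error = run_tools(design)
        if error is None:
            return True, level, design
        seen[error] = seen.get(error, 0) + 1
        if seen[error] >= same_error_limit:
            if level == Level.AHF:
                # Even the most detailed feedback did not help: give up.
                return False, level, design
            level = Level(level + 1)  # escalate TF -> SHF -> MHF -> AHF
            seen.clear()
        design = ask_model(design, error, level)
    return False, level, design
```

The returned level corresponds to the "Outcome" idea in the article: it records the feedback stage at which the run succeeded or was abandoned.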

GPT-4, ChatGPT win

Following the process above, the researchers tested the quality of the Verilog that the four large language models (GPT-4, ChatGPT, Bard, HuggingChat) generated for hardware design.

With exactly the same prompts, the results were as follows:

Both GPT-4 and ChatGPT met the specifications and ultimately passed the entire design process. Bard and HuggingChat both failed to meet the specifications and so did not move on to further testing.

Because Bard and HuggingChat performed poorly, the researchers focused only on GPT-4 and ChatGPT for the rest of the process.

The comparison between GPT-4 and ChatGPT after the full test process is shown in the figure below.

Outcome indicates the feedback stage at which success or failure was reached.

GPT-4 performed very well, basically passing most of the tests.

In most cases the test ended at the tool feedback (TF) stage, but the testbenches required human feedback.

ChatGPT performed noticeably worse than GPT-4. Most of its attempts failed the tests, and most of the results that did pass fell short of the overall standard.

Exploring GPT-4-assisted chip design in a real chip design flow

This standardized testing process left GPT-4 as the only model that qualified.

The research team decided to put it to work in an actual chip flow, solving problems that arise in real-world chip design and manufacturing.

Specifically, the research team asked an experienced hardware design engineer to use GPT-4 to produce some more complex chip designs and to check the results qualitatively.

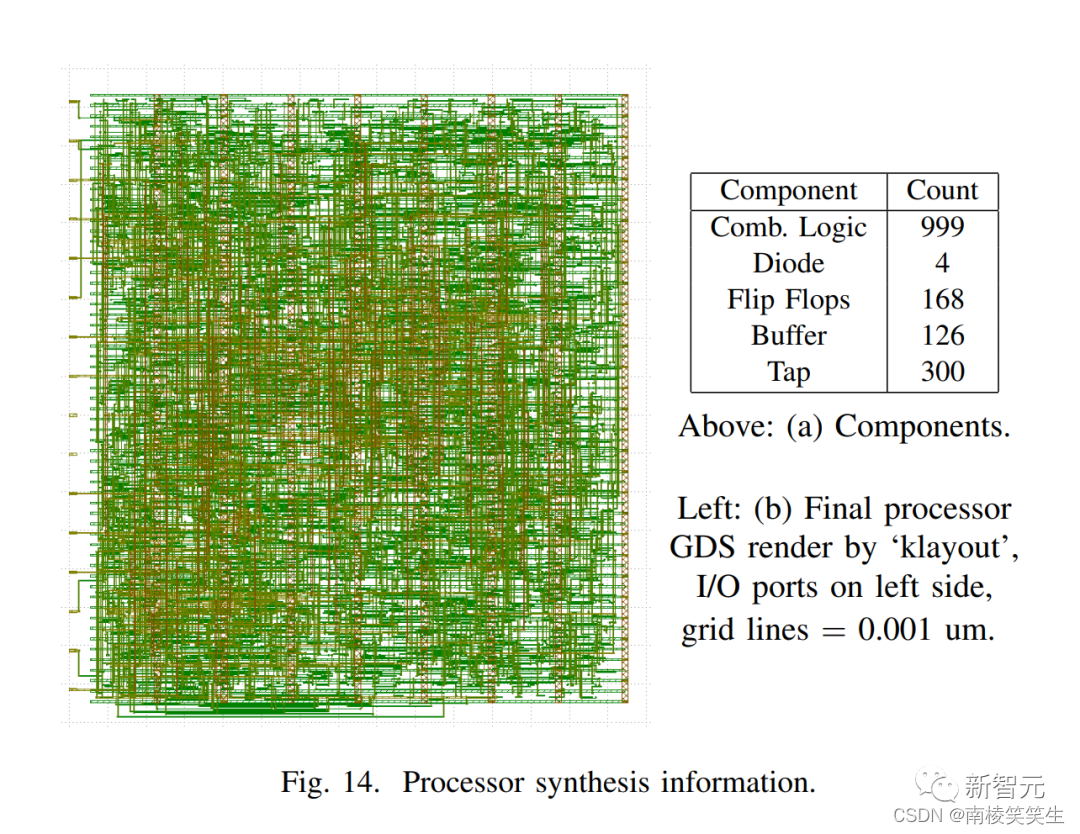

All of the Verilog for the chip (excluding the top-level Tiny Tapeout wrapper) was written using GPT-4.

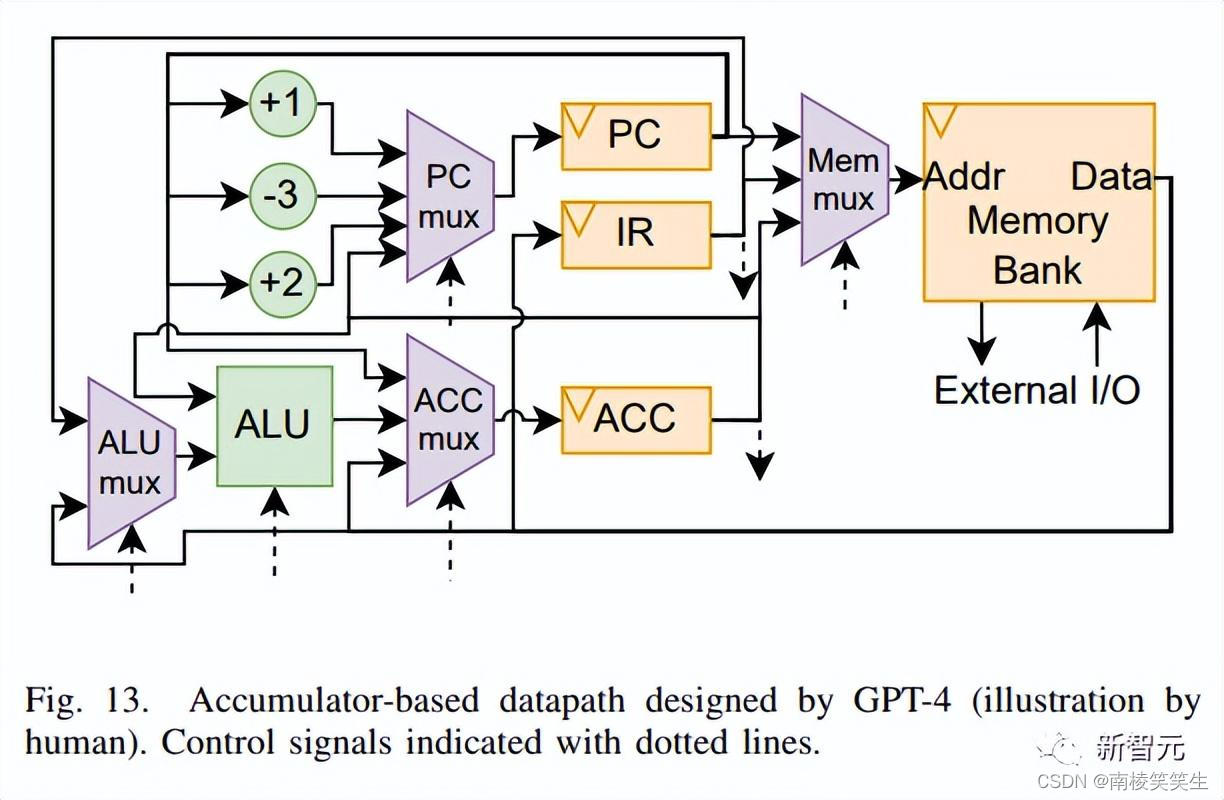

Using the prompt shown in the figure below, the hardware design engineers and GPT-4 began designing an 8-bit accumulator-based, von Neumann-type processor with 32 bytes of memory.

During the design process, human engineers were responsible for guiding GPT-4 and verifying its output.

GPT-4 alone wrote the processor's Verilog code and also developed most of the processor's specification.

Specifically, the research team broke the larger design project into subtasks, each with its own "conversation thread" within the interface.

Since ChatGPT-4 does not share information between threads, the engineers copied the relevant information from the previous thread into the first message of the new one, gradually building up a "base specification" that defined the processor.
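The copy-forward step can be pictured with a small Python sketch. It is an illustration under assumptions, not the engineers' actual procedure: `first_message`, the section names, and the bracketed layout are hypothetical, and the placeholder strings stand in for text that would be copied from earlier threads.

```python
from typing import Dict


def first_message(base_spec: Dict[str, str], task: str) -> str:
    """Build the opening message of a fresh thread.

    The new thread knows nothing about earlier ones, so the accumulated
    specification is pasted in ahead of the new subtask.
    """
    parts = ["Base specification so far:"]
    for section, text in base_spec.items():
        parts.append(f"[{section}]\n{text.strip()}")
    parts.append(f"Task for this thread:\n{task.strip()}")
    return "\n\n".join(parts)


# The specification grows as each thread finishes (placeholder contents).
spec = {"ISA": "(text copied from the ISA thread)"}
spec["Registers"] = "(text copied from the register thread)"
print(first_message(spec, "Design the ALU module in Verilog."))
```

Each finished thread adds one more section, so later threads start with a longer opening message.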

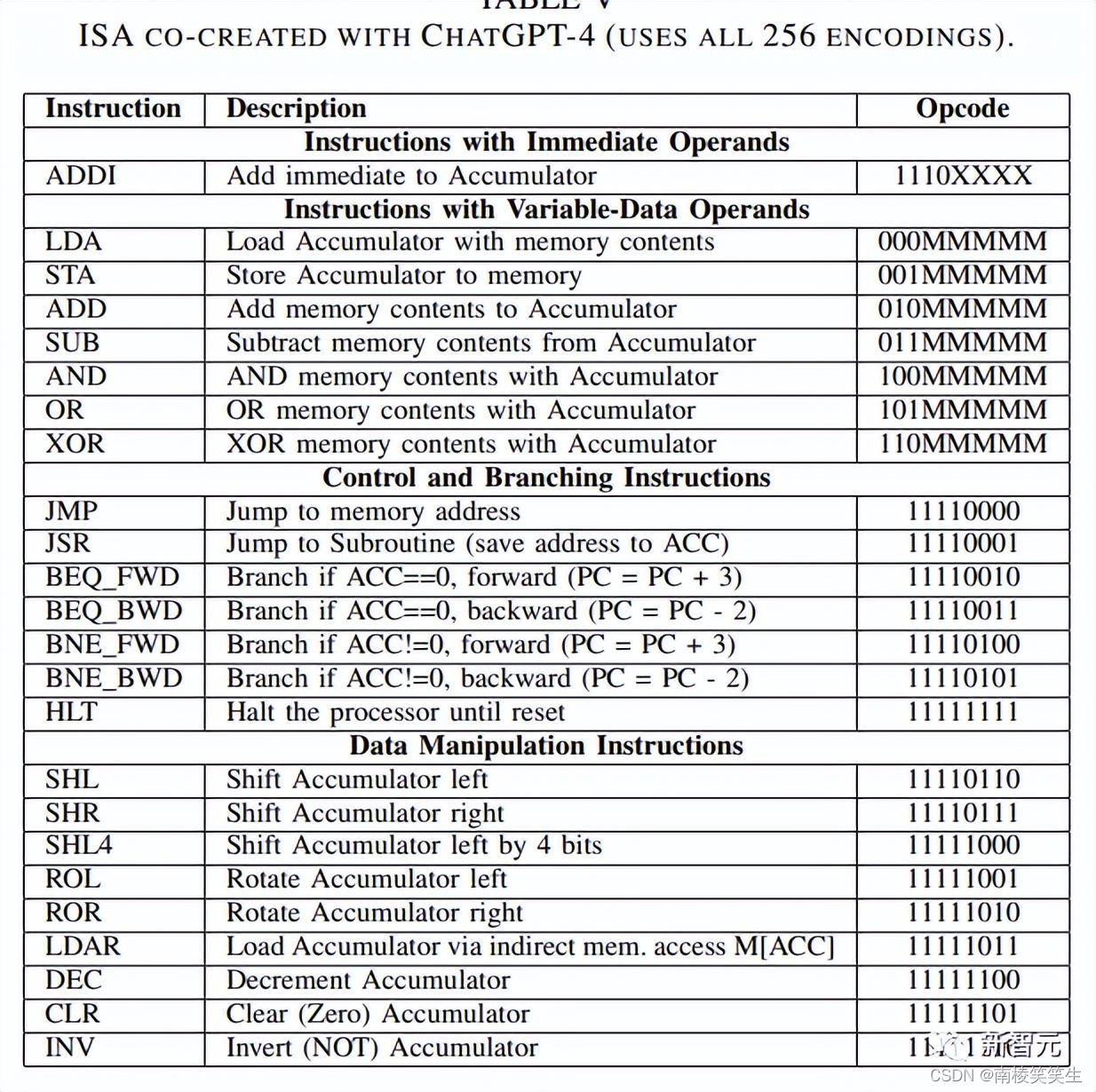

The base specification ultimately includes definitions of the ISA, register list, memory bank, ALU, and control unit, as well as a high-level overview of what the processor should do in each cycle.
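To make "what the processor should do in each cycle" concrete, here is a toy Python model of an 8-bit accumulator machine with 32 bytes of shared program/data memory. The instruction set below is hypothetical and is NOT the ISA from the paper: the opcode names, the 3-bit opcode plus 5-bit address encoding, and the `encode` and `run` helpers are all assumptions chosen only because 32 bytes fit a 5-bit address.

```python
from typing import List, Tuple

MEM_SIZE = 32  # bytes of unified (von Neumann) memory, as stated in the text

# Hypothetical opcodes (top 3 bits); the low 5 bits address one of 32 bytes.
LDA, STA, ADD, SUB, JMP, JZ, HLT = range(7)


def encode(op: int, addr: int = 0) -> int:
    """Pack a hypothetical instruction into one byte."""
    return ((op & 0b111) << 5) | (addr & 0b11111)


def run(memory: List[int], max_steps: int = 1000) -> Tuple[int, List[int]]:
    """Execute until HLT (or max_steps); return (accumulator, memory)."""
    if len(memory) > MEM_SIZE:
        raise ValueError("program does not fit in 32 bytes")
    mem = [b & 0xFF for b in memory] + [0] * (MEM_SIZE - len(memory))
    acc, pc = 0, 0
    for _ in range(max_steps):
        instr = mem[pc]                      # fetch from the shared memory
        op, addr = instr >> 5, instr & 0b11111
        pc = (pc + 1) % MEM_SIZE
        if op == LDA:
            acc = mem[addr]
        elif op == STA:
            mem[addr] = acc
        elif op == ADD:
            acc = (acc + mem[addr]) & 0xFF   # 8-bit wraparound
        elif op == SUB:
            acc = (acc - mem[addr]) & 0xFF
        elif op == JMP:
            pc = addr
        elif op == JZ:
            if acc == 0:
                pc = addr
        else:                                # HLT or the unused opcode 7
            break
    return acc, mem


# Program in bytes 0..3, data in bytes 29..31: mem[31] = mem[29] + mem[30].
program = [encode(LDA, 29), encode(ADD, 30), encode(STA, 31), encode(HLT)]
program += [0] * 25 + [5, 7, 0]
acc, mem = run(program)
print(acc, mem[31])
```

Because code and data share the same 32 bytes, every byte a program uses for instructions is one less for data, which is the kind of constraint a specification for such a design has to pin down.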

Most of the information in this specification was generated by ChatGPT-4, with only some copy/paste work by engineers and minor editing.

ChatGPT-4 sometimes outputs suboptimal responses.

When this happened, the engineers had two choices: continue the conversation and nudge the model to fix the response, or use the interface to force ChatGPT-4 to "restart" the response, i.e. regenerate the result as if the previous answer had never happened.

Choosing between the two requires professional judgment: continuing the dialogue lets the user specify which parts of the previous response were good or bad, while regenerating keeps the whole dialogue shorter and more succinct (valuable given the limited context window).
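The trade-off can be seen by modelling the conversation as a list of messages. The sketch below is illustrative only: the `(role, text)` representation, the function names, and the use of character count as a stand-in for context-window usage are assumptions, not details of the ChatGPT interface.

```python
from typing import List, Tuple

Message = Tuple[str, str]  # (role, text); roles here are "user" and "assistant"


def continue_dialogue(history: List[Message], critique: str) -> List[Message]:
    """Keep the weak answer in the thread and ask for a fix."""
    return history + [("user", critique)]


def regenerate(history: List[Message]) -> List[Message]:
    """Drop the trailing assistant answer so the last prompt can be re-asked."""
    trimmed = list(history)
    while trimmed and trimmed[-1][0] == "assistant":
        trimmed.pop()
    return trimmed


def context_chars(history: List[Message]) -> int:
    """Crude proxy for context usage: total characters of message text."""
    return sum(len(text) for _, text in history)


history = [("user", "Plan the control signals."), ("assistant", "draft plan")]
longer = continue_dialogue(history, "The jump logic is wrong; fix it.")
shorter = regenerate(history)
print(context_chars(shorter), context_chars(history), context_chars(longer))
```

Continuing grows the history with both the weak answer and the critique, while regenerating discards the weak answer, which is why it conserves context at the cost of losing targeted feedback.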

Nonetheless, as can be seen from the '#Restart' column in the figure below, the number of restarts tends to decrease as engineers become more experienced with using ChatGPT-4.

In the paper, the researchers show one of the most difficult prompts and responses, which took 10 restarts: a section on control signal planning.

Design results

The full conversation on the design process can be found at the link below:

https://zenodo.org/record/7953724

The Instruction Set Architecture (ISA) that GPT-4 helped generate is shown in the figure below.

The researchers drew the datapath of the GPT-4-designed chip, as shown in the figure below.

Finally, the researchers commented: "Large language models can act as a force multiplier for design, allowing designers to rapidly explore the design space and iterate."

"Overall, GPT-4 can generate usable code and save a lot of design time."

References:

https://engineering.nyu.edu/news/chip-chat-conversations-ai-models-can-help-create-microprocessing-chips-nyu-tandon-researchers