Introduction


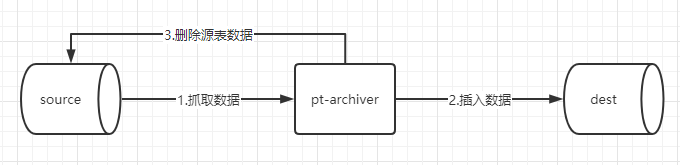

pt-archiver is a tool for archiving table rows. It is designed as a low-impact, high-performance archiver that removes old data from tables without placing much load on OLTP queries. Archived rows can be inserted into another table, which does not need to be on the same server, or written to a file in a format suitable for LOAD DATA INFILE. It can also do neither and simply delete rows incrementally.
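The three modes described above (archive to a table, archive to a file, or delete only) correspond to the `--dest`, `--file` and `--purge` options. The following is a minimal sketch that only prints the resulting command lines; the `mode_flags` helper, the `archive_db` database name and the `/tmp/archiver.dat` path are illustrative assumptions, not part of pt-archiver.

```shell
#!/bin/sh
# Sketch: map each archiving mode to the pt-archiver option that selects it.
# mode_flags is a hypothetical helper; the DSN values and file path are placeholders.
mode_flags() {
    case "$1" in
        table) echo "--dest h=127.0.0.1,D=archive_db,t=t_course_info" ;;
        file)  echo "--file /tmp/archiver.dat" ;;
        purge) echo "--purge" ;;
        *)     echo "unknown mode: $1" >&2; return 1 ;;
    esac
}

# Print (do not run) one command line per mode.
for mode in table file purge; do
    echo "pt-archiver --source h=127.0.0.1,D=course-study,t=t_course_info --where 'f_course_time < 100000' $(mode_flags "$mode")"
done
```

Printing the command first makes it easy to review which mode will be used before anything touches the source table.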

Features:

1. Selects the rows to clean up according to a WHERE condition

2. Supports committing transactions in batches and fetching data in batches

3. Supports deleting a row from the source only after it has been inserted successfully at the destination

4. Supports backup to a file

5. In batch mode, automatically matches the primary key and supports repeated archiving

6. Archives about 6 times faster than a stored-procedure script (local test)

7. Supports archiving without writing to the binlog, which avoids useless data taking up space and avoids report-parsing problems

1. Example

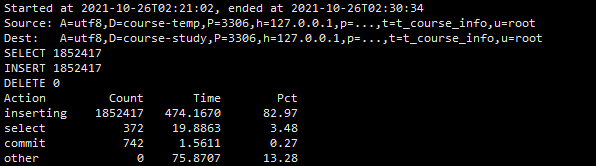

1. Conditional archiving, source table data not deleted, non-batch insert

pt-archiver --source h=127.0.0.1,P=3306,u=root,p=091013,D=course-temp,t=t_course_info,A=utf8 --dest h=127.0.0.1,P=3306,u=root,p=091013,D=course-study,t=t_course_info,A=utf8 --charset=utf8 --where "f_course_time < 100000 " --progress=10000 --txn-size=5000 --limit=5000 --statistics --no-delete
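To get a feel for the batching options in the command above, the sketch below estimates how many fetches, commits and progress lines a run would produce. The row count of 1,000,000 is an assumed figure for illustration only, and the results are rough estimates rather than exact counts reported by pt-archiver.

```shell
#!/bin/sh
# ceil_div A B: integer ceiling of A / B using POSIX shell arithmetic.
ceil_div() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

ROWS=1000000      # assumed number of rows matching --where (illustrative)
LIMIT=5000        # --limit: rows fetched per SELECT
TXN_SIZE=5000     # --txn-size: rows per transaction
PROGRESS=10000    # --progress: rows between progress lines

echo "fetch batches (approx):  $(ceil_div "$ROWS" "$LIMIT")"
echo "commits (approx):        $(ceil_div "$ROWS" "$TXN_SIZE")"
echo "progress lines (approx): $(ceil_div "$ROWS" "$PROGRESS")"
```

Smaller values for `--limit` and `--txn-size` mean more round trips and commits but shorter transactions, which is the trade-off to tune against OLTP load.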

2. Conditional archiving, source table data not deleted, batch insert

pt-archiver --source h=127.0.0.1,P=3306,u=root,p=091013,D=course-temp,t=t_course_info,A=utf8 --dest h=127.0.0.1,P=3306,u=root,p=091013,D=course-study,t=t_course_info,A=utf8 --charset=utf8 --where "f_course_time < 100000 " --progress=5000 --txn-size=5000 --limit=1000 --statistics --no-delete --bulk-insert --ask-pass

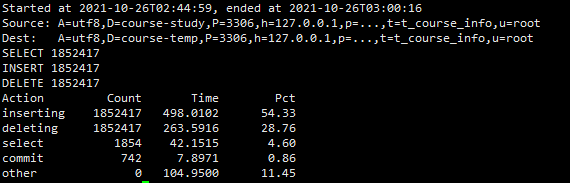

3. Conditional archiving, source table data deleted, non-batch insert, non-batch delete

pt-archiver --source h=127.0.0.1,P=3306,u=root,p=091013,D=course-study,t=t_course_info,A=utf8 --dest h=127.0.0.1,P=3306,u=root,p=091013,D=course-temp,t=t_course_info,A=utf8 --charset=utf8 --where "f_course_time < 100000 " --progress=5000 --txn-size=5000 --limit=1000 --statistics --purge

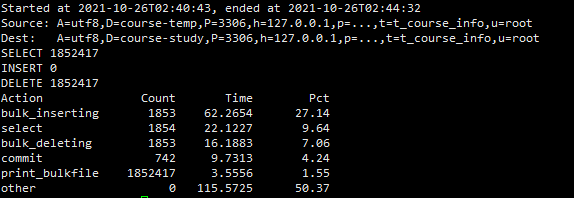

4. Conditional archiving, source table data deleted, batch insert, batch delete

pt-archiver --source h=127.0.0.1,P=3306,u=root,p=091013,D=course-temp,t=t_course_info,A=utf8 --dest h=127.0.0.1,P=3306,u=root,p=091013,D=course-study,t=t_course_info,A=utf8 --charset=utf8 --where "f_course_time < 100000 " --progress=5000 --txn-size=5000 --limit=1000 --bulk-insert --bulk-delete --statistics --purge

5. Conditional archiving with a condition on a related table

pt-archiver --source h=127.0.0.1,P=3306,u=root,p=091013,D=course-temp,t=t_course_info,A=utf8 --dest h=127.0.0.1,P=3306,u=root,p=091013,D=course-study,t=t_course_info,A=utf8 --charset=utf8 --where "f_course_time < 1000 and exists (select 1 from t_course_category where t_course_category.f_id = t_course_info.f_category_id and t_course_category.f_state = '1') " --progress=5000 --txn-size=5000 --limit=1000 --statistics --no-delete --bulk-insert --ask-pass

2. Performance comparison

3. Backup to external file

Export to an external file without deleting the data in the source table

pt-archiver --source h=127.0.0.1,D=course-study,t=t_course_info,u=root,p=123456 --where '1=1' --no-check-charset --no-delete --file="/tmp/source/archiver.dat"
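Because the exported file is in a format suitable for LOAD DATA INFILE, it can in principle be loaded back into a table later. The sketch below only builds and prints such a statement. The `load_sql` helper and the target table `t_course_info_restore` are hypothetical, and whether the `LOCAL` variant is permitted depends on the server and client configuration.

```shell
#!/bin/sh
# Sketch: build a LOAD DATA statement for a file written by pt-archiver --file.
# load_sql is a hypothetical helper; t_course_info_restore is an assumed table name.
ARCHIVE_FILE="/tmp/source/archiver.dat"
TARGET_TABLE="t_course_info_restore"

load_sql() {
    # $1 = path of the archive file, $2 = table to load into
    printf "LOAD DATA LOCAL INFILE '%s' INTO TABLE %s CHARACTER SET utf8;\n" "$1" "$2"
}

# Print the statement for review.
load_sql "$ARCHIVE_FILE" "$TARGET_TABLE"

# To actually execute it, something along these lines could be used
# (assumes local_infile is enabled on both client and server):
#   mysql --local-infile=1 -h 127.0.0.1 -u root -p -D course-study \
#         -e "$(load_sql "$ARCHIVE_FILE" "$TARGET_TABLE")"
```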

4. Installation

Installation:

# First download the Percona Toolkit package

wget "https://www.percona.com/downloads/percona-toolkit/3.0.3/binary/tarball/percona-toolkit-3.0.3_x86_64.tar.gz"

# Extract the downloaded archive

tar xf percona-toolkit-3.0.3_x86_64.tar.gz

# Enter the extracted directory

cd percona-toolkit-3.0.3

# Install the build dependencies, then build and install

yum install perl-ExtUtils-CBuilder perl-ExtUtils-MakeMaker

perl Makefile.PL

make

make install

# After installation, the pt-* commands are available

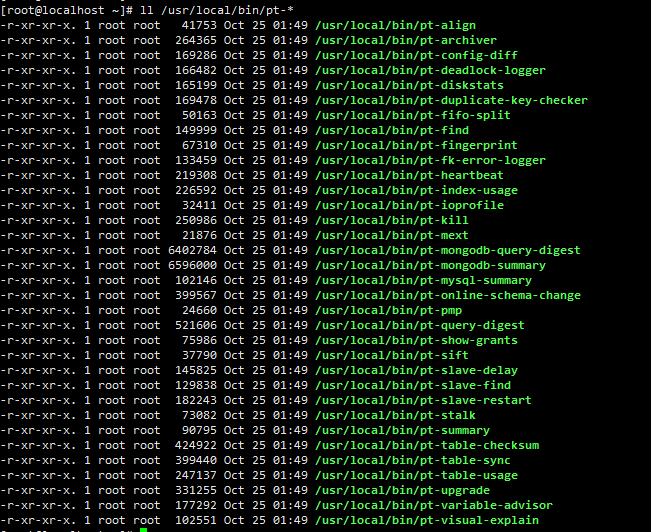

ll /usr/local/bin/pt-*
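Besides listing the files, it can be useful to confirm that the tool is actually reachable through `PATH`. The following is a small sketch using the POSIX `command -v` builtin; `check_tool` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: report whether a command can be found in PATH.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1: found at $(command -v "$1")"
    else
        echo "$1: not found in PATH"
        return 1
    fi
}

# If the lookup fails, /usr/local/bin may be missing from PATH.
check_tool pt-archiver || echo "check that /usr/local/bin is in PATH"
```

Once the tool is found, `pt-archiver --version` prints the installed version.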

5. Common commands

--where 'id<3000' sets the condition that selects the rows to process

--limit 1000 fetches 1000 rows at a time for pt-archiver to process

--txn-size 1000 commits a transaction every 1000 rows

--progress 5000 prints progress information every 5000 rows processed

--statistics prints statistics at the end: the start time, the end time, the number of rows selected, the number of rows archived, the number of rows deleted, and the total time and share of time spent in each stage, which is useful for tuning. Unless --quiet is given, pt-archiver prints its execution output by default

--charset=UTF8 sets the character set to UTF8; it needs to match the character set of the database being operated on

--no-delete keeps the original data. Note: if this option is not specified, the archived rows are removed from the source table during the run

--bulk-delete deletes old data on the source in batches

--bulk-insert inserts data into the dest host in batches (the general log on dest shows that the data is inserted through LOAD DATA LOCAL INFILE)

--dry-run simulates execution: prints the queries without running them

--source the source data

--dest the target data

--local adds the NO_WRITE_TO_BINLOG keyword, so OPTIMIZE and ANALYZE statements are not written to the binlog

--analyze=ds runs ANALYZE TABLE after the operation completes (d means dest, s means source)
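The options above can be combined in a small wrapper that prints the full command, optionally with `--dry-run` appended, so it can be reviewed before it is run. This is a sketch under assumptions: `build_cmd` is a hypothetical helper, the DSNs reuse the example values from earlier in this article without the password, and the function only prints the command rather than executing it.

```shell
#!/bin/sh
# Sketch: assemble a pt-archiver command line from the options listed above.
SRC="h=127.0.0.1,P=3306,u=root,D=course-study,t=t_course_info,A=utf8"
DST="h=127.0.0.1,P=3306,u=root,D=course-temp,t=t_course_info,A=utf8"

build_cmd() {
    # $1 = WHERE clause; $2 = "dry" to append --dry-run (anything else omits it)
    cmd="pt-archiver --source $SRC --dest $DST --charset=utf8"
    cmd="$cmd --where \"$1\" --limit=1000 --txn-size=1000 --progress=5000"
    cmd="$cmd --statistics --no-delete --ask-pass"
    if [ "$2" = "dry" ]; then
        cmd="$cmd --dry-run"
    fi
    echo "$cmd"
}

# Print the dry-run form of the command for review.
build_cmd "id<3000" dry
```

Starting with `--no-delete` and `--dry-run` keeps a first run harmless; both can be dropped once the printed queries look right.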