On June 25, 2023, Jina AI released JinaChat, a multimodal large model API for developers and end users. Traditional large language models usually compete on "more parameters" and "higher benchmark scores". For application developers, however, the APIs of traditional model vendors do not make it possible to build solutions at low cost. The result is the paradox of the AIGC era: everyone is a developer, yet developers do not earn a penny while model API providers earn a great deal. So today, let us see how JinaChat breaks this deadlock.

The Pitfalls of Traditional Large Models: The Sisyphean Cycle

When using a large model in a real production environment, we usually rely on long prompts, few-shot prompts, chain-of-thought prompting, or AutoGPT to complete complex tasks. These techniques all need large context windows, and context is constrained by expensive per-token billing. The first hurdle we face is therefore high cost.

Asking ChatGPT a single question (zero-shot) usually costs 100 to 200 tokens. In continuous-dialogue scenarios such as chatbots and customer service, the cost is far higher: to maintain context memory, traditional large model APIs require developers to resend the earlier messages with every request until the context window is full. For developers, most of the token cost goes to paying for historical messages.
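
To make the cost of resent history concrete, here is a minimal sketch that compares the input tokens billed when the full history is resent on every turn with the tokens billed when only the new message is sent. The 150-token message size is an assumed value picked from the 100 to 200 range above, model replies and the context window cap are ignored for simplicity, and the function names are hypothetical.

```python
def tokens_with_full_history(turns: int, tokens_per_message: int) -> int:
    """Total input tokens when every request resends all earlier messages."""
    # Turn k carries k messages: the k - 1 earlier ones plus the new one.
    return sum(k * tokens_per_message for k in range(1, turns + 1))


def tokens_incremental(turns: int, tokens_per_message: int) -> int:
    """Total input tokens when each request carries only the new message."""
    return turns * tokens_per_message


if __name__ == "__main__":
    turns, size = 20, 150  # assumed values, for illustration only
    full = tokens_with_full_history(turns, size)
    incremental = tokens_incremental(turns, size)
    print(f"full history: {full} tokens")
    print(f"incremental:  {incremental} tokens")
    print(f"share spent on history: {1 - incremental / full:.0%}")
```

With these assumed numbers, the stateless approach bills 31,500 input tokens against 3,000 for the incremental one, so about 90% of the spend goes to history that the model has already seen.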

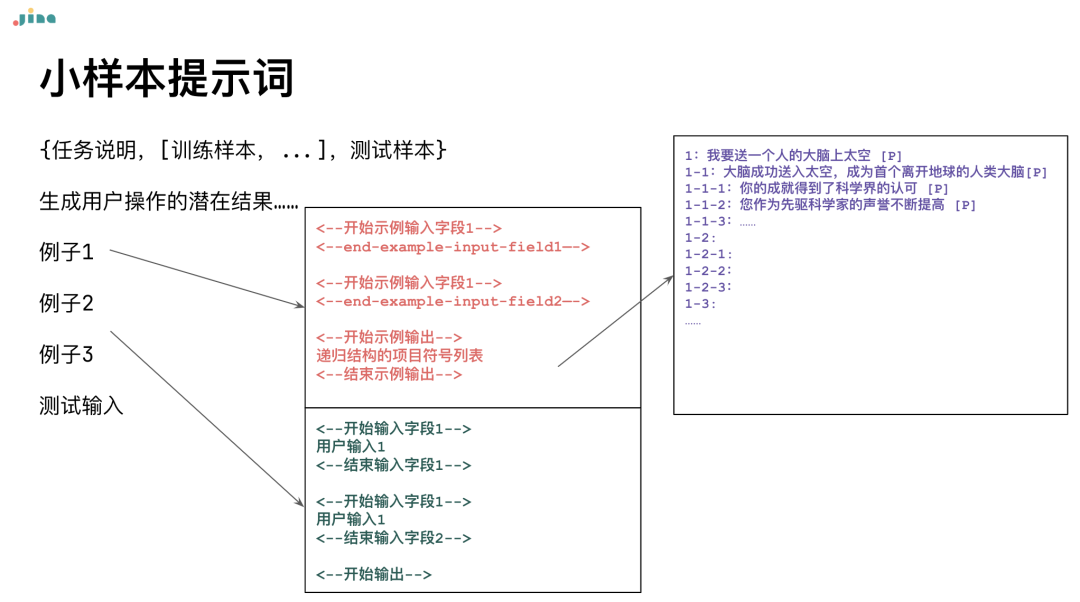

A few-shot prompt is an advanced prompting strategy commonly used with large models. It consists of {task description, [multiple training samples, ...], test sample} and induces the model to give a better answer. The "task description" and "multiple training samples" parts are essentially fixed costs; the only real variable is the test sample. However, traditional large model APIs require developers to send the complete prompt, training samples included, every time they make a few-shot decision, in order to "activate" the model's in-context learning ability. In other words, 90% of the cost of each decision is wasted on repeated prompt text, and less than 10% is spent where it actually matters.
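
The structure described above can be sketched in a few lines. This is an illustration only: the `Input:`/`Output:` layout, the sample reviews, and the function names are assumptions, and whitespace-separated words stand in as a crude proxy for tokens.

```python
from typing import List, Tuple


def build_few_shot_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble {task description, [training samples, ...], test sample}."""
    lines = [task, ""]
    for text, label in examples:
        lines.extend([f"Input: {text}", f"Output: {label}", ""])
    lines.extend([f"Input: {query}", "Output:"])
    return "\n".join(lines)


def fixed_share(task: str, examples: List[Tuple[str, str]], query: str) -> float:
    """Fraction of the prompt's words that are identical on every call."""
    total = len(build_few_shot_prompt(task, examples, query).split())
    variable = len(query.split())  # only the test sample changes per call
    return (total - variable) / total


if __name__ == "__main__":
    task = "Classify the sentiment of each review as positive or negative."
    examples = [
        ("Great battery life", "positive"),
        ("Broke after a week", "negative"),
        ("Fast shipping and solid build", "positive"),
    ]
    query = "The screen is dim"
    print(build_few_shot_prompt(task, examples, query))
    print(f"fixed share: {fixed_share(task, examples, query):.0%}")
```

Even with only three short samples, most of the prompt is the fixed part; longer task descriptions and more samples push the share higher.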

Next comes the even more expensive AutoGPT. Someone once said that while AutoGPT went viral, OpenAI was counting the money. That is because every task in AutoGPT is completed through a chain of thought: to reason as well as possible, each reasoning step includes the preceding chain of thought, which consumes ever more tokens. Soon after AutoGPT was released, we calculated in detail that a simple task run with AutoGPT costs as much as 102 yuan.

Source: Dr. Xiao Han, founder of Jina AI, "Unveiling the cruel truth behind the hustle and bustle of Auto-GPT" (Jina AI official account)

Few-shot prompts and AutoGPT may look like the future, but from a cost perspective they remain out of reach for most users and organizations.

Good news for developers: JinaChat, with low cost and long memory

"How much do the other side's tokens cost?"

"10 cents"

"And how much do ours cost?"

"5 cents"

"Cut it to zero, and throw in multimodality from the start!"

The conversation above may remind you of the game advertisements you have been seeing lately. It also captures one of the driving forces behind JinaChat: a large model API optimized for long prompts that lets developers hold on to their wallets.

Cost advantage compared with similar large models

A traditional large model API cannot save or retrieve conversation history. JinaChat lets developers recall an earlier conversation through its chatId, turning the large model API from stateless to stateful and thereby preserving the memory of long prompts. More importantly, developers pay only the incremental cost of each request instead of paying repeatedly for memory, which greatly reduces their cost of use.

For example, suppose you design a complex agent that has to make decisions based on the dialogue history. With a traditional large model API, you must send everything (agent definition, CoT, memory, and so on) with every request, which is like climbing from the foot of the mountain every time.

With JinaChat, you send only the incremental information, and the model remembers the earlier conversation. You just specify the chatId, much like loading a saved game, and continue climbing from the height you already reached without paying again.
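
As a rough illustration of the incremental pattern, the sketch below builds request bodies that carry only the new user message plus a conversation identifier. It makes no network calls. The placement of `chatId` as a top-level field of the request body is an assumption based on the description above, and `build_request` and `"example-chat-id"` are hypothetical; consult the JinaChat API reference for the actual schema.

```python
import json
from typing import Optional


def build_request(new_message: str, chat_id: Optional[str] = None) -> dict:
    """Build a chat request body that carries only the new user message.

    ASSUMPTION: the conversation identifier travels as a top-level
    "chatId" field alongside "messages".
    """
    body = {"messages": [{"role": "user", "content": new_message}]}
    if chat_id is not None:
        body["chatId"] = chat_id  # resume the stored conversation
    return body


if __name__ == "__main__":
    # First request: the long setup prompt is sent once.
    first = build_request("You are a travel agent. Plan a three-day trip to Berlin.")
    # Later request: only the increment, tied to the earlier conversation.
    # "example-chat-id" is a placeholder for whatever id the service assigns.
    follow_up = build_request("Add a museum on day two.", chat_id="example-chat-id")
    print(json.dumps(first, indent=2))
    print(json.dumps(follow_up, indent=2))
```

The follow-up body stays the same size no matter how long the stored conversation has grown, which is the point of the stateful design.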

You can even continue the chat history of the graphical interface in the API.

Beyond using chatId to "load a save", JinaChat's price per request is also low. Taking the standard subscription as an example, short messages of fewer than 300 tokens are completely free, which covers most simple everyday exchanges. Messages above 300 tokens cost 0.56 yuan each, no matter how long they are. Developers who combine this with chatId can use one long prompt to set up the history and training samples in the first request and send only incremental prompts afterwards, keeping each later request under 300 tokens. This greatly reduces the development cost of complex LLM applications.
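
The pricing rule above lends itself to a small worked example. The sketch below uses the two figures quoted for the standard subscription (free below 300 tokens, 0.56 yuan otherwise); treating a message of exactly 300 tokens as paid is an assumption, as are the session sizes and function names.

```python
from typing import Iterable

FREE_TOKEN_LIMIT = 300   # messages below this size are free (from the text)
FLAT_PRICE_YUAN = 0.56   # flat price for any longer message (from the text)


def request_price(tokens: int) -> float:
    """Price of one request; exactly 300 tokens is assumed to be paid."""
    return 0.0 if tokens < FREE_TOKEN_LIMIT else FLAT_PRICE_YUAN


def session_price(token_counts: Iterable[int]) -> float:
    """Total price of a session, rounded to whole fen."""
    return round(sum(request_price(t) for t in token_counts), 2)


if __name__ == "__main__":
    # One 4000-token setup prompt, then 19 short follow-ups tied to a chatId.
    with_chat_id = [4000] + [120] * 19
    # Same session if the growing history had to be resent on every request.
    resending = [4000 + 120 * k for k in range(20)]
    print(f"with chatId:       {session_price(with_chat_id)} yuan")
    print(f"resending history: {session_price(resending)} yuan")
```

Under these assumptions the session costs 0.56 yuan when only increments are sent and 11.2 yuan when every request carries the full history.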

Go beyond text and lead a new era of multimodal interaction

"Legendary gear from the very start": in JinaChat version 0.1, released on June 26, users can not only chat with JinaChat through text in a ChatGPT-like interface but also chat about pictures by uploading images. Image interaction is an important JinaChat feature; it calls SceneXplain to parse images and understand their content.

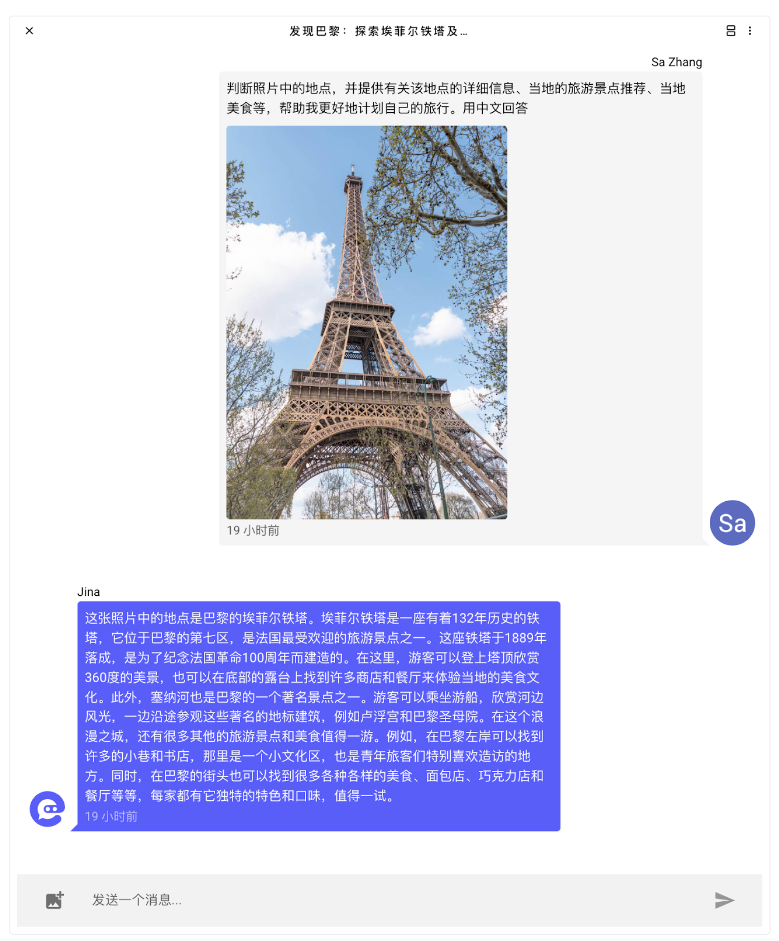

For example, when you send a travel photo, JinaChat can use image recognition to determine where the photo was taken and provide relevant information, such as recommended attractions and local food, to help you plan your trip.

Combined with JinaChat's persistent memory, this makes JinaChat's price/performance ratio the most competitive on the market for complex queries with long conversations and multiple rounds of interaction. Other solutions cannot match it; as the saying goes, "once you pass this village, you will not find this shop again."

As another example, if you send a picture of a car, JinaChat can identify the type of car and give information such as its brand, model, and highlights. This kind of in-depth image interaction offers a new experience.

This multimodal interaction not only provides a richer user and developer experience, but also opens up vast possibilities for cross-domain applications.

At a deeper level, JinaChat lets the capabilities of large language models be put to real use. Building on JinaChat, developers can easily create complex LLM applications with multi-level dialogue structures and multimodal input.

Seamless API integration to broaden developers' horizons

JinaChat's API is fully compatible with OpenAI's chat API. On top of that, it adds features such as image interaction and conversation recovery, making it more interactive. Developers can simply swap in the JinaChat API to deliver a richer, more varied user experience and meet the needs of different application scenarios.

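```python
import os

import openai

# Point the legacy (pre-1.0) openai SDK at the JinaChat endpoint.
openai.api_type = "openai"
openai.api_base = "https://api.chat.jina.ai/v1"
openai.api_version = None
openai.api_key = os.getenv("JINA_CHAT_API_KEY")

response = openai.ChatCompletion.create(
    # Assumed placeholder: the legacy SDK rejects calls without a model
    # argument, and the original snippet does not name one.
    model="gpt-3.5-turbo",
    messages=[{'role': 'user', 'content': 'Tell me a joke'}],
)
print(response)
```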

This is JinaChat: a lower-cost, multimodal large language model service built to benefit developers.

Whether you need an AI conversational assistant or want to develop complex LLM applications, JinaChat offers a powerful and affordable experience.

If you want your application to be more interactive and intelligent, JinaChat will be your first choice. JinaChat will keep being upgraded, improving the user experience through intelligent dialogue and making the ways we communicate more diverse.

Enter the code JCFIRSTCN now to get your first month as a free trial!

Visit chat.jina.ai to join us and explore more possibilities! Users in China will be redirected to the chat.jinaai.cn domain on their first visit to suit the domestic network environment. iOS users should use Safari or open the link on a computer.