1. I/O control method

The previous blog post introduced some basic concepts of device management but did not explain the I/O control methods in detail. One of the main tasks of device management is to control data transfer between devices and memory or the CPU. There are four methods for controlling input/output between peripheral devices and memory, introduced one by one below.

2. Program direct control mode

Also called the program query (polling) mode.

The exchange of information is controlled entirely by the CPU executing a program. The interface used in program query mode contains a data buffer register (data port) and a device status register (status port). When the host performs an I/O operation, it first issues a query signal and reads the device's status, then uses that status to decide whether to transfer data next or keep waiting.

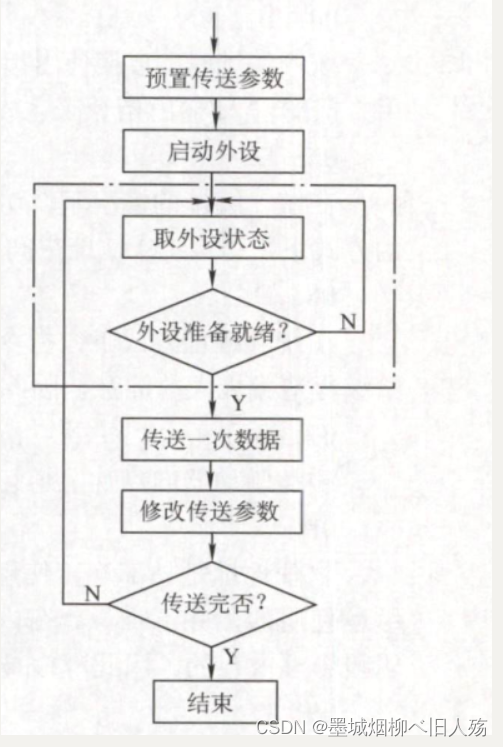

The workflow of program query mode is as follows:

- ① The CPU executes the initialization program and presets the transfer parameters.

- ② The CPU sends a command word to the I/O interface to start the I/O device.

- ③ The CPU reads the device's status information from the peripheral interface.

- ④ The CPU keeps querying the status of the I/O device until the peripheral is ready.

- ⑤ One word of data is transferred.

- ⑥ The address and counter parameters are updated.

- ⑦ The CPU checks whether the transfer is finished; if not, it returns to step ③, repeating until the counter reaches 0.
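The seven steps can be sketched as a small Python simulation. This is a minimal sketch under stated assumptions, not real driver code: the `Device` class and its `status_ready`/`read_data` methods are hypothetical stand-ins for the status port and data port, and the device's slowness is modeled as a fixed number of polls before each word becomes ready. The `wasted_polls` count makes the cost of busy-waiting visible.

```python
class Device:
    """Hypothetical peripheral interface with a status port and a data port."""

    def __init__(self, words, latency):
        self._words = list(words)   # data the device delivers, one word at a time
        self._latency = latency     # polls needed before each word is ready
        self._countdown = latency
        self.started = False

    def start(self):
        # Step ②: the command word starts the device.
        self.started = True

    def status_ready(self):
        # Reading the status port; each poll brings the device closer to ready.
        if not self.started or not self._words:
            return False
        if self._countdown > 0:
            self._countdown -= 1
            return False
        return True

    def read_data(self):
        # Reading the data port hands over one word and re-arms the latency.
        self._countdown = self._latency
        return self._words.pop(0)


def programmed_io_read(device, memory, start_addr, count):
    """Program query transfer of `count` words into `memory` (assumes the device has them)."""
    addr, remaining = start_addr, count    # step ①: preset transfer parameters
    device.start()                         # step ②: start the device
    wasted_polls = 0
    while remaining > 0:                   # step ⑦: repeat until the counter is 0
        while not device.status_ready():   # steps ③-④: busy-wait on the status port
            wasted_polls += 1
        memory[addr] = device.read_data()  # step ⑤: transfer one word
        addr += 1                          # step ⑥: update address and counter
        remaining -= 1
    return wasted_polls
```

With `latency=5`, every word costs five fruitless status reads, so reading three words reports 15 wasted polls; real devices are much slower relative to the CPU, so the real waste is far larger.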

As shown in the figure, for every word the computer reads from an external device, the CPU must repeatedly check the peripheral's status until it confirms that the word is in the I/O controller's data register. Because the CPU is fast and I/O devices are slow, under direct program control the CPU spends most of its time in this test loop waiting for the device to finish, which wastes a great deal of CPU time. The CPU has to keep testing the device's status because no interrupt mechanism is used: the I/O device has no way to tell the CPU that it has finished inputting a character.

In this control mode, once the CPU starts an I/O operation, it must stop running the current program and insert an I/O routine into it. The main feature of the program query method is that the CPU "marks time" while it waits, and the CPU and I/O work serially. The interface design is simple and needs little hardware, but the CPU spends a great deal of time querying and waiting during the transfer and can exchange information with only one peripheral at a time, which greatly reduces efficiency.

Personal understanding: under direct program control, the CPU can do only one thing at a time and must intervene constantly; it seems that nothing can be done without the CPU. Each transfer moves just one word, which is another reason the method is inefficient and time-consuming. On the other hand, the logic is very simple, so the interface design is simple and easy to implement.

3. Program interruption mode

Also called interrupt-driven I/O.

1. Program interruption

Program interruption means that while the computer is executing the current program, an abnormal condition or special request that needs urgent handling occurs. The CPU temporarily suspends the current program, handles the condition or request, and then returns to the breakpoint of the current program and continues executing it.

Early interrupt techniques were used to handle data transfers. As computers developed, interrupt technology took on new functions. Its main functions are:

- ① Enabling the CPU and I/O devices to work in parallel.

- ② Handling hardware failures and software errors.

- ③ Supporting human-computer interaction: users rely on the interrupt system to intervene in the machine.

- ④ Supporting multiprogramming and time-sharing: switching between programs relies on the interrupt system.

- ⑤ Supporting real-time processing, which needs the interrupt system for rapid response.

- ⑥ Switching between application programs and the operating system (supervisor), which is called a "soft interrupt".

- ⑦ Supporting information exchange and task switching between processors in a multiprocessor system.

The program interrupt method implements the first of these functions. Its idea is as follows: the CPU's program starts a peripheral at some point and then keeps executing the current program, instead of waiting for the peripheral to become ready as in the query method. Once the peripheral has finished preparing for the data transfer, it actively sends an interrupt request to the CPU, asking to be served. If it is able to respond, the CPU temporarily suspends the program it is executing and runs the interrupt service routine to serve the peripheral. The service routine performs one data transfer between the host and the peripheral, after which the CPU returns to the original program.

From the I/O controller's point of view, the controller receives a read command from the CPU and then reads data from the external device. Once the data is in the controller's data register, it sends an interrupt signal to the CPU over a control line to indicate that the data is ready, and then waits for the CPU to request the data. When the I/O controller receives the CPU's data-fetch request, it places the data on the data bus and transfers it to a CPU register. This completes the I/O operation, and the controller can start the next one.

From the CPU's point of view, the CPU issues a read command, saves the context of the currently running program (the scene, including the program counter and processor registers), and then runs other programs. At the end of each instruction cycle, the CPU checks for interrupts. When an interrupt arrives from the I/O controller, the CPU saves the context of the currently running program and executes the interrupt handler. The handler reads one word of data from the I/O controller into a register and stores it in main memory. The CPU then restores the context of the program that issued the I/O command (or of some other program) and continues running it.

In this method, data is still transferred between main memory and the peripheral (external storage such as a disk can also be treated as a peripheral) one word at a time, but the CPU no longer has to wait for the peripheral to finish; it can run other programs in the meantime. After the peripheral transfers a word, it sends an interrupt request to the CPU, which thereby learns that the word has arrived and goes back to process it. The CPU then issues the next read command and returns to other work, repeating this cycle. Here the CPU and I/O devices work in parallel, which saves CPU time compared with direct program control and improves efficiency significantly. However, because every word transferred between memory and the I/O controller must pass through the CPU, the interrupt-driven method still consumes a considerable amount of CPU time.
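Below is a hypothetical Python sketch of this exchange, assuming a single I/O controller whose word becomes ready a fixed number of instruction cycles after each read command. The CPU keeps running an unrelated program (its progress counted in `acc`), checks `interrupt_pending` only at the end of each instruction, and the handler saves and restores the context around its one-word transfer. All class and attribute names are illustrative.

```python
class IOController:
    """Hypothetical controller: a word is ready `latency` instruction cycles after a read command."""

    def __init__(self, words, latency):
        self.words = list(words)
        self.latency = latency
        self.data_register = None
        self.interrupt_pending = False
        self._timer = 0

    def read_command(self):
        self._timer = self.latency

    def tick(self):
        # The controller works in parallel with the CPU; one instruction cycle passes.
        if self._timer > 0:
            self._timer -= 1
            if self._timer == 0:
                self.data_register = self.words.pop(0)
                self.interrupt_pending = True  # raise the interrupt line

    def fetch(self):
        # The CPU's data-fetch request: the word goes onto the data bus.
        self.interrupt_pending = False
        word, self.data_register = self.data_register, None
        return word


def run_interrupt_driven(ctrl, count, max_cycles=1000):
    memory = []
    context = {"pc": 0, "acc": 0}     # registers of the unrelated program being run
    ctrl.read_command()
    for _ in range(max_cycles):
        context["pc"] += 1            # execute one instruction of the other program
        context["acc"] += 1
        ctrl.tick()
        if ctrl.interrupt_pending:    # end of instruction cycle: check for interrupts
            saved = dict(context)     # save the context
            context["acc"] = ctrl.fetch()   # handler: controller -> CPU register
            memory.append(context["acc"])   # register -> main memory
            context = saved           # restore the context
            if len(memory) == count:
                break
            ctrl.read_command()       # issue the next read, go back to other work
    return memory, context["acc"]
```

With three words and `latency=4`, the other program completes 12 instructions while the data arrives, cycles the polling version would have spent spinning. Every word still passes through a CPU register, which is the remaining cost described above.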

That covers the program interrupt method itself. Since interrupts have come up, though, let's take this opportunity to learn how program interruption works in detail.

2. The work process of program interruption

(1) Interrupt request

Interrupt sources are the devices or events that request interrupts from the CPU, and a computer can have multiple interrupt sources. Each source sends its interrupt request at a random time. To record interrupt events and distinguish between sources, the interrupt system provides an interrupt request flag flip-flop for each source; a state of "1" means that source has a pending request. Together these flip-flops form the interrupt request flag register, which can be centralized in the CPU or distributed among the interrupt sources.

Maskable interrupts are signaled on the INTR line, and non-maskable interrupts on the NMI line. Maskable interrupts have the lowest priority and are not responded to while interrupts are disabled. Non-maskable interrupts handle urgent and important events such as clock interrupts and power failures and have the highest priority, followed by internal exceptions; both are responded to even while interrupts are disabled. (This involves bus concepts from computer organization; I will also write a separate blog post about the bus.)

(2) Interrupt response arbitration

Interrupt response priority is the order in which the CPU responds to interrupt requests. Because interrupt sources raise requests at random times, when several sources request at the same moment, interrupt arbitration logic must decide which one to respond to. Response arbitration is usually implemented in hardware by a priority queuing circuit.

Generally speaking: ① non-maskable interrupts > internal exceptions > maskable interrupts; ② among internal exceptions, hardware faults > software interrupts; ③ DMA interrupt requests take priority over interrupt requests for I/O transfers; ④ among I/O transfer interrupt requests, high-speed devices take priority over low-speed devices, input devices over output devices, and real-time devices over ordinary devices.

To explain point ③: an "interrupt request for an I/O transfer" here means an interrupt issued in program interrupt mode, while a DMA interrupt request is one generated by the third I/O control method, DMA, which is covered shortly.
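A hardware priority queuing circuit behaves like a priority encoder over the request flag register. The Python sketch below models that under assumptions: the source names are hypothetical, bit i of the request register belongs to `PRIORITY[i]`, and combining rule ④'s criteria into one fixed order (speed first, then input before output) is an illustrative choice, not something the rules above fix.

```python
# Hypothetical sources, highest priority first, following rules ①-④ above.
PRIORITY = [
    "nmi",             # non-maskable interrupt
    "hw_fault",        # internal exception: hardware fault
    "sw_interrupt",    # internal exception: software interrupt
    "dma",             # DMA request
    "io_fast_input",
    "io_fast_output",
    "io_slow_input",
    "io_slow_output",
]


def request_bits(*sources):
    """Build a request flag register with the given sources' flags set to 1."""
    return sum(1 << PRIORITY.index(s) for s in set(sources))


def arbitrate(request_register):
    """Return the highest-priority pending source, like a priority encoder."""
    for bit, source in enumerate(PRIORITY):
        if request_register & (1 << bit):
            return source
    return None  # no pending request
```

For example, `arbitrate(request_bits("io_slow_input", "dma"))` selects `"dma"`, reflecting rule ③.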

(3) Conditions for the CPU to respond to interrupts

The CPU responds to an interrupt request from an interrupt source only under certain conditions and, after some specific operations, switches to executing the interrupt service routine. To respond to an interrupt, the CPU must meet the following three conditions:

- ① The interrupt source has issued an interrupt request.

- ② The CPU permits interrupts, i.e., interrupts are enabled (exceptions and non-maskable interrupts are not subject to this restriction).

- ③ The current instruction has finished executing (exceptions are not subject to this restriction), and there is no more urgent task.
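The three conditions can be written as one predicate. This is an illustrative sketch: the `kind` labels are hypothetical, and it encodes only what the list states, namely that exceptions and non-maskable interrupts ignore the interrupt-enable flag, and that only exceptions may be taken before the current instruction finishes.

```python
def cpu_responds(request_pending, kind, interrupts_enabled,
                 instruction_finished, more_urgent_task=False):
    """kind is one of the hypothetical labels "maskable", "nmi", "exception"."""
    if not request_pending:                               # condition ①
        return False
    if kind == "maskable" and not interrupts_enabled:     # condition ②
        return False
    if kind != "exception" and not instruction_finished:  # condition ③
        return False
    return not more_urgent_task
```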

Note: I/O devices become ready at random times, but the CPU sends an interrupt query signal to the interfaces at a fixed moment, namely at the end of each instruction's execution phase, to collect I/O interrupt requests. In other words, the CPU responds to interrupts at the end of each instruction's execution phase. This applies only to I/O interrupts; internal exceptions are not handled this way. (This part is easy to understand if you have studied instruction systems. There are really two kinds of interrupts: interrupts arising during program execution can be understood as internal interrupts, also called exceptions, while I/O interrupts are external interrupts.)

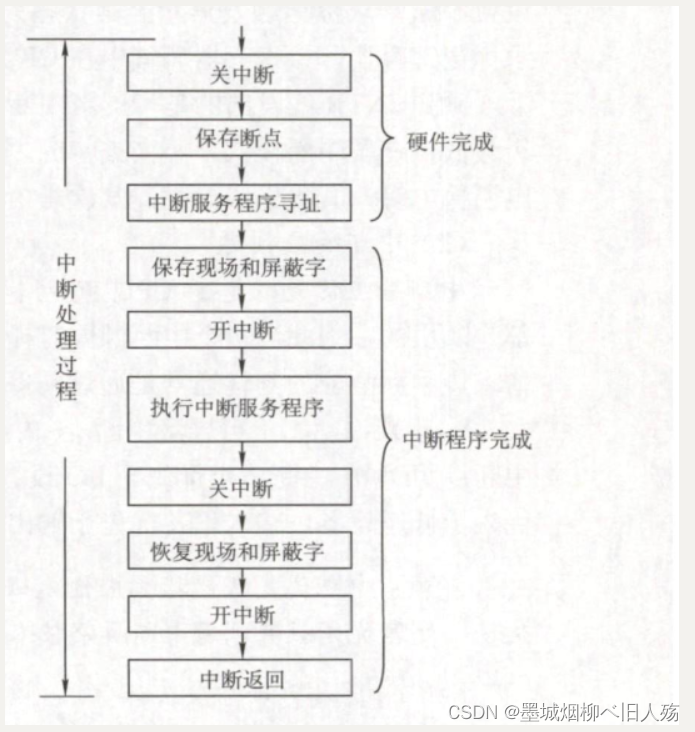

(4) Interrupt response process

After responding to an interrupt, the CPU performs a series of operations before executing the interrupt service routine. These operations are carried out directly by hardware and are called the interrupt implicit instruction. It is not a real instruction in the instruction set but a notional one: in essence, a sequence of automatic hardware operations. It does the following:

- ① Disable interrupts. After responding to an interrupt, the CPU must first save the program's breakpoint and scene. While doing so, it must not respond to interrupt requests from higher-priority sources; otherwise, if the breakpoint or scene were saved incompletely, the current program could not be correctly restored and resumed after the interrupt service routine ends.

- ② Save the breakpoint. To guarantee a correct return to the original program after the interrupt service routine, the original program's breakpoint (the contents of the PC and PSW, which instructions cannot read directly) must be saved on the stack or in a specific register. Note the difference between exceptions and interrupts: the instruction that causes an exception usually has not completed successfully and must be re-executed after the exception is handled, so the breakpoint is the address of the current instruction. For an interrupt, the breakpoint is the address of the next instruction.

- ③ Transfer to the interrupt service routine. Identify the interrupt source and load the entry address of the corresponding service routine into the program counter (PC). There are two ways to identify interrupt sources: the hardware vector method and the software query method. This section mainly discusses the more commonly used vectored interrupts.
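The three hardware steps of the implicit instruction, including the breakpoint difference from step ②, can be sketched as follows. Assumptions: `CPU` is a hypothetical register model whose `pc` holds the address of the instruction that just finished (interrupt) or faulted (exception), instructions have a fixed length `instr_len`, the breakpoint goes onto a stack, and the vector table is a plain dictionary from type number to entry address.

```python
class CPU:
    """Hypothetical register model for illustrating the implicit instruction."""

    def __init__(self, pc, psw):
        self.pc = pc          # address of the instruction just finished or faulted
        self.psw = psw        # program status word
        self.interrupts_enabled = True
        self.stack = []


def implicit_instruction(cpu, vector_table, type_number, is_exception, instr_len=4):
    # ① Disable interrupts so saving the breakpoint cannot itself be interrupted.
    cpu.interrupts_enabled = False
    # ② Save the breakpoint: an exception re-executes the faulting instruction,
    #    an interrupt resumes at the next one.
    breakpoint_pc = cpu.pc if is_exception else cpu.pc + instr_len
    cpu.stack.append((breakpoint_pc, cpu.psw))
    # ③ Enter the service routine: load its entry address into the PC.
    cpu.pc = vector_table[type_number]
```

General registers (the rest of the scene) are deliberately not saved here, since that part is done in software by the service routine.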

(5) Interrupt recognition

There are two ways to identify exceptions and interrupt sources: software identification and hardware identification. Exceptions and interrupts are usually identified differently: most exceptions are identified by software, while interrupts can be identified by either software or hardware.

In software identification, the CPU provides an exception status register that records the cause of the exception. The operating system uses a unified exception/interrupt query program that checks the status register in priority order to detect the type of exception or interrupt; the first one found is handled first, by transferring to the corresponding handler in the kernel. (That is, interrupts are ranked by priority first; among equal priorities, the order of occurrence decides, and the handler for the selected interrupt is executed.)

Hardware identification is also called vectored interrupts. The entry address of an exception or interrupt handler is called an interrupt vector, and all interrupt vectors are stored in the interrupt vector table. Each exception or interrupt is assigned an interrupt type number, which corresponds one-to-one with an interrupt vector in the table, so the handler can be found quickly from the type number.
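The two identification methods can be contrasted in a short Python sketch. The handler names, bit assignments, and entry addresses are all hypothetical. The software query scans the status register in priority order, so its cost grows with the number of sources; the vectored lookup is a single table index by type number.

```python
def software_query(status_register, handlers_by_priority):
    """Software identification: scan status bits in priority order.

    handlers_by_priority is a list of (bit, handler) pairs, highest priority first.
    """
    for bit, handler in handlers_by_priority:
        if status_register & (1 << bit):
            return handler  # the first one found is handled first
    return None


def vectored(type_number, vector_table):
    """Hardware identification: the type number indexes the vector table."""
    return vector_table[type_number]
```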

(6) Interrupt processing diagram

Note: the scene and the breakpoint must not be corrupted by the interrupt service routine. Because scene information can be accessed directly by instructions, it is usually saved to the stack by instructions in the interrupt service routine, i.e., in software. The breakpoint information is saved automatically by the CPU to the stack or a designated register when it responds to the interrupt, i.e., in hardware.

Interrupt mask word:

The interrupt mask word, held in the Interrupt Mask Register (IMR), is a bit string that controls which interrupt signals the CPU may respond to. By setting the mask word, you can disable some interrupts, or allow certain interrupts to be responded to only under specific conditions.

While the CPU is executing an interrupt service routine, the mask word can block other interrupt signals so that they do not interfere with it. The mask word can also be changed dynamically as needed, allowing flexible interrupt control.

3. Multiple interrupts and interrupt masking technology

If a new, higher-priority interrupt request arrives while the CPU is executing an interrupt service routine, and the CPU does not respond to it, this is called a single interrupt. If the CPU suspends the current interrupt service routine to handle the new request, this is called multiple interrupts, also known as interrupt nesting.

For the CPU to support multiple interrupts, two conditions must be met: ① an enable-interrupts instruction is executed early in the interrupt service routine; ② a higher-priority interrupt source has the right to interrupt a lower-priority one.

Interrupt processing priority is the order in which multiple interrupts are actually processed. It can be adjusted dynamically with interrupt masking, which makes interrupt handling more flexible. Without interrupt masking, processing priority is the same as response priority. Modern computers generally use interrupt masking: each interrupt source has a mask flip-flop, where 1 means the source's requests are masked and 0 means they are accepted normally. Together the mask flip-flops form the mask word register, and its contents are called the mask word.
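Mask words are easiest to follow with a worked example. Assume four sources A, B, C, D with response priority A > B > C > D (which fixes the bit order, leftmost = A), and suppose the desired processing priority is A > D > C > B. Each source's mask word has a 1 for itself and for every source of lower processing priority, and a 0 for sources allowed to nest inside it. The Python sketch below (function names hypothetical) derives the mask words and checks which nestings are allowed.

```python
def build_mask_words(response_order, processing_order):
    """Mask word per source: 1 masks a source, 0 lets it interrupt."""
    rank = {src: i for i, src in enumerate(processing_order)}  # 0 = highest
    return {
        src: "".join("1" if rank[other] >= rank[src] else "0"
                     for other in response_order)
        for src in response_order
    }


def can_nest(current, new, mask_words, response_order):
    """True if `new` may interrupt the service routine of `current`."""
    return mask_words[current][response_order.index(new)] == "0"


masks = build_mask_words("ABCD", "ADCB")
```

Here `masks` comes out as A = 1111, B = 0100, C = 0110, D = 0111, so D can interrupt the service routines of B and C even though B and C win response arbitration over D.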

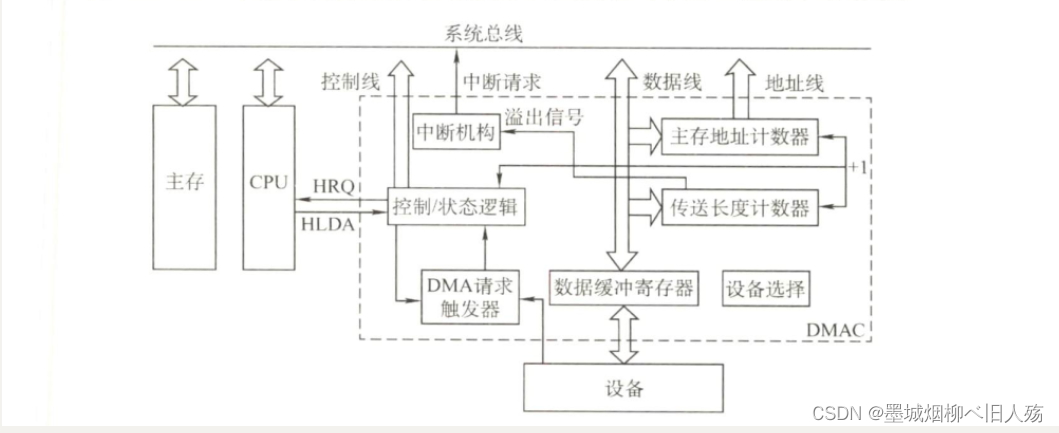

4. DMA mode

1. DMA

In interrupt-driven mode, data exchanged between an I/O device and memory must pass through registers in the CPU, so the speed is still limited. The basic idea of the DMA (direct memory access) method is to open up a direct data path between the I/O device and memory, completely "freeing" the CPU. The DMA method has the following characteristics:

- 1) The basic unit of transfer is the data block.

- 2) Data is sent directly from the device to memory, or vice versa.

- 3) The CPU intervenes only at the beginning and end of transferring one or more data blocks; the entire block is transferred under the control of the DMA controller.

The DMA method is a control method in which blocks of data are transferred entirely by hardware. It keeps the advantage of program interrupts: during data preparation, the CPU and peripheral work in parallel. DMA opens a "direct data channel" between the peripheral and memory, so data no longer passes through the CPU, which reduces the CPU's overhead during transfers; hence the name direct memory access. Because the data does not pass through the CPU, there is no need for cumbersome operations such as saving and restoring the CPU's scene.

This method suits high-volume transfers for high-speed devices such as disks, graphics cards, sound cards, and network cards, though its hardware cost is relatively high. In DMA mode, interrupts are used only to handle faults and the normal end of a transfer.

During a DMA transfer, the DMA controller takes over the CPU's address bus, data bus, and control bus, and the CPU's main-memory control signals are disabled. When the DMA transfer ends, the CPU regains all of these and resumes its operations. Hence the DMA controller must be able to control the system bus.

2. DMA transfer method

When information is exchanged between main memory and an I/O device, it does not pass through the CPU, and the CPU cannot control main memory at that time. However, conflicts may occur when the I/O device and the CPU access main memory at the same time. To use main memory effectively, the DMA controller and the CPU usually share it in one of the following three ways:

- 1) Stopping CPU memory access. When an I/O device raises a DMA request, the DMA controller sends a stop signal to the CPU, which releases the bus and stops accessing main memory until the DMA has transferred a block of data. After the transfer, the DMA controller notifies the CPU that main memory is available again and returns bus control to the CPU.

- 2) Cycle stealing. When an I/O device raises a DMA request, there are three cases: ① the CPU is not accessing memory at that moment (for example, it is executing a multiply instruction), so the I/O request does not conflict with the CPU; ② the CPU is accessing memory, so the I/O device waits until the CPU's current access cycle ends, and then the CPU gives up the bus; ③ the I/O device and the CPU request memory access at the same time, causing a conflict, and the CPU must temporarily give up the bus. I/O memory access has higher priority than CPU access because an I/O device may lose data if it cannot access memory immediately. In this scheme, the I/O device steals one or a few access cycles and releases the bus immediately after transferring one data item; it is a single-word transfer method.

- 3) Alternating access by DMA and CPU. This suits cases where the CPU's working cycle is longer than the main-memory access cycle. For example, if the CPU cycle is 1.2 μs and the memory access cycle is less than 0.6 μs, each CPU cycle can be divided into two sub-cycles, C1 and C2, with C1 dedicated to DMA access and C2 to CPU access. This method needs no requesting, granting, or returning of bus ownership; bus use is time-shared between C1 and C2.
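The sharing rules can be sketched in Python as two small arbitration functions. Both are illustrative models with hypothetical names: `bus_owner` decides one memory cycle under cycle stealing (assuming the CPU's in-progress access always completes first, as in case ②), and `alternating_owner` implements the C1/C2 split of method 3 using the 1.2 μs example, with time in integer nanoseconds to avoid floating-point edge cases.

```python
def bus_owner(cpu_wants, dma_wants, cpu_mid_access):
    """Who gets main memory for the next memory cycle under cycle stealing."""
    if cpu_mid_access:
        return "cpu"   # case ②: the CPU's current access finishes; DMA waits
    if dma_wants:
        return "dma"   # cases ① and ③: DMA takes (steals) the cycle
    return "cpu" if cpu_wants else None


def alternating_owner(t_ns, cpu_cycle_ns=1200):
    """Method 3: C1 (first half of each CPU cycle) for DMA, C2 for the CPU."""
    phase = t_ns % cpu_cycle_ns
    return "dma" if phase < cpu_cycle_ns // 2 else "cpu"
```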

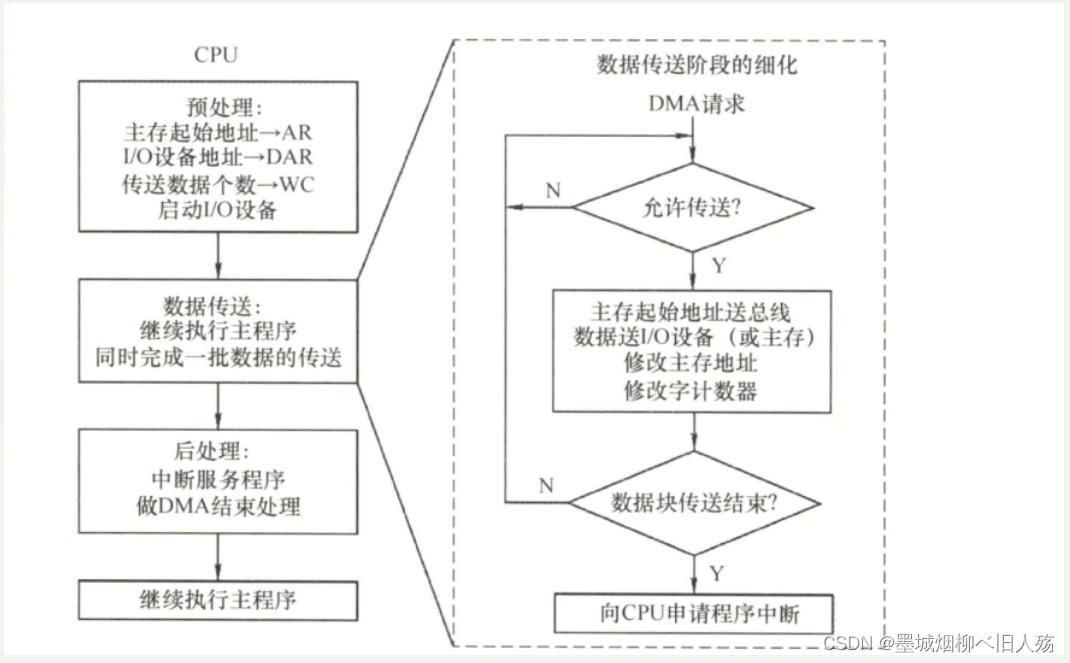

3. DMA process

A DMA transfer proceeds in three stages: preprocessing, data transfer, and postprocessing:

- 1) Preprocessing. The CPU does the necessary preparation. First, it executes several I/O instructions to test the state of the I/O device, initialize the relevant registers in the DMA controller, set the transfer direction, start the device, and so on. The CPU then continues executing the original program until the I/O device is ready to send data (input) or receive data (output). At that point the I/O device sends a DMA request to the DMA controller, and the DMA controller sends a bus request to the CPU (these two steps are sometimes collectively called the DMA request) so that the data can be transferred.

- 2) Data transfer. DMA can transfer data using a single byte (or word) as the basic unit, or using a data block. For block transfers (e.g., hard disks), the input and output operations after the DMA controller gains the bus are all carried out in a loop. Note that this loop is also run by the DMA controller rather than by the CPU executing a program; that is, the transfer phase is controlled entirely by DMA hardware.

- 3) Postprocessing. The DMA controller sends an interrupt request to the CPU, and the CPU runs an interrupt service routine to finish the DMA processing: checking whether the data sent to main memory is correct, testing whether any error occurred during the transfer (transferring to a diagnostic program if so), deciding whether to use DMA to transfer more data, and so on.
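The three stages can be mirrored in a toy Python model. `DMAController`, its registers, and the `"DMA_DONE"` return value standing in for the end-of-block interrupt are hypothetical; the point is that the CPU touches the controller only in `program` (preprocessing) and in the completion check (postprocessing), while the word-by-word loop in `transfer` belongs to the controller.

```python
class DMAController:
    """Hypothetical DMA controller with the registers the CPU sets up."""

    def __init__(self):
        self.mem_addr = 0       # main-memory address register
        self.word_count = 0     # remaining word counter
        self.to_memory = True   # direction: device -> memory (input)

    def program(self, mem_addr, word_count, to_memory=True):
        # 1) Preprocessing: done by the CPU through I/O instructions.
        self.mem_addr, self.word_count, self.to_memory = mem_addr, word_count, to_memory

    def transfer(self, device_buffer, memory):
        # 2) Data transfer: this loop runs in the controller, not as CPU code.
        while self.word_count > 0:
            if self.to_memory:
                memory[self.mem_addr] = device_buffer.pop(0)
            else:
                device_buffer.append(memory[self.mem_addr])
            self.mem_addr += 1
            self.word_count -= 1
        return "DMA_DONE"       # stands in for the end-of-block interrupt


def dma_done_isr(memory, start, expected):
    # 3) Postprocessing: the CPU's service routine checks the result.
    return memory[start:start + len(expected)] == expected
```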

4. Differences between DMA and interrupt-driven mode

- ① Interrupt mode switches between programs, so the scene must be saved and restored; DMA does not interrupt the current program, needs no scene saving, and uses no CPU resources apart from preprocessing and postprocessing.

- ② An interrupt request can be responded to only at the end of each instruction (after the execute cycle), whereas a DMA request can be responded to at the end of any machine cycle (after the fetch, indirect, or execute cycle).

- ③ Interrupt transfers require CPU intervention; DMA transfers do not, so the transfer rate is very high, making DMA suitable for block transfers by high-speed peripherals.

- ④ DMA requests have higher priority than interrupt requests.

- ⑤ The interrupt method can handle abnormal events, whereas DMA is limited to transferring large amounts of data.

- ⑥ In terms of data transfer, the interrupt method transfers data under program control, whereas DMA transfers data in hardware.
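Point ② can be made concrete with a small Python sketch. For illustration only, it assumes every instruction passes through fetch, indirect, and execute machine cycles in that order; the function names are hypothetical. It counts how many machine-cycle boundaries pass before a request that arrives during a given cycle can be accepted.

```python
MACHINE_CYCLES = ["fetch", "indirect", "execute"]  # assumed instruction cycle


def response_points(kind):
    """Machine cycles after which a request of this kind may be accepted."""
    if kind == "dma":
        return set(MACHINE_CYCLES)   # after any machine cycle
    if kind == "interrupt":
        return {"execute"}           # only after the whole instruction
    raise ValueError("unknown request kind: " + kind)


def boundaries_to_wait(arrived_during, kind):
    """Number of machine-cycle ends until the request is accepted."""
    points = response_points(kind)
    start = MACHINE_CYCLES.index(arrived_during)
    for n, cycle in enumerate(MACHINE_CYCLES[start:], start=1):
        if cycle in points:
            return n
    return None  # not reachable with the point sets above
```

A request arriving during the fetch cycle waits one boundary as a DMA request but three as an interrupt request, which is one reason DMA suits fast devices.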

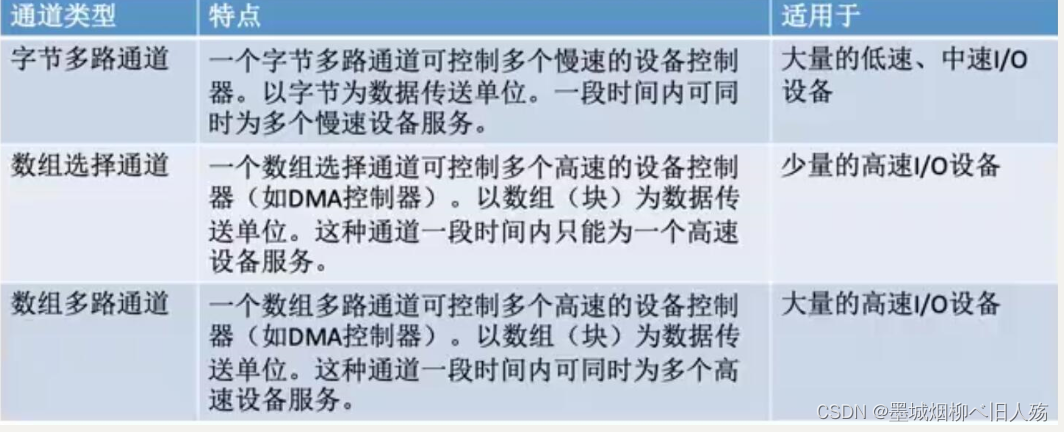

5. Channel control method

An I/O channel is a processor dedicated to input/output. The I/O channel method is a development of DMA that further reduces CPU intervention: the CPU no longer intervenes for every data block read (or written), but only for control and management at the level of a whole group of data blocks. It also lets the CPU, channels, and I/O devices operate in parallel, using the whole system's resources more effectively.

For example, when the CPU wants to complete a group of related read (or write) operations and the associated control, it only needs to issue one I/O instruction to the I/O channel, giving the starting address of the channel program to execute and the I/O device to be accessed. On receiving this instruction, the channel executes the channel program to carry out the I/O task specified by the CPU and sends an interrupt request to the CPU when the data transfer ends. An I/O channel differs from a general-purpose processor in that it has only a limited set of instruction types and no memory of its own: the channel program it runs is stored in the host's main memory, so the channel shares memory with the CPU.
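A channel program can be pictured as a list of simple commands sitting in main memory. The Python sketch below is a hypothetical model: the command format `(op, device, address, count)`, the `READ`/`WRITE`/`HALT` operations, and the `"CHANNEL_END"` value standing in for the final interrupt are illustrative, not a real channel instruction set. The CPU only hands over `program_addr`; one channel then serves several devices in turn, and the program lives in the same `memory` the data uses.

```python
def run_channel_program(memory, program_addr, devices):
    """Run channel commands stored in (shared) main memory from program_addr."""
    pc = program_addr
    while True:
        op, dev, addr, count = memory[pc]
        if op == "READ":              # device -> memory
            for i in range(count):
                memory[addr + i] = devices[dev].pop(0)
        elif op == "WRITE":           # memory -> device
            devices[dev].extend(memory[addr + i] for i in range(count))
        elif op == "HALT":
            return "CHANNEL_END"      # interrupt the CPU once, at the very end
        else:
            raise ValueError("unknown channel command: " + str(op))
        pc += 1
```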

The I/O channel differs from DMA in that, with DMA, the CPU must control the size of the data block being transferred and the memory location it goes to, whereas with the channel method this information is controlled by the channel. In addition, each DMA controller handles transfers between one device and memory, while one channel can control data exchange between multiple devices and memory.

It is a hardware technique; you can think of it as a weak, stripped-down version of the CPU.
