While following the Dark Horse 2023 big data tutorial, I first completed the following configuration according to the videos (required):

[Dark Horse 2023 Big Data Practical Tutorial] Big Data Cluster Environment Preparation Process Record (3 virtual machines)

[Dark Horse 2023 Big Data Practical Tutorial] Detailed Process of Deploying an HDFS Cluster on VMware Virtual Machines

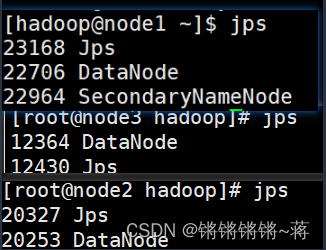

Finally, as the hadoop user on node1, I ran start-dfs.sh to start the HDFS cluster with a single command. Running the `jps` command on the three virtual machines gave the following results:

This shows that the cluster deployment basically succeeded, yet the web page would not open when I entered "node1:9870" in the browser.

Explicitly configure the port

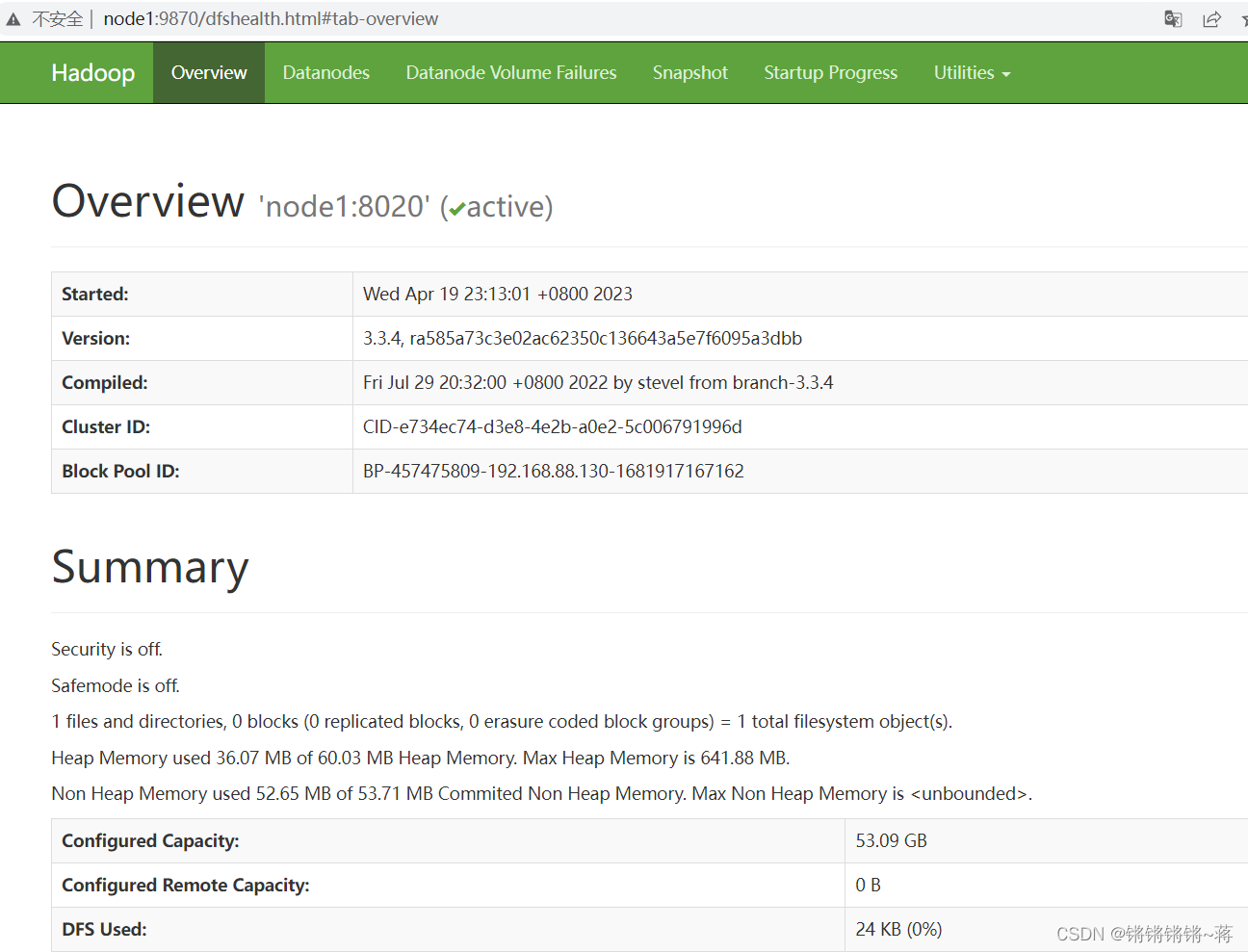

In the end, explicitly configuring the HTTP address in the hdfs-site.xml file made the HDFS web page reachable from the browser:

First change to the directory that contains hdfs-site.xml. I deployed Hadoop according to the tutorial, so:

cd /export/server/hadoop/etc/hadoop

vim hdfs-site.xml

Inside <configuration></configuration>, add:

<property>
    <name>dfs.namenode.http-address</name>
    <value>node1:9870</value>
</property>
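To double-check that the property was saved as intended, the value can be read back from the file. The following is only a minimal sketch: `get_hadoop_property` is a hypothetical helper (not part of Hadoop), and it assumes a simple file with no XML comments around the property. Note also that the NameNode reads hdfs-site.xml when it starts, so the cluster needs a restart (stop-dfs.sh, then start-dfs.sh) before the new address takes effect.

```shell
# get_hadoop_property KEY FILE
# Hypothetical helper: print the <value> paired with <name>KEY</name> in FILE.
get_hadoop_property() {
    # Escape dots so that they match literally in the sed pattern.
    key_re=$(printf '%s' "$1" | sed 's/\./\\./g')
    # Join all lines, then capture the text of the <value> that follows the name.
    tr -d '\n' < "$2" |
        sed -n "s:.*<name>[[:space:]]*${key_re}[[:space:]]*</name>[[:space:]]*<value>\([^<]*\)</value>.*:\1:p"
}

# Example usage on node1 (path taken from the tutorial layout):
# get_hadoop_property dfs.namenode.http-address /export/server/hadoop/etc/hadoop/hdfs-site.xml
```

If the helper prints nothing, the property is probably missing or sits outside the <configuration> element.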

After that, the web page opened successfully:

Other possible causes

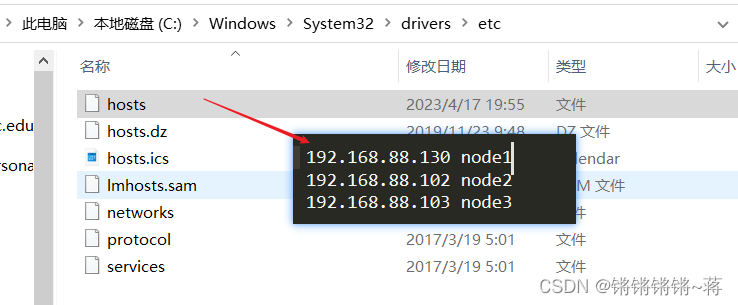

Local hosts file

Note that I can use node1 here because the three virtual machine nodes are mapped in the hosts file of my local computer. A missing mapping is also one of the reasons why the web page may not open.

The file in question is hosts, located in C:\Windows\System32\drivers\etc.
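A quick way to confirm that a hosts file really maps a node name is sketched below. `hosts_has_entry` is a hypothetical helper, and running it against the Windows file assumes a Unix-like shell such as Git Bash or WSL is available on the local computer; on the virtual machines themselves the file would be /etc/hosts.

```shell
# hosts_has_entry NAME FILE
# Hypothetical helper: succeed if FILE maps some IP address to NAME.
hosts_has_entry() {
    awk -v name="$1" '
        { sub(/\r$/, "") }     # tolerate Windows CRLF line endings
        { sub(/#.*/, "") }     # ignore comments
        { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit found ? 0 : 1 }
    ' "$2"
}

# Example usage (the Git Bash style path is an assumption about the local setup):
# hosts_has_entry node1 /c/Windows/System32/drivers/etc/hosts || echo "node1 is not mapped"
```

The first field of each line is the IP address, so only the fields after it are compared with the name.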

Firewall

Another possible reason the web page cannot be opened is that the firewall on the virtual machines has not been turned off. Check the firewall status first:

systemctl status firewalld

If the status is not "inactive (dead)", run the following:

systemctl stop firewalld      # stop the firewall now

systemctl disable firewalld   # do not start the firewall at boot
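To tell a firewall problem apart from a NameNode that is not serving the port at all, it can help to check on node1 whether anything is listening on 9870. This is a rough sketch rather than part of the tutorial: `port_is_listening` is a hypothetical helper that reads the output of `ss -lnt` from standard input.

```shell
# port_is_listening PORT
# Hypothetical helper: read `ss -lnt` output on stdin and succeed if any
# socket in LISTEN state is bound to PORT.
port_is_listening() {
    awk -v port="$1" '
        $1 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit found ? 0 : 1 }
    '
}

# Example usage on node1:
# ss -lnt | port_is_listening 9870 && echo "something is listening on 9870"
```

If the port is listening on node1 but the browser still cannot connect, the firewall or the hosts mapping is the more likely cause.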

Whether the nodes started successfully

Run the jps command to check whether the NameNode and DataNode processes have started.

If the output matches Figure 1, all three nodes have started successfully.

I start the HDFS cluster with a single command: start-dfs.sh
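The jps check can also be scripted. The sketch below assumes the usual jps output of one "<pid> <name>" pair per line; `has_daemon` is a hypothetical helper, not a Hadoop command.

```shell
# has_daemon NAME
# Hypothetical helper: read `jps` output on stdin and succeed if a process
# called exactly NAME is listed.
has_daemon() {
    awk -v name="$1" '$2 == name { found = 1 } END { exit found ? 0 : 1 }'
}

# Example usage on node1, where the NameNode runs in this setup:
# jps | has_daemon NameNode || echo "NameNode is not running"
```

The comparison is exact, so a running SecondaryNameNode is not mistaken for the NameNode.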

If you get a "command not found" error, the PATH environment variable is not configured correctly. In that case you can run the scripts by absolute path:

/export/server/hadoop/sbin/start-dfs.sh

/export/server/hadoop/sbin/stop-dfs.sh
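For reference, the environment variable setup that makes the short command names work usually looks like the sketch below. It assumes Hadoop is installed under /export/server/hadoop as in the tutorial; where the lines are stored (for example /etc/profile or ~/.bashrc) depends on your own setup.

```shell
# Assumption: Hadoop is installed under /export/server/hadoop, as in the tutorial.
export HADOOP_HOME=/export/server/hadoop
# Append bin and sbin so that hdfs, start-dfs.sh and stop-dfs.sh resolve by name.
export PATH="$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin"
```

After adding the lines to a profile file, reload it with `source` or log in again so that the current shell picks up the new PATH.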

If you configured everything step by step according to the video, the other causes above should not occur. In my case the problem was solved by explicitly configuring the port.