The text AI ChatGPT and the painting AI Stable Diffusion arrived like a thunderclap out of a clear sky and suddenly entered the homes of ordinary people.

If we could fast-forward time, people in the future would probably say:

People of that time went through a rapid succession of emotions: from disbelief to trying it out; from results that far exceeded expectations to fear; and finally to a relieved admiration at how fast progress had come. People had thought AI was a lighthouse far in the distance, but in the blink of an eye they discovered that humans were the ones who had fallen behind. Looking at AI again, they were shocked to find it far ahead.

As a developer, I wondered: what would it feel like to let AI do my job? In this article I want to share what I explored over the past two days.

A quick recap

If you have been lucky enough to miss this wave of AI so far, here is a brief recap.

In the screenshot below, for example, a person is talking with an entity that seems all-knowing and all-powerful. This is ChatGPT, which belongs to natural language processing (NLP), a branch of machine learning. This text-chat AI is a very large language model trained with RLHF (reinforcement learning from human feedback).

There are two key ideas here. RLHF brings human judgment into the training process through a reward mechanism, so that the model learns to judge the way humans do. The very large language model, trained on human civilization as recorded in text, is what gives it its apparent wisdom.

If you have recently seen AI artwork popping up all over your WeChat Moments feed, or played with the various text-to-drawing mini-programs, the thing working silently in the background is most likely another AI: Stable Diffusion, a text-to-image model that falls under computer vision (CV), another branch of machine learning.

For example, the picture below was generated by Stable Diffusion from the prompt "a glowing alien mushroom".

Where the idea came from

As it happened, Apple had just improved how Apple silicon runs large Core ML models in iOS 16.2 and macOS 13.1. It published a write-up, shown below, and added optimizations for Stable Diffusion for the first time. With today's chips and the Neural Engine in phones and computers, this kind of inference can finally run on the device itself.

So I wondered: could I write an iOS app that uses Stable Diffusion to let me draw pictures anytime, anywhere, on my iPad and my computer? That way I could turn any wild idea into a picture on the spot, without watching ads, paying monthly fees, or depending on third-party providers.

Out of instinctive laziness, curiosity about the future, and a wish to test whether AI can replace humans, I decided to get the ball rolling by letting ChatGPT write this program for me. My plan was for the app to have a text box for entering a description; a countdown timer showing generation progress; a table showing how long different devices take to generate a picture; and a button for exporting and sharing pictures.

Dreaming a bit further, the app could have some animations, and it would be great if I could freely switch the picture's style after it is drawn. By switching style I don't mean simply applying a filter, but re-rendering the picture in a style I choose, such as line drawing or a particular brushstroke style. I also wanted it to run on the Mac, so that the tool would always be at hand.

With these ideas in mind, ChatGPT and I set to work on a Stable Diffusion painting app.

The work, and how it felt

The picture below shows the painting app that ChatGPT and I wrote in two days, implementing all of the ideas above. In it, I typed a sentence describing a stuffed creature into the text box and got a drawing back (much like how the app itself was made: I described it to the AI and got the app in return).

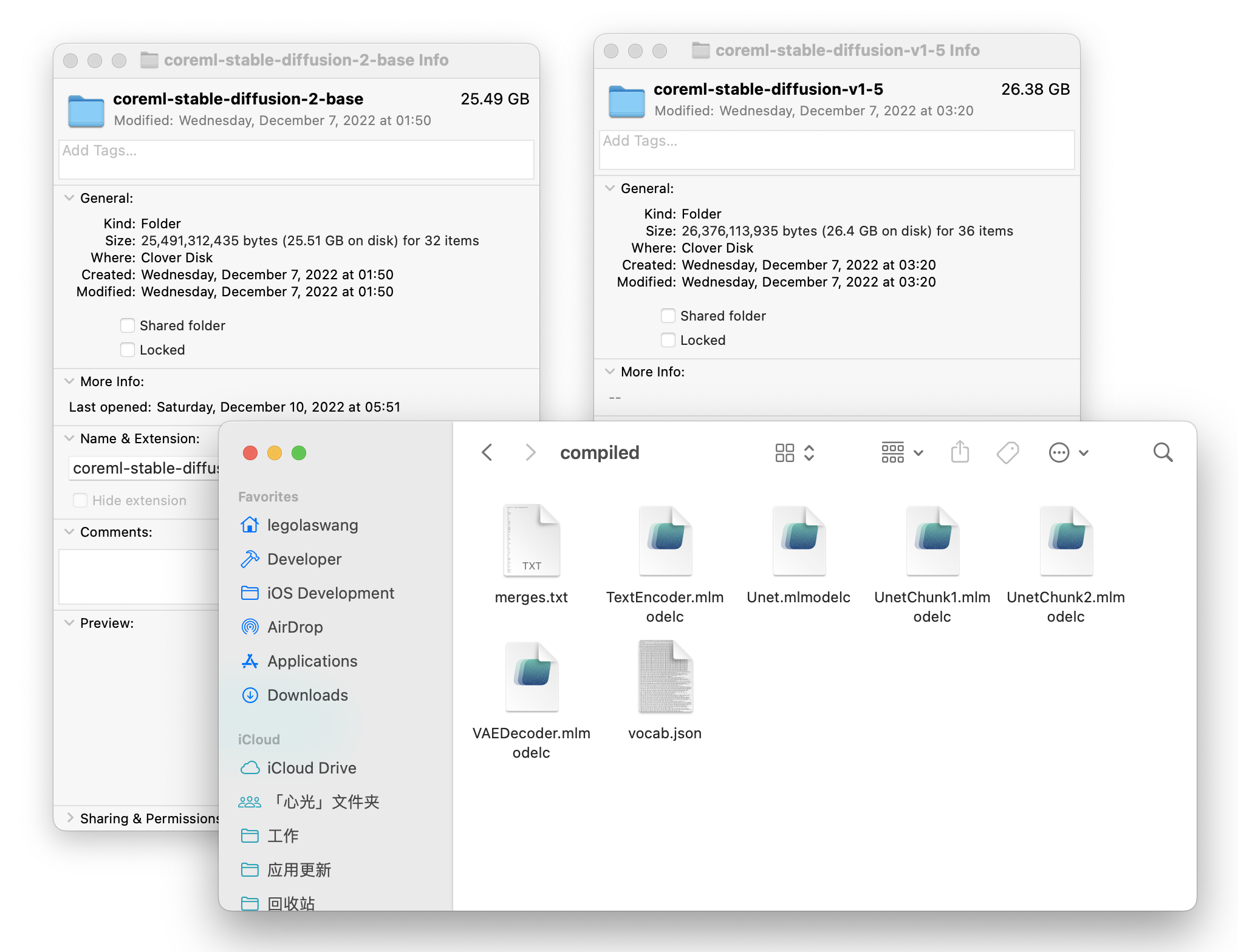

Rather than rehash the technical details, let's look at how those two days went and what role AI played. To get started, you first need the Stable Diffusion machine learning model. Downloading it should have been simple, but because GitHub had been flagged as a fraudulent website, the process was much more cumbersome.

While sorting out the download, I ran into problems such as difficulty installing Homebrew on an M-series Mac and major pitfalls with `git lfs clone`. At first I turned to search engines, but I soon found that for these fairly niche questions, the answers from search engines and Q&A sites were often irrelevant. ChatGPT, by contrast, gave a targeted, practical solution to every difficulty I hit.

What amazed me is that whenever I got stuck, or a method ChatGPT proposed didn't work, I could simply keep asking follow-up questions. Through this continuous back-and-forth with ChatGPT, I completed the model download shown in the figure below.

With the model in hand, I needed some explanatory text and a table listing the different devices, as in the left half of the picture below. I also needed a row of horizontally arranged controls, as on the right side of the picture: a paint button, a style-switching button, a share button, and so on.

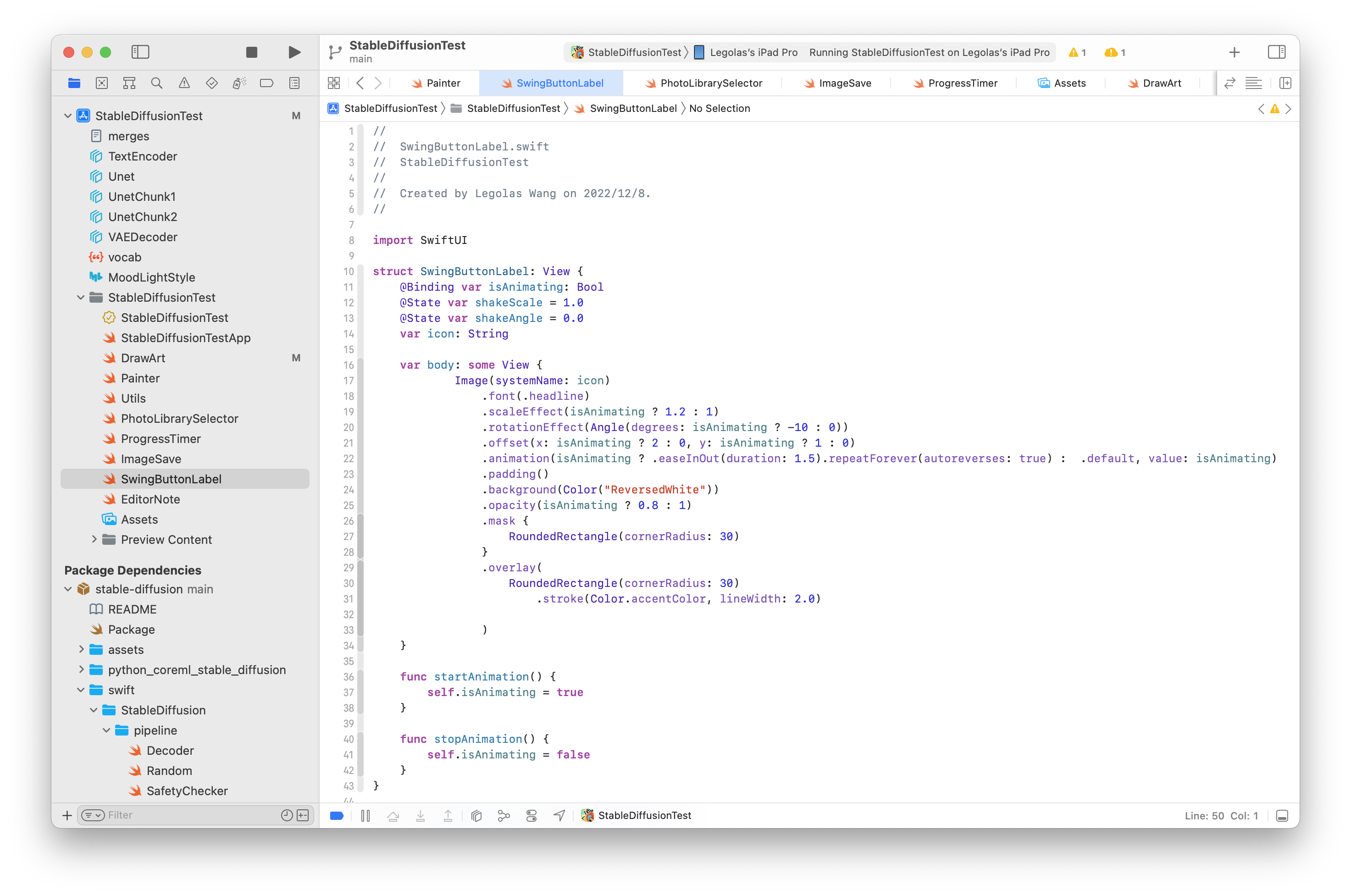

I could have written it myself in SwiftUI, but following my basic principle of never doing what ChatGPT can do, I handed the UI over to it. My job was to describe the picture I had in mind to ChatGPT as precisely as possible, as with the paint button in the code below.

I described it to ChatGPT in English, roughly as: "I want an animated button that supports two functions, one to start the animation and one to stop it. The animation should be a zoom. Choose the names yourself." I then received the following complete SwiftUI code. All I had to do was copy it into Xcode and pick a suitable icon, and the UI was essentially done.
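The snippet below is not the code ChatGPT returned; it is a minimal sketch, assuming SwiftUI on iOS 15 or later, of how such a start/stop zoom button could be built. The names `PaintButtonState` and `AnimatedPaintButton`, the 1.2 zoom factor, and the 0.6-second timing are hypothetical choices. The start/stop state lives in a plain struct so the logic can be checked without a UI, and the view (compiled only where SwiftUI exists) drives a repeating zoom with `scaleEffect` and `animation(_:value:)`.

```swift
#if canImport(SwiftUI)
import SwiftUI
#endif

/// Hypothetical state for the paint button: one call starts the zoom
/// animation, the other stops it.
struct PaintButtonState {
    private(set) var isAnimating = false

    /// Scale factor the icon should be drawn at; 1.0 when idle.
    var scale: Double { isAnimating ? 1.2 : 1.0 }

    mutating func startAnimating() { isAnimating = true }
    mutating func stopAnimating() { isAnimating = false }
}

#if canImport(SwiftUI)
/// A minimal sketch of a button whose icon pulses (zooms in and out)
/// while `state.isAnimating` is true.
struct AnimatedPaintButton: View {
    let systemImage: String          // an SF Symbol name you pick
    let action: () -> Void
    @Binding var state: PaintButtonState

    var body: some View {
        Button(action: action) {
            Image(systemName: systemImage)
                .font(.largeTitle)
                .scaleEffect(CGFloat(state.scale))
                // Repeat the zoom while animating; ease back to 1.0 when stopped.
                .animation(
                    state.isAnimating
                        ? Animation.easeInOut(duration: 0.6).repeatForever(autoreverses: true)
                        : Animation.default,
                    value: state.isAnimating
                )
        }
    }
}
#endif
```

A parent view would hold `@State private var paintState = PaintButtonState()`, pass `$paintState` to the button, and call `paintState.startAnimating()` when generation begins and `paintState.stopAnimating()` when it ends.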

At this point I was blown away by what ChatGPT could do. I could have written that code myself, but it would have taken me at least ten minutes; ChatGPT generated the complete code in a few seconds. It felt like a huge amount of my time had been given back. More importantly, the code it generated was essentially correct, and its naming followed conventions well.

If ChatGPT could handle UI and animation, could it handle the logic too? In that spirit, I also described the more complicated On-Demand Resources part to ChatGPT: the logic for loading resources only when they are needed, and storing them on disk once they have been downloaded.
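As a rough illustration of the storage half of that logic, here is a minimal, platform-neutral sketch, assuming the model is a single file or folder that can be moved into place. `ensureModelOnDisk(named:in:download:)` is a hypothetical helper, not an Apple API: it reuses a model already stored on disk and only calls the injected `download` closure when nothing is there. Where the bytes come from (for example Apple's On-Demand Resources via `NSBundleResourceRequest`, or a plain download) is left to the caller; that wiring is an assumption of this sketch and is not shown.

```swift
import Foundation

/// Hypothetical helper (not an Apple API): returns the on-disk location of a
/// model, calling `download` only if the model is not already stored.
/// `download` is assumed to return a temporary URL that may be moved.
func ensureModelOnDisk(
    named name: String,
    in directory: URL,
    fileManager: FileManager = .default,
    download: () throws -> URL
) throws -> URL {
    let destination = directory.appendingPathComponent(name)

    // Stored by an earlier run: reuse it and skip the download entirely.
    if fileManager.fileExists(atPath: destination.path) {
        return destination
    }

    // Make sure the storage folder exists before moving anything into it.
    try fileManager.createDirectory(at: directory, withIntermediateDirectories: true)

    // Fetch once, then move (not copy) so the data is not kept twice.
    let temporaryURL = try download()
    try fileManager.moveItem(at: temporaryURL, to: destination)
    return destination
}
```

Injecting `download` as a closure keeps the "is it already on disk?" decision separate from how the model is fetched, so the same check works whether the data comes from On-Demand Resources or elsewhere.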

During this process, my main role was debugging in Xcode and wiring the modules together. In terms of workload, ChatGPT and I split the work roughly half and half.

The finished app, completed in two days, is shown below. Watch the animation below for five seconds and you will see the whole process: generating a picture from text, the brush animation while painting, style transfer, and export.

Of these, the countdown during image generation was written by ChatGPT, as was the model storage logic; the machine learning style switching uses a model I trained myself; and sharing and saving were written by me, because they rely on 2022 APIs that ChatGPT did not yet know about.
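For a sense of what the countdown involves, here is a minimal sketch of its arithmetic, not ChatGPT's actual code. It assumes the total generation time is estimated up front, for example from the per-device timing table mentioned earlier; `GenerationCountdown` and its methods are hypothetical names.

```swift
import Foundation

/// Hypothetical countdown model for the generation screen. It only does the
/// arithmetic; some timer or view refresh is assumed to call it periodically.
struct GenerationCountdown {
    let estimatedDuration: TimeInterval   // assumed estimate for this device
    let startDate: Date

    /// Seconds elapsed since generation started, never negative.
    func elapsed(at now: Date) -> TimeInterval {
        max(0, now.timeIntervalSince(startDate))
    }

    /// Seconds left, clamped at zero once the estimate has passed.
    func remaining(at now: Date) -> TimeInterval {
        max(0, estimatedDuration - elapsed(at: now))
    }

    /// Fraction complete in 0...1, e.g. for a ProgressView.
    func progress(at now: Date) -> Double {
        guard estimatedDuration > 0 else { return 1 }
        return min(1, elapsed(at: now) / estimatedDuration)
    }

    /// Whole seconds to display, rounded up so "0" appears only at the end.
    func displaySeconds(at now: Date) -> Int {
        Int(remaining(at: now).rounded(.up))
    }
}
```

Taking `now` as a parameter keeps the type free of timers and easy to check. In SwiftUI, a view could re-read `displaySeconds(at:)` on each tick of something like `TimelineView(.periodic(from:by:))`; that wiring is not shown.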

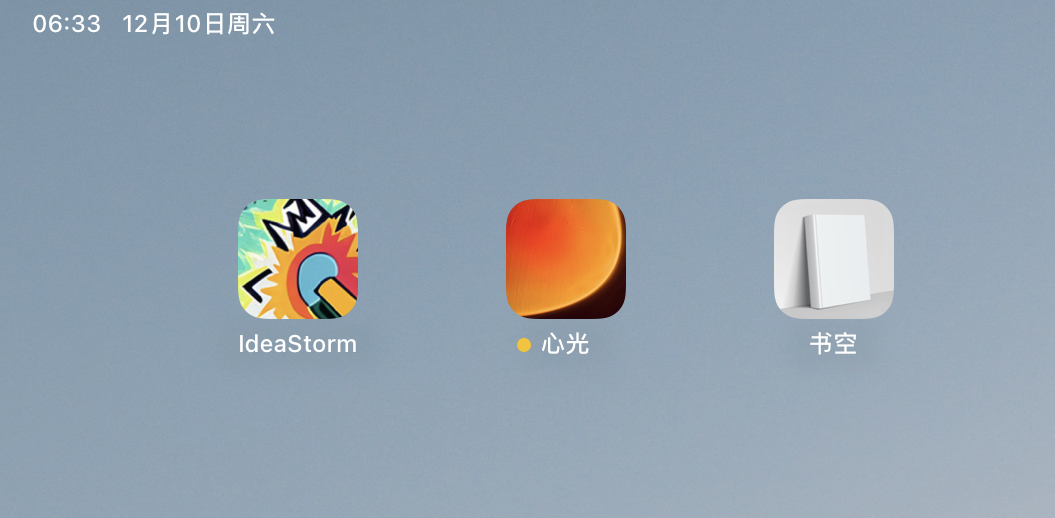

At this point, all that remained was a name and an icon. I had always wanted a brainstorming app that turns wild ideas into pictures, so I called it IdeaStorm: a storm of ideas. Since the app was built together with ChatGPT and is itself a painting AI built on Stable Diffusion, and in keeping with the principle of handing hard problems to AI, I had the app draw its own icon.

Below, on the far left, is the icon that IdeaStorm, an experiment in using AI to build AI, drew for itself.

Summary

Can AI work for us? In other words, can AI do our jobs? In this experiment, I did not have to cut any part of my original plan just because IdeaStorm was built together with AI. Going from first exploration to a working app in two days, my answer is yes.

Some people have said that today's hardware is more powerful than we need. In this experience, that surplus was quickly eaten up by demand. For example, while working with ChatGPT I developed on an M2 machine and tested on an M1. These should be good computers, yet to my surprise performance was a constant bottleneck: I was often waiting for the machines to finish training models, running model inference, or compiling the project.

It turned out that the hardware hadn't gotten slower; with AI's help, I had gotten faster. Milestones that used to take dozens of minutes to reach now arrive in an instant. It truly feels like productivity being unleashed.

(Created by Ao Runju with IdeaStorm)

As stated at the beginning of the article:

People thought AI was a lighthouse in the distance, and the point was the distance: very, very far away. In the blink of an eye, it turned out that humans were the ones behind. Looking at AI again, they were shocked to find it already out of reach.

After trying it myself, this is exactly how I feel, and it is the highest praise I can give ChatGPT for those two days of development.