Y. Liang, Y. Li and B. -S. Shin, “Auditable Federated Learning With Byzantine Robustness,” in IEEE Transactions on Computational Social Systems, doi: 10.1109/TCSS.2023.3266019. Free download: https://download.csdn.net/download/liangyihuai/87727720

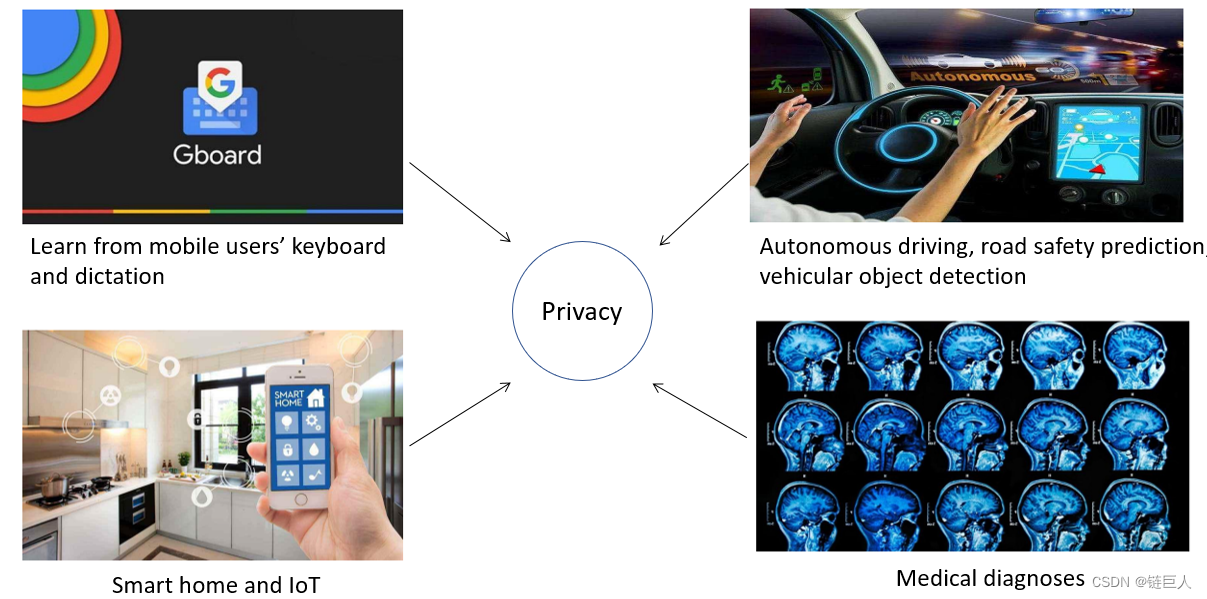

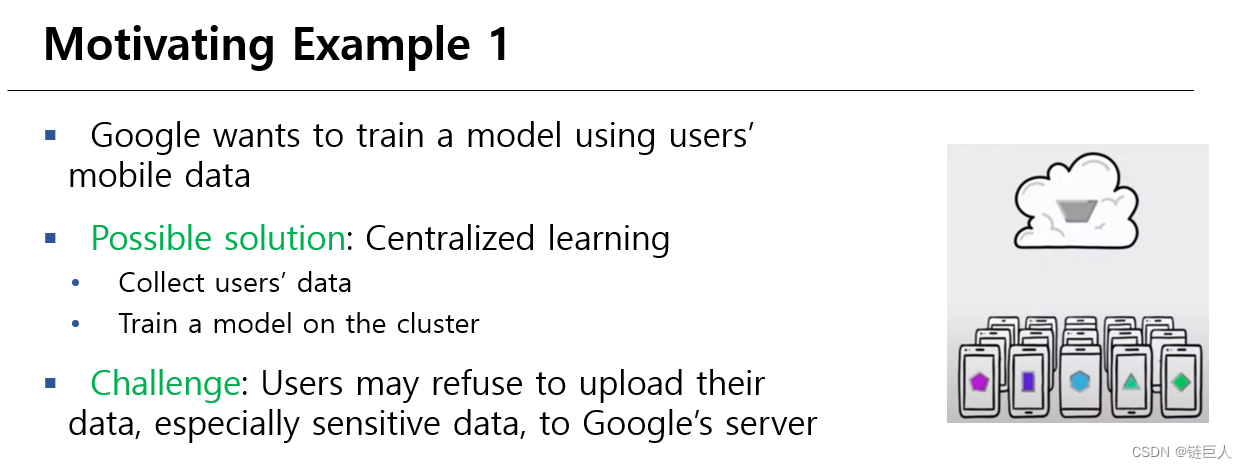

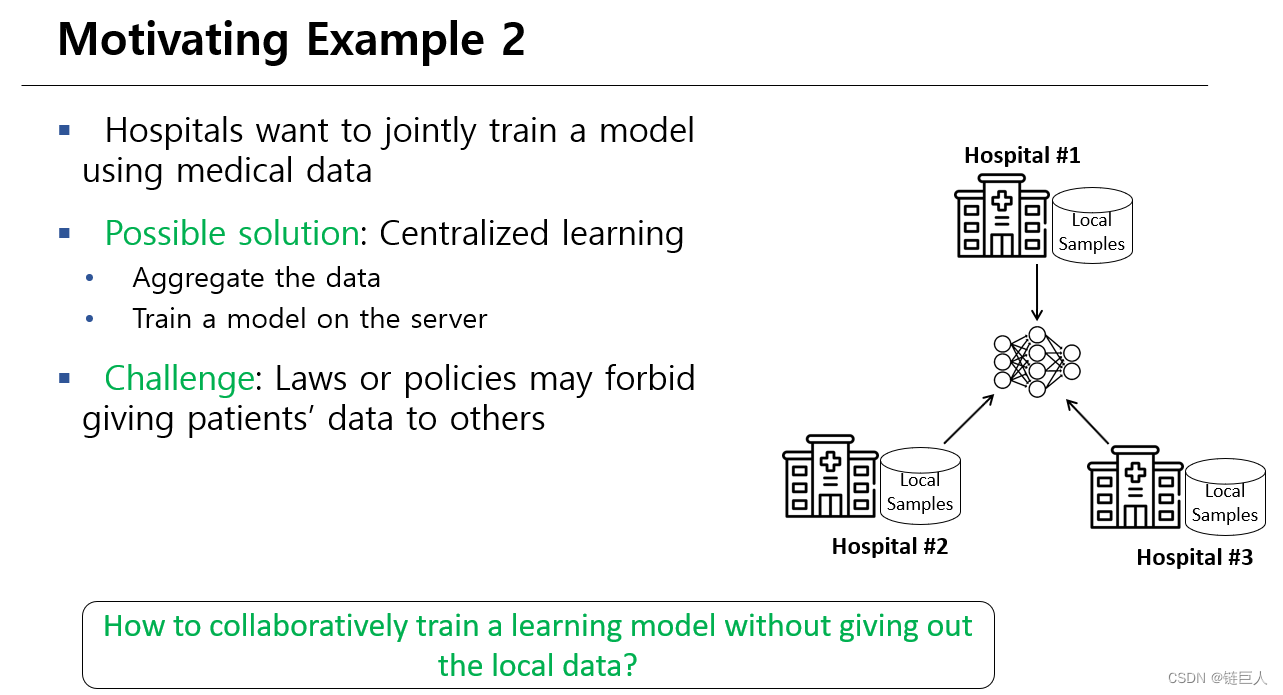

Machine learning (ML) has brought disruptive innovation to many fields, such as medical diagnostics. A key enabler of ML is large amounts of training data, yet existing data (e.g., medical data) is underused because of data silos and privacy concerns. The following two figures illustrate applications hampered by such data silos (data islands).

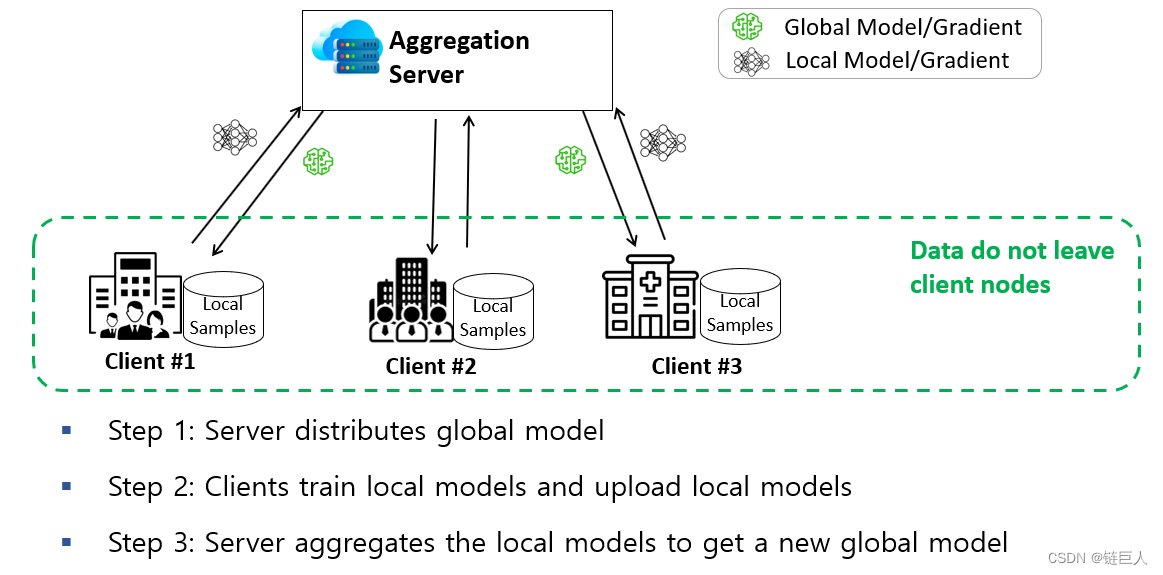

Federated learning (FL) is a promising distributed learning paradigm for solving this problem. It trains a machine learning model jointly across multiple parties without requiring any client to upload its raw data to the server or to other parties; clients share model updates instead.
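A minimal sketch of the server side of plain FL, assuming the FedAvg rule of weighting each client's parameter vector by its share of the total training samples (FedAvg is also the accuracy baseline the paper compares against). The function name `fedavg` and the sample data are hypothetical, and there is no encryption or poisoning defense here; this is only the baseline that the proposed scheme builds on.

```python
from typing import List, Sequence


def fedavg(client_updates: Sequence[Sequence[float]],
           num_samples: Sequence[int]) -> List[float]:
    """FedAvg-style aggregation: average client parameter vectors,
    weighting each client by its number of local training samples."""
    if not client_updates or len(client_updates) != len(num_samples):
        raise ValueError("need at least one client and one sample count per client")
    total = sum(num_samples)
    dim = len(client_updates[0])
    aggregate = [0.0] * dim
    for update, n in zip(client_updates, num_samples):
        if len(update) != dim:
            raise ValueError("all client updates must have the same length")
        weight = n / total  # this client's share of all training samples
        for j, value in enumerate(update):
            aggregate[j] += weight * value
    return aggregate


# Three hypothetical clients: raw data stays local, only these vectors are sent.
updates = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
samples = [10, 30, 60]
print(fedavg(updates, samples))
```

Weighting by sample count keeps clients with more data from being diluted by clients with very little; with equal sample counts the rule reduces to a plain mean.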

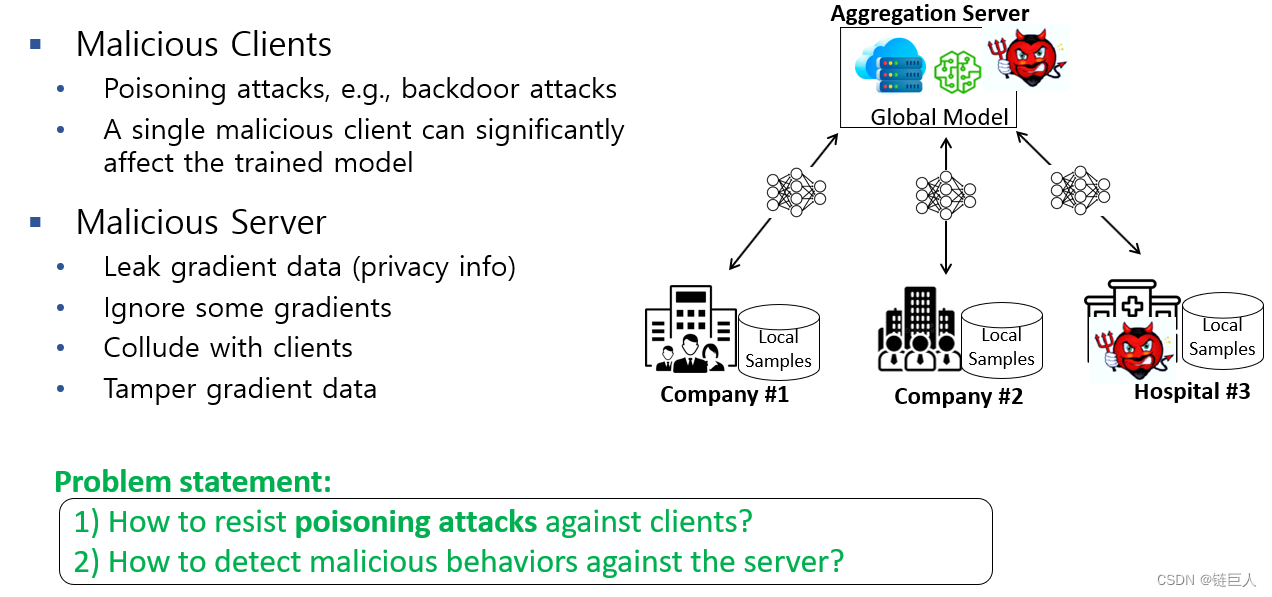

However, existing FL methods are vulnerable to poisoning attacks and to privacy leakage caused by malicious aggregators or clients, as shown in the figure below.

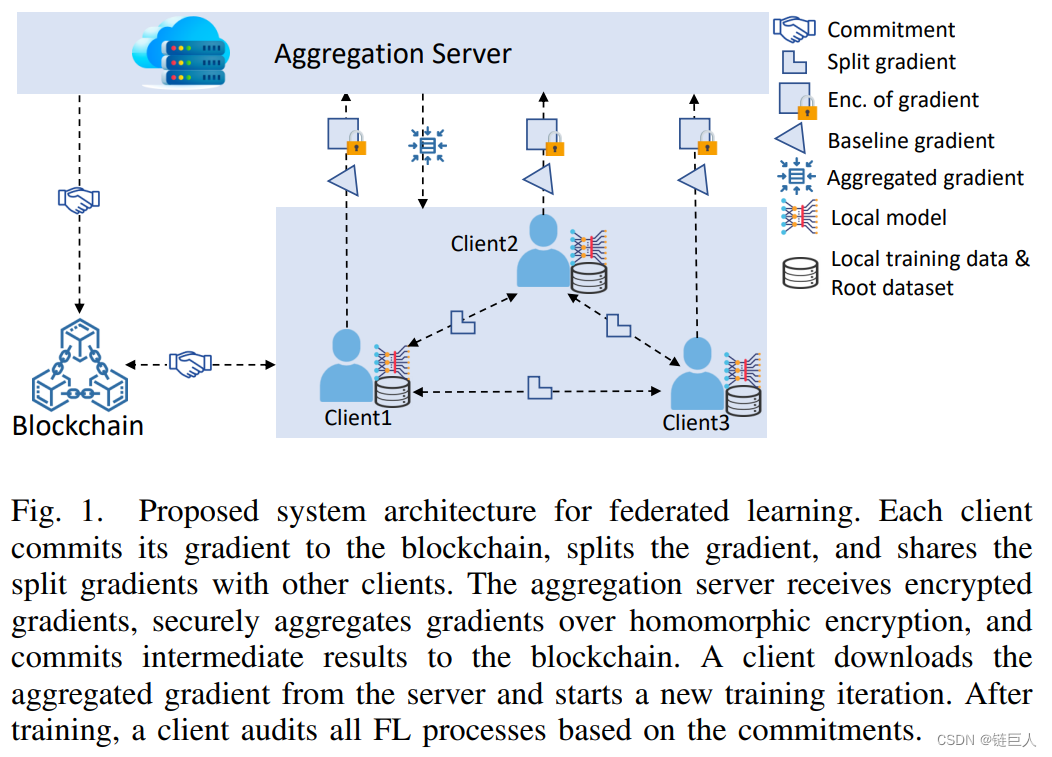

This paper proposes a Byzantine-robust, auditable FL scheme that defends against both malicious aggregators and malicious clients: the aggregator is malicious but available, and clients may launch poisoning attacks.

The paper targets the cross-silo FL setting (collaboration among multiple companies and organizations) and addresses the following problems:

- Privacy protection: gradients are protected with homomorphic encryption, and privacy cannot be broken even if clients collude with the aggregator.

- Byzantine robustness: the scheme defends against poisoning attacks while achieving model accuracy close to that of FedAvg.

- Auditability: any participant can audit whether the other participants (clients or the aggregator) behaved honestly, e.g., whether data has been tampered with or whether gradients have been aggregated correctly.
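This summary does not spell out the paper's encryption construction, so the following is a hypothetical stand-in, not the authors' method: exponential ElGamal, which is additively homomorphic, with a joint public key assembled from per-client secret key shares. It illustrates two of the properties listed above: the aggregator can sum encrypted gradients without seeing them, and no single party, the aggregator included, can decrypt on its own. The group parameters are toy values chosen for readability and are NOT secure, and gradients are assumed to be quantized to small non-negative integers so that the final discrete logarithm can be brute-forced. All function names are hypothetical.

```python
import secrets

# Toy parameters (NOT secure): P = 2Q + 1 is a safe prime and G = 4 generates
# the subgroup of prime order Q. Real systems use 2048+-bit groups or curves.
P, Q, G = 2039, 1019, 4


def keygen():
    """Each client draws its own secret key share and publishes g^x."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)


def joint_public_key(public_shares):
    """Joint public key = product of all per-client public keys."""
    pk = 1
    for y in public_shares:
        pk = pk * y % P
    return pk


def encrypt(pk, m):
    """Exponential ElGamal: Enc(m) = (g^r, g^m * pk^r)."""
    r = secrets.randbelow(Q - 1) + 1
    return pow(G, r, P), pow(G, m, P) * pow(pk, r, P) % P


def add(c1, c2):
    """Component-wise product of ciphertexts encrypts the sum of plaintexts."""
    return c1[0] * c2[0] % P, c1[1] * c2[1] % P


def partial_decrypt(x, c):
    """A client's share of decryption: (g^r)^x, computed without revealing x."""
    return pow(c[0], x, P)


def combine(c, partials, max_value):
    """Divide out every partial decryption to get g^m, then search small m."""
    denom = 1
    for d in partials:
        denom = denom * d % P
    gm = c[1] * pow(denom, -1, P) % P  # modular inverse needs Python 3.8+
    for m in range(max_value + 1):
        if pow(G, m, P) == gm:
            return m
    raise ValueError("plaintext outside the searchable range")


# Three hypothetical clients with quantized gradient vectors.
keys = [keygen() for _ in range(3)]
pk = joint_public_key([y for _, y in keys])
gradients = [[3, 0, 7], [1, 5, 2], [4, 4, 1]]
ciphertexts = [[encrypt(pk, v) for v in grad] for grad in gradients]

# The aggregator combines ciphertexts coordinate-wise, never seeing plaintext.
aggregate = ciphertexts[0]
for ct in ciphertexts[1:]:
    aggregate = [add(a, b) for a, b in zip(aggregate, ct)]

# Decrypting the aggregate needs a partial decryption from every key share.
result = [combine(c, [partial_decrypt(x, c) for x, _ in keys], max_value=100)
          for c in aggregate]
print(result)  # [8, 9, 10]
```

In this simplified form every client must contribute a partial decryption, so a single dropout blocks decryption; tolerating dropout would require something like a threshold variant, and the paper's own dropout optimization is not reproduced here.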

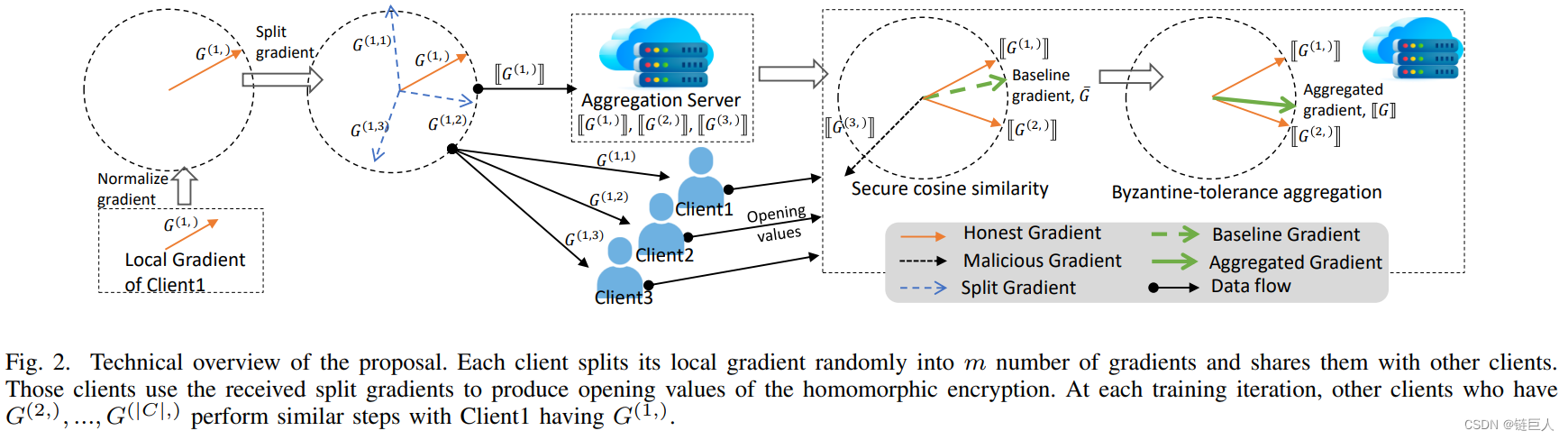

First, the Pedersen commitment scheme (PCS) is applied together with homomorphic encryption to preserve privacy and to commit to the FL process for auditability. Auditability lets clients verify the correctness and consistency of the entire FL process and identify misbehaving parties. Second, an effective divide-and-conquer technique is designed on top of PCS, allowing parties to cooperate to aggregate gradients securely while resisting poisoning attacks. With this technique, clients do not share a common key; instead, they cooperate to decrypt ciphertexts, so each client's privacy is preserved even if other clients are compromised by an adversary. The technique is further optimized to tolerate client dropout. The paper also gives a formal analysis of privacy, efficiency, and auditability against malicious participants. Extensive experiments on various benchmark datasets show that the proposed scheme is robust and achieves high model accuracy under poisoning attacks.
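To illustrate how a homomorphic commitment supports auditing, here is a minimal Pedersen commitment sketch. Because `C(m, r) = g^m * h^r mod p` and the product of commitments equals `C(Σm, Σr)`, anyone holding the clients' published commitments can check an aggregator's claimed sum. The parameters are toy values (NOT secure), `H` is hand-picked even though a real setup must ensure nobody knows its discrete logarithm to base `G`, and the sketch assumes the auditor receives the claimed sum together with the sum of blinding factors; how the paper distributes these openings is not covered in this summary. Names such as `audit_aggregate` are hypothetical.

```python
import secrets

# Toy parameters (NOT secure): G and H both generate the order-Q subgroup
# of Z_P^*, where P = 2Q + 1 is a safe prime.
P, Q, G, H = 2039, 1019, 4, 9


def commit(m, r):
    """Pedersen commitment C(m, r) = g^m * h^r mod p (exponents reduced mod Q)."""
    return pow(G, m % Q, P) * pow(H, r % Q, P) % P


def audit_aggregate(commitments, claimed_sum, claimed_randomness):
    """Check a claimed aggregate against the product of published commitments.

    Homomorphism: prod C(m_i, r_i) = C(sum m_i, sum r_i), so a tampered
    sum does not match the product of the clients' commitments.
    """
    product = 1
    for c in commitments:
        product = product * c % P
    return product == commit(claimed_sum, claimed_randomness)


# Hypothetical clients commit to their values with random blinding factors.
values = [3, 1, 4]
blinds = [secrets.randbelow(Q) for _ in values]
published = [commit(m, r) for m, r in zip(values, blinds)]

honest = audit_aggregate(published, sum(values), sum(blinds))
tampered = audit_aggregate(published, sum(values) + 1, sum(blinds))
print(honest, tampered)  # True False
```

The random blinding factor hides each client's individual value, while binding (under the discrete-logarithm assumption) is what makes a tampered sum fail the check.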

For more details, see the full paper (free download): https://download.csdn.net/download/liangyihuai/87727720

Thanks!